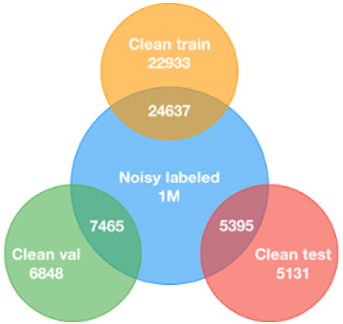

This study tackles the problem of noisy labels in training datasets in order to improve model performance. We approach it from the perspective of semi-supervised learning, training on a small amount of cleanly annotated data combined with a large amount of mislabeled data. The experiments use the Clothing1M dataset. The clean data are treated as the labeled set, and the remaining noisy data are treated as unlabeled. An initial model is first trained on the labeled set and then used to extract features from the unlabeled set. Feature matching and clustering then yield a “prototype” for each category. Building on this, we propose a dual screening scheme that takes both the model’s predictions and the prototype-based predictions into account, reducing the impact of noisy data. The clean data retained after screening and the remaining noisy-label data are then trained with MixMatch to further improve the robustness of the model. Experimental results show that the proposed method improves classification accuracy by 3% and outperforms the state-of-the-art method by 1%. In summary, it achieves (1) reduced labeling cost, (2) mitigation of noisy data through the dual screening scheme, and (3) improved performance through semi-supervised learning.
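
The dual screening step can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. Prototypes are taken as the normalized mean feature of each class in the clean labeled set, a simple stand-in for the feature matching and clustering described above. A noisy sample is kept as "clean" only when both the model's prediction and the nearest-prototype prediction agree with its given label. The functions `class_prototypes`, `prototype_predict`, and `dual_screen` are hypothetical names.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def l2_normalize(v: Vector) -> List[float]:
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def class_prototypes(features: Sequence[Vector], labels: Sequence[int]) -> Dict[int, List[float]]:
    """Hypothetical prototype step: sum unit-normalized features per class, then renormalize."""
    sums: Dict[int, List[float]] = {}
    for feat, label in zip(features, labels):
        unit = l2_normalize(feat)
        acc = sums.setdefault(label, [0.0] * len(unit))
        for k, x in enumerate(unit):
            acc[k] += x
    return {label: l2_normalize(acc) for label, acc in sums.items()}


def prototype_predict(feature: Vector, prototypes: Dict[int, List[float]]) -> int:
    """Return the class whose prototype has the highest cosine similarity to the feature."""
    unit = l2_normalize(feature)
    return max(prototypes, key=lambda c: sum(a * b for a, b in zip(unit, prototypes[c])))


def dual_screen(
    features: Sequence[Vector],
    given_labels: Sequence[int],
    model_preds: Sequence[int],
    prototypes: Dict[int, List[float]],
) -> Tuple[List[int], List[int]]:
    """Assumed rule: keep a sample only if the model AND the prototypes both agree with its label."""
    clean, noisy = [], []
    for i, (feat, label, pred) in enumerate(zip(features, given_labels, model_preds)):
        if pred == label and prototype_predict(feat, prototypes) == label:
            clean.append(i)
        else:
            noisy.append(i)
    return clean, noisy


# Toy example with 2-D "features" from a hypothetical trained model.
protos = class_prototypes([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]], [0, 0, 1, 1])
clean_idx, noisy_idx = dual_screen(
    features=[[1.0, 0.05], [0.05, 1.0], [0.95, 0.1], [0.1, 0.95]],
    given_labels=[0, 0, 1, 1],
    model_preds=[0, 1, 0, 1],
    prototypes=protos,
)
print(clean_idx, noisy_idx)  # [0, 3] [1, 2]
```

Under this sketch, the indices in `clean_idx` would form the screened labeled set passed to MixMatch, and the rest would be treated as unlabeled. Requiring agreement from two independent predictors favors precision of the clean set over recall.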

After image stitching, problems such as large stitching gaps, stitching errors, and image distortion often appear and degrade the displayed image. In this study, the gray-level co-occurrence matrix (GLCM) is used to extract feature points from the image, and image registration and image fusion techniques are used to eliminate the stitching seam. The image fusion algorithm evaluates image quality using image variance, correlation, and the Tenengrad gradient as indicators. Experiments show that the proposed algorithm eliminates seams more effectively than the compared algorithms and produces clearer stitched images.
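
The three quality indicators named above can be computed as in the following sketch. It assumes grayscale images stored as nested lists of floats. Tenengrad is taken here as the mean squared Sobel gradient magnitude over interior pixels; some variants sum instead, or apply a threshold. Correlation is the Pearson coefficient between two equally sized images. How the paper weighs or combines these indicators is not reproduced.

```python
from typing import List

Image = List[List[float]]

SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))


def variance(img: Image) -> float:
    """Population variance of all pixel intensities (higher usually means more contrast)."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    return sum((p - mean) ** 2 for p in pixels) / len(pixels)


def correlation(a: Image, b: Image) -> float:
    """Pearson correlation between two images of the same size; 0.0 if either is constant."""
    xs = [p for row in a for p in row]
    ys = [p for row in b for p in row]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    if sx == 0 or sy == 0:
        return 0.0
    return cov / (sx * sy)


def tenengrad(img: Image) -> float:
    """Mean of Gx^2 + Gy^2 (Sobel responses) over interior pixels; higher means sharper."""
    h, w = len(img), len(img[0])
    total, count = 0.0, 0
    for i in range(1, h - 1):
        for j in range(1, w - 1):
            gx = sum(SOBEL_X[a][b] * img[i + a - 1][j + b - 1] for a in range(3) for b in range(3))
            gy = sum(SOBEL_Y[a][b] * img[i + a - 1][j + b - 1] for a in range(3) for b in range(3))
            total += gx * gx + gy * gy
            count += 1
    return total / count if count else 0.0


ramp = [[float(j) for j in range(5)] for _ in range(5)]  # intensity increases left to right
print(variance(ramp), tenengrad(ramp))  # 2.0 64.0
```

On the horizontal ramp every interior Sobel x-response is 8 and every y-response is 0, which gives the Tenengrad value of 64.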

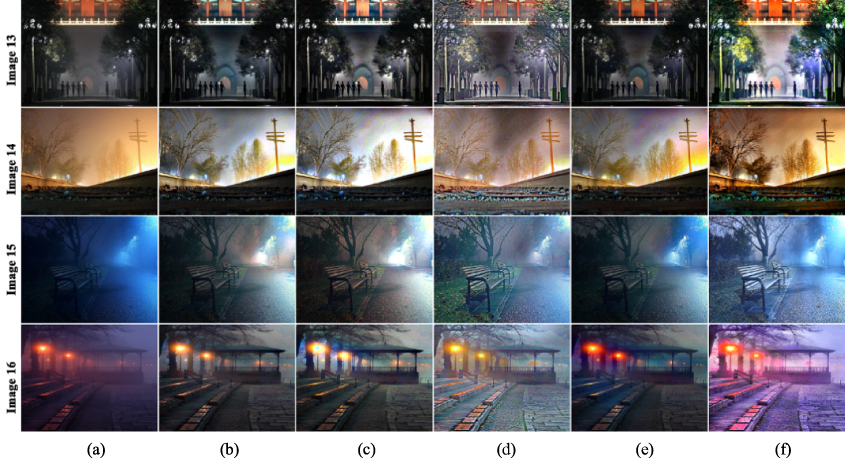

Image haze removal is an essential preprocessing step in machine vision systems. Because daytime and nighttime hazy images are formed by different imaging mechanisms (for example, different light sources), it is difficult for a single method to dehaze both. We therefore propose a daytime dehazing method based on a physical model and image enhancement, and a nighttime dehazing method based on image enhancement. For daytime hazy images, we dehaze with an improved dark channel prior and then enhance the result with an improved sparrow search algorithm. For nighttime hazy images, we dehaze with an improved multi-scale Retinex method and then post-process with the improved sparrow search algorithm to improve visual quality and enhance details. Quantitative and qualitative experimental results show that our method achieves excellent sharpening effects on both daytime and nighttime hazy images.
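
For reference, the standard (unimproved) dark channel prior pipeline can be sketched as follows. The paper's improvements and the sparrow search enhancement are not reproduced. The sketch assumes RGB pixels with values in [0, 1] and the haze model I = J·t + A·(1 − t). It also uses a simplified atmospheric-light estimate, the color of the pixel with the largest dark-channel value, instead of the usual brightest-percentile rule. The parameter names `radius`, `omega`, and `t0` are illustrative choices.

```python
from typing import List, Optional, Tuple

RGB = Tuple[float, float, float]
Image = List[List[RGB]]
Gray = List[List[float]]


def dark_channel(img: Image, radius: int = 1) -> Gray:
    """Minimum over color channels, then over a (2*radius+1)^2 window clipped at the borders."""
    h, w = len(img), len(img[0])
    per_pixel = [[min(px) for px in row] for row in img]
    return [
        [
            min(
                per_pixel[a][b]
                for a in range(max(0, i - radius), min(h, i + radius + 1))
                for b in range(max(0, j - radius), min(w, j + radius + 1))
            )
            for j in range(w)
        ]
        for i in range(h)
    ]


def estimate_airlight(img: Image, dark: Gray) -> RGB:
    """Simplified estimate: the color of the pixel with the largest dark-channel value."""
    h, w = len(img), len(img[0])
    i, j = max(((i, j) for i in range(h) for j in range(w)), key=lambda ij: dark[ij[0]][ij[1]])
    return img[i][j]


def dehaze(img: Image, radius: int = 1, omega: float = 0.95, t0: float = 0.1,
           airlight: Optional[RGB] = None) -> Image:
    """Invert I = J*t + A*(1 - t) with a transmission map estimated from the dark channel."""
    if airlight is None:
        airlight = estimate_airlight(img, dark_channel(img, radius))
    normalized = [[tuple(c / a for c, a in zip(px, airlight)) for px in row] for row in img]
    transmission = [[1.0 - omega * d for d in row] for row in dark_channel(normalized, radius)]
    return [
        [
            tuple((c - a) / max(transmission[i][j], t0) + a for c, a in zip(img[i][j], airlight))
            for j in range(len(img[0]))
        ]
        for i in range(len(img))
    ]


# Synthetic check: every pixel of J has a zero channel, so its dark channel is 0.
J = [[(0.2, 0.0, 0.7), (0.0, 0.5, 0.9)], [(0.3, 0.6, 0.0), (0.8, 0.0, 0.1)]]
hazy = [[tuple(c * 0.5 + 1.0 * 0.5 for c in px) for px in row] for row in J]  # t = 0.5, A = 1
restored = dehaze(hazy, omega=1.0, airlight=(1.0, 1.0, 1.0))
```

With `omega=1.0` and the true airlight supplied, the estimated transmission equals the synthetic value of 0.5, so `restored` matches `J` up to rounding. In practice, `omega < 1` keeps a little haze for depth perception, and `t0` prevents division by near-zero transmission.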

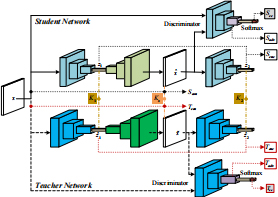

One-class anomaly detection aims to identify anomalous instances whose distribution differs from that of the expected normal instances. For this task, an Encoder-Decoder-Encoder Generative Adversarial Network (EDE-GAN) from previous research has shown state-of-the-art performance. However, little research has explored why this structure performs so well or how hyperparameter settings affect model performance. In this paper, we therefore first construct two GAN architectures to study these issues. We conclude that three factors play a key role: (1) EDE-GAN uses the distance between two latent vectors as the anomaly score, unlike previous methods that use the reconstruction error between images. (2) Unlike other GAN architectures, EDE-GAN always obtains its best results when the batch size is set to 1. (3) There is also evidence that constraining the latent space benefits model training. Furthermore, to learn a compact and fast model, we propose Progressive Knowledge Distillation with GANs (P-KDGAN), which connects two standard GANs through a designed distillation loss. Two-step progressive learning continuously improves the performance of the student GAN and outperforms the single-step approach. Our experimental results on the CIFAR-10, MNIST, and FMNIST datasets show that P-KDGAN improves the performance of the student GAN by 2.44%, 1.77%, and 1.73% while compressing computation at ratios of 24.45:1, 311.11:1, and 700:1, respectively.
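
The latent-space anomaly score in factor (1) can be illustrated as follows. The sketch assumes that `z` is the latent code produced by the first encoder for an input image and `z_hat` is the code produced by the second encoder for its reconstruction. The choice of the L2 distance and the min-max normalization over a test set are both assumptions (an L1 distance is also common), and no GAN training is shown.

```python
import math
from typing import List, Sequence


def latent_anomaly_score(z: Sequence[float], z_hat: Sequence[float]) -> float:
    """Euclidean distance between the two latent vectors of one sample."""
    if len(z) != len(z_hat):
        raise ValueError("latent vectors must have the same length")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(z, z_hat)))


def minmax_normalize(scores: Sequence[float]) -> List[float]:
    """Rescale scores to [0, 1] so one threshold can be applied across a test set."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return [0.0 for _ in scores]
    return [(s - lo) / (hi - lo) for s in scores]


# Hypothetical latent pairs: a small distance suggests a normal sample, a large one an anomaly.
pairs = [([0.0, 0.0], [0.0, 1.0]), ([1.0, 1.0], [1.0, 1.0]), ([0.0, 0.0], [3.0, 4.0])]
scores = [latent_anomaly_score(z, z_hat) for z, z_hat in pairs]
print(scores)                    # [1.0, 0.0, 5.0]
print(minmax_normalize(scores))  # [0.2, 0.0, 1.0]
```

Scoring in latent space compares two compact codes rather than two full images, which is the contrast the abstract draws with reconstruction-error methods.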

During emergencies such as fires, smoke, or active-shooter events, there is a need to address vulnerabilities and assess evacuation plans. Recent improvements in smartphone technology create an opportunity for geo-visual environments that offer experiential learning by providing spatial analysis and visual communication of emergency-related information to the user. This paper presents the development and evaluation of a mobile augmented reality application (MARA) designed specifically for spatial analysis, situational awareness, and visual communication. MARA uses existing permanent features in the building, such as room numbers and signage, as markers to display the building’s floor plan and show navigational directions to the exits. By visualizing integrated geographic information systems and analyzing data in real time, MARA provides the user’s current location, the number of exits, and user-specific, personalized evacuation routes. The paper also describes a limited user study conducted to assess the usability and effectiveness of MARA using the widely recognized System Usability Scale (SUS). The results show the effectiveness of our situational awareness-based MARA in multilevel buildings for evacuation, educational, and navigational purposes.
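
The SUS framework mentioned above uses a fixed scoring rule, sketched below. Each of the ten items is answered on a scale from 1 to 5. Odd-numbered items contribute (response − 1), even-numbered items contribute (5 − response), and the sum is multiplied by 2.5 to give a score from 0 to 100. The function name `sus_score` is illustrative. The paper's per-participant responses are not reproduced, and the participants below are made up.

```python
from statistics import mean
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Standard System Usability Scale score (0 to 100) for one participant's ten answers."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("responses must be on a 1-5 scale")
    total = 0
    for item_number, r in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (r - 1) if item_number % 2 == 1 else (5 - r)
    return total * 2.5


# Two hypothetical participants: all-neutral answers, and the best possible answers.
participants = [[3] * 10, [5, 1] * 5]
per_participant = [sus_score(p) for p in participants]
print(per_participant, mean(per_participant))  # [50.0, 100.0] 75.0
```

A study-level result is usually reported as the mean of the per-participant scores, as in the last line.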

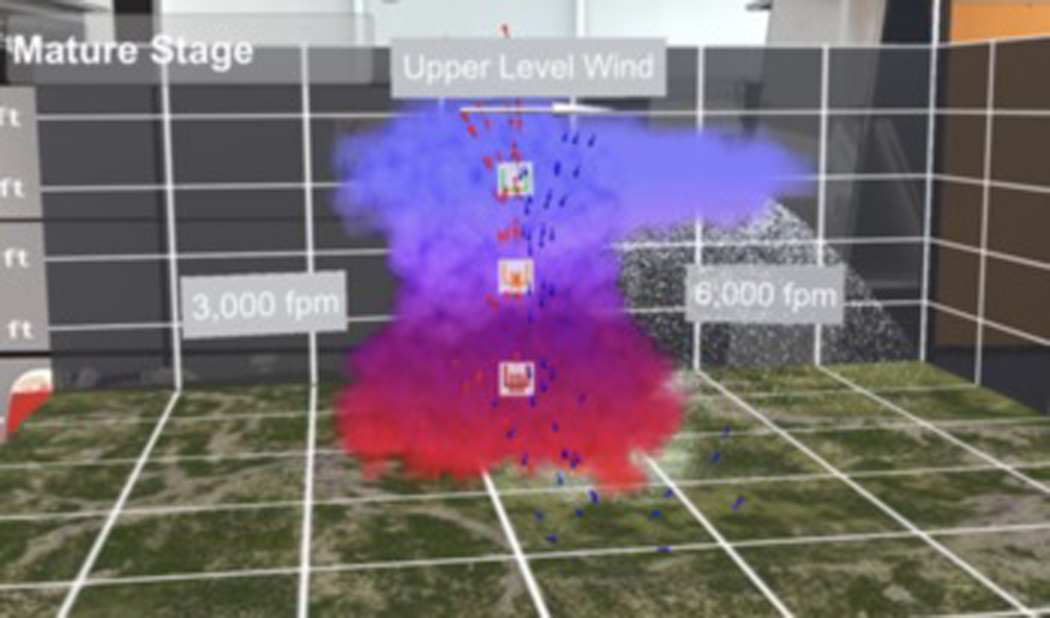

In 2021, there were 1,157 general aviation (GA) accidents, 210 of them fatal, making GA the deadliest civil aviation category. Research shows that these accidents are partly caused by ineffective weather theory training. Current classroom weather training relies on 2D materials that students often find difficult to map onto a real 3D environment. To address these issues, augmented reality (AR) was used to provide immersive 3D content on commodity devices. However, mobile devices have limitations in rendering, camera tracking, and screen size, which make mobile AR especially challenging for complex visualizations of weather phenomena. This paper presents research on how to address the technical challenges of developing and implementing a complex thunderstorm visualization in a marker-based mobile AR application. The development of the system and a technological evaluation of the application’s rendering and tracking performance across different devices are presented.

For objects that move translationally with fixed cameras, such as robots and cars, blur is often more pronounced for scene points closer to the camera. A depth-normalized least-squares objective function is proposed for the simultaneous recovery of shape and motion parameters from optical flow, together with an efficient iterative optimization algorithm. Simulations and experiments demonstrate that, for scenes with sufficient depth variation, our algorithm provides robust, statistically consistent estimates of shape and motion.
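
The following sketch makes the shape-and-motion coupling concrete. It uses the classic linear focus-of-expansion (FOE) estimate for a purely translating camera, not the paper's depth-normalized objective or its iterative optimizer. With focal length 1 and translation T = (Tx, Ty, Tz), Tz ≠ 0, the flow at image point (x, y) is u = (Tz/Z)(x − x0, y − y0), where (x0, y0) = (Tx/Tz, Ty/Tz) is the FOE and Z is depth. Each flow vector therefore constrains the FOE to lie on a line, which gives a 2×2 least-squares problem. Inverse depth then follows per pixel, up to the unknown scale Tz. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def estimate_foe(points: Sequence[Point], flows: Sequence[Point]) -> Point:
    """Least-squares FOE: each flow (ux, uy) at (x, y) requires uy*x0 - ux*y0 = uy*x - ux*y."""
    sxx = syy = sxy = rx = ry = 0.0
    for (x, y), (ux, uy) in zip(points, flows):
        b = uy * x - ux * y  # right-hand side of this pixel's line constraint
        sxx += ux * ux
        syy += uy * uy
        sxy += ux * uy
        rx += uy * b  # the constraint's row vector is (uy, -ux)
        ry += -ux * b
    # Normal equations [[syy, -sxy], [-sxy, sxx]] @ (x0, y0) = (rx, ry), solved in closed form.
    det = syy * sxx - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("flow vectors are (nearly) parallel; the FOE is not determined")
    x0 = (sxx * rx + sxy * ry) / det
    y0 = (sxy * rx + syy * ry) / det
    return x0, y0


def scaled_inverse_depth(points: Sequence[Point], flows: Sequence[Point], foe: Point) -> List[float]:
    """Per-pixel Tz / Z, from projecting each flow onto the ray pointing away from the FOE."""
    x0, y0 = foe
    out = []
    for (x, y), (ux, uy) in zip(points, flows):
        dx, dy = x - x0, y - y0
        out.append((ux * dx + uy * dy) / (dx * dx + dy * dy))
    return out


# Synthetic scene: FOE at (0.1, -0.05); closer points (larger Tz/Z) move faster in the image.
true_foe = (0.1, -0.05)
pts = [(x / 4, y / 4) for x in range(-2, 3) for y in range(-2, 3)]
true_inv_depth = [1.0 + 0.1 * k for k in range(len(pts))]
flow = [(s * (x - true_foe[0]), s * (y - true_foe[1])) for (x, y), s in zip(pts, true_inv_depth)]

foe = estimate_foe(pts, flow)
inv_depth = scaled_inverse_depth(pts, flow, foe)
```

On this noise-free data, both the FOE (that is, the translation direction) and the scaled inverse depths are recovered exactly up to rounding. With noisy flow, this linear estimate is known to be biased, which is one motivation for normalized objectives and iterative refinement of the kind the abstract describes.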

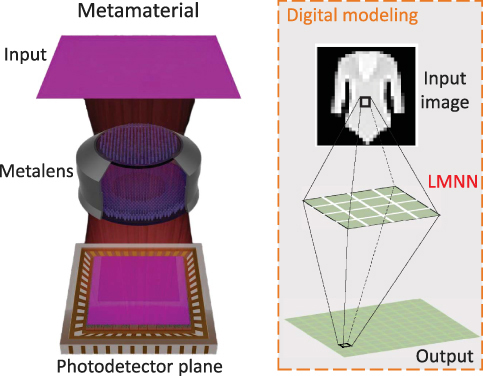

Recent deep neural networks (DNNs) are physically deployed on computational units (e.g., CPUs and GPUs). Such a design can lead to a heavy computational burden, significant latency, and intensive power consumption, which are critical limitations in applications such as the Internet of Things (IoT), edge computing, and drones. Recent advances in optical computational units (e.g., metamaterials) have shed light on energy-free and light-speed neural networks. However, the digital design of a metamaterial neural network (MNN) is fundamentally constrained by physical limitations, such as precision, noise, and bandwidth during fabrication. Moreover, the unique advantages of MNNs (e.g., light-speed computation) are not fully exploited by standard 3×3 convolution kernels. In this paper, we propose a novel large kernel metamaterial neural network (LMNN) that maximizes the digital capacity of the state-of-the-art (SOTA) MNN through model re-parametrization and network compression, while explicitly accounting for optical limitations. The new digital learning scheme maximizes the learning capacity of the MNN while modeling the physical restrictions of meta-optics. With the proposed LMNN, the computation cost of the convolutional front-end can be offloaded to fabricated optical hardware. Experimental results on two publicly available datasets demonstrate that the optimized hybrid design improves classification accuracy while reducing computational latency. The proposed LMNN is a promising step toward the ultimate goal of energy-free and light-speed AI.
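
Model re-parametrization for large kernels is often done by training a large kernel alongside a parallel small kernel. The small kernel is then folded into the large one, so that inference (here, the optical front-end) only needs a single kernel. The sketch below shows why the fold is exact. Convolution is linear, so zero-padding the 3×3 kernel to the large size and adding it gives identical outputs. It assumes stride 1, zero "same" padding, odd kernel sizes, and no batch normalization. This is a generic illustration of structural re-parametrization, not the LMNN's specific scheme, and the helper names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_same(x: Matrix, k: Matrix) -> Matrix:
    """Stride-1 2-D cross-correlation with zero padding; output has the same size as x."""
    h, w, r = len(x), len(x[0]), len(k) // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for a in range(len(k)):
                for b in range(len(k)):
                    ii, jj = i + a - r, j + b - r
                    if 0 <= ii < h and 0 <= jj < w:
                        acc += k[a][b] * x[ii][jj]
            out[i][j] = acc
    return out


def pad_kernel(k: Matrix, size: int) -> Matrix:
    """Center a small odd-sized kernel inside a size x size kernel of zeros."""
    off = (size - len(k)) // 2
    out = [[0.0] * size for _ in range(size)]
    for a, row in enumerate(k):
        for b, v in enumerate(row):
            out[a + off][b + off] = v
    return out


def merge_branches(large: Matrix, small: Matrix) -> Matrix:
    """Fold a parallel small-kernel branch into the large kernel (valid because conv is linear)."""
    padded = pad_kernel(small, len(large))
    return [[p + q for p, q in zip(r1, r2)] for r1, r2 in zip(large, padded)]


x = [[float((3 * i + 5 * j) % 7) for j in range(6)] for i in range(6)]
large = [[0.01 * (a * 5 + b) for b in range(5)] for a in range(5)]
small = [[1.0, 0.0, -1.0], [2.0, 0.0, -2.0], [1.0, 0.0, -1.0]]

two_branch = [
    [p + q for p, q in zip(r1, r2)]
    for r1, r2 in zip(conv2d_same(x, large), conv2d_same(x, small))
]
merged = conv2d_same(x, merge_branches(large, small))
```

`two_branch` and `merged` agree up to floating-point rounding. The trained two-branch model and the single merged kernel are therefore interchangeable at inference time.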

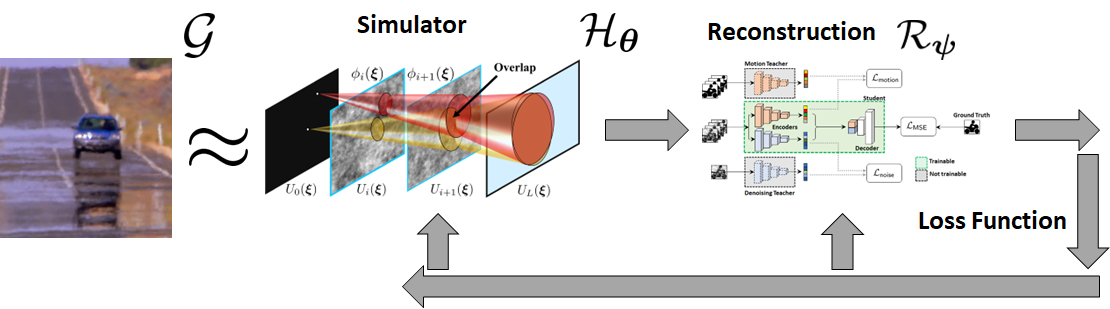

At the pinnacle of computational imaging is the co-optimization of camera and algorithm. This, however, is not the only form of computational imaging. In problems such as imaging through adverse weather, the bigger challenge is to accurately simulate the forward degradation process, so that we can synthesize data to train reconstruction models and/or integrate the forward model into the reconstruction algorithm. This article introduces the concept of computational image formation (CIF). In standard inverse problems, the goal is to recover the latent image x from the observation y = G(x). CIF instead shifts the focus to designing an approximate mapping Hθ such that Hθ ≈ G while still yielding a good image reconstruction. The word “computational” highlights the fact that image formation is now replaced by a numerical simulator. While matching Mother Nature remains an important goal, CIF pays even greater attention to strategically choosing Hθ so that reconstruction performance is maximized.
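
As a toy illustration of the first attribute (an Hθ accurate enough to mimic G), the sketch below treats the unknown forward process G as a fixed 3-tap blur. It calibrates a one-parameter simulator Hθ, the symmetric kernel (θ, 1 − 2θ, θ), by grid search over sample signals. Everything here is an assumption for illustration: the kernels, the signals, and the grid. A real CIF design would also weigh speed, well-posedness, differentiability, and downstream reconstruction quality, none of which this sketch models.

```python
from typing import Sequence, List


def blur_1d(signal: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """Same-length filtering with a 3-tap kernel and zero padding at both ends."""
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = i + k - 1  # kernel index 1 is the center tap
            if 0 <= j < n:
                acc += w * signal[j]
        out.append(acc)
    return out


def true_forward(signal: Sequence[float]) -> List[float]:
    """Stand-in for the physical process G (unknown in practice, observed only through data)."""
    return blur_1d(signal, (0.25, 0.5, 0.25))


def simulator(signal: Sequence[float], theta: float) -> List[float]:
    """Parametric approximation H_theta with a symmetric kernel (theta, 1 - 2*theta, theta)."""
    return blur_1d(signal, (theta, 1.0 - 2.0 * theta, theta))


def calibrate(signals: Sequence[Sequence[float]], thetas: Sequence[float]) -> float:
    """Pick the theta whose simulated outputs best match G in summed squared error."""
    def mismatch(theta: float) -> float:
        return sum(
            sum((a - b) ** 2 for a, b in zip(simulator(s, theta), true_forward(s)))
            for s in signals
        )
    return min(thetas, key=mismatch)


training_signals = [[0.0, 1.0, 0.0, 0.0, 2.0], [1.0, 1.0, 1.0, 1.0, 1.0], [3.0, 0.0, 1.0, 4.0, 1.0]]
theta_star = calibrate(training_signals, [k / 100 for k in range(51)])
print(theta_star)  # 0.25
```

In the spirit of CIF, the selection criterion inside `calibrate` could be replaced or augmented by a reconstruction-quality score, which is where the choice of Hθ departs from pure physical fidelity.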

The goal of this article is to conceptualize CIF by elaborating on its meaning and implications. The first part of the article discusses the four attributes of a CIF simulator: it should be accurate enough to mimic G, fast enough to be integrated into the reconstruction, yield a well-posed inverse problem when plugged into the reconstruction, and be differentiable to allow backpropagation. The second part is a detailed case study of imaging through atmospheric turbulence, in which a wide range of simulators, both old and new, are discussed. The third part collects other examples that fall into the category of CIF, including imaging through bad weather, dynamic vision sensors, and differentiable optics. Finally, thoughts about future directions and recommendations to the community are shared.

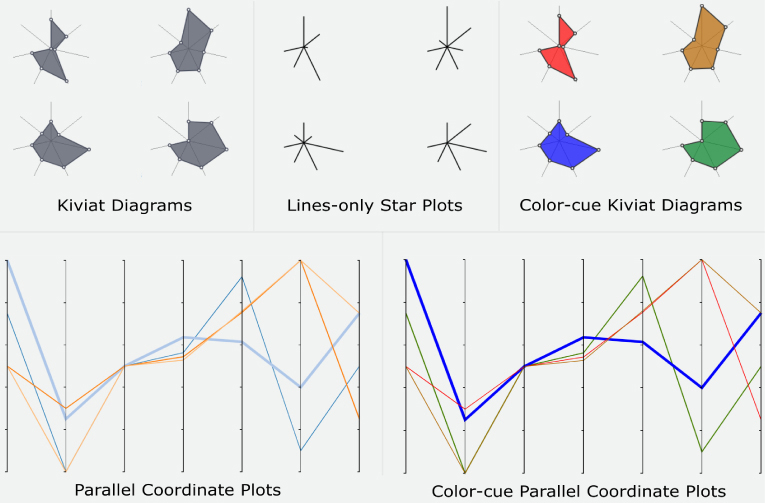

Similarity detection seeks to identify similar but distinct items in multivariate datasets. Often, similarity cannot be defined computationally, which creates a need for visual analysis, for example with ensemble, computational, patient cohort, or geospatial data. In this work, we empirically evaluate the effectiveness of common visual encodings for multivariate data in the context of visual similarity detection. We conducted a user study with 40 participants to measure similarity detection performance and response time at a moderate scale (16 items) and a large scale (36 items). Our analysis shows significant differences in performance between encodings, especially as the number of items increases. Surprisingly, we found that juxtaposed star plots outperformed superposed parallel coordinate plots. Furthermore, color cues significantly improved response time and attenuated error at larger scales. Contrary to existing guidelines, we found that filled star plots (Kiviats) outperformed other encodings in terms of scalability and error.
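
The star plot (Kiviat) encoding compared in the study maps each variable to a spoke at an evenly spaced angle, with distance from the center proportional to the normalized value. A filled star plot shades the resulting polygon. The sketch below computes the vertices and, via the shoelace formula, the filled area. It assumes values already normalized to [0, 1], with spokes starting on the positive x-axis and running counterclockwise (plotting tools often start at 12 o'clock instead). The function names are illustrative.

```python
import math
from typing import List, Sequence, Tuple


def star_plot_vertices(values: Sequence[float], radius: float = 1.0) -> List[Tuple[float, float]]:
    """One vertex per variable: angle 2*pi*k/n, distance radius * value from the center."""
    n = len(values)
    if n < 3:
        raise ValueError("a star plot needs at least three variables")
    if any(v < 0.0 or v > 1.0 for v in values):
        raise ValueError("values must be normalized to [0, 1]")
    return [
        (radius * v * math.cos(2 * math.pi * k / n), radius * v * math.sin(2 * math.pi * k / n))
        for k, v in enumerate(values)
    ]


def polygon_area(vertices: Sequence[Tuple[float, float]]) -> float:
    """Shoelace formula for the area of the (filled) star polygon."""
    n = len(vertices)
    twice_area = sum(
        vertices[i][0] * vertices[(i + 1) % n][1] - vertices[(i + 1) % n][0] * vertices[i][1]
        for i in range(n)
    )
    return abs(twice_area) / 2.0


item = [1.0, 0.5, 1.0, 0.5]  # one hypothetical item with four normalized variables
verts = star_plot_vertices(item)
print(round(polygon_area(verts), 6))  # 1.0
```

The filled area is one overall shape cue that viewers can compare across juxtaposed glyphs. Note that it depends on the order of the spokes, not only on the values.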