References

1

2

3BoydD. D.In-flight decision-making by general aviation pilots operating in areas of extreme thunderstormsAerosp. Med. Hum. Perform.2017Vol. 88ASMAAlexandria, VA106610721066–7210.3357/AMHP.4932.2017

4BoydD. D.A review of general aviation safety (1984–2017)Aerosp Med Hum Perform2017Vol. 88ASMAAlexandria, VA657664657–6410.3357/AMHP.4862.2017

5BlickensderferB.LanicciJ.GuinnT. A.ThomasR.ThroppJ. E.KingJ.Cruit,J.DeFilippisN.BerendschotK.McSorleyJ.KleberJ.Combined Report: Aviation Weather Knowledge Assessment & General Aviation (GA) Pilots’ Interpretation of Weather ProductsGeneral Aviation Weather Display Interpretation2019

6A.N.AdministrationR.Office of the Federal Register, “14 CFR 61.105 - Aeronautical knowledgeOffice of the Federal Register, National Archives and Records Administration

7Weather theoryPilot’s Handbook of Aeronautical Knowledge20161261–26

8OrtizY.BlickensderferB.KingJ.Assessment of general aviation cognitive weather tasks: Recommendations for autonomous learning and training in aviation weatherProc. Human Factors and Ergonomics Society Annual Meeting2017Vol. 61Sage PublicationsLos Angeles, CA186118651861–510.1177/1541931213601946

9LanicciJ.GuinnT.KingJ.BlickensderferB.ThomasR.OrtizY.2020A proposed taxonomy for general aviation pilot weather education and trainingJ. Aviation/Aerospace Education Res.2910.15394/jaaer.2020.1815

10WiegmannD. A.TalleurD. A.JohnsonC. M.Redesigning weather training and testing of general aviation pilots by applying traditional curriculum evaluation and advanced simulation-based methodsHuman Factors Division Institute of Aviation2008FAAWashington, DC

11GuinnT.RaderK.Disparities in weather education across professional flight baccalaureate degree programsCollegiate Aviation Review Int.2012Vol. 30UAAMillington, TN112311–2310.22488/okstate.18.100432

12

13RisukhinV. N.Integration of affordable information technology products into general aviation training and research16th AIAA Aviation Technology, Integration, and Operations Conf.2016AIAAReston, VA1111–1110.2514/6.2016-3917

14PenningtonE.HaferR.NistlerE.SeechT.TossellC.Integration of advanced technology in initial flight training2019 Systems and Information Engineering Design Symposium, SIEDS 20192019IEEEPiscataway, NJ151–510.1109/SIEDS.2019.8735628

15ReismanR.A brief introduction to the art of flight simulationVirtuelle Welten, Ars Electronica1990Linz, Austria159170159–70

16DouradoA. O.MartinC. A.New concept of dynamic flight simulator, Part IAerosp. Sci. Technol.2013Vol. 30ElsevierAmsterdam798279–8210.1016/j.ast.2013.07.005

17RudiD.KieferP.RaubalM.The instructor assistant system (iASSYST) – utilizing eye tracking for commercial aviation training purposesErgonomics2020Vol. 63Taylor & FrancisOxfordshire617961–7910.1080/00140139.2019.1685132

18YavrucukI.KubaliE.TarimciO.A low cost flight simulator using virtual reality toolsIEEE Aerospace and Electronic Systems Magazine2011Vol. 26IEEEPiscataway, NJ101410–410.1109/MAES.2011.5763338

19RossR.SlavinskasD.MazzaconeE.JungT.DaltonJ.US air force weather training platform: Use of virtual reality to reduce training and equipment maintenance costs whilst improving operational efficiency and retention of US air force personnelXR Case Studies: Using Augmented Reality and Virtual Reality Technology in Business2021SpringerCham9110291–10210.1007/978-3-030-72781-9_12

20AlizadehsalehiS.HadaviA.HuangJ. C.2020From BIM to extended reality in AEC industryAutom Constr11610.1016/J.AUTCON.2020.103254

21MilgramP.KishinoF.1994A taxonomy of mixed reality visual displaysIEICE Trans. Information SystemsE77-D132113291321–9

22RollandJ.CakmakciO.2009Head-worn displays: The future through new eyesOpt. Photon. News20202720–710.1364/OPN.20.4.000020

23AzumaR. T.A survey of augmented realityPresence: Teleoperators and Virtual Environments1997Vol. 6355385355–85

24MillerJ.HooverM.WinerE.2020Mitigation of the Microsoft HoloLens’ hardware limitations for a controlled product assembly processInt. J. Adv. Manufact. Technol.109174117541741–5410.1007/s00170-020-05768-y

25

26İbiliE.ÇatM.ResnyanskyD.ŞahinS.BillinghurstM.2020An assessment of geometry teaching supported with augmented reality teaching materials to enhance students’ 3D geometry thinking skillsInt. J. Math. Educ. Sci. Technol.51224246224–4610.1080/0020739X.2019.1583382

27Pérez-LópezD.ConteroM.AlcañizM.Collaborative development of an augmented reality application for digestive and circulatory systems teaching2010 10th IEEE Int’l. Conf. on Advanced Learning Technologies2010IEEEPiscataway, NJ173175173–510.1109/ICALT.2010.54

28BaccaJ.BaldirisS.FabregatR.KinshukGrafS.Mobile augmented reality in vocational education and trainingProcedia Comput. Sci.2015Vol. 75ElsevierAmsterdam495849–5810.1016/j.procs.2015.12.203

29CastilloR. I. BarrazaCruz SánchezV. G.Vergara VillegasO. O.2015A pilot study on the use of mobile augmented reality for interactive experimentation in quadratic equationsMath Probl. Eng.201510.1155/2015/946034

30KüçükS.KapakinS.GöktaşY.2016Learning anatomy via mobile augmented reality: Effects on achievement and cognitive loadAnat. Sci. Educ.9411421411–2110.1002/ase.1603

31FidanM.TuncelM.2019Integrating augmented reality into problem based learning: The effects on learning achievement and attitude in physics educationComput. Educ.14210363510.1016/j.compedu.2019.103635

32CristinaF.DapotoS.ThomasP.PesadoP.Performance evaluation of a 3D engine for mobile devicesCommunications in Computer and Information Science2018Vol. 790155163155–6310.1007/978-3-319-75214-3_15/FIGURES/6

33NazriN. I. A. M.RambliD. R. A.Current limitations and opportunities in mobile augmented reality applications2014 Int’l. Conf. on Computer and Information Sciences, ICCOINS 2014 – A Conference of World Engineering, Science and Technology Congress, ESTCON 2014 – Proceedings2014IEEEPiscataway, NJ141–410.1109/ICCOINS.2014.6868425

34GohE. S.SunarM. S.IsmailA. W.20193D object manipulation techniques in handheld mobile augmented reality interface: A reviewIEEE Access7405814060140581–60110.1109/ACCESS.2019.2906394

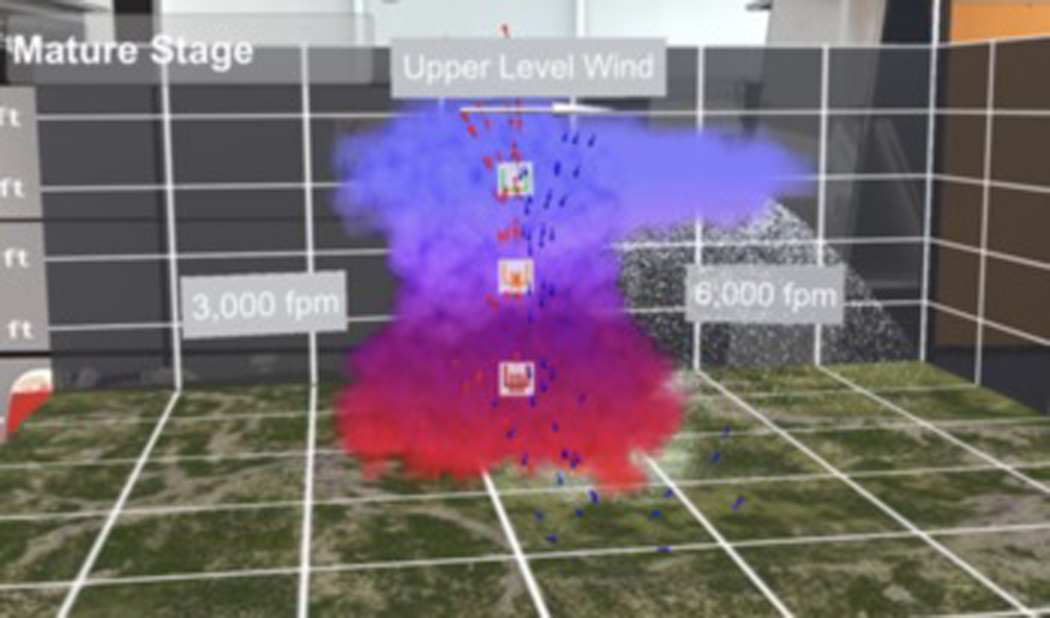

35MeisterP.MillerJ.WangK.DorneichM. C.WinerE.BrownL. J.WhitehurstG.2022Designing three-dimensional augmented reality weather visualizations to enhance general aviation weather educationIEEE Trans Prof. Commun.65321336321–3610.1109/TPC.2022.3155920

36MeisterP.WangK.DorneichM. C.WinerE.BrownL.WhitehurstG.2022Augmented reality enhanced thunderstorm learning experiences for general aviationJ. Air Transportation30113124113–2410.2514/1.D0308

37

38BallJ.StatesU.

39RolfeK. J.JohnM.StaplesFlight Simulation1988Cambridge University PressCambridge

40HuangY.PoolD. M.StroosmaO.ChuQ. P.MulderM.A review of control schemes for hydraulic stewart platform flight simulator motion systemsAIAA Modeling and Simulation Technologies Conf.2016AIAAReston, VA10.2514/6.2016-1436

41StewartD.1965A platform with six degrees of freedomAircraft Engineering and Aerospace Technology38303530–510.1108/eb034141

42PageR.RayL.Brief history of flight simulationSimTecT 2000 Proc.20001111–11

43Gabriella Severe-ValsaintM.phD Ada MishlerLT Michael NataliP.Randolph AstwoodP.LT Todd SeechP.Cecily McCoy-FisherP.Training effectiveness evaluation of an adaptive virtual instructor for naval aviation training2022

44HöllererT.FeinerS.2004Mobile augmented realityTelegeoinformatics: Location-based computing and services21221260221–60

45ClemensA. R. T. H.GruberL.GrassetR.LanglotzT.MilloniA.SchmalstiegD.WagnerD.

46ChatzopoulosDi.BermejoC.HuangZ.HuiP.2017Mobile augmented reality survey: From where we are to where we goIEEE Access5691769506917–5010.1109/ACCESS.2017.2698164

47RaduI.Why should my students use AR? A comparative review of the educational impacts of augmented-reality2012 IEEE Int’l. Symposium on Mixed and Augmented Reality (ISMAR)2012IEEEPiscataway, NJ313314313–410.1109/ISMAR.2012.6402590

48WebsterR.Declarative knowledge acquisition in immersive virtual learning environmentsInteractive Learning Environments2015Vol. 24Taylor & FrancisOxfordshire131913331319–3310.1080/10494820.2014.994533

49IrwansyahF. S.YusufY. M.FaridaI.RamdhaniM. A.2018Augmented reality (AR) technology on the android operating system in chemistry learningIOP Conf. Ser Mater. Sci. Eng.2881206810.1088/1757-899x/288/1/012068

50MacchiarellaN. D.VincenziD. A.Augmented reality in a learning paradigm for flight and aerospace maintenance trainingAIAA/IEEE Digital Avionics Systems Conf. – Proc.2004Vol. 1IEEEPiscataway, NJ10.1109/DASC.2004.1391342

51BroyN.AndréE.SchmidtA.Is stereoscopic 3D a better choice for information representation in the car?Proc. 4th Int’l. Conf. on Automotive User Interfaces and Interactive Vehicular ApplicationsAutomotiveUI ’122012Association for Computing MachineryNew York, NY, USA9310093–10010.1145/2390256.2390270

52RaduI.2014Augmented reality in education: A meta-review and cross-media analysisPers Ubiquitous Comput18153315431533–4310.1007/s00779-013-0747-y

53SolakE.CakirR.Investigating the role of augmented reality technology in the language classroomCroatian J. Education : Hrvatski c̆asopis za odgoj i obrazovanje2016Vol. 18106710851067–8510.15516/cje.v18i4.1729

54CuervoE.Wolman,A.CoxL. P.LebeckK.RazeenA.SaroiuS.MusuvathiM.Kahawai: High-quality mobile gaming using GPU offloadProc. 13th Ann. Int’l. Conf. on Mobile Systems, Applications, and ServicesMobiSys ’152015Association for Computing MachineryNew York, NY, USA121135121–3510.1145/2742647.2742657

55

56ArthC.SchmalstiegD.Challenges of large-scale augmented reality on smartphonesISMAR 2011 Workshop on Enabling Large-Scale Outdoor Mixed Reality and Augmented Reality2011

57CapinT.PulliK.Akenine-MöllerT.2008The state of the art in mobile graphics researchIEEE Comput. Graph. Appl.28748474–8410.1109/MCG.2008.83

58ShiS.NahrstedtK.CampbellR.2012A real-time remote rendering system for interactive mobile graphicsACM Trans. Multimedia Comput. Commun. Appl.810.1145/2348816.2348825

59BoosK.ChuD.CuervoE.FlashBack: Immersive virtual reality on mobile devices via rendering memoizationProc. 14th Annual Int’l. Conf. on Mobile Systems, Applications, and ServicesMobiSys ’162016Association for Computing MachineryNew York, NY291304291–30410.1145/2906388.2906418

60NusratF.HassanF.ZhongH.WangX.How developers optimize virtual reality applications: A study of optimization commits in open source unity projects2021 IEEE/ACM 43rd Int’l. Conf. on Software Engineering (ICSE)2021IEEEPiscataway, NJ473485473–8510.1109/ICSE43902.2021.00052

61WagnerD.ReitmayrG.MulloniA.DrummondT.SchmalstiegD.2009Real-time detection and tracking for augmented reality on mobile phonesIEEE Trans. Vis. Comput. Graph.16355368355–6810.1109/TVCG.2009.99

62OlssonT.SaloM.Online user survey on current mobile augmented reality applications2011 10th IEEE Int’l. Symposium on Mixed and Augmented Reality2011IEEEPiscataway, NJ758475–8410.1109/ISMAR.2011.6092372

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78LongT.2022Analysis of weather-related accident and incident data associated with section 14 CFR Part 91 operationsCollegiate Aviation Review40253925–39

79
