References

1LucianiT.BurksA.SugiyamaC.KomperdaJ.MaraiG. E.2018Details-first, show context, overview last: Supporting exploration of viscous fingers in large-scale ensemble simulationsIEEE Trans. Vis. Comp. Graph.251111–11

2MaC.

3ChoiJ.LeeS.-E.ChoE.KashiwagiY.OkabeS.ChangS.JeongW. K.Interactive dendritic spine analysis based on 3D morphological featuresProc. 2019 IEEE Visualization Conf. (VIS)2019IEEEPiscataway, NJ171175171–510.1109/VISUAL.2019.8933795

4MaraiG. E.MaC.BurksA. T.PellolioF.CanahuateG.VockD. M.MohamedA. S.FullerC. D.2018Precision risk analysis of cancer therapy with interactive nomograms and survival plotsIEEE Trans. Vis. Comp. Graph.25173217451732–4510.1109/TVCG.2018.2817557

5ThomasM.KannampallilT.AbrahamJ.MaraiG. E.Echo: A large display interactive visualization of ICU data for effective care handoffs2017 IEEE Workshop on Visual Analytics in Healthcare (VAHC)2017IEEEPiscataway, NJ475447–5410.1109/VAHC.2017.8387500

6Meyer-SpradowJ.SteggerL.DöringC.RopinskiT.HinrichsK.2008Glyph-based spect visualization for the diagnosis of coronary artery diseaseIEEE Trans. Vis. Comp. Graph.14149915061499–50610.1109/TVCG.2008.136

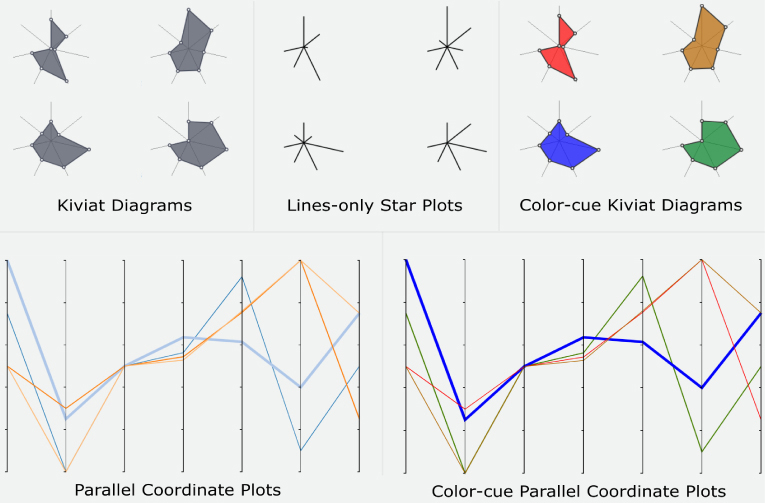

7JäckleD.FuchsJ.KeimD.2016Star glyph insets for overview preservation of multivariate dataElec. Imaging2016191–910.2352/ISSN.2470-1173.2016.1.VDA-506

8FischerF.FuchsJ.MansmannF.Clockmap: Enhancing circular treemaps with temporal glyphs for time-series dataEuroVis Short Papers2012IEEEPiscataway, NJ9710197–101

9KintzelC.FuchsJ.MansmannF.Monitoring large IP spaces with clockviewProc. 8th Int’l. Symposium on Visualization for Cyber Security2011Association for Computing MachineryNew York10.1145/2016904.2016906

10LeggP A.ChungD. H.ParryM. L.JonesM. W.LongR.GriffithsI. W.ChemM.2012Matchpad: Interactive glyph-based visualization for real-time sports performance analysisComput. Graph. Forum31125512641255–6410.1111/j.1467-8659.2012.03118.x

11GeveciB.GarthC.

12RicheN. H.HurterC.DiakopoulosN.CarpendaleS.Data-Driven Storytelling2018CRC PressBoca Raton, FL

13MunznerT.Visualization Analysis and DesignAK Peters Visualization Series2014CRC PressBoca Raton, FL

14LeeM. D.ReillyR. E.ButaviciusM. E.An empirical evaluation of Chernoff faces, star glyphs, and spatial visualizations for binary dataProc. Australian Symposium on Information Visualisation2003Australian Computer SocietyAustralia1101–10

15BorgoR.KehrerJ.ChungD. H.MaguireE.LarameeR. S.HauserH.WardM.ChenM.Glyph-based visualization: Foundations, design guidelines, techniques and applicationsEurographics (STARs)2013COREMilton Keynes396339–63

16O’BrienT.RitzA.RaphaelB.LaidlawD.2010Gremlin: An interactive visualization model for analyzing genomic rearrangementsIEEE Trans. Vis. Comput. Graph.16918926918–2610.1109/TVCG.2010.163

17HoltzY.

18FuchsJ.IsenbergP.BezerianosA.FischerF.BertiniE.2014The influence of contour on similarity perception of star glyphsIEEE Trans. Vis. Comp. Graph.20225122602251–6010.1109/TVCG.2014.2346426

19KosaraR.JohanssonJ.SadloF.MaraiG. E.Circular part-to-whole charts using the area visual cueEuroVis Short Papers2019The Eurographics AssociationEindhoven

20KosaraR.JohanssonJ.SadloF.MaraiG. E.The impact of distribution and chart type on part-to-whole comparisonsEuroVis Short Papers2019The Eurographics AssociationEindhoven

21ChanW. W.-Y.

22LucianiT.CherinkaB.OliphantD.MyersS.Wood-VaseyW. M.LabrinidisA.MaraiG. E.2014Large-scale overlays and trends: Visually mining, panning and zooming the observable universeIEEE Trans. Vis. Comput. Graph.20104810611048–6110.1109/TVCG.2014.2312008

23LucianiT.WenskovitchJ.ChenK.KoesD.TraversT.MaraiG. E.FixingTIM: Interactive exploration of sequence and structural data to identify functional mutations in protein familiesBMC Proc.2014SpringerCham10.1186/1753-6561-8-S2-S3

24KeimD.2000Designing pixel-oriented visualization techniques: Theory and applicationsIEEE Trans. Vis. Comput. Graph.6597859–7810.1109/2945.841121

25KeimD.KriegelH.1996Visualization techniques for mining large databases: A comparisonIEEE Trans. Know. Data Eng.8923938923–3810.1109/69.553159

26FerstlF.KanzlerM.RautenhausM.WestermannR.2016Time-hierarchical clustering and visualization of weather forecast ensemblesIEEE Trans. Vis. Comp. Graph.23831840831–4010.1109/TVCG.2016.2598868

27RopinskiT.PreimB.Taxonomy and usage guidelines for glyph-based medical visualizationProc. Simulation and Visualization (SimVis)2008121138121–38

28HolzingerA.BiemannC.PattichisC. S.KellD. B.

29GleicherM.2018Considerations for visualizing comparisonIEEE Trans. Vis. Comput. Graph.24413423413–2310.1109/TVCG.2017.2744199

30MacEachrenA. M.How Maps Work: Representation, Visualization, and Design2004Guilford PressNew York, NY

31ChernoffH.1973The use of faces to represent points in k-dimensional space graphicallyJ. Am. Stat. Assoc.68361368361–810.1080/01621459.1973.10482434

32WareC.Information Visualization: Perception for Design20123rd ed.Morgan Kaufmann Publishers Inc.San Francisco, CA

33FuchsJ.IsenbergP.BezerianosA.KeimD.2017A systematic review of experimental studies on data glyphsIEEE Trans. Vis. Comp. Graph.23186318791863–7910.1109/TVCG.2016.2549018

34BorgI.StaufenbielT.1992Performance of snow flakes, suns, and factorial suns in the graphical representation of multivariate dataMultivariate Behav. Res.27435543–5510.1207/s15327906mbr2701˙4

35KlippelA.HardistyF.WeaverC.2009Star plots: How shape characteristics influence classification tasksCartography Geo. Inf. Sci.36149163149–6310.1559/152304009788188808

36MillerM.ZhangX.FuchsJ.BlumenscheinM.Evaluating ordering strategies of star glyph axesProc. VIS Short Papers2019IEEEPiscataway, NJ919591–510.1109/VISUAL.2019.8933656

37KeimD.Visual techniques for exploring databasesProc. Knowledge Discovery Databases1997AAAI PressWashington, DC

38PickettR. M.GrinsteinG. G.Iconographic displays for visualizing multidimensional dataProc. 1988 IEEE Int’l. Conf. on Systems, Man, and Cybernetics1988IEEEPiscataway, NJ514519514–910.1109/ICSMC.1988.754351

39SiirtolaH.Combining parallel coordinates with the reorderable matrixProc. CMV2003IEEEPiscataway, NJ637463–7410.1109/CMV.2003.1215004

40SchmidC.HinterbergerH.Comparative multivariate visualization across conceptually different graphic displaysInt. Conf. Scientific Stat. Database Management1994IEEEPiscataway, NJ425142–5110.1109/SSDM.1994.336963

41BotellaC.JolyA.BonnetP.MonestiezP.MunozF.2018Species distribution modeling based on the automated identification of citizen observationsApps. Plant Sci.6e102910.1002/aps3.1029

42PocoJ.DasguptaA.WeiY.HargroveW.SchwalmC.CookR.BertiniE.SilvaC.2014SimilarityExplorer: A visual inter-comparison tool for multifaceted climate dataComput. Graph. Forum33341350341–5010.1111/cgf.12390

43ZhaoY.LuoF.ChenM.WangY.XiaJ.ZhouF.WangY.ChenY.ChenW.2019Evaluating multi-dimensional visualizations for understanding fuzzy clustersIEEE Trans. Vis. Comput. Graph.25122112–2110.1109/TVCG.2018.2865020

44MariesA.LucianiT.PisciuneriP. H.NikM. B.YilmazS. L.GiviP.MaraiG. E.A clustering method for identifying regions of interest in turbulent combustion tensor fieldsVisualization and Processing of Higher Order Descriptors for Multi-Valued Data2015SpringerCham323338323–3810.1007/978-3-319-15090-1_16

45MorrisM. F.1974Kiviat graphs: conventions and figures of meritACM Sigmetrics Perf. Eval. Rev.3282–810.1145/1041691.1041692

46BrewerC.

47BrownS.-A.2016Patient similarity: Emerging concepts in systems and precision medicineFrontiers Physiol.71110.3389/fphys.2016.00561

48HuangA.Similarity measures for text document clusteringProc. Sixth New Zealand Computer Science Research Student Conf. (NZCSRSC2008)20086495649–56

49HoaglinD. C.IglewiczB.TukeyJ. W.1986Performance of some resistant rules for outlier labelingJ. American Stats. Assoc.81991999991–9

50OrloffJ.BloomJ.

51YeoI.-K.JohnsonR. A.A new family of power transformations to improve normality or symmetryBiometrika2000OUPOxford

52CrainiceanuC. M.RuppertD.2004Likelihood ratio tests in linear mixed models with one variance componentJ. R. Stat. Soc.: Series B (Stat. Method.)66165185165–8510.1111/j.1467-9868.2004.00438.x

53SteigerJ. H.Beyond the f test: Effect size confidence intervals and tests of close fit in the analysis of variance and contrast analysisPsychological Methods2004APAWashington, DC

54HolmS.1979A simple sequentially rejective multiple test procedureScandinavian J. Statistics6657065–70

55HarrisonL.

56SeaboldS.PerktoldJ.Statsmodels: Econometric and statistical modeling with pythonProc. Python Sci. Conf.2010ScipyAustin, TX

57van OnzenoodtC.VázquezP.-P.RopinskiT.2023Out of the plane: Flower vs. star glyphs to support high-dimensional exploration in two-dimensional embeddingsIEEE Trans. Vis. Comp. Graph.29546854825468–8210.1109/TVCG.2022.3216919

58KeckM.KammerD.GründerT.ThomT.KleinsteuberM.MaaschA.GrohR.Towards glyph-based visualizations for big data clusteringProc. 10th Int. Symp. Vis. Inf. Comm. Interact.2017ACMNew York, NY129136129–3610.1145/3105971.3105979

59DuncanJ.HumphreysG. W.1989Visual search and stimulus similarityPsychol. Rev.96433458433–5810.1037/0033-295X.96.3.433

60WertheimerM.1923Untersuchungen zur lehre von der gestalt. iiPsychol. Forsch.4301350301–5010.1007/BF00410640

61HarozS.WhitneyD.2012How capacity limits of attention influence information visualization effectivenessIEEE Trans. Vis. Comput. Graph.18240224102402–1010.1109/TVCG.2012.233

62GramazioC.SchlossK.LaidlawD.2014The relation between visualization size, grouping, and user performanceIEEE Trans. Vis. Comp. Graph.20195319621953–6210.1109/TVCG.2014.2346983

63SchlossK.GramazioC.SilvermanA. T.ParkerM.WangA.2019Mapping color to meaning in colormap data visualizationsIEEE Trans. Vis. Comput. Graph.25810819810–910.1109/TVCG.2018.2865147
