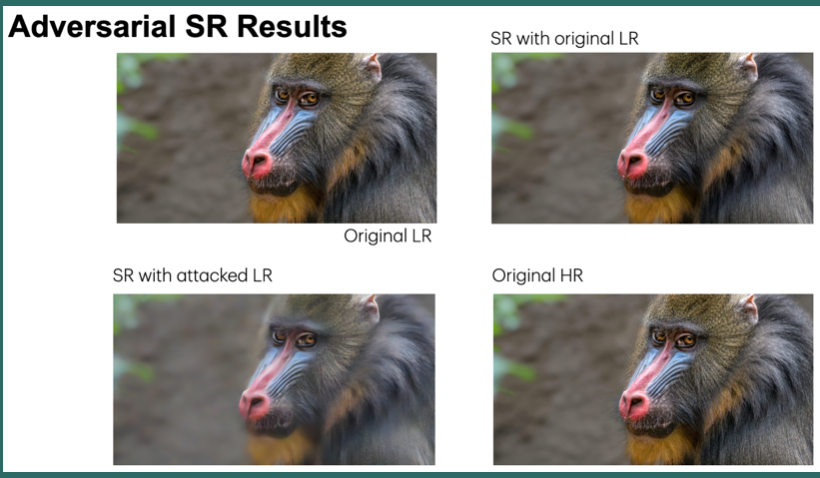

Machine learning and image enhancement models are prone to adversarial attacks, in which inputs are manipulated to cause misclassifications. While previous research has focused on techniques such as Generative Adversarial Networks (GANs), there has been limited exploration of GANs and the Synthetic Minority Oversampling Technique (SMOTE) as tools for adversarial attacks on image super-resolution models and on text and image classification models. Our study addresses this gap by training various machine learning models and using GANs and SMOTE to generate additional data points aimed at attacking super-resolution and classification algorithms. We extend our investigation to face recognition models, training a Convolutional Neural Network (CNN) and subjecting it to adversarial attacks that apply fast gradient sign perturbations to key features identified by GradCAM, a technique used to highlight the key image characteristics that CNNs use in classification. Our experiments reveal a significant vulnerability in classification models. Specifically, we observe a 20% decrease in accuracy for the top-performing text classification models post-attack, along with a 30% decrease in facial recognition accuracy.
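To make the idea of a fast gradient sign perturbation restricted to key features concrete, the following is a minimal sketch rather than the study's implementation. It assumes a logistic-regression model in place of the CNN so that the input gradient can be written in closed form, and a hand-written binary `mask` standing in for the salient region that GradCAM would supply. The names `masked_fgsm`, `mask`, and `eps`, and all numeric values, are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def masked_fgsm(x, y, w, b, eps, mask):
    """One fast-gradient-sign step on a logistic-regression model.

    x: input features, y: true label (0 or 1), w, b: model weights and bias,
    eps: perturbation size, mask: 1 where a saliency method marked a feature
    as important, 0 elsewhere (assumed given; not computed here).
    """
    p = sigmoid(np.dot(w, x) + b)
    grad_x = (p - y) * w  # gradient of the cross-entropy loss w.r.t. x
    # Step in the direction that increases the loss, only on masked features.
    return x + eps * np.sign(grad_x) * mask

# Toy model and input (illustrative values only).
w = np.array([2.0, -1.0, 0.5, 0.0])
b = 0.0
x = np.array([0.4, 0.1, 0.2, 0.9])
y = 1
mask = np.array([1.0, 1.0, 0.0, 0.0])  # pretend the first two features are salient

x_adv = masked_fgsm(x, y, w, b, eps=0.5, mask=mask)
print("clean score:", sigmoid(np.dot(w, x) + b))
print("adversarial score:", sigmoid(np.dot(w, x_adv) + b))
```

In this toy case the clean input is scored above 0.5 for the true class and the perturbed input below it, while the unmasked features are left unchanged. For a CNN, the gradient would come from automatic differentiation instead of the closed-form expression.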

Hyperspectral image classification has received growing attention from researchers in recent years. Hyperspectral imaging systems use sensors that acquire data mostly in the visible through near-infrared wavelength ranges and capture tens to hundreds of spectral bands. This detailed spectral information increases the chance of classifying materials accurately. Unfortunately, conventional spectral camera sensors use spatial or spectral scanning during acquisition, which is only suitable for static scenes such as earth observation. In dynamic scenarios, such as autonomous driving applications, the entire hyperspectral cube must be acquired in one step. To allow hyperspectral classification and enhance terrain drivability analysis for autonomous driving, we investigate the suitability of novel mosaic-snapshot-based hyperspectral cameras. These cameras capture an entire hyperspectral cube without requiring moving parts or line-scanning. The sensor is mounted on a vehicle in a driving scenario in rough terrain with dynamic scenes. The captured hyperspectral data is used for terrain classification with machine learning techniques. A major problem, however, is the presence of shadows in captured scenes, which degrades the classification results. We present and test methods to automatically detect shadows by taking advantage of the near-infrared (NIR) part of the spectrum to build shadow maps. By using these shadow maps, a classifier may be able to produce better results and avoid misclassifications due to shadows. The approaches are tested on our new hand-labeled hyperspectral dataset, acquired by driving through suburban areas with several hyperspectral snapshot-mosaic cameras.
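The abstract does not say how the shadow maps are computed, so the following is only an illustrative stand-in: it marks a pixel as a shadow candidate when its mean response over the NIR bands falls below a cut-off. The function name `nir_shadow_map`, the band indices, and the threshold are all assumptions made for this sketch.

```python
import numpy as np

def nir_shadow_map(cube, nir_bands, threshold):
    """Return a boolean map that is True where the mean NIR response is low.

    cube: array of shape (height, width, bands), values scaled to [0, 1].
    nir_bands: indices of the bands assumed to lie in the near infrared.
    threshold: hypothetical cut-off; it would need tuning per camera and scene.
    """
    nir_mean = cube[:, :, nir_bands].mean(axis=2)
    return nir_mean < threshold

# Tiny synthetic cube: bright everywhere except one dark pixel.
cube = np.full((2, 2, 4), 0.8)
cube[0, 0, :] = 0.1
shadow = nir_shadow_map(cube, nir_bands=[2, 3], threshold=0.3)
print(shadow)
```

A map like this could be passed to a classifier as an extra per-pixel feature or used to exclude shadowed pixels from training, depending on how the classifier is set up.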

A system-on-chip (SoC) platform having a dual-core microprocessor (μP) and a field-programmable gate array (FPGA), as well as interfaces for sensors and networking, is a promising architecture for edge computing applications in computer vision. In this paper, we consider a case study involving the low-cost Zynq-7000 SoC, which is used to implement a three-stage image signal processor (ISP) for a nonlinear CMOS image sensor (CIS) and to interface the imaging system to a network. Although the high-definition imaging system operates efficiently in hard real time, by exploiting an FPGA implementation, it sends information over the network on demand only, by exploiting a Linux-based μP implementation. In the case study, the Zynq-7000 SoC is configured in a novel way. In particular, to guarantee hard real-time performance, the FPGA is always the master, communicating with the μP through interrupt service routines and direct memory access channels. Results include a validation of the overall system, using a simulated CIS, and an analysis of the system complexity. On this low-cost SoC, resources are available for significant additional complexity, so that a computer vision application can be integrated with the nonlinear CMOS imaging system in the future.

Hyperspectral imaging increases the amount of information captured per pixel compared with normal color cameras. Conventional hyperspectral sensors, as used in satellite imaging, use spatial or spectral scanning during acquisition, which is only suitable for static scenes. In dynamic scenarios, such as autonomous driving applications, the entire hyperspectral cube must be acquired at the same time. In this work, we investigate the suitability of novel snapshot hyperspectral cameras in such dynamic scenarios. These new sensors capture a hyperspectral cube containing 16 or 25 spectral bands without requiring moving parts or line-scanning. The sensors were mounted on land vehicles and used in several driving scenarios in rough terrain and dynamic scenes. We captured several hundred gigabytes of hyperspectral data, which were used for terrain classification. We propose a random-forest classifier based on hyperspectral and spatial features, combined with fully connected conditional random fields that ensure local consistency and context-aware semantic scene segmentation. The classification is evaluated against a novel hyperspectral ground truth dataset created specifically for this purpose.
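As a rough illustration of how a snapshot frame can become a cube, the sketch below assumes that the 16 or 25 bands come from a repeating 4 by 4 or 5 by 5 filter pattern on the sensor, which the abstract does not state. Under that assumption, each pattern cell becomes one low-resolution pixel with one value per band. The function name `mosaic_to_cube` and the row-major band ordering are hypothetical.

```python
import numpy as np

def mosaic_to_cube(raw, pattern):
    """Rearrange a raw mosaic frame into a (rows, cols, pattern * pattern) cube.

    raw: 2-D sensor frame. pattern: side length of the repeating filter
    pattern (4 for 16 bands, 5 for 25 bands under the stated assumption).
    Rows and columns that do not fill a whole pattern cell are dropped.
    """
    h, w = raw.shape
    h, w = h - h % pattern, w - w % pattern
    # Split each axis into (cell index, offset within cell).
    cells = raw[:h, :w].reshape(h // pattern, pattern, w // pattern, pattern)
    # Bring the two cell indices first, then flatten the offsets into bands.
    return cells.transpose(0, 2, 1, 3).reshape(h // pattern, w // pattern, pattern * pattern)

raw = np.arange(64).reshape(8, 8)
cube = mosaic_to_cube(raw, 4)
print(cube.shape)
```

This trades spatial resolution for spectral resolution: an 8 by 8 frame with a 4 by 4 pattern yields a 2 by 2 cube with 16 bands. Real pipelines typically also interpolate and calibrate the bands, which is omitted here.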

We present a new image enhancement algorithm based on combined local and global image processing. The basic idea is to apply the α-rooting image enhancement approach to different image blocks. For this purpose, we split the image, using moving windows, into disjoint blocks of different sizes (8 by 8, 16 by 16, 32 by 32, etc.). The parameter α for every block is chosen by optimizing the measure of enhancement (EME). The resulting image is a weighted mean of all processed blocks. This strategy yields higher contrast for images with irregular lighting and brightness gradients. Some experimental results are presented to illustrate the performance of the proposed algorithm.
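The block-wise scheme can be sketched as follows, under several assumptions that the abstract leaves open: α-rooting is applied to the 2-D Fourier transform (each magnitude raised to the power α, phase kept), each enhanced block is rescaled to the block's original intensity range, EME is taken in one common formulation as the mean of 20·log10(max/min) over small tiles with a constant `eps` added to avoid division by zero, α is picked from a short candidate list, and the block sizes are weighted equally. All function names and default values are hypothetical.

```python
import numpy as np

def alpha_root(block, alpha):
    """Raise each Fourier magnitude to the power alpha, keeping the phase."""
    spectrum = np.fft.fft2(block)
    mag = np.abs(spectrum)
    gain = np.ones_like(mag)
    nonzero = mag > 0
    gain[nonzero] = mag[nonzero] ** (alpha - 1.0)
    out = np.real(np.fft.ifft2(spectrum * gain))
    lo, hi = block.min(), block.max()
    span = out.max() - out.min()
    if span == 0 or hi == lo:
        return block.astype(float)  # flat block: nothing to enhance
    # Assumption: map the result back to the block's original range.
    return (out - out.min()) / span * (hi - lo) + lo

def eme(img, k=4, eps=1.0):
    """Mean of 20*log10((max+eps)/(min+eps)) over disjoint k x k tiles."""
    scores = []
    for r in range(0, img.shape[0] - k + 1, k):
        for c in range(0, img.shape[1] - k + 1, k):
            tile = img[r:r + k, c:c + k]
            scores.append(20.0 * np.log10((tile.max() + eps) / (tile.min() + eps)))
    return float(np.mean(scores))

def best_alpha(block, candidates=(0.8, 0.9, 1.0)):
    """Pick the candidate alpha whose enhanced block has the highest EME."""
    return max(candidates, key=lambda a: eme(alpha_root(block, a)))

def enhance(image, block_sizes=(8, 16), weights=None):
    """Weighted mean of per-block alpha-rooted layers, one layer per block size."""
    image = image.astype(float)
    if weights is None:
        weights = [1.0 / len(block_sizes)] * len(block_sizes)
    result = np.zeros_like(image)
    for size, weight in zip(block_sizes, weights):
        layer = image.copy()  # pixels outside whole blocks stay unchanged
        for r in range(0, image.shape[0] - size + 1, size):
            for c in range(0, image.shape[1] - size + 1, size):
                block = image[r:r + size, c:c + size]
                layer[r:r + size, c:c + size] = alpha_root(block, best_alpha(block))
        result += weight * layer
    return result

rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(16, 16)).astype(float)
enhanced = enhance(img)
print(enhanced.shape, eme(img), eme(enhanced))
```

The sketch expects non-negative pixel values and block sizes of at least the EME tile size `k`. A grid search over a few α values is used here only for brevity; any one-dimensional optimizer could replace it.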