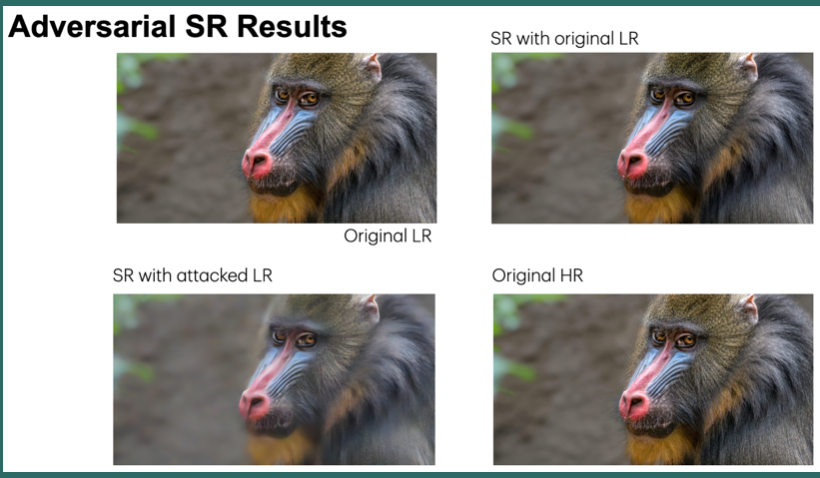

Machine learning and image enhancement models are vulnerable to adversarial attacks, in which inputs are deliberately manipulated to cause misclassifications. Previous research on such attacks has focused on techniques like Generative Adversarial Networks (GANs), but there has been limited exploration of using GANs and the Synthetic Minority Oversampling Technique (SMOTE) to attack image super-resolution models and text and image classification models. Our study addresses this gap by training various machine learning models and using GANs and SMOTE to generate additional data points aimed at attacking super-resolution and classification algorithms. We extend our investigation to face recognition models, training a Convolutional Neural Network (CNN) and subjecting it to adversarial attacks that apply fast gradient sign perturbations to key features identified by GradCAM, a technique that highlights the image regions a CNN relies on for classification. Our experiments reveal a significant vulnerability in classification models: we observe a 20% decrease in accuracy for the top-performing text classification models after the attack, along with a 30% decrease in facial recognition accuracy.
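The face recognition attack combines two ideas: the fast gradient sign update, x_adv = x + ε·sign(∇ₓL), and a restriction of that update to the regions a saliency map marks as important. The sketch below illustrates the combination under loudly stated assumptions; it is not the authors' implementation. A logistic regression model stands in for the CNN so the input gradient can be written analytically, a random array stands in for an upsampled GradCAM heatmap, and the top-20% threshold and ε = 0.05 are arbitrary illustrative choices. The function names `logistic_input_gradient`, `saliency_mask`, and `masked_fgsm` are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def logistic_input_gradient(x, y, w, b):
    """Gradient of binary cross-entropy w.r.t. the input x.

    Assumption: a logistic model p = sigmoid(w . x + b) stands in for the CNN,
    so dL/dx = (p - y) * w can be computed in closed form.
    """
    p = sigmoid(np.dot(w, x.ravel()) + b)
    return ((p - y) * w).reshape(x.shape)


def saliency_mask(heatmap, quantile=0.8):
    """Binary mask keeping only the most salient pixels (top 20% by default).

    Assumption: `heatmap` is a GradCAM-style map already upsampled to the
    image size; computing GradCAM itself is outside this sketch.
    """
    threshold = np.quantile(heatmap, quantile)
    return (heatmap >= threshold).astype(np.float64)


def masked_fgsm(x, y, w, b, heatmap, epsilon=0.05, quantile=0.8):
    """Fast gradient sign step applied only inside the salient region."""
    grad = logistic_input_gradient(x, y, w, b)
    mask = saliency_mask(heatmap, quantile)
    x_adv = x + epsilon * np.sign(grad) * mask
    # Keep pixel intensities in the valid [0, 1] range.
    return np.clip(x_adv, 0.0, 1.0)


# Usage on toy data (all values are synthetic placeholders).
rng = np.random.default_rng(0)
image = rng.uniform(0.2, 0.8, size=(8, 8))
w = rng.normal(size=64)
b = 0.0
heatmap = rng.uniform(size=(8, 8))  # placeholder for a real GradCAM map
label = 1.0
adv = masked_fgsm(image, label, w, b, heatmap, epsilon=0.05)
```

Because every perturbed pixel moves by exactly ε in the direction that increases the loss, the model's confidence in the true label drops while pixels outside the mask are left untouched. With a real CNN the analytic gradient would be replaced by automatic differentiation.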

Langalibalele Lunga, Suhas Sreehari, "Can Adversarial Modifications Undermine Super-resolution Algorithms?" in Electronic Imaging, 2025, pp. 152-1 to 152-8, https://doi.org/10.2352/EI.2025.37.14.COIMG-152
