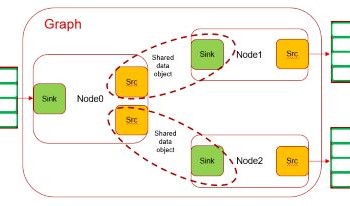

OpenVX is an open standard for accelerating computer vision applications on heterogeneous platforms with multiple processing elements. The automotive industry has adopted OpenVX as a go-to framework for developing performance-critical, power-optimized and safety-compliant computer vision pipelines on real-time heterogeneous embedded SoCs. With the ever-growing demand for a variety of vision applications in both the automotive and industrial markets, optimizing the OpenVX development flow has become a necessity. Although OpenVX works well when all the elements in the pipeline are implemented with OpenVX, it lacks utilities to interact effectively with other frameworks. We propose a software design that makes OpenVX development faster by adding a thin layer on top of OpenVX, which simplifies construction of an OpenVX pipeline and exposes a simple interface for seamless interaction with other frameworks such as v4l2, OpenMax and DRM.

This paper investigates the relationship between image quality and computer vision performance. Two image quality (IQ) metrics, as defined in the IEEE P2020 draft Standard for Image quality in automotive systems, are used to determine the impact of image quality on object detection. The IQ metrics used are (i) Modulation Transfer Function (MTF), the most commonly utilized metric for measuring the sharpness of a camera; and (ii) Modulation and Contrast Transfer Accuracy (CTA), a newly defined, state-of-the-art metric for measuring image contrast. The results show that the MTF and CTA of an optical system are impacted by ISP tuning. Some correlation is shown to exist between MTF and object detection (OD) performance: in some models, AP5095 improves as MTF50 increases. Scenes with similar CTA scores can have widely varying object detection performance; for example, Gaussian noise and edge enhancement produce similar CTA scores but different AP5095 scores. For this reason, CTA is shown to be limited in its ability to predict object detection performance. The results suggest MTF is a better predictor of ML performance than CTA.
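As a rough illustration of the MTF50 figure mentioned above, the following sketch derives an MTF curve from a sampled line spread function (LSF) and interpolates the spatial frequency at which the MTF falls to 50% of its zero-frequency value. This is a minimal sketch under stated assumptions and not the measurement procedure of the paper or of IEEE P2020: the function names `mtf_from_lsf` and `mtf50` are hypothetical, the LSF is assumed to be already extracted and noise-free, and a plain discrete Fourier transform stands in for a full slanted-edge analysis.

```python
import cmath
import math


def mtf_from_lsf(lsf):
    """Return (frequencies in cycles/pixel, MTF normalized to 1 at DC).

    Hypothetical helper: takes a sampled line spread function and
    computes the magnitude of its DFT up to the Nyquist frequency.
    """
    n = len(lsf)
    half = n // 2
    magnitudes = []
    for k in range(half + 1):
        total = sum(v * cmath.exp(-2j * math.pi * k * i / n)
                    for i, v in enumerate(lsf))
        magnitudes.append(abs(total))
    dc = magnitudes[0]
    freqs = [k / n for k in range(half + 1)]
    return freqs, [m / dc for m in magnitudes]


def mtf50(freqs, mtf):
    """Linearly interpolate the first frequency where the MTF crosses 0.5.

    Returns None if the curve never drops to 0.5 below Nyquist.
    """
    for i in range(1, len(mtf)):
        if mtf[i - 1] > 0.5 >= mtf[i]:
            frac = (mtf[i - 1] - 0.5) / (mtf[i - 1] - mtf[i])
            return freqs[i - 1] + frac * (freqs[i] - freqs[i - 1])
    return None


if __name__ == "__main__":
    # Synthetic Gaussian LSF with sigma = 1.5 pixels (an assumed test input).
    sigma = 1.5
    lsf = [math.exp(-0.5 * ((i - 32) / sigma) ** 2) for i in range(64)]
    freqs, curve = mtf_from_lsf(lsf)
    print("MTF50 (cycles/pixel):", mtf50(freqs, curve))
```

For a Gaussian LSF the result can be checked against the closed form, since the MTF is then also Gaussian and MTF50 equals sqrt(ln 2 / 2) / (pi * sigma).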

A typical edge compute SoC capable of handling deep learning workloads at low power is usually heterogeneous by design. It typically comprises multiple initiators, such as real-time IPs for capture and display, hardware accelerators for ISP, computer vision, deep learning engines, codecs, DSP or ARM cores for general compute, and a GPU for 2D/3D visualization. Each initiator transacts with shared resources such as the L3/L4/DDR memory systems to exchange data with the others. Careful orchestration of this dataflow is important to keep every producer and consumer at full utilization without any drop in real-time performance, which is critical for automotive applications. The software stack for such complex workflows can be intimidating for customers to bring up and often acts as an entry barrier that keeps many from even evaluating the device for performance. In this paper we propose techniques, developed on TI’s latest TDA4V-Mid SoC targeted at ADAS and autonomous applications, that are designed around ease of use while still delivering device-entitlement-class performance using open standards such as DL runtimes, OpenVX and GStreamer.

This paper presents the design of an accurate rain model for the commercially available Anyverse automotive simulation environment. The model incorporates the physical properties of rain, and a process to validate the model against real rain is proposed. Because path tracing through a particle-based model is computationally expensive, a second, more computationally efficient model is also proposed. In the second model, the rain is modeled using a combination of a particle-based model and an attenuation field. The attenuation field is fine-tuned against the particle-only model to minimize the difference between the two models.

Optimizing exposure time for low-light scenarios involves a trade-off between motion blur and signal-to-noise ratio. A method for defining the optimum exposure time for a given function has not been described in the literature. This paper presents the design of a simulation of motion blur and exposure time from the perspective of a real-world camera. The model incorporates characteristics of real-world cameras, including the light level (quanta), shot noise and lens distortion. The simulation uses an image quality target chart, the Siemens star, and outputs a blurred image as if captured by a camera with a set exposure time and a set movement speed. The resulting image is then processed in Imatest, where image quality readings are extracted so that the relationship between exposure time, motion blur and the image quality metrics can be evaluated.
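The trade-off described above can be sketched numerically. The example below is a minimal illustration under simplifying assumptions, not the simulation from the paper: it assumes a purely shot-noise-limited pixel (so SNR grows with the square root of the collected photons), linear motion at a constant image-plane speed, and hypothetical function names and parameter values.

```python
import math


def shot_noise_snr(photon_rate, exposure_s):
    """SNR of a shot-noise-limited pixel.

    With a mean of N = photon_rate * exposure_s collected photons and
    Poisson noise sqrt(N), the SNR is N / sqrt(N) = sqrt(N).
    """
    return math.sqrt(photon_rate * exposure_s)


def blur_extent_px(speed_px_per_s, exposure_s):
    """Length of the motion blur streak in pixels for constant speed."""
    return speed_px_per_s * exposure_s


def longest_exposure_within_blur(speed_px_per_s, max_blur_px):
    """Longest exposure time that keeps the blur within max_blur_px."""
    return max_blur_px / speed_px_per_s


if __name__ == "__main__":
    photon_rate = 1.0e4   # photons per pixel per second (assumed)
    speed = 500.0         # image-plane speed in pixels per second (assumed)
    for t_ms in (1, 4, 16):
        t = t_ms / 1000.0
        print(f"{t_ms:>3} ms: SNR = {shot_noise_snr(photon_rate, t):5.2f}, "
              f"blur = {blur_extent_px(speed, t):4.1f} px")
    t_max = longest_exposure_within_blur(speed, max_blur_px=2.0)
    print("longest exposure for 2 px of blur (s):", t_max)
```

Lengthening the exposure raises the SNR only with the square root of time, while the blur grows linearly, which is why an optimum has to be chosen against a target rather than maximized in one direction.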

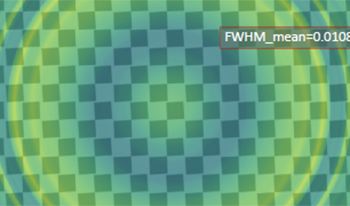

We present a novel metric, the Spatial Recall Index, to assess the performance of machine-learning (ML) algorithms for automotive applications, focusing on where in the image a given level of performance occurs. Typical metrics such as intersection-over-union (IoU), precision-recall curves or average precision (AP) quantify the performance over a whole database of images, neglecting spatial performance variations. But because the optics of camera systems vary spatially over the field of view, the performance of ML-based algorithms is also a function of space, which we show in simulation. A realistic objective lens based on a Cooke triplet, exhibiting typical optical aberrations such as astigmatism and chromatic aberration that all vary over the field, is modeled. The model is then applied to a subset of the BDD100k dataset using spatially varying kernels. We then quantify local changes in the performance of the pre-trained Mask R-CNN algorithm. Our examples demonstrate the spatial dependence of the performance of ML-based algorithms on the optical quality over the field, highlighting the need to take the spatial dimension into account when training ML-based algorithms, especially when looking ahead to autonomous driving applications.
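The abstract does not give the definition of the Spatial Recall Index, so the following is only a hypothetical sketch of the general idea of resolving recall over image position. It bins ground-truth boxes by their center into a coarse grid and reports, per cell, the fraction that overlap some detection at an IoU threshold. The grid binning, the function names and the simplified matching (no one-to-one assignment, no confidence scores) are all assumptions made for illustration.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x0, y0, x1, y1)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def spatial_recall_map(ground_truth, detections, image_size,
                       grid=(4, 4), iou_thresh=0.5):
    """Per-cell recall over a dataset (hypothetical, simplified).

    ground_truth and detections are lists with one list of boxes per
    image. Cells that contain no ground-truth centers are None.
    """
    width, height = image_size
    rows, cols = grid
    hits = [[0] * cols for _ in range(rows)]
    totals = [[0] * cols for _ in range(rows)]
    for gt_boxes, det_boxes in zip(ground_truth, detections):
        for gt in gt_boxes:
            cx = (gt[0] + gt[2]) / 2.0
            cy = (gt[1] + gt[3]) / 2.0
            col = min(int(cx / width * cols), cols - 1)
            row = min(int(cy / height * rows), rows - 1)
            totals[row][col] += 1
            # Simplification: any overlapping detection counts as a hit.
            if any(iou(gt, det) >= iou_thresh for det in det_boxes):
                hits[row][col] += 1
    return [[hits[r][c] / totals[r][c] if totals[r][c] else None
             for c in range(cols)] for r in range(rows)]


if __name__ == "__main__":
    gt = [[(10, 10, 30, 30), (60, 60, 90, 90)]]
    det = [[(10, 10, 30, 30)]]
    for row in spatial_recall_map(gt, det, (100, 100), grid=(2, 2)):
        print(row)
```

A map like this makes it visible when objects near the image corners are missed more often than objects near the center, which a single dataset-wide recall or AP value would average away.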

Autonomous driving plays a crucial role in preventing accidents, and modern vehicles are equipped with multimodal sensor systems, AI-driven perception and sensor fusion. These features are, however, not stable over a vehicle’s lifetime because of various forms of degradation. This introduces an inherent, yet unaddressed, risk: once vehicles are in the field, their individual exposure to environmental effects leads to unpredictable behavior. The goal of this paper is to raise awareness of automotive sensor degradation. Various effects exist which, in combination, may have a severe impact on the AI-based processing and ultimately on the customer domain. Failure mode and effects analysis (FMEA) type approaches are used to structure complete coverage of the relevant automotive degradation effects. The sensors considered include cameras, RADARs, LiDARs and other modalities, both outside the vehicle and in-cabin. Sensor robustness alone is a well-known topic that is addressed by DV/PV. However, this is not sufficient, and the paper examines various degradations that go significantly beyond currently tested environmental stress scenarios. In addition, the combination of sensor degradation and its impact on AI processing is identified as a validation gap. An outlook on future analysis and on ways to detect relevant sensor degradations is also presented.

In this paper, we present an overview of automotive image quality challenges and link them to the physical properties of image acquisition. This process shows that detection-probability-based KPIs are a helpful tool for linking image quality to the tasks of SAE-classified supported and automated driving. We develop questions around the challenges of automotive image quality and show that color separation probability (CSP) and contrast detection probability (CDP) in particular are key enablers for improving the know-how and overview of the image quality optimization problem. Next, we introduce a proposal for color separation probability as a new KPI, based on the random effects of photon shot noise and the properties of light spectra that cause color metamerism. This allows us to demonstrate the image quality influences related to color at different stages of the image generation pipeline. In the second part, we investigate the previously presented KPI contrast detection probability and show how it links to different metrics of automotive imaging such as HDR, low-light performance and detectivity of an object. In conclusion, this paper summarizes the standardization status of these detection-probability-based KPIs within IEEE P2020 and outlines the next steps for these work packages.
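To make the idea of a detection-probability KPI more concrete, the sketch below estimates a contrast detection probability by Monte Carlo simulation: the fraction of noisy measurements whose contrast lands within a tolerance band around the noise-free contrast. It is an illustration under stated assumptions rather than the IEEE P2020 or the authors' definition: Michelson contrast is used, photon shot noise is approximated by a Gaussian with variance equal to the mean, and the function names, the tolerance of 50% and the signal levels are hypothetical.

```python
import random


def michelson(a, b):
    """Michelson contrast between two positive signal levels."""
    return abs(a - b) / (a + b)


def contrast_detection_probability(mean_a, mean_b, tolerance=0.5,
                                   trials=20000, seed=0):
    """Monte Carlo estimate of a CDP-style metric (hypothetical sketch).

    mean_a and mean_b are mean photon counts of two patches. Shot noise
    is approximated as Gaussian with standard deviation sqrt(mean).
    """
    rng = random.Random(seed)
    nominal = michelson(mean_a, mean_b)
    low, high = nominal * (1 - tolerance), nominal * (1 + tolerance)
    inside = 0
    for _ in range(trials):
        # Clamp to a tiny positive value so the contrast stays defined.
        a = max(rng.gauss(mean_a, mean_a ** 0.5), 1e-9)
        b = max(rng.gauss(mean_b, mean_b ** 0.5), 1e-9)
        if low <= michelson(a, b) <= high:
            inside += 1
    return inside / trials


if __name__ == "__main__":
    # Same nominal contrast (1/3) at a bright and a dim light level.
    print("bright:", contrast_detection_probability(1000.0, 2000.0))
    print("dim:   ", contrast_detection_probability(10.0, 20.0))
```

Both patch pairs have the same nominal contrast, but at the dim level shot noise pushes many measurements outside the tolerance band, which is how such a KPI ties low-light performance to the ability to resolve a given contrast.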