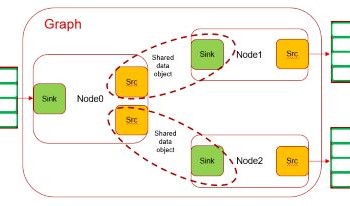

OpenVX is an open standard for accelerating computer vision applications on heterogeneous platforms with multiple processing elements. The automotive industry has adopted OpenVX as a go-to framework for developing performance-critical, power-optimized, and safety-compliant computer vision pipelines on real-time heterogeneous embedded SoCs. With the ever-growing demand for a variety of vision applications in both the automotive and industrial markets, optimizing the OpenVX development flow has become a necessity. Although OpenVX works well when all elements in the pipeline are implemented with OpenVX, it lacks utilities to interact effectively with other frameworks. We propose a software design that makes OpenVX development faster by adding a thin layer on top of OpenVX; this layer simplifies construction of an OpenVX pipeline and exposes a simple interface to enable seamless interaction with other frameworks such as v4l2, OpenMax, and DRM.

Deep learning has enabled rapid advancements in the field of image processing. Learning-based approaches have achieved stunning success over their traditional signal-processing-based counterparts for a variety of applications such as object detection and semantic segmentation. This has resulted in the parallel development of hardware architectures capable of optimizing deep learning inference in real time. Embedded devices tend to have hard constraints on internal memory space and must rely on larger (but much slower) DDR memory to store the large volumes of data generated while processing deep learning algorithms. The associated systems therefore have to evolve to make use of the optimized hardware, balancing compute time against data operations. We propose a generalized framework that, given a set of compute elements and a memory arrangement, devises an efficient method for processing multidimensional data to optimize the inference time of deep learning algorithms for vision applications.

A typical edge compute SoC capable of handling deep learning workloads at low power is usually heterogeneous by design. It typically comprises multiple initiators, such as real-time IPs for capture and display; hardware accelerators for ISP, computer vision, deep learning, and codecs; DSP or ARM cores for general compute; and a GPU for 2D/3D visualization. Every participating initiator transacts with common resources such as the L3/L4/DDR memory systems to exchange data seamlessly with the others. Careful orchestration of this dataflow is important to keep every producer and consumer at full utilization without any drop in real-time performance, which is critical for automotive applications. The software stack for such complex workflows can be quite intimidating for customers to bring up, and it often acts as an entry barrier that keeps many from even evaluating the device for performance. In this paper we propose techniques developed on TI’s latest TDA4V-Mid SoC, targeted at ADAS and autonomous applications. The techniques are designed around ease of use while still achieving device-entitlement-class performance, using open standards such as DL runtimes, OpenVX, and GStreamer.

Auto-valet parking is a key emerging function for Advanced Driver Assistance Systems (ADAS), enhancing the traditional surround view system by providing more autonomy during parking scenarios. An auto-valet parking system is typically built from multiple hardware components, e.g. ISPs, microcontrollers, FPGAs, a GPU, and an Ethernet/PCIe switch. Texas Instruments’ new Jacinto7 platform is one of the industry’s most highly integrated SoCs, replacing these components with a single TDA4VMID chip. The TDA4VMID SoC can concurrently run analytics (traditional computer vision as well as deep learning) and sophisticated 3D surround view, making it a cost-effective and power-optimized solution. TDA4VMID is a truly heterogeneous architecture, and it can be programmed using an efficient and easy-to-use OpenVX-based middleware framework to distribute software components across cores. This paper explains the typical functions for analytics and 3D surround view in an auto-valet parking system, along with their data flow and mapping to the multiple cores of the TDA4VMID SoC. An auto-valet parking system can be realized on the TDA4VMID SoC with all processing offloaded from the host ARM to the remaining SoC cores, providing ample headroom for future-proofing as well as the ability to add customer-specific differentiation.

In this paper, we present an overview of automotive image quality challenges and link them to the physical properties of image acquisition. This process shows that detection-probability-based KPIs are a helpful tool for linking image quality to the SAE-classified supported and automated driving tasks. We develop questions around the challenges of automotive image quality and show that color separation probability (CSP) and contrast detection probability (CDP) in particular are key enablers for improving the know-how and overview of the image quality optimization problem. Next, we introduce a proposal for color separation probability as a new KPI, based on the random effects of photon shot noise and the properties of light spectra that cause color metamerism. This allows us to demonstrate the image quality influences related to color at different stages of the image generation pipeline. Second, we investigate the previously presented KPI contrast detection probability and show how it links to different metrics of automotive imaging such as HDR, low-light performance, and the detectivity of an object. In conclusion, this paper summarizes the standardization status of these detection-probability-based KPIs within IEEE P2020 and outlines the next steps for these work packages.

Will autonomous vehicles ever be able to drive around safely? In Germany, lane markers on streets are white. In construction zones, temporary yellow markers are installed on top of the standard ones, and they direct the traffic, e.g. into shifted lanes. So the only differentiation is the color. But what about the white markers at times close to sunset? Then we drive into Austria, and the temporary markers turn orange. And driving in the US makes the whole country look like a construction zone, because markers are always yellow. Automotive cameras are often not RGB cameras. They use other color filters to maximize sensitivity, which often does not help in differentiating colors. So does the spectral reflectance matter? What impact does the illumination have? There are many different illuminants, such as daylight at different times of the day, different kinds of streetlights, and different car headlights. Traffic signs create another color problem. We drive from Switzerland to France. In Switzerland the freeway signs are green and the major road signs are blue. When you cross the French border, it is the other way around. How can these problems be solved?

The automotive industry formed the IEEE-P2020 initiative to work jointly on key performance indicators (KPIs) that can be used to predict how well a camera system suits its use cases. A very fundamental application of cameras is to detect object contrasts for object recognition or stereo vision object matching. The most important KPI the group is working on is the contrast detection probability (CDP), a metric that describes the performance of components and systems and is independent of any assumptions about the camera model or other properties. While the theory behind CDP is already well established, we present actual measurement results and the implementation for camera tests. We also show how CDP can be used to improve low-light sensitivity and dynamic range measurements.
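As a rough illustration of the idea behind a contrast detection probability, the sketch below estimates by Monte Carlo simulation how often a noisy contrast measurement between two patches lands within a tolerance band around the nominal contrast. The abstract does not give the formula, so everything here is an assumption made for illustration: the use of Michelson contrast, the relative tolerance `tolerance`, the Gaussian approximation of photon shot noise (variance equal to the mean signal), and the function names `michelson` and `estimate_cdp`.

```python
import random


def michelson(a, b):
    """Michelson contrast between two non-negative signal levels."""
    return abs(a - b) / (a + b) if (a + b) > 0 else 0.0


def estimate_cdp(mean_a, mean_b, tolerance=0.5, trials=20000, seed=0):
    """Fraction of noisy measurements whose contrast lies within
    +/- tolerance * nominal contrast of the nominal contrast.

    Assumption: shot noise is approximated as Gaussian with variance
    equal to the mean signal (in photo-electrons).
    """
    rng = random.Random(seed)
    nominal = michelson(mean_a, mean_b)
    hits = 0
    for _ in range(trials):
        # Draw one noisy sample per patch, clamped at zero signal.
        a = max(0.0, rng.gauss(mean_a, mean_a ** 0.5))
        b = max(0.0, rng.gauss(mean_b, mean_b ** 0.5))
        if abs(michelson(a, b) - nominal) <= tolerance * nominal:
            hits += 1
    return hits / trials


if __name__ == "__main__":
    print("bright scene:", estimate_cdp(10000, 5000))
    print("dim scene:   ", estimate_cdp(10, 8))
```

Under these assumptions, a bright pair of patches yields a probability near one, while a dim, low-contrast pair yields a much lower value, which is the qualitative behavior that makes such a metric useful for low-light evaluation.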

This paper explores the use of stixels in a probabilistic stereo vision-based collision-warning system that can be part of an ADAS for intelligent vehicles. In most current systems, collision warnings are based on radar or on monocular vision using pattern recognition (and ultra-sound for park assist). Since detecting collisions is such a core functionality of intelligent vehicles, redundancy is key. Therefore, we explore the use of stereo vision for reliable collision prediction. Our algorithm consists of a Bayesian histogram filter that provides the probability of collision for multiple interception regions and angles towards the vehicle. This could additionally be fused with other sources of information in larger systems. Our algorithm builds upon the disparity Stixel World that has been developed for efficient automotive vision applications. Combined with image flow and uncertainty modeling, our system samples and propagates asteroids, which are dynamic particles that can be utilized for collision prediction. At best, our independent system detects all 31 simulated collisions (2 false warnings), while this setting generates 12 false warnings on the real-world data.
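For readers unfamiliar with the term, a Bayesian histogram filter keeps a discrete belief over a set of bins and alternates a prediction step with a measurement update. The following is a generic textbook sketch of that cycle, not the authors' filter: the one-dimensional bin layout, the motion kernel, the likelihood values, and the function names `normalize`, `predict`, and `update` are all assumptions chosen for illustration.

```python
def normalize(belief):
    """Scale a list of non-negative weights so that it sums to one."""
    total = sum(belief)
    if total == 0:
        # No information left: fall back to a uniform belief.
        return [1.0 / len(belief)] * len(belief)
    return [b / total for b in belief]


def predict(belief, kernel):
    """Prediction step: move probability mass between bins.

    kernel maps a bin offset to the probability of that shift.
    Mass that would leave the histogram is clamped to the edge bins.
    """
    n = len(belief)
    out = [0.0] * n
    for i, p in enumerate(belief):
        for offset, w in kernel.items():
            j = min(max(i + offset, 0), n - 1)
            out[j] += p * w
    return out


def update(belief, likelihood):
    """Measurement step: multiply by the per-bin likelihood, renormalize."""
    return normalize([b * l for b, l in zip(belief, likelihood)])


if __name__ == "__main__":
    # Hypothetical example: five distance bins, bin 0 is closest.
    prior = [0.2] * 5
    approach = {-1: 0.8, 0: 0.2}            # object mostly moves one bin closer
    likelihood = [0.1, 0.7, 0.1, 0.05, 0.05]  # measurement favors bin 1
    posterior = update(predict(prior, approach), likelihood)
    print(posterior)
```

In a collision-warning setting, the bins could stand for interception regions or angles, and the posterior mass in the relevant bins would serve as the collision probability; how the paper defines its bins and likelihoods is not stated in the abstract.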

In recent years, the use of LED lighting has become widespread in the automotive environment, largely because of its high energy efficiency, reliability, and low maintenance costs. There has also been a concurrent increase in the use and complexity of automotive camera systems. To a large extent, LED lighting and automotive camera technology evolved separately and independently. As the use of both technologies has increased, it has become clear that LED lighting poses significant challenges for automotive imaging, namely so-called "LED flicker". LED flicker is an artifact observed in digital imaging where an imaged light source appears to flicker, even though the light source appears constant to a human observer. This paper defines the root cause and manifestations of LED flicker. It defines the use cases where LED flicker occurs, and the related consequences. It further defines a test methodology and metrics for evaluating an imaging system's susceptibility to LED flicker.
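One common way to picture the artifact is a pulse-width-modulated (PWM) LED sampled by a camera whose exposure is shorter than the PWM period. The sketch below computes, for each frame, the fraction of the exposure window during which the LED is on. It is a simplified model under stated assumptions, not the test methodology of the paper: the LED is assumed to switch on at the start of every PWM period, and the frame rate, exposure time, PWM frequency, duty cycle, and the function names `led_on_time` and `frame_brightness` are illustrative choices.

```python
def led_on_time(start, end, pwm_hz, duty):
    """Time within [start, end) during which a PWM-driven LED is on.

    Assumption: the LED turns on at the start of each PWM period and
    stays on for duty * period seconds.
    """
    period = 1.0 / pwm_hz
    on_len = duty * period
    total = 0.0
    k = int(start // period)  # first PWM period that may overlap the window
    while k * period < end:
        on_start = k * period
        on_end = on_start + on_len
        # Overlap between the on-interval and the exposure window.
        total += max(0.0, min(end, on_end) - max(start, on_start))
        k += 1
    return total


def frame_brightness(n_frames, fps, exposure, pwm_hz, duty):
    """Relative LED brightness (0..1) recorded in each of n_frames frames."""
    return [
        led_on_time(i / fps, i / fps + exposure, pwm_hz, duty) / exposure
        for i in range(n_frames)
    ]


if __name__ == "__main__":
    # 100 Hz PWM at 20 % duty cycle, 30 fps camera.
    print("1 ms exposure: ", frame_brightness(6, 30, 0.001, 100, 0.2))
    print("10 ms exposure:", frame_brightness(6, 30, 0.010, 100, 0.2))
```

With the short exposure, some frames catch the LED fully on and others catch it fully off, so the light appears to flicker from frame to frame. With an exposure equal to one full PWM period, every frame records the same average brightness.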

Driven by the mandated adoption of advanced safety features enforced by governments around the globe, as well as strong consumer demand for improved safety and convenience, the automotive industry is going through an intensified arms race to equip vehicles with more sensors and greater computation capacity. Among the various sensors, camera and radar stand out as a popular combination offering complementary capabilities. As a result, camera radar fusion (CRF for short) has been regarded as one of the key technology trends for future advanced driver assistance systems (ADAS). This paper reports a camera radar fusion system developed at TI, which is powered by a broad set of TI silicon products, including CMOS radar, the TDA SoC processor, FPD-Link II/III SerDes, PMIC, and so forth. The system is developed to showcase not only the algorithmic benefits of fusion, but also the competitiveness of TI solutions as a whole in terms of coverage of capabilities, balance between performance and energy efficiency, and rich support from the associated HW and SW ecosystem.