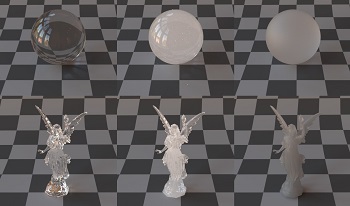

Light-permeable materials are usually characterized by the perceptual attributes of transparency, translucency, and opacity. Technical definitions and standards leave room for subjective interpretation of how these perceptual attributes relate to optical properties and to one another, which causes miscommunication in industry and academia alike. A recent work hypothesized that a Gaussian function, or a similar bell-shaped curve, describes the relationship between translucency, on the one hand, and transparency and opacity, on the other. Another work proposed a translucency classification system for computer graphics, in which transparency, translucency, and opacity are modulated by three optical properties: subsurface scattering, subsurface absorption, and surface roughness. In this work, we conducted two psychophysical experiments to scale the magnitude of transparency and translucency of different light-permeable materials. Our goals were to test the hypothesis that a Gaussian function can model the relationship between transparency and translucency, and to assess how well the aforementioned classification system describes the relationship between optical and perceptual properties. We found that the results vary significantly between object shapes. While a bell-shaped relationship between transparency and translucency was observed for spherical objects, it did not generalize to a more complex shape. Furthermore, how optical properties modulate transparency and translucency also depends on object shape. We conclude that these cross-shape differences are rooted in the different image cues generated by different object scales and surface geometries.
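The Gaussian hypothesis can be made concrete as a curve fit of translucency ratings against transparency ratings, with translucency peaking at intermediate transparency. The sketch below is illustrative only. The function names, the log-quadratic (Caruana) fitting method, and the synthetic input values are assumptions for this example and are not the analysis used in the study.

```python
import math


def gaussian(x, a, mu, sigma):
    """Bell-shaped model: predicted translucency at transparency x."""
    return a * math.exp(-((x - mu) ** 2) / (2 * sigma ** 2))


def _solve3(m, v):
    """Solve the 3x3 system m @ p = v by Gaussian elimination with partial pivoting."""
    aug = [row[:] + [rhs] for row, rhs in zip(m, v)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, 3):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, 4):
                aug[r][c] -= factor * aug[col][c]
    p = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):
        rest = sum(aug[r][c] * p[c] for c in range(r + 1, 3))
        p[r] = (aug[r][3] - rest) / aug[r][r]
    return p


def fit_gaussian(xs, ys):
    """Fit y = a*exp(-(x - mu)^2 / (2 sigma^2)) to positive ys.

    Log-quadratic (Caruana) trick: ln y = c0 + c1*x + c2*x^2 is linear in
    (c0, c1, c2), so ordinary least squares on ln y yields the Gaussian.
    """
    if any(y <= 0 for y in ys):
        raise ValueError("all y values must be positive to take logarithms")
    logs = [math.log(y) for y in ys]
    # Normal equations: sum_j (sum x^(i+j)) c_j = sum x^i ln y, for i = 0, 1, 2.
    moments = [sum(x ** k for x in xs) for k in range(5)]
    m = [[moments[i + j] for j in range(3)] for i in range(3)]
    v = [sum(l * x ** i for x, l in zip(xs, logs)) for i in range(3)]
    c0, c1, c2 = _solve3(m, v)
    if c2 >= 0:
        raise ValueError("data are not bell-shaped: quadratic term is not negative")
    sigma = math.sqrt(-1.0 / (2.0 * c2))
    mu = -c1 / (2.0 * c2)
    a = math.exp(c0 - c1 ** 2 / (4.0 * c2))
    return a, mu, sigma


if __name__ == "__main__":
    # Synthetic, noise-free ratings generated from known parameters (not study data).
    xs = [i / 10 for i in range(11)]
    ys = [gaussian(x, 0.8, 0.5, 0.2) for x in xs]
    print(fit_gaussian(xs, ys))  # recovers approximately (0.8, 0.5, 0.2)
```

The log-domain fit keeps the example dependency-free, but it over-weights small ratings. With noisy psychophysical data, a nonlinear least-squares fit such as `scipy.optimize.curve_fit` on the original scale would usually be preferable.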

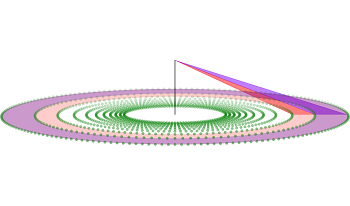

Both natural scene statistics and ground surfaces have been shown to play important roles in visual perception, in particular in the perception of distance. Yet surprisingly few studies have examined the natural statistics of distances to the ground, and those that have used a loose definition of ground. Additionally, perception studies investigating the role of the ground surface typically use artificial scenes containing perfectly flat ground surfaces with relatively few non-ground objects. In contrast, ground surfaces in natural scenes are typically non-planar and are occluded by a large number of non-ground objects. Our study investigates the distance statistics of many natural scenes across three datasets, with the goal of analyzing the ground surface and non-ground objects separately. We used a recent filtering method to partition LiDAR-acquired 3D point clouds into ground points and non-ground points. We then examined how distance distributions depend on distance, viewing elevation angle, and simulated viewing height. We found, first, that the distance distribution of ground points shares some similarities with that of a perfectly flat plane, namely a sharp peak at a near distance that depends on viewing height, but also some differences. Second, the distribution of non-ground points is flatter and does not vary with viewing height. Third, the proportion of non-ground points increases with viewing elevation angle. Our findings provide further insight into the statistical information available for distance perception in natural scenes. They also suggest that, to better reflect the statistics of natural scenes, studies of distance perception should consider a broader range of ground surfaces and object distributions than those used in the past.
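For intuition about the perfectly flat plane baseline, consider an observer at height h above an infinite flat plane whose samples are spaced uniformly in depression angle θ, measured downward from the horizon. This uniform-angle sampling is an assumption for illustration, and the actual LiDAR datasets may sample differently. A ray at angle θ lands at distance d = h / sin θ. This gives the density p(d) = (2/π) · h / (d · √(d² − h²)) for d > h, with cumulative distribution F(d) = (2/π) · arccos(h / d). The density diverges at d = h, so in this idealized model the sharp near peak sits at a distance equal to the viewing height. The function names and heights below are hypothetical.

```python
import math


def flat_ground_distances(height, n_rays=100_000):
    """Distances at which rays hit an infinite flat plane `height` below the eye.

    Assumption: rays are spaced uniformly in depression angle theta over
    (0, pi/2), measured downward from the horizon; a ray at theta lands at
    d = height / sin(theta).
    """
    step = (math.pi / 2) / n_rays
    return [height / math.sin((i + 0.5) * step) for i in range(n_rays)]


def analytic_cdf(d, height):
    """P(distance <= d) under the same model: (2/pi) * arccos(height / d) for d > height."""
    if d <= height:
        return 0.0
    return (2 / math.pi) * math.acos(height / d)


def mode_bin_start(height, bin_width=0.25, max_distance=50.0):
    """Lower edge of the most populated histogram bin of ground distances."""
    counts = [0] * int(max_distance / bin_width)
    for d in flat_ground_distances(height):
        if d < max_distance:  # very distant near-horizon hits are ignored
            idx = min(int(d / bin_width), len(counts) - 1)
            counts[idx] += 1
    return counts.index(max(counts)) * bin_width


if __name__ == "__main__":
    for h in (1.6, 3.0):  # hypothetical eye heights in metres
        print(f"height {h} m -> mode bin starts at {mode_bin_start(h)} m")
```

Raising the simulated height shifts the peak outward by the same amount. This is the flat-plane dependence on viewing height that the ground-point distributions are compared against.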

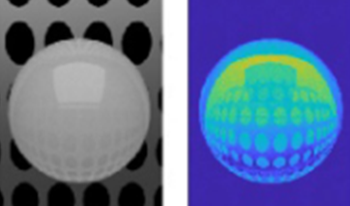

In this work, we study the perception of suprathreshold translucency differences to expand knowledge about material appearance perception in imaging, computer graphics, and 3D printing applications. Translucency is one of the most important appearance attributes and significantly affects the look of objects and materials. However, knowledge about translucency perception remains limited, and even less is known about the perception of translucency differences between materials. We hypothesize that humans are more sensitive to small changes in absorption and scattering coefficients when optically thin materials are examined and when objects have geometrically thin parts. To test these hypotheses, we generated images of objects with different shapes and subsurface scattering properties and conducted psychophysical experiments with these visual stimuli. The analysis of the experimental data supports these hypotheses. Based on post-experiment comments made by the observers, we argue that the results could demonstrate a fundamental difference between the mechanisms of translucency perception in see-through and non-see-through objects and materials.
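For reference, the following standard radiative-transfer quantities underlie the terms above. This is a textbook definition, not a model stated in this work. With absorption coefficient σ_a and scattering coefficient σ_s, the extinction coefficient, the optical thickness of a path of length d, and the fraction of light crossing that path unabsorbed and unscattered (Beer-Lambert law) are

$$
\sigma_t = \sigma_a + \sigma_s, \qquad \tau = \sigma_t \, d, \qquad T_{\text{ballistic}} = e^{-\tau}.
$$

A material is optically thin along a path when τ ≪ 1, so that most light passes through without being absorbed or scattered. Because τ combines the coefficients with the geometric path length d, optical thinness depends on both the material and the object geometry.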

Medical image data is critically important for a range of disciplines, including medical image perception research, clinician training programs, and computer vision algorithms, among many other applications. Unfortunately, authentic medical image data is relatively scarce for many of these uses. Because of this, researchers often collect their own data at nearby hospitals, which limits the generalizability of the data and findings. Moreover, even when larger datasets become available, their usefulness is limited by necessary data processing procedures such as de-identification, labeling, and categorization, which require significant time and effort. Thus, in some applications, including behavioral experiments on medical image perception, researchers have used naive artificial medical images (e.g., shapes or textures that are not realistic). These artificial images are easy to generate and manipulate, but their lack of authenticity inevitably raises questions about the applicability of the research to clinical practice. Recent progress in Generative Adversarial Networks (GANs) has made it possible to generate high-quality authentic images. In this paper, we propose using GANs to generate authentic medical images for medical imaging studies. We also adopt a controllable method to manipulate the attributes of the generated images so that they can satisfy arbitrary experimenter goals, tasks, or stimulus settings. We tested the proposed method on various medical image modalities, including mammograms, MRI, CT, and skin cancer images. The generated authentic medical images demonstrate the success of the proposed method. The model and the generated images could be employed in a wide range of medical image perception research.