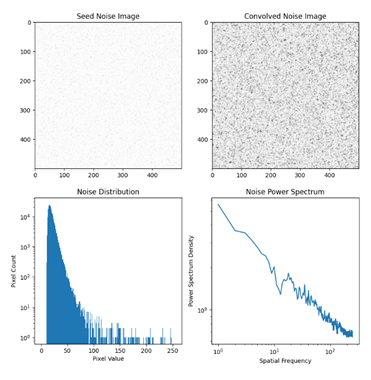

Gaussian distribution models are widely used to characterize and model noise in CMOS sensors. Although they provide the simplicity and speed needed in real-time applications, they usually do not represent the dark current characteristics observed in real devices very well. The statistical distribution of CMOS sensor dark noise is typically right-skewed with a long tail, i.e., it contains more “hot” pixels than a normal distribution predicts. Furthermore, the spatial distribution in real devices typically exhibits a 1/f-like power spectrum instead of the flat spectrum produced by a simple Gaussian distribution model. When simulating sensor images, for example when generating images and videos for training and testing image processing algorithms, it is important to reproduce both characteristics accurately. We propose a simple convolution-type algorithm that uses seed images with a log-normal distribution and randomized kernels to reproduce both the statistical and the spatial distributions more accurately. The convolution formulation also enables relatively easy GPU acceleration to support real-time execution for driving simulation platforms.
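The core mechanism described above, a right-skewed seed image smoothed by a randomized kernel, can be sketched in a few lines of Python with NumPy. This is a minimal illustration under our own assumptions, not the authors' implementation: the function name `simulate_dark_frame`, the log-normal parameters, a single 5×5 kernel of uniform random positive weights normalized to unit sum, and circular (FFT-based) convolution are all hypothetical choices. A single smoothing kernel introduces spatial correlation but is not by itself guaranteed to yield a 1/f-like spectrum; the abstract does not specify how the kernels are randomized to achieve that.

```python
import numpy as np


def simulate_dark_frame(height, width, mu=0.0, sigma=0.5, kernel_size=5, seed=None):
    """Hypothetical sketch: log-normal seed image convolved with a random kernel.

    Assumptions (not from the source): log-normal parameters mu/sigma, one
    kernel of uniform random positive weights, circular FFT convolution.
    """
    rng = np.random.default_rng(seed)

    # Right-skewed seed image: log-normal values give a long "hot pixel" tail.
    seed_img = rng.lognormal(mean=mu, sigma=sigma, size=(height, width))

    # Randomized kernel: positive random weights normalized to sum to 1,
    # so the mean dark level of the seed image is preserved.
    kernel = rng.random((kernel_size, kernel_size))
    kernel /= kernel.sum()

    # Embed the kernel in an image-sized array and roll it so its center sits
    # at the origin; this keeps the output aligned with the seed image.
    padded = np.zeros((height, width))
    padded[:kernel_size, :kernel_size] = kernel
    half = kernel_size // 2
    padded = np.roll(padded, (-half, -half), axis=(0, 1))

    # Circular 2-D convolution via the FFT (the step that parallelizes well on GPUs).
    spectrum = np.fft.fft2(seed_img) * np.fft.fft2(padded)
    return np.real(np.fft.ifft2(spectrum))


frame = simulate_dark_frame(256, 256, seed=0)
```

Because the kernel weights are non-negative and sum to one, the convolution preserves the mean dark level and keeps the output positively skewed (the skew is reduced, not removed), while neighboring pixels become correlated. The FFT formulation is also what makes GPU offload comparatively simple, since the whole operation reduces to forward transforms, a pointwise product and an inverse transform.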

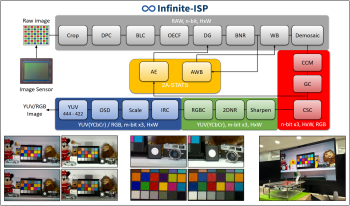

While the complete image signal processing (ISP) algorithmic pipeline and its hardware implementation have traditionally not been available in the public domain, access to them is necessary to enable new imaging use cases and to improve the performance of high-level deep learning vision networks. In this paper, we present Infinite-ISP: a complete hardware ISP development suite comprising an ISP algorithm development platform, a bit-accurate fixed-point reference model, an ISP register transfer level development platform, an FPGA development workflow and an ISP tuning tool. To aid the hardware development process, we use the Infinite-ISP framework to develop end-to-end reference designs for the KV260 vision AI starter kit (AMD Xilinx Kria SOM) and the Efinix Ti180J484 kit, with support for three image sensors (Sony IMX219, Onsemi AR1335 and Omnivision OV5647). These ISP reference designs support 10-bit 2592×1536 4-megapixel (MP) Bayer image sensors with a maximum pixel throughput of 125 MP/s, or 30 frames per second. We demonstrate that the image quality of Infinite-ISP is comparable to that of commercial ISPs found in Skype Certified Cameras and that it performs competitively with digital still cameras in terms of the perceptual IQ metrics BRISQUE, PIQE, and NIQE. We envision Infinite-ISP (available at https://github.com/10x-Engineers/Infinite-ISP under a permissive open-source license) streamlining ISP development from algorithmic design to hardware implementation and fostering community building and further research around it in both academia and industry.
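The sensor resolution, frame rate and throughput figures quoted above can be cross-checked with simple arithmetic. The snippet below uses only the numbers stated in the abstract; it is a back-of-the-envelope check, not part of Infinite-ISP.

```python
# Values taken from the abstract.
WIDTH, HEIGHT = 2592, 1536      # pixels
FPS = 30                        # frames per second
MAX_THROUGHPUT_MPS = 125        # maximum pixel throughput, MP/s

pixels_per_frame = WIDTH * HEIGHT           # pixels in one Bayer frame
required_mps = pixels_per_frame * FPS / 1e6 # megapixels per second at 30 fps

print(f"{pixels_per_frame / 1e6:.2f} MP/frame, {required_mps:.1f} MP/s needed")
print("within budget:", required_mps <= MAX_THROUGHPUT_MPS)
```

A 2592×1536 frame holds 3,981,312 pixels (printed as 3.98 MP/frame), which rounds to the quoted 4 MP, and streaming it at 30 frames per second needs about 119.4 MP/s, inside the stated 125 MP/s maximum.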

Very low-cost, consumer-oriented commodity products can often be modified to support more sophisticated uses. The current work explores a variety of ways in which any of a family of small, stand-alone cameras with rechargeable batteries, intended for use by kids as young as three years old, can be modified for more sophisticated use. Unfortunately, the image quality and exposure controls available are similar to what would be found in a cheap webcam; to be precise, the camera contains two separate camera modules, each comparable to a webcam. However, simple modifications convert these toys into interchangeable-lens mirrorless cameras that accept lenses in a variety of standard mounts. Cameras so modified can capture full-spectrum images or can employ a filter providing any desired spectral sensitivity profile. The camera internals are also accessible to a limited degree, which easily allows options such as wiring to an external exposure trigger. The cameras record on a TF card, and a 32 GB or 64 GB card is typically included with the camera at a total cost virtually identical to the cost of the TF card alone. The current work can be considered both a study revealing the internal construction of a kids' camera and a guide to adapting it for more serious uses as the KAMF mirrorless camera.

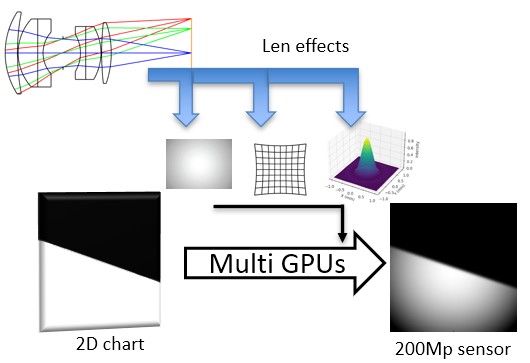

In a lens-based camera, light from an object is projected onto an image sensor through a lens. This projection involves various optical effects, including the point spread function (PSF), distortion, and relative illumination (RI), which can be modeled using lens design software such as Zemax and Code V. To evaluate lens performance, a simulation system can be implemented to apply these effects to a scene image. In this paper, we propose a lens-based multispectral image simulation system capable of handling images with 200 million pixels, matching the resolution of the latest mobile devices. Our approach optimizes the spatially variant PSF (SVPSF) algorithm and leverages a distributed multi-GPU system for massively parallel computing. The system processes 200M-pixel multispectral 2D images across 31 wavelengths ranging from 400 nm to 700 nm. We compare the edge spread function, RI, and distortion results with those from Zemax, achieving approximately 99% similarity. The entire computation for a 200M-pixel, 31-wavelength image is completed in under two hours. This efficient and accurate simulation system enables pre-evaluation of lens performance before manufacturing, making it a valuable tool for optical design and analysis.
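A common way to approximate a spatially variant PSF is to split the image plane into tiles and treat the PSF as constant within each tile. The Python/NumPy sketch below illustrates that idea together with a relative-illumination map; it is not the authors' optimized SVPSF algorithm, it omits distortion, and the function names, the tiling scheme, the per-tile PSF inputs (which in practice would be exported from lens design software) and the uniform 10 nm band spacing are all assumptions. For clarity, each tile's PSF is applied to the whole image and only that tile's pixels are kept, which is simple but wasteful; a system at the 200M-pixel scale would instead process padded tiles in parallel across GPUs.

```python
import numpy as np

# Assumption: 31 uniformly spaced bands from 400 nm to 700 nm (10 nm steps).
WAVELENGTHS_NM = np.linspace(400.0, 700.0, 31)


def fft_convolve_same(img, psf):
    """Linear 2-D convolution via zero-padded FFT, cropped to img's shape."""
    h, w = img.shape
    kh, kw = psf.shape
    full = (h + kh - 1, w + kw - 1)
    out = np.fft.irfft2(np.fft.rfft2(img, full) * np.fft.rfft2(psf, full), full)
    top, left = kh // 2, kw // 2  # offset of the PSF center (odd-sized PSFs)
    return out[top:top + h, left:left + w]


def simulate_svpsf(scene, psf_grid, ri_map):
    """Hypothetical tile-wise spatially variant blur, then relative illumination.

    scene:    (H, W) image for a single wavelength
    psf_grid: psf_grid[i][j] is the normalized PSF for tile row i, column j
    ri_map:   (H, W) relative-illumination factors (1.0 on axis)
    """
    h, w = scene.shape
    rows, cols = len(psf_grid), len(psf_grid[0])
    r_edges = np.linspace(0, h, rows + 1).astype(int)
    c_edges = np.linspace(0, w, cols + 1).astype(int)
    out = np.empty((h, w))
    for i in range(rows):
        for j in range(cols):
            # Blur with this tile's PSF, then keep only the tile's own pixels.
            blurred = fft_convolve_same(scene, psf_grid[i][j])
            r0, r1 = r_edges[i], r_edges[i + 1]
            c0, c1 = c_edges[j], c_edges[j + 1]
            out[r0:r1, c0:c1] = blurred[r0:r1, c0:c1]
    # Relative illumination is a per-pixel multiplicative falloff.
    return out * ri_map


def simulate_multispectral(cube, psfs_per_band, ri_per_band):
    """Apply the monochromatic model independently to each wavelength band."""
    return np.stack([
        simulate_svpsf(cube[k], psfs_per_band[k], ri_per_band[k])
        for k in range(cube.shape[0])
    ])
```

Because each band is processed independently, the per-wavelength loop is an obvious unit of work to distribute across GPUs, in addition to the tiles within each band.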

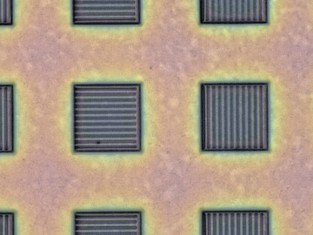

In this study, we investigated how to improve the pixel characteristics of a polarization image sensor used for high-frequency electric field imaging. We confirmed that the signal-to-noise ratio can be improved by increasing the number of metal wiring layers that form the polarizer and by enlarging the pixel dimensions. Combining these improvements is expected to enable high-frequency electric field imaging with higher sensitivity.
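Why larger pixels help can be illustrated with a textbook noise model. Assuming (this is not stated in the abstract) that the collected signal $N_e$, in electrons, scales with the pixel's light-collecting area $A$, and that the noise consists of photon shot noise plus a read noise $\sigma_r$:

$$
\mathrm{SNR} = \frac{N_e}{\sqrt{N_e + \sigma_r^2}}, \qquad N_e \propto A
\quad\Rightarrow\quad
\mathrm{SNR} \approx \sqrt{N_e} \propto \sqrt{A} \qquad (N_e \gg \sigma_r^2).
$$

In the shot-noise-limited regime, doubling the pixel pitch (quadrupling the area) therefore roughly doubles the SNR. This simple model does not capture the separate benefit of adding metal wiring layers to the polarizer.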

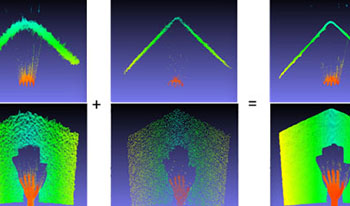

We introduce an innovative 3D depth sensing scheme that seamlessly integrates various depth sensing modalities and technologies into a single compact device. Our approach dynamically switches between depth sensing modes, including indirect time-of-flight (iToF) and structured light, enabling real-time fusion of depth images. We demonstrated iToF depth imaging without multipath interference (MPI) while simultaneously achieving high image resolution (VGA) and high depth accuracy at a frame rate of 30 fps.
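For reference, depth in a continuous-wave iToF pixel is commonly recovered from four correlation samples taken at 0°, 90°, 180° and 270° demodulation phase. The sketch below implements that standard four-phase calculation in Python/NumPy; it does not model the authors' mode switching, structured-light fusion or MPI suppression, and the function names, the signal model `a(theta) = B + A*cos(phi - theta)` and the 20 MHz modulation frequency used in the example are assumptions.

```python
import numpy as np

C = 299_792_458.0  # speed of light in m/s


def itof_depth(a0, a90, a180, a270, f_mod):
    """Four-phase continuous-wave iToF depth estimate.

    Assumed signal model: a(theta) = B + A*cos(phi - theta), sampled at
    theta = 0, 90, 180 and 270 degrees. Inputs may be scalars or arrays.
    """
    i_comp = np.asarray(a0) - np.asarray(a180)    # 2A*cos(phi); offset B cancels
    q_comp = np.asarray(a90) - np.asarray(a270)   # 2A*sin(phi)
    phase = np.mod(np.arctan2(q_comp, i_comp), 2 * np.pi)  # phi in [0, 2*pi)
    depth = C * phase / (4 * np.pi * f_mod)       # round trip: phi = 4*pi*f*d/c
    amplitude = 0.5 * np.hypot(i_comp, q_comp)    # recovered A (signal strength)
    return depth, amplitude


def unambiguous_range(f_mod):
    """Maximum depth before the phase wraps: c / (2 f)."""
    return C / (2 * f_mod)


def synth_samples(depth_m, f_mod, amp=1.0, offset=2.0):
    """Hypothetical helper: ideal samples for a target at depth_m (no MPI, no noise)."""
    phi = 4 * np.pi * f_mod * np.asarray(depth_m) / C
    return [offset + amp * np.cos(phi - np.deg2rad(t)) for t in (0, 90, 180, 270)]


f = 20e6  # assumed 20 MHz modulation frequency
d, a = itof_depth(*synth_samples([0.5, 1.5, 3.0], f), f)
print(np.round(d, 3), np.round(a, 3))
```

At 20 MHz the unambiguous range c/(2f) is about 7.49 m, so a target at 8 m would be reported at roughly 0.51 m; this wrap-around is one reason practical iToF systems often combine several modulation frequencies.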