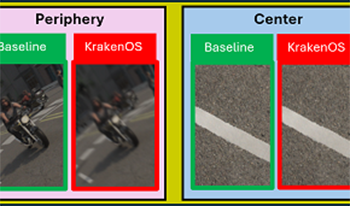

Automotive simulation is a potentially cost-effective strategy for identifying and testing corner-case scenarios in automotive perception. Recent work has shown a significant shift toward creating realistic synthetic data for road traffic scenarios using video graphics engines. However, a gap exists in modeling the realistic optical aberrations associated with cameras in automotive simulation. This paper builds on concepts from the existing literature to model optical degradations in simulated environments using the Python-based ray-tracing library KrakenOS. As a novel pipeline, we degrade automotive fisheye simulation using an optical doublet with a ±2° field of view (FOV), introducing realistic optical artifacts into two simulation images from SynWoodscape and Parallel Domain Woodscape. We evaluate KrakenOS against Ansys Zemax OpticStudio, an industrial benchmark for optical design and simulation, by calculating the root mean square error (RMSE), which averaged around 0.023 across the RGB light spectrum. Lastly, we measure the image sharpness of the degraded simulation using the ISO 12233:2023 slanted-edge method and show how both qualitative and measured results indicate the extent of the spatial variation in image sharpness from the periphery to the center of the degradations.
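The RMSE comparison described above can be illustrated with a minimal sketch. The abstract does not state which sampled quantity was compared between the two tools, so the code simply assumes two equally sampled curves per color channel. The function names and the sample values are hypothetical placeholders, not data from the paper.

```python
import math


def rmse(reference, candidate):
    """Root mean square error between two equally sampled curves."""
    if len(reference) != len(candidate):
        raise ValueError("curves must have the same number of samples")
    squared = [(r - c) ** 2 for r, c in zip(reference, candidate)]
    return math.sqrt(sum(squared) / len(squared))


def mean_rmse_over_channels(reference_by_channel, candidate_by_channel):
    """Average the per-channel RMSE over all channels (e.g. R, G, B)."""
    errors = [
        rmse(reference_by_channel[ch], candidate_by_channel[ch])
        for ch in reference_by_channel
    ]
    return sum(errors) / len(errors)


if __name__ == "__main__":
    # Placeholder samples (assumption, NOT values from the paper).
    reference = {"R": [1.00, 0.80, 0.50], "G": [1.00, 0.82, 0.55], "B": [1.00, 0.78, 0.45]}
    candidate = {"R": [1.00, 0.79, 0.52], "G": [0.99, 0.83, 0.54], "B": [1.00, 0.76, 0.47]}
    print(f"mean RMSE over RGB: {mean_rmse_over_channels(reference, candidate):.4f}")
```

Averaging the per-channel errors is one plausible reading of "averaged across the RGB light spectrum"; pooling all samples before taking the root would give a slightly different number.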

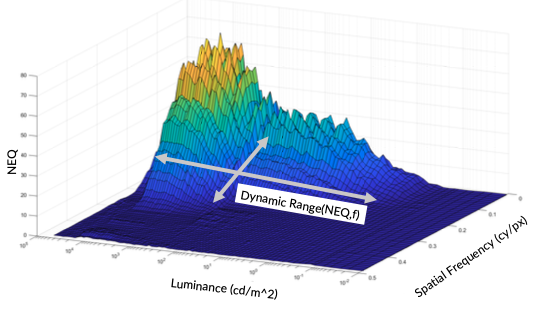

This paper investigates the application of Noise Equivalent Quanta (NEQ) as a comprehensive metric for assessing dynamic range in imaging systems. Building on previous work that demonstrated NEQ’s utility in characterizing noise and resolution trade-offs in imaging systems using the Dead Leaves technique, this study seeks to validate the use of NEQ for dynamic range characterization, especially in high-dynamic-range (HDR) systems where conventional metrics may fall short. That previous work showed that noise and NEQ can be measured on the dead leaves pattern, which is otherwise typically used to measure the loss of low-contrast fine detail, also called texture loss. Here, this capability is used to improve the measurement of dynamic range.

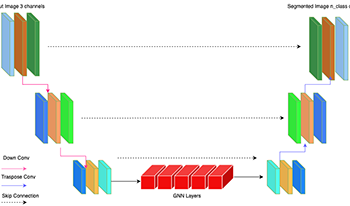

This study explores the potential of graph neural networks (GNNs) to enhance semantic segmentation across diverse image modalities. We evaluate the effectiveness of a novel GNN-based U-Net architecture on three distinct datasets: PascalVOC, a standard benchmark for natural image segmentation; WoodScape, a challenging dataset of fisheye images commonly used in autonomous driving, which introduce significant geometric distortions; and ISIC2016, a dataset of dermoscopic images for skin lesion segmentation. We compare our proposed UNet-GNN model against established segmentation models based on convolutional neural networks (CNNs), including U-Net and U-Net++, as well as the transformer-based SwinUNet. Unlike these methods, which primarily rely on local convolutional operations or global self-attention, GNNs explicitly model relationships between image regions by constructing and operating on a graph representation of the image features. This approach allows the model to capture long-range dependencies and complex spatial relationships, which we hypothesize will be particularly beneficial for handling the geometric distortions present in fisheye imagery and for capturing intricate boundaries in medical images. Our analysis demonstrates the versatility of GNNs in addressing diverse segmentation challenges and highlights their potential to improve segmentation accuracy in various applications, including autonomous driving and medical image analysis. Code is available on GitHub.
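The idea of building a graph over image features and operating on it can be sketched as follows. The abstract does not specify how the graph is constructed or which GNN layer is used, so this example assumes a k-nearest-neighbor graph in feature space (cosine similarity) and a single mean-aggregation step. All function names are hypothetical and the sketch is not the authors' UNet-GNN.

```python
import numpy as np


def feature_map_to_nodes(feature_map):
    """Flatten a (C, H, W) feature map into (H*W, C) node features."""
    c, h, w = feature_map.shape
    return feature_map.reshape(c, h * w).T


def knn_graph(features, k):
    """For each node, return the indices of its k most similar nodes."""
    normed = features / (np.linalg.norm(features, axis=1, keepdims=True) + 1e-8)
    sim = normed @ normed.T              # pairwise cosine similarity
    np.fill_diagonal(sim, -np.inf)       # exclude self-loops
    return np.argsort(-sim, axis=1)[:, :k]


def message_passing_step(features, neighbors):
    """Blend each node's features with the mean of its neighbors' features."""
    aggregated = features[neighbors].mean(axis=1)  # (N, C)
    return 0.5 * (features + aggregated)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fmap = rng.standard_normal((8, 4, 4))          # toy (C, H, W) bottleneck features
    nodes = feature_map_to_nodes(fmap)
    updated = message_passing_step(nodes, knn_graph(nodes, k=3))
    print(updated.T.reshape(fmap.shape).shape)     # back to (C, H, W)
```

Because neighbors are chosen by feature similarity rather than pixel adjacency, a node can exchange information with distant regions in a single step, which is the long-range behavior the abstract attributes to GNNs.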

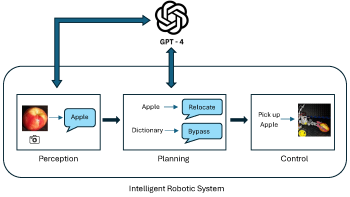

Robotics has traditionally relied on a multitude of sensors and extensive programming to interpret and navigate environments. However, these systems often struggle in dynamic and unpredictable settings. In this work, we explore the integration of large language models (LLMs) such as GPT-4 into robotic navigation systems to enhance decision-making and adaptability in complex environments. Unlike many existing robotics frameworks, our approach uniquely leverages the advanced natural language and image processing capabilities of LLMs to enable robust navigation using only a single camera and an ultrasonic sensor, eliminating the need for multiple specialized sensors and extensive pre-programmed responses. By bridging the gap between perception and planning, this framework introduces a novel approach to robotic navigation. It aims to create more intelligent and flexible robotic systems capable of handling a broader range of tasks and environments, representing a major leap in autonomy and versatility for robotics. Experimental evaluations demonstrate promising improvements in the robot’s effectiveness and efficiency across object recognition, motion planning, obstacle manipulation, and environmental adaptability, highlighting its potential for more advanced applications. Future developments will focus on enabling LLMs to autonomously generate motion profiles and executable code for tasks based on verbal instructions, allowing these actions to be carried out without human intervention. This advancement will further enhance the robot’s ability to perform specific actions independently, improving both its autonomy and operational efficiency.

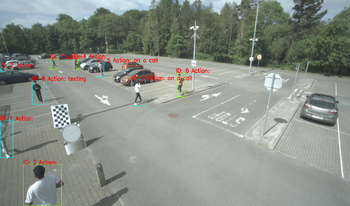

In this paper, we present a database consisting of the annotations of videos showing a number of people performing several actions in a parking lot. The chosen actions represent situations in which the pedestrian could be distracted and not fully aware of her surroundings. These are “looking behind”, “on a call”, and “texting”, with another one labeled as “no action” when none of the previous actions is performed by the person. In addition to the action, the speed of the person is also labeled. There are three possible values for this speed: “standing”, “walking”, and “running”. Bounding boxes of the people present in each frame are also provided, along with a unique identifier for each person. The main goal is to provide the research community with examples of actions that can be of interest for surveillance or safe autonomous driving. The speed of the person performing the action can also be of interest, since “running” can be treated as more dangerous behavior than “walking” when “on a call” or “looking behind”, for example, providing researchers with richer information.

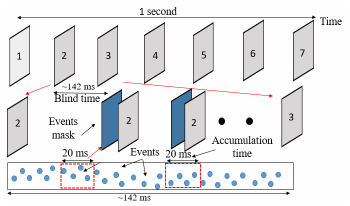

Automotive vision plays a vital role in advanced driver assistance systems (ADAS), enabling key functionalities such as collision avoidance. The effectiveness of models designed for automotive vision is typically measured by their ability to accurately detect objects in a scene. However, an often-overlooked factor in automotive vision is the speed of the detections, which depends on the data collection rate of the sensors. With conventional image sensors (CIS), the object detection rate is limited by the no-information region between two consecutive frames (hereafter referred to as blind time), which affects the response time of drivers and ADAS to external stimuli. While increasing the CIS frame rate decreases the blind time and enables faster decision-making, it comes at the cost of increased data rate and power consumption. In contrast, lower CIS frame rates reduce data rate and power consumption, but result in longer blind intervals between frames, delaying response time, which could be critical in high-risk situations. This trade-off between data rate and decision-making speed can be addressed by utilizing hybrid sensors for automotive vision. Hybrid sensors integrate event pixels alongside CIS pixels. Event pixels provide sparse yet high-temporal-resolution data, continuously capturing changes in scene contrast, which complements the dense, low-temporal-resolution information of CIS. In this work, we demonstrate that 7fps CIS frames combined with EVS data can achieve a ~40% lower data rate than 20fps CIS without compromising object detection performance. Moreover, 7fps CIS combined with EVS maintains almost constant performance within the blind time and thus enables faster detection with low data rate and power.
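The frame-rate trade-off above can be made concrete with a small arithmetic sketch. It rests on two simplifying assumptions that are not stated in the abstract: the blind time is approximated by the full frame period (exposure time is ignored), and the CIS data rate scales linearly with frame rate at a fixed frame size. The function names are hypothetical.

```python
def blind_time_ms(fps):
    """Approximate blind time as the frame period, ignoring exposure time."""
    return 1000.0 / fps


def relative_cis_rate(fps, reference_fps):
    """CIS data rate relative to a reference frame rate (same frame size assumed)."""
    return fps / reference_fps


def implied_event_share(hybrid_saving, cis_fps, reference_fps):
    """Share of the reference data rate left for event data, given the overall saving."""
    return (1.0 - hybrid_saving) - relative_cis_rate(cis_fps, reference_fps)


if __name__ == "__main__":
    print(f"blind time at 20 fps: {blind_time_ms(20):.1f} ms")
    print(f"blind time at  7 fps: {blind_time_ms(7):.1f} ms")
    # The ~40% saving is the figure reported in the abstract; the rest is derived.
    share = implied_event_share(0.40, cis_fps=7, reference_fps=20)
    print(f"implied event-data share of the 20 fps budget: {share:.2f}")
```

Under these assumptions, 7fps frames alone account for 35% of the 20fps data rate, so a ~40% overall saving would leave roughly a quarter of the 20fps budget for event data. This is a back-of-the-envelope figure derived here, not a measurement from the paper.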

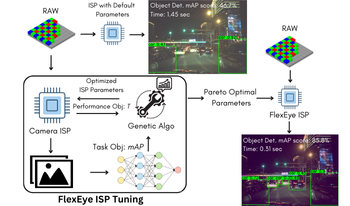

As AI becomes more prevalent, edge devices face challenges due to limited resources and the high demands of deep learning (DL) applications. In such cases, quality scalability can offer significant benefits by adjusting computational load based on available resources. Traditional image signal processor (ISP) tuning methods prioritize maximizing intelligence performance, such as classification accuracy, while neglecting critical system constraints like latency and power dissipation. To address this gap, we introduce FlexEye, an application-specific, quality-scalable ISP tuning framework that leverages ISP parameters as a control knob for quality of service (QoS), enabling a trade-off between quality and performance. Experimental results demonstrate up to a 6% improvement in object detection accuracy and a 22.5% reduction in ISP latency compared to the state of the art. In addition, we evaluate an instance segmentation task, where a 1.2% accuracy improvement is attained with a 73% latency reduction.

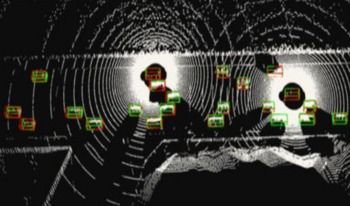

Collaborative perception for autonomous vehicles aims to overcome the limitations of individual perception. Sharing information between multiple agents resolves multiple problems, such as occlusion, sensor range limitations, and blind spots. One of the biggest challenges is to find the right trade-off between perception performance and communication bandwidth. This article proposes a new cooperative perception pipeline based on the Where2comm algorithm, with optimization strategies to reduce the amount of data transmitted between several agents. These strategies involve a data reduction module in the encoder for efficient selection of the most important features, and a new representation of the messages exchanged in a V2X manner, which uses a vector of information and its positions instead of a high-dimensional feature map. Our approach is evaluated on two simulated datasets, OPV2V and V2XSet. The accuracy is increased by around 7% in AP@50 on both datasets, and the communication volume is reduced by 89.77% and 92.19% on V2XSet and OPV2V, respectively.
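The message representation described above, feature vectors plus their positions rather than a dense feature map, can be sketched as follows. The abstract gives no details of the data reduction module, so this example assumes that a per-location confidence score is available and keeps a fixed fraction of the most confident locations. All names are hypothetical, and the volume is counted in array elements rather than bytes.

```python
import numpy as np


def encode_sparse_message(feature_map, confidence, keep_ratio):
    """Keep the most confident locations of a (C, H, W) map as (positions, vectors)."""
    _, h, w = feature_map.shape
    k = max(1, int(round(keep_ratio * h * w)))
    flat_idx = np.argsort(confidence.ravel())[::-1][:k]   # top-k by confidence
    rows, cols = np.unravel_index(flat_idx, (h, w))
    positions = np.stack([rows, cols], axis=1)            # (k, 2)
    vectors = feature_map[:, rows, cols].T                 # (k, C)
    return positions, vectors


def decode_sparse_message(positions, vectors, shape):
    """Scatter received vectors back into a zero-filled (C, H, W) map."""
    dense = np.zeros(shape, dtype=vectors.dtype)
    dense[:, positions[:, 0], positions[:, 1]] = vectors.T
    return dense


def volume_reduction(shape, positions, vectors):
    """Fraction of elements saved relative to sending the dense map."""
    return 1.0 - (positions.size + vectors.size) / float(np.prod(shape))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fmap = rng.standard_normal((64, 32, 32)).astype(np.float32)
    conf = rng.random((32, 32))
    pos, vec = encode_sparse_message(fmap, conf, keep_ratio=0.05)
    print(f"reduction: {volume_reduction(fmap.shape, pos, vec):.2%}")
```

The receiving agent can rebuild a dense map with `decode_sparse_message` before fusion. Locations that were not transmitted stay at zero, which is the price paid for the smaller message.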

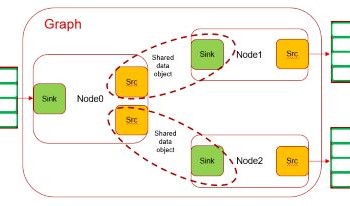

OpenVX is an open standard for accelerating computer vision applications on heterogeneous platforms with multiple processing elements. It is accepted by the automotive industry as a go-to framework for developing performance-critical, power-optimized, and safety-compliant computer vision pipelines on real-time heterogeneous embedded SoCs. Optimizing the OpenVX development flow has become a necessity given the ever-growing demand for a variety of vision applications in both the automotive and industrial markets. Although OpenVX works well when all the elements in the pipeline are implemented with OpenVX, it lacks utilities to interact effectively with other frameworks. We propose a software design that makes OpenVX development faster by adding a thin layer on top of OpenVX, which simplifies the construction of an OpenVX pipeline and exposes a simple interface to enable seamless interaction with other frameworks such as v4l2, OpenMax, DRM, etc.

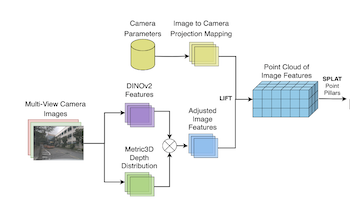

Bird's-Eye-View (BEV) perception models require extensive data to perform and generalize effectively. While traditional datasets often provide abundant driving scenes from diverse locations, this is not always the case, so it is crucial to maximize the utility of the available training data. With the advent of large foundation models such as DINOv2 and Metric3Dv2, a pertinent question arises: can these models be integrated into existing model architectures to not only reduce the required training data but also surpass the performance of current models? We choose two model architectures in the vehicle segmentation domain to alter: Lift-Splat-Shoot and Simple-BEV. For Lift-Splat-Shoot, we explore the use of a frozen DINOv2 for feature extraction and Metric3Dv2 for depth estimation, exceeding the baseline results by 7.4 IoU while utilizing only half the training data and iterations. Furthermore, we introduce an innovative application of Metric3Dv2’s depth information as a PseudoLiDAR point cloud incorporated into the Simple-BEV architecture, replacing traditional LiDAR. This integration results in a +3 IoU improvement compared to the camera-only model.
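Turning a predicted depth map into a PseudoLiDAR point cloud amounts to unprojecting each pixel with the camera intrinsics. The sketch below assumes an ideal pinhole camera and returns points in the camera frame. It omits the camera-to-ego transform and any multi-camera merging, and it is not the authors' implementation. The function name and the intrinsic values in the usage example are placeholders.

```python
import numpy as np


def depth_to_point_cloud(depth, fx, fy, cx, cy, max_depth=None):
    """Unproject an (H, W) metric depth map into an (N, 3) camera-frame point cloud."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))   # pixel columns (u) and rows (v)
    z = depth
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    points = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    valid = points[:, 2] > 0                         # drop pixels without depth
    if max_depth is not None:
        valid &= points[:, 2] <= max_depth           # optionally drop far points
    return points[valid]


if __name__ == "__main__":
    # Placeholder depth map and intrinsics (assumptions for illustration only).
    depth = np.full((4, 6), 10.0)
    cloud = depth_to_point_cloud(depth, fx=500.0, fy=500.0, cx=3.0, cy=2.0, max_depth=50.0)
    print(cloud.shape)
```

The resulting array has the same (x, y, z) layout as a LiDAR sweep, so in principle it can be fed to the point-cloud branch of a camera-plus-LiDAR BEV model once it has been transformed into the vehicle frame.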