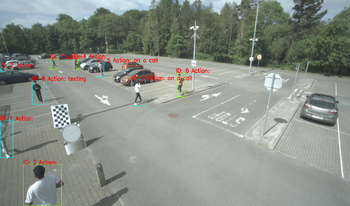

In this paper, we present a database of annotated videos showing a number of people performing several actions in a parking lot. The chosen actions represent situations in which a pedestrian could be distracted and not fully aware of their surroundings: “looking behind”, “on a call”, and “texting”, plus a “no action” label for when the person performs none of these. In addition to the action, the speed of the person is labeled with one of three values: “standing”, “walking”, or “running”. Bounding boxes for the people present in each frame are also provided, along with a unique identifier for each person. The main goal is to provide the research community with examples of actions that can be of interest for surveillance or safe autonomous driving. Labeling the speed at which an action is performed gives researchers richer information, since, for example, “running” while “on a call” or “looking behind” can be treated as more dangerous behavior than “walking”.
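To make the annotation scheme concrete, the following is a minimal sketch of how a single per-frame, per-person record could be represented. Only the label values (“looking behind”, “on a call”, “texting”, “no action”, “standing”, “walking”, “running”) come from the description above. The class and field names, the `(x, y, width, height)` bounding-box layout, and the helper `is_distracted_and_moving` are assumptions made for illustration and do not reflect the actual file format of the database.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    """Action labels listed in the abstract."""
    LOOKING_BEHIND = "looking behind"
    ON_A_CALL = "on a call"
    TEXTING = "texting"
    NO_ACTION = "no action"


class Speed(Enum):
    """Speed labels listed in the abstract."""
    STANDING = "standing"
    WALKING = "walking"
    RUNNING = "running"


@dataclass(frozen=True)
class PedestrianAnnotation:
    """One person in one frame (hypothetical layout, not the real schema)."""
    frame: int                                # frame index within the video
    person_id: int                            # unique identifier of the person
    bbox: tuple[float, float, float, float]   # assumed (x, y, width, height)
    action: Action
    speed: Speed


def is_distracted_and_moving(ann: PedestrianAnnotation) -> bool:
    """Hypothetical filter: a distracting action performed while not standing."""
    return ann.action is not Action.NO_ACTION and ann.speed is not Speed.STANDING


# Example record: a person running while on a call.
example = PedestrianAnnotation(
    frame=0,
    person_id=1,
    bbox=(10.0, 20.0, 50.0, 120.0),
    action=Action.ON_A_CALL,
    speed=Speed.RUNNING,
)
```

Keeping action and speed as separate fields mirrors the way the abstract describes them as independent labels, so combinations such as “texting” while “running” can be queried directly.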

Itsaso Rodríguez-Moreno, Brian Deegan, Dara Molloy, José María Martínez-Otzeta, Martin Glavin, Edward Jones, Basilio Sierra, "An Annotated Database for Pedestrian Temporal Action Recognition" in Electronic Imaging, 2025, pp 106-1 - 106-7, https://doi.org/10.2352/EI.2025.37.15.AVM-106
