This study investigates how swapping realistic avatars between users in a shared VR space affects self-body ownership and perceptions of others. In the experiment, two participants shared the same VR space. Two conditions were presented in random order: one in which participants used their own realistic avatar (matched condition) and one in which they swapped avatars and used the other participant's realistic avatar (swapped condition). During the task, participants performed specific physical movements while alternately observing their own body and the other's. After completing the experimental tasks, participants answered 16 questions on a 7-point Likert scale covering immersion in the VR environment, self-body perception, and perception of others. Ratings for presence, body ownership, and body awareness were significantly higher in the matched condition. Conversely, seeing another person use one's own realistic avatar increased distrust and negatively affected communication. Several participants also commented that they felt more balanced using their own realistic avatar. This suggests that avatar appearance, particularly differences in visual body proportions, may influence somatic perception and the sense of agency.

In 2024, we brought London closer to the ocean: nearly 700 design students at the Royal College of Art took part in the Grand Challenge 2023/24. Working in teams of five, the students tackled challenges around London and the Ocean from a design perspective. The challenge drew on multiple methodologies, including design engineering, speculative design, service design, and materials- and fashion-related approaches. Each team had one month to develop a compelling proposal. Fifty of the participating students joined the Extended Reality (XR) Stream, in which ten teams of five developed different design solutions using Unreal Engine 5. This paper describes how Unreal Engine 5 was introduced to students through lectures and hands-on sessions, how XR technologies were used to creatively interpret the original Grand Challenge brief, and how this inspired our students to develop unique design propositions. In particular, we discuss two case studies in detail: XRiver and SuDScape. Both projects were among the top 13 teams exhibited at the final Grand Challenge show, and they offer insights and recommendations for incorporating XR into design education.

Extended Reality (XR) technologies are revolutionising our interactions with digital content, transforming how we perceive reality, and enhancing our problem-solving capabilities. However, many XR applications remain technology-driven, often disregarding the broader context of their use and failing to address fundamental human needs. In this paper, we present a teaching-led design project that asks postgraduate design students to explore the future of XR through low-fidelity, screen-free prototypes focused on human needs observed at six specific locations in central London, UK. By using the city and the built environment as lenses for exploring everyday scenarios, the project encourages design provocations rooted in real-world challenges. Through this exploration, we aim to inspire new perspectives on future states of XR, advocating for human-centred, inclusive, and accessible solutions. By bridging the gap between technological innovation and lived experience, the project outlines a pathway toward XR technologies that prioritise societal benefit and address real human needs.

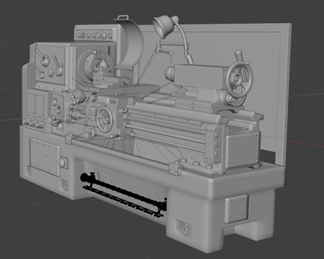

In university machine-shop courses, limited equipment and space create bottlenecks in student throughput. Viewed through a Value Engineering (VE) lens, Virtual Reality (VR) offers a scalable, customizable, and cost-effective means of easing these constraints. We propose an experimental method to demonstrate how VR can increase the output of the value function of an educational system. The method seeks a high Transfer Effectiveness Ratio (TER), so that VR supplements traditional instruction sufficiently to allow further growth in classroom enrollment.
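The abstract does not state how TER is computed. Below is a minimal sketch, assuming the conventional transfer-of-training definition: TER = (control time - VR-group time) / VR time, where each time is the hands-on shop time needed to reach the same performance criterion. The function name and the example hours are hypothetical, not data from the proposed experiment.

```python
def transfer_effectiveness_ratio(control_time: float, transfer_time: float, vr_time: float) -> float:
    """Conventional TER = (control_time - transfer_time) / vr_time.

    control_time:  shop time a control group (no VR) needs to reach criterion.
    transfer_time: shop time a VR-trained group needs to reach the same criterion.
    vr_time:       time the VR-trained group spent in VR beforehand.
    """
    if vr_time <= 0:
        raise ValueError("vr_time must be positive")
    return (control_time - transfer_time) / vr_time


# Hypothetical hours, not study results: the control group needs 10 h of
# machine time; after 4 h in VR, the VR group needs only 7 h.
ter = transfer_effectiveness_ratio(control_time=10.0, transfer_time=7.0, vr_time=4.0)
print(f"TER = {ter:.2f}")  # TER = 0.75
```

A TER above zero means VR time displaces some machine-shop time. A TER below 1 means an hour in VR saves less than an hour in the shop, which may still pay off in VE terms if VR hours are cheaper and are not limited by equipment or floor space.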

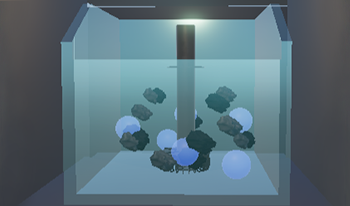

Virtual reality (VR) has become an increasingly popular tool in education and is often compared with traditional teaching methods for its potential to improve learning experiences. However, VR itself spans a wide range of experiences and levels of immersion, from minimally interactive environments to fully interactive, immersive systems. This study explores how different levels of immersion and interaction within VR affect learning outcomes. The project, titled Eureka, teaches the froth flotation process used in mining by comparing two VR modalities: a low-interaction environment that presents information through text and visuals without user engagement, and an immersive, high-interaction environment in which users actively engage with the content. The purpose of this research is to investigate how these degrees of immersion affect user performance, engagement, and learning outcomes. A user study with 12 participants showed that the high-interaction modality significantly improved task efficiency: participants completed tasks faster than with the low-interaction modality. Both modalities were similarly effective in conveying knowledge, as shown by comparable assessment scores. Qualitative feedback, however, highlighted design considerations such as diverse user preferences for navigation and instructional methods. These findings suggest that, while interactive immersion can improve efficiency, effective VR educational tools must accommodate diverse learning styles and needs. Future work will focus on increasing participant diversity and refining VR design features.

Virtual Reality technologies are on the rise! Commercial head-mounted displays have made VR applications affordable and available to a wide range of users. Still, VR companies are far from satisfied with the market penetration of their devices and related software. VR companies and VR enthusiasts are waiting for VR to become omnipresent! But what if everything is different? What if VR has already taken over our world, and we are simply not aware of it? In this essay, I discuss the Trojan Horses of Virtual Reality: the technologies and approaches that began taking over our lives years ago without our acknowledging it. I argue that Virtual Reality is already part of our daily life and that the still-pending takeover by VR technologies will merely be the capstone on top of the pyramid.

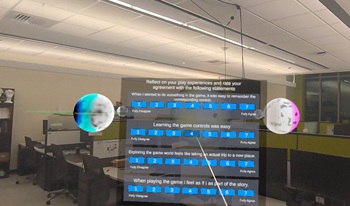

Virtual Reality (VR) technology has grown remarkably and is steadily establishing itself in mainstream consumer markets. This rapid expansion opens exciting opportunities for innovation across fields, from entertainment to education and beyond. However, it also underscores a pressing need for more comprehensive research into user interfaces and human-computer interaction in VR environments. Understanding how users engage with VR systems, and how their experiences can be optimized, is crucial for advancing the field and unlocking its full potential. This project introduces ScryVR, an infrastructure designed to simplify and accelerate the development, implementation, and management of user studies in VR. By providing researchers with a robust framework for conducting studies, ScryVR aims to reduce the technical barriers often associated with VR research, such as complex data collection, hardware compatibility, and system integration. Its goal is to let researchers focus on study design and analysis rather than on troubleshooting technical issues. By addressing these challenges, ScryVR could become a pivotal tool for advancing VR research methodologies. With continued refinement, it should enable researchers to conduct more reliable and scalable studies, leading to deeper insights into user behavior and interaction in virtual environments and, in turn, to more immersive, intuitive, and impactful VR experiences.

Emergency response and active shooter training drills and exercises are necessary because we cannot predict when such emergencies will occur. There has been progress in understanding human behavior, unpredictability, human motion synthesis, crowd dynamics, and their relationships with active shooter events, but challenges remain. With continuing advances in technology, virtual reality (VR) based training can recreate real-life experiences with a "sense of presence" in the environment, making it a viable alternative to traditional training. This paper presents a collaborative virtual reality environment (CVE) module for conducting active shooter training drills in both immersive and non-immersive settings. The collaborative immersive environment is implemented in Unity 3D and is based on the run, hide, and fight modes of emergency response. We model user behavior in two ways. First, we define rules for AI agents, or NPCs (non-player characters). Second, we provide controls for user-controlled agents, or PCs (player characters), which navigate the VR environment as autonomous agents using a keyboard/joystick or an immersive VR headset. Users can enter the CVE as user-controlled agents and respond to emergencies such as active shooter events, bomb blasts, fire, and smoke. We conducted a user study to evaluate the effectiveness of our CVE module for active shooter response training and decision-making using the Group Environment Questionnaire (GEQ), Presence Questionnaire (PQ), System Usability Scale (SUS), and Technology Acceptance Model (TAM) questionnaire. The results show that most users agreed that their sense of presence, intrinsic motivation, and self-efficacy increased when using the immersive emergency response training module for an active shooter evacuation environment.
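Of the instruments listed, the SUS has a fixed, widely used scoring rule: odd-numbered (positively worded) items contribute the rating minus 1, even-numbered (negatively worded) items contribute 5 minus the rating, and the sum is multiplied by 2.5 to give a score from 0 to 100. The sketch below applies that rule to one participant's responses; the function name and the example ratings are illustrative and are not taken from the study.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Score one participant's System Usability Scale questionnaire.

    responses: the ten item ratings in questionnaire order, each from 1 to 5.
    Returns a score from 0 to 100.
    """
    if len(responses) != 10:
        raise ValueError("SUS has exactly 10 items")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("SUS items are rated from 1 to 5")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (rating - 1) if item_number % 2 == 1 else (5 - rating)
    return total * 2.5


# Illustrative ratings only, not study data.
print(sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2]))  # 75.0
```

Per-participant scores computed this way can then be averaged across the sample; the other questionnaires (GEQ, PQ, TAM) use their own scoring schemes and are not covered by this sketch.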

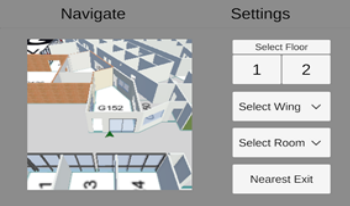

During emergencies, accurate and timely dissemination of evacuation information plays a critical role in saving lives and minimizing damage. As technology advances, there is a growing need to explore innovative approaches that improve indoor emergency evacuation and navigation and support efficient decision-making. This paper presents a mobile augmented reality application (MARA) for real-time indoor emergency evacuation and navigation in buildings. The user's location is determined with the device camera and mapped to a position within a digital twin of the building. AI-generated navigation meshes and AR Foundation image-tracking technology are used for localization. By visualizing integrated geographic information systems (GIS) data and analyzing data in real time, the proposed MARA provides the person's current location, the number of exits, and personalized evacuation routes. The MARA can also support spatial analysis, situational awareness, and visual communication. In emergencies such as fires, smoke makes visibility poor inside the building; the proposed MARA provides information to support effective decision-making for both building occupants and emergency responders.
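The abstract does not detail how personalized routes are computed. As a simplified sketch, assume the building is reduced to a hypothetical waypoint graph with walking distances (rather than the navigation meshes the MARA actually uses). Dijkstra's algorithm from the user's position then returns the closest reachable exit, and waypoints reported as blocked by smoke or fire can be excluded to personalize the route. All node names and distances are invented for illustration.

```python
import heapq
from typing import AbstractSet, Dict, List, Optional, Tuple

# Hypothetical waypoint graph: node -> {neighbour: walking distance in metres}.
Graph = Dict[str, Dict[str, float]]


def nearest_exit_route(
    graph: Graph,
    start: str,
    exits: AbstractSet[str],
    blocked: AbstractSet[str] = frozenset(),
) -> Optional[Tuple[str, float, List[str]]]:
    """Dijkstra from the user's position; the first exit popped is the closest.

    blocked: waypoints to avoid (e.g. reported smoke or fire).
    Returns (exit, distance, path) or None if no exit is reachable.
    """
    dist = {start: 0.0}
    prev: Dict[str, str] = {}
    queue = [(0.0, start)]
    visited = set()
    while queue:
        d, node = heapq.heappop(queue)
        if node in visited:
            continue  # stale queue entry
        visited.add(node)
        if node in exits:
            # Walk predecessors back to the start to rebuild the route.
            path = [node]
            while path[-1] != start:
                path.append(prev[path[-1]])
            return node, d, path[::-1]
        for neighbour, length in graph.get(node, {}).items():
            if neighbour in blocked:
                continue
            nd = d + length
            if nd < dist.get(neighbour, float("inf")):
                dist[neighbour] = nd
                prev[neighbour] = node
                heapq.heappush(queue, (nd, neighbour))
    return None


building: Graph = {
    "room_101": {"corridor_a": 5.0},
    "corridor_a": {"room_101": 5.0, "corridor_b": 8.0, "stair_east": 20.0},
    "corridor_b": {"corridor_a": 8.0, "exit_west": 15.0},
    "stair_east": {"corridor_a": 20.0, "exit_east": 4.0},
    "exit_west": {},
    "exit_east": {},
}
exits = {"exit_west", "exit_east"}
print(nearest_exit_route(building, "room_101", exits))
# ('exit_west', 28.0, ['room_101', 'corridor_a', 'corridor_b', 'exit_west'])
print(nearest_exit_route(building, "room_101", exits, blocked={"corridor_b"}))
# ('exit_east', 29.0, ['room_101', 'corridor_a', 'stair_east', 'exit_east'])
```

Stopping at the first exit popped from the priority queue is what makes this a nearest-exit search; re-running it whenever new blocked waypoints are reported would update the route as conditions change.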

Mixed Reality (MR) opens up many possibilities for interacting with virtual environments in which training for critical, life-saving tasks could be provided. Neonatal resuscitation is one such task that would benefit from a safe and immersive environment for practice. In this paper, we introduce an MR-based simulation that lets healthcare practitioners practice Positive Pressure Ventilation (PPV) in an immersive, risk-free, and interactive way using the hand-tracking capabilities built into the MR head-mounted display. This research works toward a standard addition to the neonatal healthcare system in which MR could facilitate refresher sessions for the neonatal resuscitation procedure and eventually allow practitioners to assess their performance during the simulation. The simulation is based on a Virtual Reality (VR) Neonatal Resuscitation Program (NRP) simulation and is designed to make use of MR elements and features. We propose that this simulation be used for refresher training between regularly scheduled in-person training sessions.
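As one hypothetical example of the in-simulation performance assessment the abstract anticipates, the sketch below estimates the ventilation rate from timestamps of bag squeezes, assumed here to be detected via hand tracking, and compares it with the 40 to 60 breaths per minute range given in NRP guidance for PPV. The squeeze-detection step, the function names, and the constants are assumptions for illustration, not part of the described simulation.

```python
from statistics import mean
from typing import Sequence

# Assumed target range, taken from NRP guidance of 40-60 breaths per minute for PPV.
TARGET_MIN_BPM = 40.0
TARGET_MAX_BPM = 60.0


def ventilation_rate_bpm(squeeze_times_s: Sequence[float]) -> float:
    """Average breaths per minute from timestamps (seconds) of detected bag squeezes."""
    if len(squeeze_times_s) < 2:
        raise ValueError("need at least two squeezes to estimate a rate")
    intervals = [b - a for a, b in zip(squeeze_times_s, squeeze_times_s[1:])]
    if any(i <= 0 for i in intervals):
        raise ValueError("timestamps must be strictly increasing")
    return 60.0 / mean(intervals)


def rate_feedback(squeeze_times_s: Sequence[float]) -> str:
    """Classify the estimated rate against the assumed target range."""
    rate = ventilation_rate_bpm(squeeze_times_s)
    if rate < TARGET_MIN_BPM:
        return "too slow"
    if rate > TARGET_MAX_BPM:
        return "too fast"
    return "within target"


# Hypothetical squeeze timestamps: one every 1.25 s, i.e. 48 breaths per minute.
print(rate_feedback([0.0, 1.25, 2.5, 3.75]))  # within target
```

A real trainer would likely assess more than rate (for example, mask seal or chest rise), but rate is a simple, checkable metric that hand-tracking timestamps alone could support.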