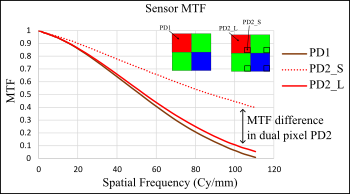

In recent years, high dynamic range (HDR) image sensors have become increasingly important for automotive applications, particularly advanced driver-assistance systems (ADAS). The traditional single photodiode pixel and the split photodiode pixel are the two technologies most commonly used in the industry to build HDR image sensors. This study used a monochrome CMOS image sensor to model single and split photodiode pixel images and examine their image quality. Slanted edge images were used to calculate the spatial frequency response (SFR), and the results showed a significant difference in modulation transfer function (MTF) for the split photodiode pixel. As expected, the MTF of the large photodiode in the split photodiode pixel at half Nyquist was lower than the MTF of the small photodiode. However, the sampling area and the spatial separation of the small photodiode in the split pixel caused edge artifacts in the image. The traditional single photodiode pixel does not experience a change in MTF with light level, and edge artifacts were not observed.
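
To make the MTF comparison above more concrete, here is a minimal sketch of the last steps of an edge-based MTF estimate: differentiate an edge spread function (ESF) into a line spread function (LSF), then take the normalized magnitude of its discrete Fourier transform. This is not the study's pipeline or a full slanted-edge SFR procedure (there is no edge-angle estimation, oversampled projection, or windowing), and the function name `mtf_from_esf` and the sample data are hypothetical.

```python
import cmath


def mtf_from_esf(esf):
    """Return MTF samples from DC to Nyquist for a 1-D edge spread function.

    Bin k of the result corresponds to k / n cycles per sample, where n is
    the number of LSF samples (len(esf) - 1). Illustrative sketch only.
    """
    # The line spread function is the first difference of the edge profile.
    lsf = [b - a for a, b in zip(esf, esf[1:])]
    n = len(lsf)
    # Plain DFT magnitude for the non-negative frequencies.
    spectrum = [
        abs(sum(v * cmath.exp(-2j * cmath.pi * k * i / n)
                for i, v in enumerate(lsf)))
        for k in range(n // 2 + 1)
    ]
    if spectrum[0] == 0:
        raise ValueError("ESF has no net edge step, so the MTF is undefined")
    # Normalize so that the MTF equals 1 at zero frequency.
    return [s / spectrum[0] for s in spectrum]


# Hypothetical edge blurred over two samples (a larger sampling aperture).
blurred_edge = [0, 0, 0, 0, 0.5, 1, 1, 1, 1]
mtf = mtf_from_esf(blurred_edge)
half_nyquist = mtf[2]  # bin 2 of 8 LSF samples = 0.25 cycles per sample
```

For this toy edge the value at half Nyquist is about 0.707, while an ideal one-sample step gives 1.0 at every frequency, which illustrates why a larger sampling aperture lowers the MTF.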

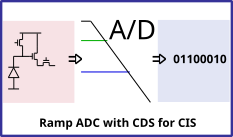

This article presents a novel architecture of a single slope single ramp column analogue-to-digital converter (ADC) for CMOS image sensors (CIS). The outlined ADC uses a global single slope ramp (SSR) with two comparators and two counters per column, one for the pixel reset level and one for the pixel signal level. A common reference level is used to start the conversion, while the reset and signal levels are used to terminate the respective conversions. With this architecture, the pixel reset and signal levels are converted within a single conversion cycle. When the counter results are ready, a digital correlated double sampling (CDS) output is obtained by subtracting the reset counter value from the signal counter value with a digital subtractor. This architecture provides speed, offset compensation, and input swing adjustment through the reference level. Furthermore, the implementation is less vulnerable to temporal row noise, which makes it suitable for low-noise applications. A 12-bit column ADC was designed and implemented in a 1920 × 1080 resolution CIS demonstrator, using a 0.18 µm CIS process. With 200 MHz counter operation, the readout achieves 25 frames per second.
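
The two-counter conversion described above can be illustrated with a small behavioural model. This is a sketch under stated assumptions, not the authors' circuit: the ramp is assumed to fall from the reference level, voltages are integers in microvolts, and the function name `ssr_dual_counter_cds` and all numeric values are hypothetical.

```python
def ssr_dual_counter_cds(ref_uv, reset_uv, signal_uv, step_uv, n_bits=12):
    """Behavioural model of one column conversion with a shared ramp.

    Both counters start when the ramp leaves the common reference level.
    Each counter stops when the (assumed falling) ramp reaches its level.
    Returns (reset_count, signal_count, cds_output).
    """
    full_scale = (1 << n_bits) - 1
    reset_count = None
    signal_count = None
    ramp_uv = ref_uv
    for count in range(full_scale + 1):
        # Comparator 1 latches the reset counter.
        if reset_count is None and ramp_uv <= reset_uv:
            reset_count = count
        # Comparator 2 latches the signal counter.
        if signal_count is None and ramp_uv <= signal_uv:
            signal_count = count
        if reset_count is not None and signal_count is not None:
            break
        ramp_uv -= step_uv  # one ramp step per counter clock
    # A level the ramp never reaches saturates its counter.
    if reset_count is None:
        reset_count = full_scale
    if signal_count is None:
        signal_count = full_scale
    # Digital CDS: signal count minus reset count.
    return reset_count, signal_count, signal_count - reset_count


# Hypothetical pixel: 0.5 V of signal swing below the reset level.
nominal = ssr_dual_counter_cds(2_000_000, 1_900_000, 1_400_000, 250)
# Same pixel with a 20 mV offset common to reset and signal.
shifted = ssr_dual_counter_cds(2_000_000, 1_880_000, 1_380_000, 250)
```

Here `nominal` is `(400, 2400, 2000)` and `shifted` is `(480, 2480, 2000)`: the two counts move together, so the subtraction cancels the common offset, and both levels are digitized during one ramp.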

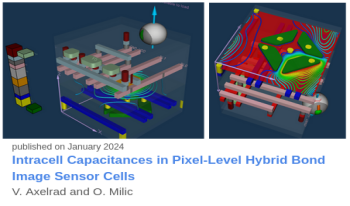

Accurate coupling capacitances are a key part of the design of modern image sensor cells because of their high-speed requirements, large number of active devices and interconnects, and complex inter-layer dielectric structure. Automated 3D structure creation integrated with the design flow, and the speed and robustness of capacitance calculation, are crucial for a seamless design and optimization flow. The periodicity of image sensor arrays calls for periodic boundary conditions. High structural complexity (many layout elements, many metal interconnect levels, and many dielectric layers) demands efficient numerics for reasonable runtimes. We apply a new integrated software package, CellCap3D, to the calculation of image sensor cell capacitances, with the specific example application of comparing a Pixel-Level Hybrid Bonding (PLHB) cell to its conventional counterpart, in which hybrid bonds are used only along the periphery of the die. PLHB is a recently proposed approach that introduces an electrical contact between the light-sensing (bottom) die and the upper die for every pixel. A hybrid bond, in essence a small-pitch, small-size Cu-Cu contact, allows extra MOS devices and capacitors to be added to the individual pixel, greatly expanding imager functionality and performance. An additional benefit is simplified manufacturing of the light-sensing die: a reduction in the number of manufacturing steps also reduces the chance of introducing defects. The architecture of the simulation system, some key components, and numerical aspects are discussed in this work. The software produces a complete capacitance matrix for a typical image sensor cell with 5-7 interconnect layers in minutes on a standard Linux machine. Lithographic distortions of layout patterns, as demonstrated in the example in this work, can optionally be included for more physically accurate capacitances. Misalignment of mask layers or bonded wafers relative to each other can be considered for its effects on coupling capacitances. Periodic boundary conditions can be used for periodically repeated image sensor cells, avoiding the need to simulate an array of cells to provide the correct electrostatic environment for one cell.

Recently, many deep learning applications have been deployed on mobile platforms. To run on such platforms, the networks must be quantized. The quantization of computer vision networks has been studied well, but there have been few studies on the quantization of image restoration networks. In a previous study, we examined the effect of quantizing the activations and weights of a deep learning network on image quality, following earlier work on weight quantization. In this paper, we introduce adaptive bit-depth control of the input patch, which achieves a greater reduction in quantization bits than previous work while maintaining image quality similar to that of the floating-point network. The bit depth is adapted to the maximum pixel value of the input data block. This preserves the linearity of the values within the block, so the deep neural network does not need to be retrained for a change in data distribution. With the proposed method, we achieved a 5 percent reduction in hardware area and power consumption for our custom deep network hardware while maintaining image quality in subjective and objective measurements. This is an important achievement for mobile platform hardware.
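
One plausible reading of bit-depth control driven by the block maximum is a per-block shift, sketched below. The abstract does not give the actual rule, so this is an assumption: the function name `adapt_block_bit_depth`, the bit budget, and the pixel values are all hypothetical.

```python
def adapt_block_bit_depth(block, budget_bits):
    """Fit a block of non-negative integer pixels into budget_bits bits.

    The shift is chosen from the block maximum only, so every value in the
    block is scaled by the same power of two and the block stays linear
    (up to truncation). Returns (scaled_block, shift).
    """
    peak = max(block)
    # Number of bits needed to represent the largest value in the block.
    needed_bits = peak.bit_length()
    # Drop only as many low-order bits as the budget requires.
    shift = max(0, needed_bits - budget_bits)
    return [value >> shift for value in block], shift


# Hypothetical 10-bit input blocks and a 6-bit budget.
bright, bright_shift = adapt_block_bit_depth([900, 512, 1023, 4], 6)
dark, dark_shift = adapt_block_bit_depth([3, 5, 12, 9], 6)
```

In this sketch the bright block is shifted by 4 bits, while the dark block already fits and is passed through unchanged, so dark regions keep their full precision.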

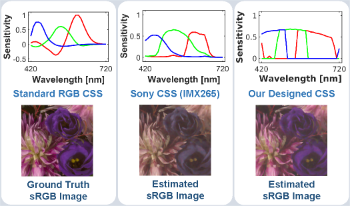

Camera spectral sensitivity (CSS) establishes the connection between scene radiance and device-captured RGB tristimulus values. The spectral sensitivity of most color imaging devices deviates from that of human vision or a standard color space, and noise is often introduced during photoelectric signal conversion and transmission. The design of an efficient CSS with noise robustness and high color fidelity is therefore of paramount importance. In this paper, we propose a CSS optimization method with noise consideration that designs a theoretically optimal CSS for each noise level. Additionally, taking practical considerations into account, we extend the proposed method to obtain a universally optimal CSS adaptable to diverse noise levels. Experimental results show that our optimized CSS is more robust to noise and has better imaging performance than existing optimization methods based on a fixed CSS. The source code is available at https://github.com/xyu12/Joint-Design-of-CSS-and-CCM-with-Noise-Consideration-EI2024.
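
The link between scene radiance and RGB values mentioned above is commonly written as a wavelength integral of sensitivity times radiance. The sketch below discretizes that integral as a sum over uniformly spaced samples. It is a generic, noise-free illustration, not code from the linked repository, and the function name `camera_response` and the toy spectra are hypothetical.

```python
def camera_response(css, radiance, d_lambda_nm):
    """Noise-free RGB response for one pixel.

    css: three lists (R, G, B), each a sensitivity curve sampled at the
    same wavelengths as `radiance`, spaced d_lambda_nm apart.
    Approximates the integral of sensitivity * radiance over wavelength.
    """
    for channel in css:
        if len(channel) != len(radiance):
            raise ValueError("sensitivity and radiance must share a wavelength grid")
    return [
        sum(s * r for s, r in zip(channel, radiance)) * d_lambda_nm
        for channel in css
    ]


# Toy three-sample spectra: each channel sees exactly one wavelength band.
toy_css = [
    [0.0, 0.0, 1.0],  # R responds to the longest wavelength sample
    [0.0, 1.0, 0.0],  # G responds to the middle sample
    [1.0, 0.0, 0.0],  # B responds to the shortest wavelength sample
]
rgb = camera_response(toy_css, [2.0, 3.0, 5.0], 10.0)
```

With these toy inputs `rgb` is `[50.0, 30.0, 20.0]`. Sensor noise would enter as a perturbation added to these values.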

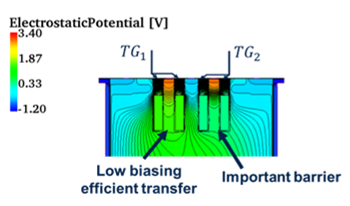

The integration of trench vertical transfer gates in an indirect time-of-flight pixel has been studied through TCAD and optical simulations. A small, fast photo-gate pixel surpassing state-of-the-art performance has been designed and optimized with these advanced multiphysics simulations. A quantum efficiency of 40% and a demodulation contrast of 89% at 200 MHz are achieved while the transfer gates operate at 1.0 V biasing.

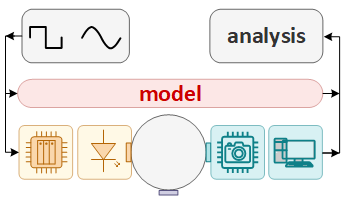

This paper proposes a pixel-wise parameter estimation framework for Event-based Vision Sensor (EVS) characterization. Using an ordinary differential equation (ODE) based pixel latency model and an autoregressive Monte-Carlo noise model, we first identify the representative parameters of the EVS. The parameter estimation is then formulated as an optimization problem that minimizes the measurement-prediction error for both pixel latency and event firing probability. Finally, the effectiveness and accuracy of the proposed framework are verified by comparing synthetic and measured event response latency, as well as firing probability as a function of temporal contrast (so-called S-curves).

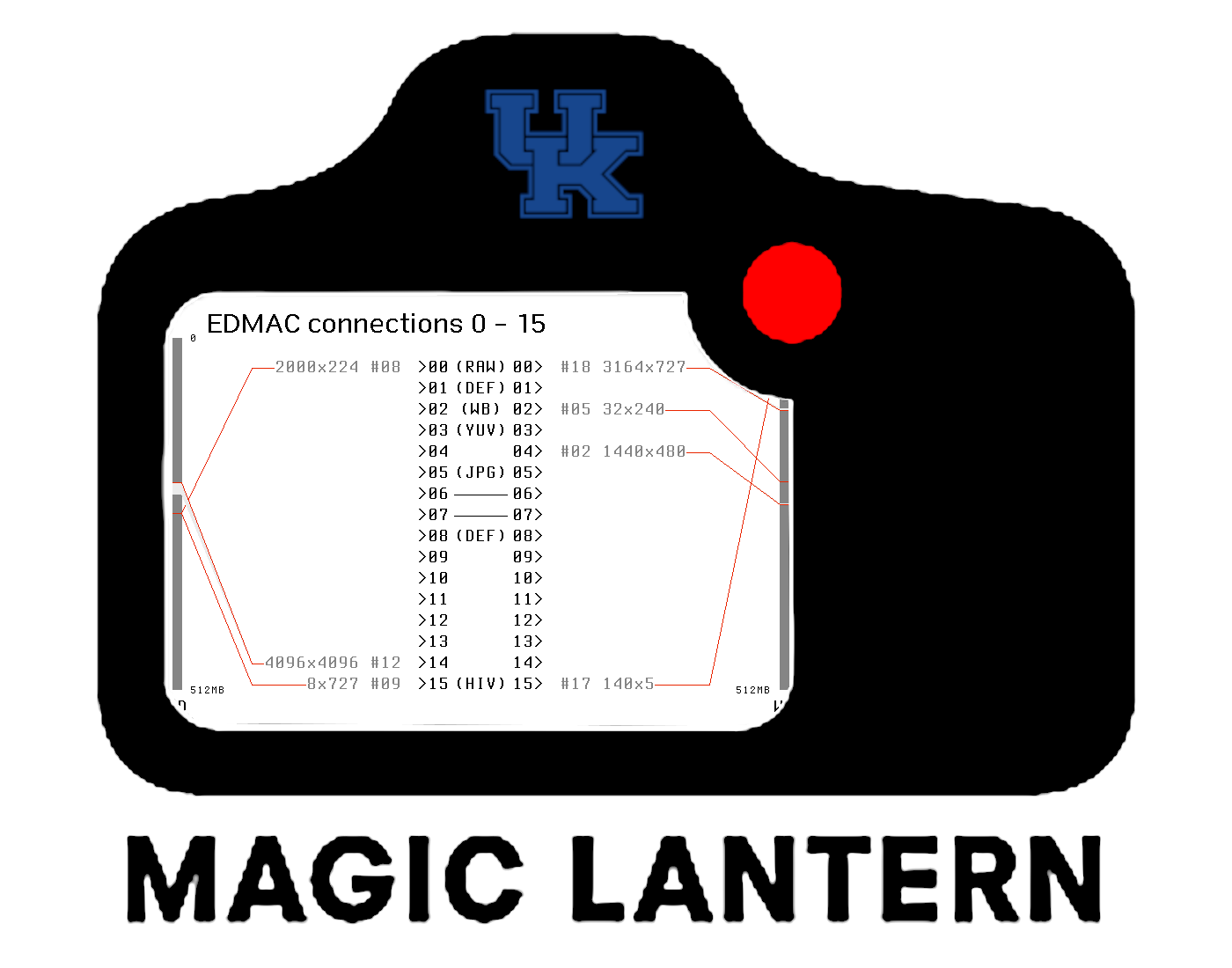

The Magic Lantern project describes itself as follows: "Magic Lantern is a free software add-on that runs from the SD/CF card and adds a host of new features to Canon EOS cameras that weren't included from the factory by Canon." In doing so, the project has provided APIs, documentation, and the means to run code on many Canon EOS interchangeable lens cameras, as well as useful, well-documented interchange formats for data extracted via that access. The current work describes how researchers can apply these facilities to develop new imaging techniques on a professional/prosumer camera platform. Specifically, this work covers an attempt to use the Magic Lantern development tools to manipulate a camera's Embedded Direct Memory Access engine (EDMAC) to perform on-the-fly frame diffing, and/or to use the project's MLV format for raw sensor data streams to extract data from the relatively large, high-performance sensor of a prosumer camera as input for alternative processing pipelines.