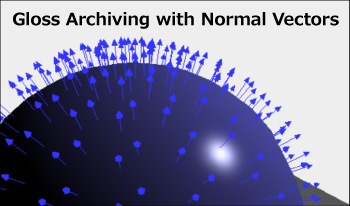

This paper proposes a method of gloss archiving using normal vectors. When archiving the gloss of a material, it is important to record not only the reflected light intensity but also the gloss unevenness, because gloss unevenness greatly affects the texture of the material. However, it has been difficult to record gloss unevenness quantitatively because it depends on the viewing direction and the lighting. Gloss unevenness on mirror surfaces is mainly caused by irregularities in the normal direction. We therefore archive gloss unevenness by recording the distribution of surface normal vectors. We are currently developing an apparatus to measure this distribution. Using the surface normal data, it will also be possible to reproduce gloss unevenness images using computer graphics.
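As an illustration of how a recorded normal distribution could drive a rendered gloss image, the following minimal sketch evaluates a specular term per pixel. The Blinn-Phong model, the `shininess` value, and the names `specular_image` and `normal_map` are assumptions made for this example; the abstract does not state which shading model is used.

```python
import math

def normalize(v):
    """Return v scaled to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        raise ValueError("zero-length vector")
    return tuple(c / length for c in v)

def specular_image(normal_map, light_dir, view_dir, shininess=50.0):
    """Blinn-Phong specular intensity for each normal in a 2D normal map.

    Assumption: a single distant light and a distant viewer, so the
    half vector is the same for every pixel.
    """
    l = normalize(light_dir)
    v = normalize(view_dir)
    h = normalize(tuple(a + b for a, b in zip(l, v)))  # half vector
    image = []
    for row in normal_map:
        out_row = []
        for normal in row:
            n = normalize(normal)
            n_dot_h = max(0.0, sum(a * b for a, b in zip(n, h)))
            out_row.append(n_dot_h ** shininess)
        image.append(out_row)
    return image

# Hypothetical 2x2 normal map: flat pixels and slightly tilted pixels.
flat = (0.0, 0.0, 1.0)
tilted = (0.1, 0.0, 1.0)
normals = [[flat, tilted], [tilted, flat]]
gloss = specular_image(normals, light_dir=(0, 0, 1), view_dir=(0, 0, 1))
```

In this sketch a small tilt of the normal lowers the specular intensity noticeably, which is one way irregular normals can show up as uneven gloss.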

Archaeological textiles are often highly fragmented, and recovering the original composition and its motifs amounts to solving a puzzle. The lack of ground truth and the unknown number of original artworks that the fragments come from complicate this process. We clustered RGB images of the Viking Age Oseberg Tapestry based on their texture features. Classical texture descriptors as well as modern deep learning were used to construct a texture feature vector that was subsequently fed to the clustering algorithm. We anticipated that the clustering outcome would give an indication of the number of original artworks. While two clusters of different textures emerged, this finding needs to be treated with care because of a broad range of limitations, and we report the lessons learned.
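The step of feeding texture feature vectors to a clustering algorithm can be sketched as follows. The abstract does not name the algorithm, so k-means with a deterministic farthest-point initialization is used here purely as an assumption, and the two-dimensional feature vectors are made-up toy values rather than data from the tapestry.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(points, k, iterations=100):
    """Plain k-means; returns (labels, centroids)."""
    if not 1 <= k <= len(points):
        raise ValueError("k must be between 1 and the number of points")
    # Deterministic farthest-point initialization (an assumption of this sketch).
    centroids = [list(points[0])]
    while len(centroids) < k:
        farthest = max(
            points,
            key=lambda p: min(squared_distance(p, c) for c in centroids),
        )
        centroids.append(list(farthest))
    labels = [None] * len(points)
    for _ in range(iterations):
        new_labels = [
            min(range(k), key=lambda j: squared_distance(p, centroids[j]))
            for p in points
        ]
        if new_labels == labels:
            break  # assignments are stable
        labels = new_labels
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # keep the old centroid if a cluster is empty
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids

# Toy texture feature vectors for four hypothetical fragments.
features = [[0.0, 0.0], [0.0, 1.0], [10.0, 10.0], [10.0, 11.0]]
labels, centroids = kmeans(features, k=2)
```

Note that k-means requires the number of clusters in advance, so estimating the number of original artworks would in practice mean comparing results across several values of `k`.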

Nowadays, industrial gloss evaluation is mostly limited to the specular gloss meter, which focuses on a single attribute of surface gloss. The correlation of such meter readings with human gloss appraisal is thus rather weak. Although more advanced image-based gloss meters have become available, their application is typically restricted to niche industries due to their high cost and complexity. This paper extends a previous design of a comprehensive and affordable image-based gloss meter (iGM) for the determination of each of the five main attributes of surface gloss (specular gloss, DOI, haze, contrast and surface-uniformity gloss). Together with an extensive introduction to surface gloss and its evaluation, the iGM design is described and some of its capabilities and opportunities are illustrated.

We propose a method of reproducing perceptual translucency in three-dimensional printing. In contrast to most conventional methods, which reproduce the physical properties of translucency, we focus on the perceptual aspects of translucency. Humans are known to rely on simple cues to perceive translucency, and we develop a method of reproducing these cues using the gradation of surface textures. Textures are designed to reproduce the intensity distribution of the shading and thus provide a cue for the perception of translucency. In creating textures, we adopt computer graphics to develop an image-based optimization method. We validate the effectiveness of the method through subjective evaluation experiments using three-dimensionally printed objects. The results of the validation show that the proposed method using texture is effective in improving perceptual translucency.

Noise is an extremely important image quality factor. Camera manufacturers go to great lengths to source sensors and develop algorithms to minimize it. Illustrations of its effects are familiar, but it is not well known that noise itself, which is not constant over an image, can be represented as an image. Noise varies over images for two reasons. (1) Noise voltage in raw images is predicted to be proportional to a constant plus the square root of the number of photons reaching each pixel. (2) The most commonly applied image processing in consumer cameras, bilateral filtering [1], sharpens regions of the image near contrasty features such as edges and smooths (applies lowpass filtering to reduce noise) the image elsewhere. Noise is normally measured in flat, uniformly illuminated patches, where bilateral filter smoothing has its maximum effect, often at the expense of fine detail. Significant insight into the behavior of image processing can be gained by measuring the noise throughout the image, not just in flat patches. We describe a method for obtaining noise images, then illustrate an important application (observing texture loss) and compare noise images for JPEG and raw-converted images. The method, derived from the EMVA 1288 analysis of flat-field images, requires the acquisition of a large number of identical images. It is somewhat cumbersome when individual image files need to be saved, but it is fast and convenient when direct image acquisition is available.
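One plausible reading of a noise image built from many identical captures is the per-pixel temporal standard deviation across the stack. The sketch below follows that reading; the function name `noise_image`, the nested-list frame layout, and the use of the sample (n - 1) variance are assumptions of this example, not details taken from the paper.

```python
import math

def noise_image(frames):
    """Per-pixel temporal standard deviation over a stack of identical captures.

    frames: list of 2D lists (rows of pixel values), all the same size.
    """
    n = len(frames)
    if n < 2:
        raise ValueError("need at least two frames")
    height, width = len(frames[0]), len(frames[0][0])
    noise = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            samples = [frame[y][x] for frame in frames]
            mean = sum(samples) / n
            # Sample variance over time at this pixel.
            variance = sum((s - mean) ** 2 for s in samples) / (n - 1)
            noise[y][x] = math.sqrt(variance)
    return noise

# Four hypothetical 1x2 captures: the left pixel is stable, the right one fluctuates.
frames = [[[5.0, 0.0]], [[5.0, 2.0]], [[5.0, 0.0]], [[5.0, 2.0]]]
noise = noise_image(frames)
```

Because the statistic is computed independently at every pixel, the result has the same dimensions as the input and can be viewed alongside the image, including near edges and fine texture rather than only in flat patches.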

In recent decades, many researchers have developed algorithms that estimate the quality of visual content (videos or images). Among them, one recent trend is the use of texture descriptors. In this paper, we investigate the suitability of using Binarized Statistical Image Features (BSIF), the Local Configuration Pattern (LCP), the Complete Local Binary Pattern (CLBP), and the Local Phase Quantization (LPQ) descriptors to design a referenceless image quality assessment (RIQA) method. These descriptors have been successfully used in computer vision applications, but their use in image quality assessment has not yet been thoroughly investigated. With this goal, we use a framework that extracts the statistics of these descriptors and maps them into quality scores using a regression approach. Results show that many of the descriptors achieve good accuracy, outperforming other state-of-the-art RIQA methods. The framework is simple and reliable.
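To make the idea of extracting descriptor statistics concrete, the sketch below computes a normalized histogram of basic 8-neighbour Local Binary Pattern codes. Basic LBP is used here only as a simpler stand-in for the BSIF, LCP, CLBP, and LPQ descriptors named above; the function name `lbp_histogram` and the neighbour ordering are assumptions of this example. A regression model, which is not shown, would then map such a histogram to a quality score.

```python
def lbp_histogram(image):
    """Normalized 256-bin histogram of basic 8-neighbour LBP codes.

    image: 2D list of grayscale values; border pixels are skipped.
    """
    # Neighbour offsets (dy, dx), clockwise from the top-left corner.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    height, width = len(image), len(image[0])
    hist = [0] * 256
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            center = image[y][x]
            code = 0
            for bit, (dy, dx) in enumerate(offsets):
                # Set the bit when the neighbour is at least as bright as the center.
                if image[y + dy][x + dx] >= center:
                    code |= 1 << bit
            hist[code] += 1
    total = sum(hist)
    return [count / total for count in hist] if total else [0.0] * 256

# Two hypothetical 3x3 patches: a flat one and one with a bright center.
flat_patch = [[5, 5, 5], [5, 5, 5], [5, 5, 5]]
peak_patch = [[0, 0, 0], [0, 9, 0], [0, 0, 0]]
flat_features = lbp_histogram(flat_patch)
peak_features = lbp_histogram(peak_patch)
```

The flat patch puts all of its mass in code 255 and the bright-center patch in code 0, which shows how the histogram separates local structures.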