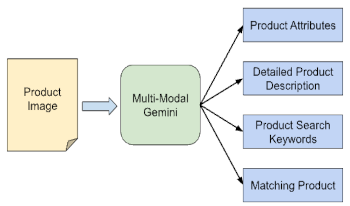

We present the application of a multimodal large language model, specifically Gemini, to automating product image analysis in the retail industry. We demonstrate how Gemini's ability to generate text from mixed image-text prompts enables two key applications: 1) Product Attribute Extraction, where various attributes of a product in an image are extracted using open or closed vocabularies and can then be used by retailers for any downstream analytics, and 2) Product Recognition, where a product in a user-provided image is identified, and its product information is retrieved from the retailer's search index and returned to the user. In both cases, Gemini acts as a powerful, easily customizable recognition engine that simplifies the processing pipeline for retailers' developer teams. Traditionally, these tasks required multiple models (object detection, OCR, attribute classification, embedding, etc.) working together, as well as extensive custom data collection and domain expertise. With Gemini, these tasks are reduced to writing a set of prompts and straightforward logic to connect their outputs.
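The "straightforward logic" that connects prompts and outputs for closed-vocabulary attribute extraction might look roughly like the sketch below. It is not the authors' pipeline. It assumes the model is asked to reply with a JSON object. The helper names (`build_attribute_prompt`, `parse_attributes`), the prompt wording, and the example vocabulary are all hypothetical. The Gemini API call itself is left out because the abstract does not describe it, and a literal string stands in for the model's reply.

```python
from __future__ import annotations

import json


def build_attribute_prompt(vocab: dict[str, list[str]]) -> str:
    """Build a closed-vocabulary extraction prompt (hypothetical wording)."""
    lines = [
        "Look at the product image and report its attributes.",
        "Answer with a single JSON object whose keys are the attribute names.",
        "For each attribute, choose exactly one of the allowed values:",
    ]
    for name, allowed in vocab.items():
        lines.append(f"- {name}: {', '.join(allowed)}")
    return "\n".join(lines)


def parse_attributes(response_text: str, vocab: dict[str, list[str]]) -> dict[str, str | None]:
    """Parse the model's JSON reply, keeping only values from the closed vocabulary.

    Malformed replies, missing keys, and out-of-vocabulary values all map to
    None, so downstream analytics never receive unexpected labels.
    """
    try:
        raw = json.loads(response_text)
    except json.JSONDecodeError:
        raw = {}
    if not isinstance(raw, dict):
        raw = {}
    return {name: (raw.get(name) if raw.get(name) in allowed else None)
            for name, allowed in vocab.items()}


# Usage: the reply string stands in for the multimodal model's output.
vocab = {"color": ["red", "blue", "black"], "sleeve_length": ["short", "long"]}
prompt = build_attribute_prompt(vocab)
reply = '{"color": "blue", "sleeve_length": "three-quarter"}'
print(parse_attributes(reply, vocab))  # {'color': 'blue', 'sleeve_length': None}
```

Validating against the vocabulary after generation is one simple way to keep a generative model's free-form output compatible with a fixed analytics schema. An open-vocabulary variant would skip the `in allowed` check.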

Single image dehazing is an important task in intelligent vision systems. However, the dark channel prior (DCP) is invalid in bright areas such as the sky, and applying it there causes severe distortion in those regions of the recovered image. We therefore propose a novel dehazing method based on transmission map segmentation and prior knowledge. First, we divide the hazy input into bright and non-bright areas. We then estimate the transmission map via DCP in the non-bright area and correct it in the bright area with a proposed transmission map compensation function. Next, we fuse the DCP and the bright channel prior (BCP) to accurately estimate the atmospheric light. Finally, we restore the clear image according to the physical (atmospheric scattering) model. Experiments show that our method effectively resolves the DCP distortion problem in bright image regions and is competitive with state-of-the-art methods.
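The pipeline above can be sketched in NumPy. The dark channel, the DCP transmission estimate t = 1 − ω · dark(I/A), and the inversion of the scattering model I = J·t + A·(1 − t) follow the standard DCP formulation. The other steps are **hypothetical placeholders** because the abstract does not specify them: the bright-area mask is a plain intensity threshold, the compensation function is t + α(1 − t), and the atmospheric light is a w-weighted blend of a DCP-based estimate and a BCP-based estimate. None of these should be read as the authors' exact formulas.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def local_filter(channel, size, reducer):
    """Edge-padded size x size min or max filter (size should be odd)."""
    pad = size // 2
    padded = np.pad(channel, pad, mode="edge")
    return reducer(sliding_window_view(padded, (size, size)), axis=(-2, -1))


def dark_channel(img, size=15):
    """Per-pixel minimum over colour channels, then over a local window."""
    return local_filter(img.min(axis=2), size, np.min)


def bright_channel(img, size=15):
    """Per-pixel maximum over colour channels, then over a local window."""
    return local_filter(img.max(axis=2), size, np.max)


def top_mean(img, score, frac):
    """Mean colour of the pixels whose `score` is in the top `frac` fraction."""
    k = max(1, int(frac * score.size))
    idx = np.argsort(score.ravel())[-k:]
    return img.reshape(-1, 3)[idx].mean(axis=0)


def dehaze(img, size=15, omega=0.95, t0=0.1, bright_thresh=0.8,
           alpha=0.5, w=0.5, frac=0.001):
    """Dehaze an RGB float image in [0, 1] with shape (H, W, 3).

    HYPOTHETICAL choices (not from the paper): intensity-threshold bright mask,
    w-weighted blend of DCP/BCP atmospheric-light estimates, and the
    compensation t + alpha * (1 - t) in bright areas.
    """
    # Atmospheric light from both priors, blended with a hypothetical weight w.
    a_dcp = top_mean(img, dark_channel(img, size), frac)
    a_bcp = top_mean(img, bright_channel(img, size), frac)
    A = np.maximum(w * a_dcp + (1.0 - w) * a_bcp, 1e-6)

    # Standard DCP transmission estimate; kept as-is in non-bright areas.
    t_raw = 1.0 - omega * dark_channel(img / A, size)

    # In bright areas DCP underestimates t, so raise it toward 1 (hypothetical rule).
    bright = img.mean(axis=2) > bright_thresh
    t_comp = np.where(bright, t_raw + alpha * (1.0 - t_raw), t_raw)

    # Invert I = J * t + A * (1 - t), with t floored at t0 to avoid blow-up.
    t = np.clip(t_comp, t0, 1.0)[..., None]
    J = np.clip((img - A) / t + A, 0.0, 1.0)
    return J, t_raw, t_comp


# Usage with a placeholder array; substitute a real hazy photo scaled to [0, 1].
rng = np.random.default_rng(0)
hazy = rng.random((32, 32, 3))
restored, t_raw, t_comp = dehaze(hazy, size=7)
```

Returning both `t_raw` and `t_comp` makes it easy to inspect how much the compensation changes the transmission map in the bright region. That change is exactly where plain DCP distorts.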

More than ever before, computers and computation are critical to the image formation process. Across diverse applications and fields, remarkably similar imaging problems appear, requiring sophisticated mathematical, statistical, and algorithmic tools. This conference focuses on imaging as a marriage of computation with physical devices. It emphasizes the interplay between mathematical theory, physical models, and computational algorithms that enable effective current and future imaging systems. Contributions to the conference are solicited on topics ranging from fundamental theoretical advances to detailed system-level implementations and case studies.

The authors introduce an integrative approach for analyzing the high-dimensional parameter space relevant to decision-making in quality control. Typically, a large number of parameters influence the quality of a manufactured part in an assembly process, and the approach supports visual exploration and comprehension of the correlations among these parameters and their effects on part quality. The authors combine visualization and machine learning methods to help a user identify important parameter value settings that have particular effects on a part. Understanding the influence of parameter values on part quality is treated as a reverse engineering problem: the goal is to determine which values cause which effects on part quality. Because the high-dimensional parameter value domain generally cannot be visualized directly, the authors employ dimension reduction techniques. Their prototype system enables the identification of regions in the high-dimensional parameter value space that correspond to desirable (or undesirable) parameter value settings for quality assurance. They demonstrate the validity and effectiveness of their methods and prototype by applying them to a sheet metal deformation example.
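The abstract does not name the dimension reduction technique. As one common choice, and **not necessarily the one used in the prototype**, principal component analysis (PCA) can project each run's parameter vector to 2-D, so runs can be scatter-plotted and coloured by part quality. In this minimal sketch the function names, the quality threshold rule, and the synthetic data in the usage lines are hypothetical.

```python
import numpy as np


def pca_project(params, n_components=2):
    """Project parameter settings (rows = runs, cols = parameters) with PCA.

    Columns are standardised first so that parameters measured in large
    units do not dominate the projection.
    """
    mean = params.mean(axis=0)
    std = params.std(axis=0)
    std[std == 0] = 1.0  # constant parameters carry no variance
    z = (params - mean) / std
    # Rows of vt are principal directions, ordered by explained variance.
    _, s, vt = np.linalg.svd(z, full_matrices=False)
    coords = z @ vt[:n_components].T
    explained = (s**2 / np.sum(s**2))[:n_components]
    return coords, vt[:n_components], explained


def label_quality(quality, threshold):
    """Hypothetical rule: a run is 'desirable' when its quality score meets the threshold."""
    return quality >= threshold


# Usage with SYNTHETIC data standing in for real process parameters.
rng = np.random.default_rng(42)
params = rng.normal(size=(200, 6))           # 200 simulated runs, 6 parameters
quality = params[:, 0] - 0.5 * params[:, 3]  # toy quality score, illustration only
coords, directions, explained = pca_project(params)
good = label_quality(quality, threshold=0.0)
# coords[good] and coords[~good] can now be plotted to reveal desirable regions.
```

The rows of `directions` map each 2-D axis back to the original parameters. This supports the reverse-engineering question of which parameter values lead to which quality outcomes.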

Detecting changes in an uncontrolled environment using cameras mounted on a ground vehicle is critical for detecting roadside Improvised Explosive Devices (IEDs). Hidden IEDs are often accompanied by visible markers whose appearances are unknown a priori. Little work has been published on detecting unknown objects using deep learning. This article shows the feasibility of applying convolutional neural networks (CNNs) to predict the location of markers in real time by comparison with an earlier reference recording. The authors investigate novel encoder–decoder Siamese CNN architectures and introduce a modified double-margin contrastive loss function to achieve pixel-level change detection. Their dataset consists of seven pairs of challenging real-world recordings, and they investigate augmentation with artificial object data. The proposed network architecture can compare two images of 1920 × 1440 pixels in 27 ms on an RTX Titan GPU and significantly outperforms state-of-the-art networks and algorithms on their dataset, by 0.28 in F1 score.
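In a Siamese change detector, the two branches embed the reference and current images into feature maps, and the loss acts on the per-pixel distance between them. The abstract does not describe the authors' modification, so the sketch below shows only the **standard** double-margin contrastive loss. It uses two margins instead of one, so unchanged pixels are not forced all the way to zero distance. The network itself is omitted. The margin values, the function names, and the halfway threshold used at inference are hypothetical.

```python
import numpy as np


def pixel_distance(feat_a, feat_b):
    """Per-pixel Euclidean distance between two (C, H, W) feature maps."""
    return np.sqrt(np.sum((feat_a - feat_b) ** 2, axis=0))


def double_margin_contrastive(dist, changed, m_sim=0.3, m_dis=2.0):
    """Mean double-margin contrastive loss over all pixels.

    dist:    (H, W) distances between the two Siamese branches.
    changed: (H, W) boolean ground truth, True where the scene changed.
    Unchanged pixels are penalised only if dist > m_sim; changed pixels
    only if dist < m_dis. The margin values are placeholders.
    """
    y = changed.astype(float)
    loss_unchanged = (1.0 - y) * np.maximum(dist - m_sim, 0.0) ** 2
    loss_changed = y * np.maximum(m_dis - dist, 0.0) ** 2
    return float(np.mean(loss_unchanged + loss_changed))


def change_map(dist, m_sim=0.3, m_dis=2.0):
    """Hypothetical inference rule: threshold halfway between the two margins."""
    return dist > 0.5 * (m_sim + m_dis)
```

Pixels whose distances already lie inside their margin contribute zero loss. Training effort therefore concentrates on ambiguous pixels instead of pushing well-separated ones further apart.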

The quantification of material appearance is important in product design. In particular, the sparkle impression of metallic paint, used mainly on automobiles, varies with the observation angle. Although several evaluation methods and multi-angle measurement devices have been proposed for this impression, such devices require additional light sources or cameras to increase the number of evaluation angles. The present study constructed a device that evaluates the multi-angle sparkle impression in one shot and developed a method for quantifying the impression. The device comprises a line spectral camera, a light source, and a motorized rotation stage. The quantification method is based on spatial frequency characteristics. The evaluation value obtained from images recorded by the constructed device was confirmed to correlate closely with subjective scores. Furthermore, the evaluation value is significantly correlated with that obtained using a commercially available evaluation device.
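The abstract states only that the quantification "is based on spatial frequency characteristics." As a hedged illustration of that idea, and **not the paper's metric**, one generic approach measures the spectral energy of each angle's image within a band of spatial frequencies. Sparkle appears as fine, high-contrast texture, so it places energy at non-zero frequencies. The band limits, the function names, and the per-angle list input are hypothetical.

```python
import numpy as np


def band_energy(patch, f_lo, f_hi):
    """Spectral energy of a 2-D patch in the radial band [f_lo, f_hi).

    Frequencies are in cycles/pixel. The mean is removed first, and the power
    spectrum is scaled so that the total over all bins equals the patch
    variance (Parseval), making values comparable across patch sizes.
    """
    z = patch - patch.mean()
    power = np.abs(np.fft.fft2(z)) ** 2 / z.size**2
    fy = np.fft.fftfreq(z.shape[0])[:, None]
    fx = np.fft.fftfreq(z.shape[1])[None, :]
    radius = np.sqrt(fx**2 + fy**2)
    band = (radius >= f_lo) & (radius < f_hi)
    return float(power[band].sum())


def sparkle_profile(images_per_angle, f_lo=0.1, f_hi=0.5):
    """Hypothetical per-angle score: band energy of the image recorded at each angle."""
    return [band_energy(img, f_lo, f_hi) for img in images_per_angle]
```

A uniform patch scores zero. A patch whose variation lies entirely inside the band scores its full variance. This is why band-limited energy can separate sparkle from smooth shading.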

This study focuses on real-time pedestrian detection using thermal images taken at night, because many pedestrian–vehicle crashes occur between late night and early dawn. However, the thermal contrast between a pedestrian and the road varies with the season. We therefore propose adaptive Boolean-map-based saliency (ABMS) to make pedestrians stand out from the background according to the season. For pedestrian recognition, we use You Only Look Once (YOLO), a convolutional neural network (CNN)-based detection algorithm that differs from conventional classifier-based methods. Unlike the original version of YOLO, our detector incorporates a saliency feature map constructed with ABMS as a hardwired kernel, based on the prior knowledge that a pedestrian has higher saliency than the background. The proposed algorithm was successfully applied to a thermal image dataset captured by moving vehicles and performed better than other related state-of-the-art methods. © 2017 Society for Imaging Science and Technology.
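The core of Boolean-map-based saliency is to threshold the image at several levels, discard regions connected to the image border, and accumulate the enclosed regions. A simplified sketch of this idea follows. It is **not** the paper's ABMS. Its seasonal adaptation is reduced to a hypothetical `hot_foreground` switch, which assumes pedestrians are warmer than the road in cold seasons and may be cooler in hot ones. The original Boolean-map formulation also uses complementary maps, which are omitted here. How the map is fed into YOLO as a hardwired kernel is not shown, because the abstract gives no details.

```python
from collections import deque

import numpy as np


def remove_border_connected(mask):
    """Keep only True regions of a 2-D mask that do not touch the border (4-connectivity)."""
    h, w = mask.shape
    keep = mask.copy()
    queue = deque()
    # Seed a flood fill from every True pixel on the border.
    for i in range(h):
        for j in range(w):
            if (i in (0, h - 1) or j in (0, w - 1)) and keep[i, j]:
                keep[i, j] = False
                queue.append((i, j))
    # Erase everything reachable from those seeds.
    while queue:
        i, j = queue.popleft()
        for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            ni, nj = i + di, j + dj
            if 0 <= ni < h and 0 <= nj < w and keep[ni, nj]:
                keep[ni, nj] = False
                queue.append((ni, nj))
    return keep


def boolean_map_saliency(thermal, n_thresholds=8, hot_foreground=True):
    """Simplified Boolean-map saliency for a thermal image scaled to [0, 1].

    `hot_foreground` is a HYPOTHETICAL stand-in for seasonal adaptation:
    False inverts the image so cooler-than-background objects become salient.
    """
    img = thermal if hot_foreground else 1.0 - thermal
    saliency = np.zeros(img.shape, dtype=float)
    # Interior thresholds only; 0 and 1 would give trivial all-True/all-False maps.
    for th in np.linspace(0.0, 1.0, n_thresholds + 2)[1:-1]:
        saliency += remove_border_connected(img > th)
    peak = saliency.max()
    return saliency / peak if peak > 0 else saliency
```

Dropping border-connected regions removes large warm structures such as road surfaces that extend off-frame, while compact enclosed blobs such as pedestrians accumulate votes across many thresholds.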