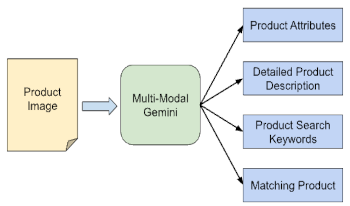

We present the application of a Multimodal Large Language Model, specifically Gemini, to automating product image analysis for the retail industry. We demonstrate how Gemini's ability to generate text from mixed image-text prompts enables two key applications: 1) Product Attribute Extraction, where attributes of a product in an image are extracted using open or closed vocabularies and used by retailers for downstream analytics, and 2) Product Recognition, where a product in a user-provided image is identified and its corresponding product information is retrieved from a retailer's search index and returned to the user. In both cases, Gemini acts as a powerful and easily customizable recognition engine, simplifying the processing pipeline for retailers' developer teams. Traditionally, these tasks required multiple models (object detection, OCR, attribute classification, embedding, etc.) working together, as well as extensive custom data collection and domain expertise. With Gemini, these tasks reduce to writing a set of prompts and straightforward logic to connect their outputs.
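As an illustration of the closed-vocabulary attribute extraction described above, the following is a minimal Python sketch and not the authors' implementation. The vocabulary, the prompt wording, the JSON response format, and the `call_model` callable (a stand-in for whatever client sends a text prompt plus image bytes to a multimodal model and returns its text reply) are all assumptions made for this example.

```python
import json
from typing import Callable, Dict, List, Optional

# Hypothetical closed vocabulary; attribute names and values are illustrative only.
CLOSED_VOCAB: Dict[str, List[str]] = {
    "color": ["red", "blue", "black", "white"],
    "category": ["shirt", "shoe", "bag"],
}


def build_prompt(vocab: Dict[str, List[str]]) -> str:
    """Build a text prompt that restricts each attribute to its allowed values."""
    lines = [
        "Extract the following attributes of the product in the image.",
        "Answer with a single JSON object. Choose each value from the allowed "
        "list, or use null if none applies.",
    ]
    for name, values in vocab.items():
        lines.append(f"- {name}: one of {', '.join(values)}")
    return "\n".join(lines)


def parse_attributes(
    raw: str, vocab: Dict[str, List[str]]
) -> Dict[str, Optional[str]]:
    """Parse the model's reply and drop any value outside the closed vocabulary."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        data = {}
    if not isinstance(data, dict):
        data = {}
    result: Dict[str, Optional[str]] = {}
    for name, allowed in vocab.items():
        value = data.get(name)
        if isinstance(value, str) and value.strip().lower() in allowed:
            result[name] = value.strip().lower()
        else:
            result[name] = None  # missing, malformed, or out-of-vocabulary
    return result


def extract_attributes(
    image_bytes: bytes,
    call_model: Callable[[str, bytes], str],  # assumed interface, not a real API
    vocab: Dict[str, List[str]] = CLOSED_VOCAB,
) -> Dict[str, Optional[str]]:
    """Connect prompt construction, the model call, and output validation."""
    raw = call_model(build_prompt(vocab), image_bytes)
    return parse_attributes(raw, vocab)
```

Passing the model call in as a function keeps the sketch independent of any particular client library. Validating the reply against the vocabulary is one simple form of the "straightforward logic" that connects prompt outputs to downstream analytics.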

Tianli Yu, Daniel Vlasic, "Automating Product Image Analysis for Retail with Gemini" in Electronic Imaging, 2025, pp 262-1 - 262-7, https://doi.org/10.2352/EI.2025.37.8.IMAGE-262
