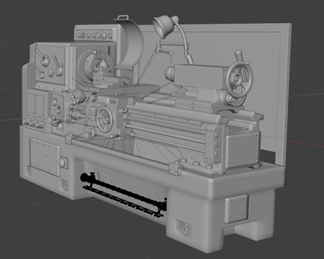

Viewed through a Value Engineering (VE) lens, Virtual Reality (VR) offers a scalable, customizable, and cost-effective means of easing the student throughput bottlenecks that equipment and space capacity cause in university machine shop instruction. An experimental method is proposed to demonstrate how VR can increase the output of an educational system's value function. The method seeks a high Transfer Effectiveness Ratio (TER), such that VR supplements traditional educational strategies sufficiently to enable further growth in classroom enrollment.
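As a rough illustration of the TER mentioned above, the sketch below uses the commonly cited formulation TER = (Y_c - Y_x) / X, where Y_c is the real-equipment time a control group needs to reach a criterion, Y_x is the real-equipment time needed by a group that first trained in VR, and X is the time that group spent in VR. The abstract does not state which formulation the study uses, and the function name and sample numbers here are hypothetical.

```python
def transfer_effectiveness_ratio(control_hours, vr_group_hours, vr_hours):
    """Return TER = (Y_c - Y_x) / X.

    control_hours  (Y_c): machine-shop hours the control group needs to reach criterion
    vr_group_hours (Y_x): machine-shop hours the VR-trained group needs to reach criterion
    vr_hours       (X):   hours the VR-trained group spent in VR beforehand
    """
    if vr_hours <= 0:
        raise ValueError("vr_hours must be positive")
    return (control_hours - vr_group_hours) / vr_hours


# Hypothetical numbers, for illustration only (not results from the study).
ter = transfer_effectiveness_ratio(control_hours=10.0, vr_group_hours=7.0, vr_hours=4.0)
print(ter)  # each VR hour saves this many machine-shop hours
```

Under this reading, a TER closer to 1 means an hour in VR replaces nearly an hour of scarce machine-shop time, which is the capacity being freed for additional enrollment.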

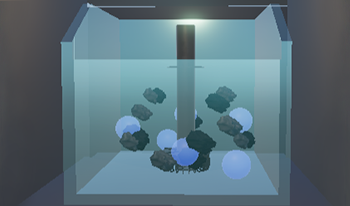

Virtual reality (VR) has become an increasingly popular tool in education and is often compared with traditional teaching methods for its potential to improve learning experiences. However, VR itself spans a wide range of experiences and immersion levels, from less interactive environments to fully interactive, immersive systems. This study explores the impact of different levels of immersion and interaction within VR on learning outcomes. The project, titled Eureka, focuses on teaching the froth flotation process in mining by comparing two VR modalities: a low-interaction environment that presents information through text and visuals without user engagement, and an immersive, high-interaction environment where users can actively engage with the content. The purpose of this research is to investigate how these varying degrees of immersion affect user performance, engagement, and learning outcomes. The results of a user study involving 12 participants revealed that the high-interaction modality significantly improved task efficiency, with participants completing tasks faster than those using the low-interaction modality. Both modalities were similarly effective in conveying knowledge, as evidenced by comparable assessment scores. However, qualitative feedback highlighted design considerations, such as diverse user preferences for navigation and instructional methods. These findings suggest that, while interactive immersion can improve efficiency, effective VR educational tools must accommodate diverse learning styles and needs. Future work will focus on scaling participant diversity and refining VR design features.

Virtual Reality technologies are on the rise! Commercial head-mounted display devices have made VR applications affordable and available to a wide range of users. Still, VR companies are far from satisfied with the market penetration of their VR devices and related software. VR companies and VR enthusiasts are waiting for VR to become omnipresent! But what if everything is different? What if VR has already taken over our world and we are simply not aware of it? In this essay, I will discuss the Trojan Horses of Virtual Reality: those technologies and approaches that started taking over our lives years ago without our acknowledging the fact. I will argue that Virtual Reality is already part of our daily life and that the still-pending takeover by VR technologies will only be the capstone on top of the pyramid.

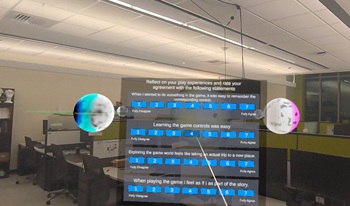

Virtual Reality (VR) technology has experienced remarkable growth, steadily establishing itself within mainstream consumer markets. This rapid expansion presents exciting opportunities for innovation and application across various fields, from entertainment to education and beyond. However, it also underscores a pressing need for more comprehensive research into user interfaces and human-computer interaction within VR environments. Understanding how users engage with VR systems and how their experiences can be optimized is crucial for further advancing the field and unlocking its full potential. This project introduces ScryVR, an innovative infrastructure designed to simplify and accelerate the development, implementation, and management of user studies in VR. By providing researchers with a robust framework for conducting studies, ScryVR aims to reduce the technical barriers often associated with VR research, such as complex data collection, hardware compatibility, and system integration challenges. Its goal is to empower researchers to focus more on study design and analysis, minimizing the time spent troubleshooting technical issues. By addressing these challenges, ScryVR has the potential to become a pivotal tool for advancing VR research methodologies. Its continued refinement will enable researchers to conduct more reliable and scalable studies, leading to deeper insights into user behavior and interaction within virtual environments. This, in turn, will drive the development of more immersive, intuitive, and impactful VR experiences.

Emergency response and active shooter training drills and exercises are necessary because we cannot predict when emergencies will occur. There has been progress in understanding human behavior, unpredictability, human motion synthesis, crowd dynamics, and their relationships with active shooter events, but challenges remain. With continuing advancements in technology, virtual reality (VR) based training incorporates real-life experience that creates a “sense of presence” in the environment and becomes a viable alternative to traditional training. This paper presents a collaborative virtual reality environment (CVE) module for performing active shooter training drills using immersive and non-immersive environments. The collaborative immersive environment is implemented in Unity 3D and is based on run, hide, and fight modes for emergency response. We present two ways of modeling user behavior. First, rules are defined for AI agents, or NPCs (non-player characters). Second, controls are provided for user-controlled agents, or PCs (player characters), to navigate in the VR environment as autonomous agents with a keyboard/joystick or with an immersive VR headset. The users can enter the CVE as user-controlled agents and respond to emergencies like active shooter events, bomb blasts, fire, and smoke. A user study was conducted to evaluate the effectiveness of our CVE module for active shooter response training and decision-making using the Group Environment Questionnaire (GEQ), Presence Questionnaire (PQ), System Usability Scale (SUS), and Technology Acceptance Model (TAM) Questionnaire. The results show that the majority of users agreed that the sense of presence, intrinsic motivation, and self-efficacy were increased when using the immersive emergency response training module for an active shooter evacuation environment.
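For readers unfamiliar with how one of the instruments named above is scored, the following is a minimal sketch of the standard System Usability Scale calculation: ten items rated 1 to 5, where odd-numbered items contribute (rating - 1), even-numbered items contribute (5 - rating), and the sum is multiplied by 2.5 to give a 0 to 100 score. The function name and sample responses are hypothetical, and the abstract does not report the study's SUS values.

```python
def sus_score(responses):
    """Compute a System Usability Scale score (0-100) from ten 1-5 ratings."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")

    total = 0
    for index, rating in enumerate(responses):
        if index % 2 == 0:
            # Items 1, 3, 5, 7, 9 are positively worded.
            total += rating - 1
        else:
            # Items 2, 4, 6, 8, 10 are negatively worded.
            total += 5 - rating
    return total * 2.5


# Hypothetical responses from a single participant, for illustration only.
print(sus_score([4, 2, 4, 2, 5, 1, 4, 2, 4, 2]))
```

Per-participant scores computed this way are typically averaged across the study group before being reported.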

This research presents a novel post-processing method for convolutional neural networks (CNNs) in character recognition, specifically designed to handle inconsistencies and irregularities in character shapes. CNNs are powerful tools for recognizing and learning character shapes directly from source images, making them well suited to recognizing characters whose shapes are inconsistent. However, when applied to multi-object detection for character recognition, CNNs require post-processing to convert the recognized characters into code sequences, which has so far limited their applicability. The developed method solves this problem by directly post-processing the inconsistent characters identified by the convolutional neural model into labels corresponding to the source image. An experiment with real pharmaceutical packaging images demonstrates the functionality of the method, showing that it can handle different numbers of characters and labels effectively. As a scientific contribution to the fields of imaging and deep learning, this research opens new possibilities for future studies, particularly in the development of more accurate and efficient multi-object character recognition with post-processing and its application to new areas.
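To make the post-processing problem concrete, the sketch below shows one generic way to turn unordered per-character detections into text lines: group detections by vertical position, then read each group left to right. This is not the method developed in the paper, whose details the abstract does not give. The `Detection` type, the `line_tol` parameter, and the sample coordinates are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    char: str   # character class predicted by the detector
    x: float    # bounding-box center, horizontal
    y: float    # bounding-box center, vertical
    h: float    # bounding-box height


def detections_to_lines(detections, line_tol=0.5):
    """Group detections into text lines, then order each line left to right.

    A detection joins the current line when its center lies within
    line_tol * (its box height) of that line's mean vertical position.
    """
    lines = []
    for det in sorted(detections, key=lambda d: d.y):
        if lines:
            current = lines[-1]
            mean_y = sum(d.y for d in current) / len(current)
            if abs(det.y - mean_y) <= line_tol * det.h:
                current.append(det)
                continue
        lines.append([det])
    return ["".join(d.char for d in sorted(line, key=lambda d: d.x))
            for line in lines]


# Hypothetical detector output in arbitrary order, for illustration only.
sample = [
    Detection("2", 22, 31, 10),
    Detection("O", 22, 11, 10),
    Detection("L", 10, 10, 10),
    Detection("1", 10, 30, 10),
    Detection("T", 34, 9, 10),
]
print(detections_to_lines(sample))
```

A simple grouping like this assumes roughly horizontal, well-separated lines, so skewed or curved print on packaging would need a more robust approach.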

Sublimity has long been a theme in aesthetics, as one of the human emotions experienced when perceiving vastness, terror, or ambiguity. This study investigated the visual conditions that evoke sublimity using virtual reality (VR). Participants observed sublime content, developed based on previous research, under conditions defined by two factors: (1) wide or narrow field of view (FOV), and (2) 2D or 3D video presentation. We collected psycho-physiological evaluations from the participants. The results demonstrated that a wider FOV enhanced the perception of sublimity and pleasantness. In particular, gaze fixation time tended to increase under conditions of wider FOV and 3D presentation, supporting the effect of sublimity in VR. This suggests the potential of VR as a valuable tool for amplifying the experience of sublimity.

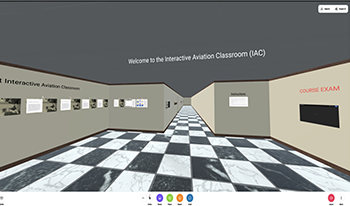

Aviation Maintenance Technicians (AMTs) play an important role in guaranteeing the safety, reliability, and readiness of aviation operations worldwide. Per Federal Aviation Administration (FAA) regulations, certified AMTs must document mechanic-related experience to maintain their certification. Currently, aviation maintenance training methods are centered around classroom instruction, printed manuals, videos, and on-the-job training. Due to the constantly evolving digital landscape, there is an opportunity to modernize the way AMTs are trained, remain current, and conduct on-the-job training. This research explores the implementation of Virtual Reality (VR) platforms as a method for enhancing the aviation training experience in the areas of aircraft maintenance and sustainability. One outcome of this research is the creation of a virtual training classroom module for aircraft maintenance, utilizing a web-based, open-source, immersive platform called Mozilla Hubs. While there is a general belief that VR enhances learning, very few controlled experiments have been conducted to show that this is the case. The goal of this research is to add to the general knowledge on the use of VR for training and specifically for aircraft maintenance training.

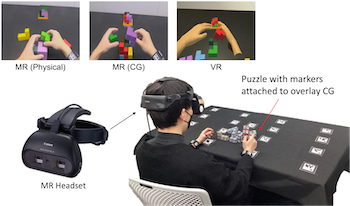

This study evaluates user experiences in Virtual Reality (VR) and Mixed Reality (MR) systems during task-based interactions. Three experimental conditions were examined: MR (Physical), MR (CG), and VR. Subjective and objective indices were used to assess user performance and experience. The results demonstrated significant differences among conditions, with MR (Physical) consistently outperforming MR (CG) and VR in various aspects. Eye-tracking data revealed that users spent less time observing physical objects in the MR (Physical) condition, primarily relying on virtual objects for task guidance. Conversely, in conditions with a higher proportion of CG content, users spent more time observing objects but reported increased discomfort due to delays. These findings suggest that the ratio of virtual to physical objects significantly impacts user experience and performance. This research provides valuable insights into improving user experiences in VR and MR systems, with potential applications across various domains.

In August 2023, a series of co-design workshops was run in a collaboration between Kyushu University (Fukuoka, Japan), the Royal College of Art (London, UK), and Imperial College London (London, UK). In this series of workshops, participants were asked to create a number of drawings visualising avian-human interaction scenarios. Each set of drawings depicted a specific interaction a participant had with an avian species in three different contexts: the interaction from the participant's perspective, the interaction from the bird's perspective, and how the participant hopes the interaction will be embodied in 50 years' time. The main purpose of this exercise was to co-imagine a utopian future with more positive interspecies relations between humans and birds. Based on these drawings, we have created a number of visualisations presenting those perspectives in Virtual Reality. This development allows viewers to visualise participants' perspective shifts through the subject matter depicted in their workshop drawings, allowing for the observation of the relationship between humans and non-humans (here: avian species). This research tests the hypothesis that participants perceive Virtual Reality as furthering their feelings of immersion relating to the workshop topic of human-avian relationships. This demonstrates the potential of XR technologies as a medium for building empathy towards non-human species, providing foundational justification for this body of work to progress into employing XR as a medium for perspective shifts.