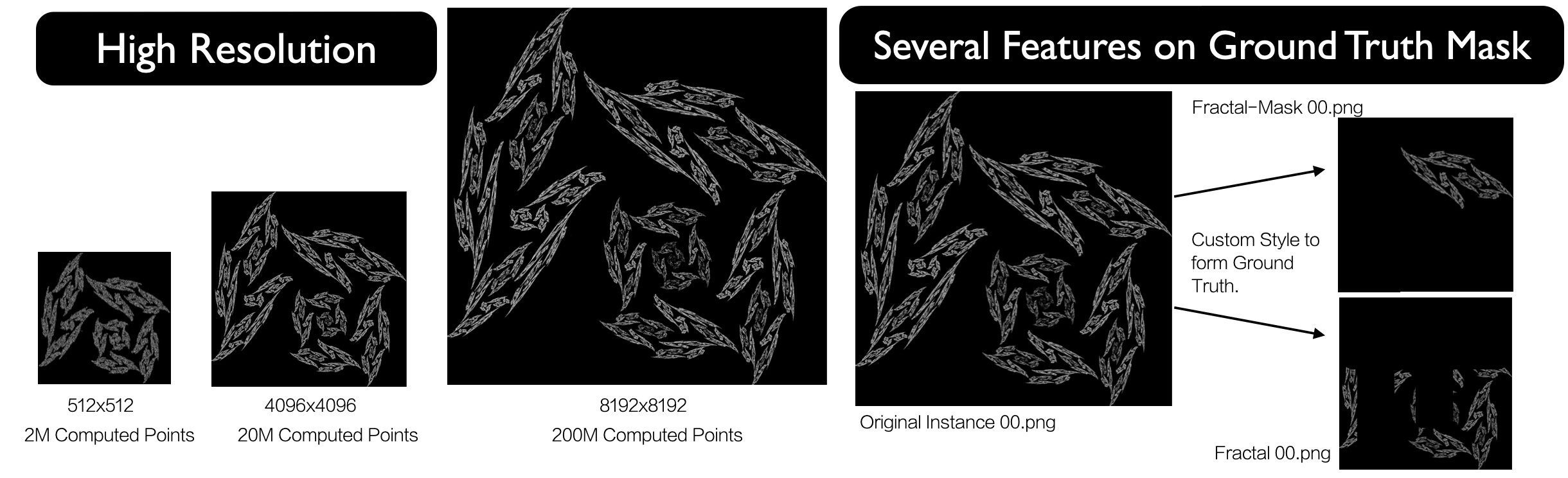

In medical segmentation, the acquisition of high-quality labeled data remains a significant challenge due to the substantial cost and time required for expert annotations. Variability in imaging conditions, patient diversity, and the use of different imaging devices further complicate model training. The high dimensionality of medical images also imposes considerable computational demands, while small lesions or abnormalities can create class imbalance, hindering segmentation accuracy. Pre-training on synthetic datasets may enable Vision Transformers (ViTs) to develop robust feature representations even when high-quality labeled data for fine-tuning is limited. In this work, we propose integrating Formula-Driven Supervised Learning (FDSL) synthetic datasets with medical imaging to enhance pre-training for segmentation tasks. We implemented a custom fractal dataset, Style Fractals, capable of generating high-resolution images, including those measuring 8k x 8k pixels. Using the SAM model for segmentation, our pre-training combined with robust augmentation techniques, followed by fine-tuning on PAIP (a high-resolution, real-world pathology dataset focused on liver cancer), increased performance from 62.30% to 63.68%. Additionally, we present results using another synthetic dataset, SegRCDB, for comparative analysis.

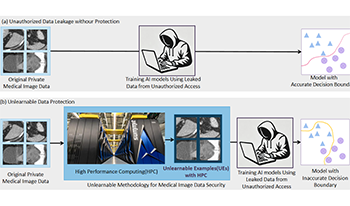

Recent AI models, such as ChatGPT, are structured to retain user interactions, which could inadvertently include sensitive healthcare data. In the healthcare field, particularly when radiologists use AI-driven diagnostic tools hosted on online platforms, there is a risk that medical imaging data may be repurposed for future AI training without explicit consent, raising critical privacy and intellectual property concerns around healthcare data usage. To address these privacy challenges, a novel approach known as Unlearnable Examples (UEs) has been introduced, aiming to make data unlearnable to deep learning models. A prominent method within this area, called Unlearnable Clustering (UC), has shown improved UE performance with larger batch sizes but was previously limited by computational resources (e.g., a single workstation). To push the boundaries of UE performance with theoretically unlimited resources, we scaled up UC learning across various datasets using Distributed Data Parallel (DDP) training on the Summit supercomputer. Our goal was to examine UE efficacy at high-performance computing (HPC) levels to prevent unauthorized learning and enhance data security, particularly exploring the impact of batch size on unlearnability. Utilizing the robust computational capabilities of Summit, we conducted extensive experiments on diverse datasets such as Pets, MedMNIST, Flowers, and Flowers102. Our findings reveal that both overly large and overly small batch sizes can lead to performance instability and affect accuracy. However, the relationship between batch size and unlearnability varied across datasets, highlighting the necessity for tailored batch size strategies to achieve optimal data protection. The use of Summit’s high-performance GPUs, along with the efficiency of the DDP framework, facilitated rapid updates of model parameters and consistent training across nodes.
Our results underscore the critical role of selecting appropriate batch sizes based on the specific characteristics of each dataset to prevent learning and ensure data security in deep learning applications. The source code is publicly available at https://github.com/hrlblab/UE_HPC.
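
Because the abstract above centers on batch size under data-parallel training, a small sketch may help show how the effective (global) batch size grows with the number of GPUs. This is plain Python for illustration, not the PyTorch DDP API and not the authors' code; the function names `global_batch_size` and `shard_indices` are hypothetical, and the strided partition only mimics the way a distributed sampler typically splits a dataset across ranks.

```python
def global_batch_size(per_gpu_batch, world_size):
    """Effective batch size when every rank processes its own mini-batch per step."""
    return per_gpu_batch * world_size


def shard_indices(n_samples, rank, world_size):
    """Strided partition: rank r sees samples r, r + world_size, r + 2 * world_size, ..."""
    return list(range(rank, n_samples, world_size))


# Hypothetical example: 10 samples split across 4 ranks.
shards = [shard_indices(10, rank, 4) for rank in range(4)]
```

Under this reading, sweeping the batch size on a large machine means changing either the per-GPU batch or the number of participating GPUs, and both change the global batch that the optimizer effectively sees.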

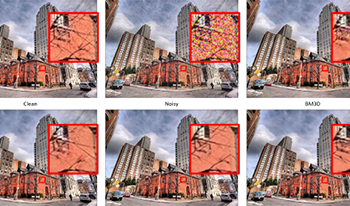

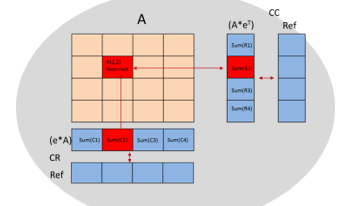

Image denoising is a crucial task in image processing, aiming to enhance image quality by effectively eliminating noise while preserving essential structural and textural details. In this paper, we introduce a novel denoising algorithm that integrates residual Swin Transformer blocks (RSTB) with the concept of classical non-local means (NLM) filtering. The proposed solution aims to strike a balance between performance and computational complexity and is structured into three main components: (1) feature extraction using a multi-scale approach to capture diverse image features with RSTB, (2) multi-scale feature matching inspired by NLM, which computes pixel similarity through learned embeddings and enables accurate noise reduction even in high-noise scenarios, and (3) residual detail enhancement using a Swin Transformer block that recovers high-frequency details lost during denoising. Our extensive experiments demonstrate that the proposed model, with 743k parameters, achieves the best or competitive performance among state-of-the-art models with a comparable number of parameters. This makes the proposed solution a preferred option for applications prioritizing detail preservation with limited compute resources. Furthermore, the proposed solution is flexible enough to adapt to other image restoration problems such as deblurring and super-resolution.
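
For readers unfamiliar with the classical non-local means filter that the matching stage draws on, the following is a minimal 1D sketch in plain Python. It is not the proposed network: similarity is computed on raw patches rather than learned embeddings, and the function name `nlm_1d` and the parameters `patch_radius` and `h` are illustrative assumptions.

```python
import math


def nlm_1d(signal, patch_radius=1, h=0.5):
    """Classical non-local means on a 1D list of floats (illustrative only)."""
    n = len(signal)

    def patch(center):
        # Clamp indices at the borders so every sample has a full patch.
        return [signal[min(max(center + k, 0), n - 1)]
                for k in range(-patch_radius, patch_radius + 1)]

    patches = [patch(i) for i in range(n)]
    out = []
    for i in range(n):
        weights = []
        for j in range(n):
            # Squared patch distance drives the similarity weight.
            d2 = sum((a - b) ** 2 for a, b in zip(patches[i], patches[j]))
            weights.append(math.exp(-d2 / (h * h)))
        total = sum(weights)  # at least 1, because the self-weight is exp(0)
        # Each output sample is a similarity-weighted average of all samples.
        out.append(sum(w * s for w, s in zip(weights, signal)) / total)
    return out


noisy = [0.0, 0.1, -0.1, 0.0, 1.0, 0.9, 1.1, 1.0]
denoised = nlm_1d(noisy)
```

Each output value is a convex combination of the input samples, weighted by patch similarity, which is the property the learned-embedding matching in the abstract builds on.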

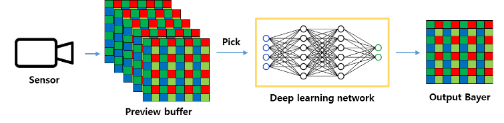

With the emergence of 200-megapixel QxQ Bayer pattern image sensors, the remosaic technology that rearranges color filter arrays (CFAs) into Bayer patterns has become increasingly important. However, the limitations of the remosaic algorithm in the sensor often result in artifacts that degrade the details and textures of the images. In this paper, we propose a deep learning-based artifact correction method to enhance image quality within a mobile environment while minimizing shutter lag. We generated a training dataset using a high-performance remosaic algorithm and trained a lightweight U-Net-based network. The proposed network effectively removes these artifacts, thereby improving the overall image quality. Additionally, it takes only about 15 ms to process a 4000x3000 image on a Galaxy S22 Ultra, making it suitable for real-time applications.

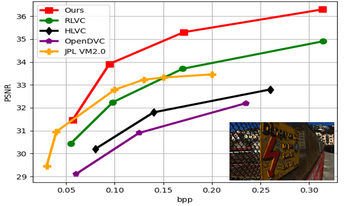

In recent years, several deep learning-based architectures have been proposed to compress Light Field (LF) images as pseudo video sequences. However, most of these techniques employ conventional compression-focused networks. In this paper, we introduce a version of a previously designed deep learning video compression network, adapted and optimized specifically for LF image compression. We enhance this network by incorporating an in-loop filtering block, along with additional adjustments and fine-tuning. By treating LF images as pseudo video sequences and deploying our adapted network, we manage to address challenges presented by the unique features of LF images, such as high resolution and large data sizes. Our method compresses these images competently, preserving their quality and unique characteristics. With the thorough fine-tuning and inclusion of the in-loop filtering network, our approach shows improved performance in terms of Peak Signal-to-Noise Ratio (PSNR) and Mean Structural Similarity Index Measure (MSSIM) when compared to other existing techniques. Our method provides a feasible path for LF image compression and may contribute to the emergence of new applications and advancements in this field.
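
As a reminder of how the PSNR metric mentioned above is usually computed, here is a minimal sketch in plain Python using the standard definition, 10 log10(MAX^2 / MSE). The function name `psnr` and the default `max_value=255.0` (8-bit samples) are assumptions for illustration; the abstract does not state the bit depth or evaluation protocol used.

```python
import math


def psnr(reference, reconstructed, max_value=255.0):
    """Peak Signal-to-Noise Ratio in dB between two equal-length sample lists."""
    if not reference or len(reference) != len(reconstructed):
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((r - x) ** 2 for r, x in zip(reference, reconstructed)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals
    return 10.0 * math.log10(max_value ** 2 / mse)


# Every sample is off by exactly one level, so the MSE is 1.
value = psnr([10, 20, 30, 40], [11, 19, 31, 39])  # about 48.13 dB
```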

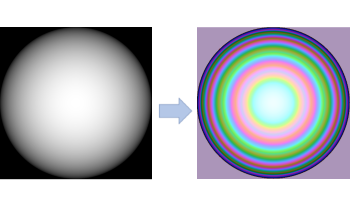

Recent advancements in 3D data capture have enabled the real-time acquisition of high-resolution 3D range data, even on mobile devices. However, this type of high bit-depth data remains difficult to transmit efficiently over a standard broadband connection. The most successful techniques for tackling this data problem thus far have been image-based depth encoding schemes that leverage modern image and video codecs. To our knowledge, no published work has directly optimized the end-to-end losses of a depth encoding scheme passing through a lossy image compression codec. In contrast, our compression-resilient neural depth encoding method leverages deep learning to efficiently encode depth maps into 24-bit RGB representations that minimize end-to-end depth reconstruction errors when compressed with JPEG. Our approach employs a fully differentiable pipeline, including a differentiable approximation of JPEG, allowing it to be trained end-to-end on the FlyingThings3D dataset with randomized JPEG qualities. On a Microsoft Azure Kinect depth recording, the neural depth encoding method significantly outperformed an existing state-of-the-art depth encoding method in terms of both root-mean-square error (RMSE) and mean absolute error (MAE) across a wide range of image qualities, all with over 20% lower average file sizes. Our method offers an efficient solution for emerging 3D streaming and 3D telepresence applications, enabling high-quality 3D depth data storage and transmission.
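
To illustrate why a depth encoding needs to be resilient to lossy compression, the sketch below packs a 16-bit depth value into an RGB triple by naive byte splitting and then perturbs one channel by a single level. This naive scheme is neither the proposed neural encoding nor the state-of-the-art method referred to above; the function names and the example depth value are hypothetical.

```python
def encode_depth_naive(depth):
    """Split a 16-bit depth value into (R, G, B) = (high byte, low byte, 0)."""
    if not 0 <= depth <= 0xFFFF:
        raise ValueError("depth must fit in 16 bits")
    return (depth >> 8, depth & 0xFF, 0)


def decode_depth_naive(rgb):
    """Inverse of encode_depth_naive; the blue channel is ignored."""
    r, g, _ = rgb
    return (r << 8) | g


depth = 1234
rgb = encode_depth_naive(depth)          # (4, 210, 0)
round_trip = decode_depth_naive(rgb)     # 1234 when nothing is lost

# Simulate a one-level error in the red channel, as a lossy codec might introduce.
r, g, b = rgb
corrupted = (r + 1, g, b)
error = abs(decode_depth_naive(corrupted) - depth)  # 256 depth units
```

A one-level change in the high byte shifts the decoded depth by 256 units, which is the kind of amplified error that an encoding optimized end-to-end through the codec is meant to avoid.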

With artificial intelligence (AI) becoming the mainstream approach to solving a myriad of problems across industrial, automotive, medical, military, wearable, and cloud applications, the need for high-performance, low-power embedded devices is stronger than ever. Innovations around designing an efficient hardware accelerator to perform AI tasks also involve making it fault-tolerant so that it works reliably under varying stressful environmental conditions. These embedded devices could be deployed under varying thermal and electromagnetic interference conditions, which require both the processing blocks and on-device memories to recover from faults and provide a reliable quality of service. Particularly in the automotive context, ASIL-B compliant AI systems typically implement an error-correction code (ECC) that provides single-error-correction, double-error-detection (SECDED) protection. ASIL-D based AI systems implement dual lock-step compute blocks and build processing redundancy to reinforce prediction certainty, on top of protecting their memories. Fault-tolerant systems take it one level higher by tripling the processing blocks, where a fault detected by one processing element is corrected and reinforced by the other two elements. This adds significant silicon area and makes the solution an expensive proposition. In this paper, we propose novel techniques that can be applied to a typical deep learning-based embedded solution with many processing stages, such as memory load, matrix-multiply, accumulate, activation functions, and others, to build a robust fault-tolerant system without linearly tripling the compute area and hence the cost of the solution.
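
The tripled-processing baseline described above is commonly realized as triple modular redundancy with a majority voter. The following is a minimal software sketch of such a voter, written as an illustration of that baseline only and not of the techniques proposed in the paper; the function names are hypothetical.

```python
def majority_vote(a, b, c):
    """Bitwise majority of three redundant integer results."""
    return (a & b) | (a & c) | (b & c)


def faulty_replica(a, b, c):
    """Index of the first replica that disagrees with the voted result, or None."""
    voted = majority_vote(a, b, c)
    for index, value in enumerate((a, b, c)):
        if value != voted:
            return index
    return None


# The third replica suffers a single bit flip; the voter masks it.
voted = majority_vote(0b1011, 0b1011, 0b0011)
bad = faulty_replica(0b1011, 0b1011, 0b0011)
```

The voter itself is cheap; the cost the abstract refers to comes from instantiating the compute block three times to feed it.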

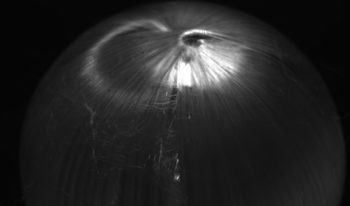

Regression-based radiance field reconstruction strategies, such as neural radiance fields (NeRFs) and the physics-based 3D Gaussian splatting (3DGS), have gained popularity in novel view synthesis and scene representation. These methods parameterize a high-dimensional function that represents a radiance field from a low-dimensional camera input. However, these problems are ill-posed, and the methods struggle to represent high (spatial) frequency data, which manifests as reconstruction artifacts when estimating high-frequency details such as small hairs, fibers, or reflective surfaces. Here we show that classical spherical sampling around a target, often referred to as sampling a bounded scene, inhomogeneously samples the target’s Fourier domain, resulting in spectral bias in the collected samples. We generalize the ill-posed problems of view synthesis and scene representation as expressions of projection tomography and explore the upper-bound reconstruction limits of regression-based and integration-based strategies. We introduce a physics-based sampling strategy that we directly apply to 3DGS, and demonstrate high-fidelity 3D anisotropic radiance field reconstructions with reconstruction PSNR scores as high as 44.04 dB and SSIM scores of 0.99, following the same metric analysis as defined in Mip-NeRF360.

Acquiring mass-to-charge (m/z) spectrometry data from tissue samples at high spatial resolutions using Mass Spectrometry Imaging (MSI) requires hours to days. The Deep Learning Approach for Dynamic Sampling (DLADS) and Supervised Learning Approach for Dynamic Sampling with Least-Squares (SLADS-LS) algorithms follow compressed sensing principles to minimize the number of physical measurements performed, generating low-error reconstructions from spatially sparse data. Measurement locations are actively determined during scanning: a machine learning model estimates which locations would provide the most relevant information to an intended reconstruction process. Preliminary results for DLADS and SLADS-LS simulations with Matrix-Assisted Laser Desorption/Ionization (MALDI) MSI match the 70% throughput improvements previously achieved in nanoscale Desorption Electro-Spray Ionization (nano-DESI) MSI. A new multimodal DLADS variant incorporates optical imaging for a 5% improvement in final reconstruction quality, with DLADS holding a 4% advantage over SLADS-LS in regression performance. Further, a Forward Feature Selection (FFS) algorithm replaces expert-based determination of the m/z channels targeted during scans, with negligible impact on location selection and reconstruction quality.
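
The active selection of measurement locations described above can be pictured as a greedy loop that repeatedly measures the highest-scoring unmeasured location. The sketch below shows only that loop structure on a 1D grid. It is not DLADS or SLADS-LS: the learned model is replaced by a hypothetical stand-in score (distance to the nearest measured location), and all names are illustrative assumptions.

```python
def dynamic_sampling(measure, n_locations, budget, score):
    """Greedy loop: repeatedly measure the unmeasured location with the highest score."""
    measured = {0: measure(0)}  # seed with one initial measurement
    while len(measured) < min(budget, n_locations):
        candidates = [i for i in range(n_locations) if i not in measured]
        best = max(candidates, key=lambda i: score(i, measured))
        measured[best] = measure(best)
    return measured


def distance_score(i, measured):
    # Stand-in for a learned model: prefer locations far from any measurement.
    return min(abs(i - j) for j in measured)


# Hypothetical ground truth that a real instrument would provide point by point.
truth = [x * x for x in range(11)]
samples = dynamic_sampling(lambda i: truth[i], len(truth), budget=4, score=distance_score)
```

In a real dynamic sampling system the score would come from a trained model conditioned on the measurements so far, and a reconstruction step would fill in the unmeasured locations.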

The use of supercomputers and large clusters for big-data processing has recently gained immense popularity, primarily due to the widespread adoption of Graphics Processing Units (GPUs) to execute iterative algorithms, such as Deep Learning and 2D/3D imaging applications. This trend is especially prominent in the context of large-scale datasets, which can range from hundreds of gigabytes to several terabytes in size. Similar to the field of Deep Learning, which deals with datasets of comparable or even greater sizes (e.g., LION-3B), these efforts encounter complex challenges related to data storage, retrieval, and efficient GPU utilization. In this work, we benchmarked a collection of high-performance general dataloaders used in Deep Learning, with a dual focus on user-friendliness (Pythonic) and high-performance execution. These dataloaders have become crucial tools. Notably, advanced dataloading solutions such as WebDataset, FFCV, and DALI have demonstrated significantly superior performance when compared to traditional PyTorch general data loaders. This work provides a comprehensive benchmarking analysis of high-performance general dataloaders tailored for handling extensive datasets within supercomputer environments. Our findings indicate that DALI surpasses our baseline PyTorch dataloader by up to 3.4x in loading times for datasets comprising one million images.
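
A loading-time comparison of the kind reported above can be sketched with a small timing harness that works on any iterable of batches. This is an assumption about how such a benchmark might be structured, not the authors' benchmarking code; `toy_loader` is a hypothetical stand-in for a real dataloader, and no real library API is used.

```python
import time


def time_loader(loader):
    """Return (items_seen, seconds) for one full pass over an iterable of batches."""
    start = time.perf_counter()
    items = 0
    for batch in loader:
        items += len(batch)
    return items, time.perf_counter() - start


def speedup(baseline_seconds, candidate_seconds):
    """How many times faster the candidate is than the baseline."""
    return baseline_seconds / candidate_seconds


def toy_loader(n_items, batch_size):
    # Hypothetical stand-in for a real dataloader: yields lists of integers.
    for start in range(0, n_items, batch_size):
        yield list(range(start, min(start + batch_size, n_items)))


items, seconds = time_loader(toy_loader(1000, 64))
```

In practice each loader would be timed over several passes after a warm-up, since file-system caching and worker start-up can dominate a single measurement.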