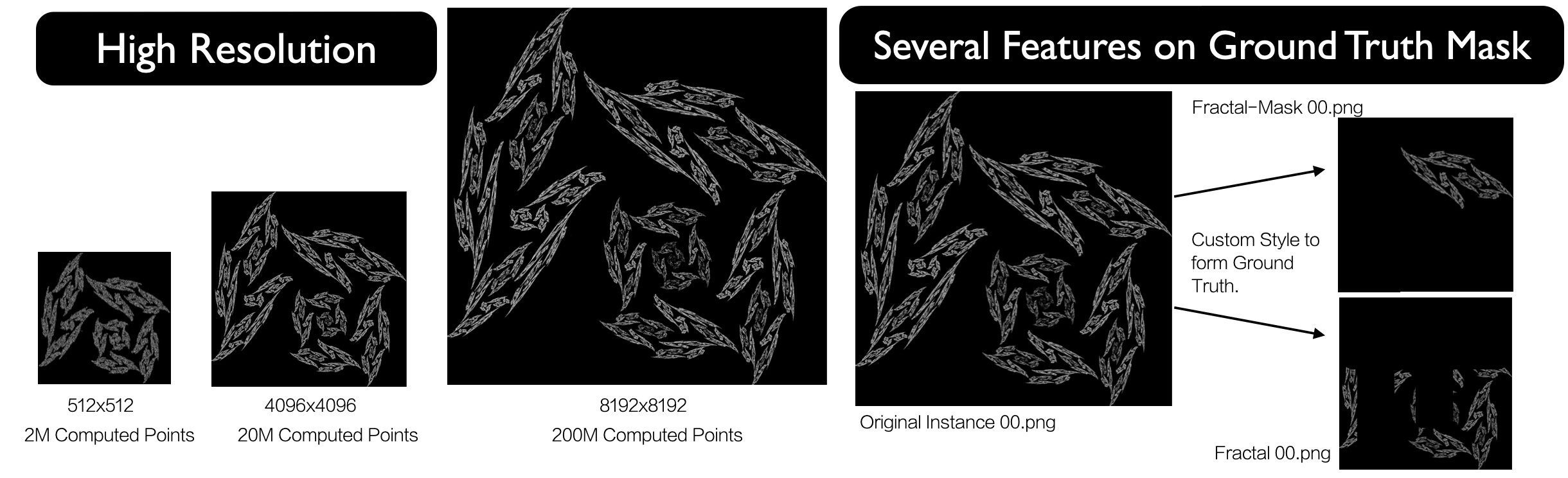

In medical image segmentation, acquiring high-quality labeled data remains a significant challenge because expert annotation is costly and time-consuming. Variability in imaging conditions, patient diversity, and the use of different imaging devices further complicate model training. The high dimensionality of medical images also imposes considerable computational demands, while small lesions or abnormalities can create class imbalance that hinders segmentation accuracy. Pre-training on synthetic datasets may enable Vision Transformers (ViTs) to develop robust feature representations that carry over to the fine-tuning phase, even when high-quality labeled data is limited. In this work, we propose integrating Formula-Driven Supervised Learning (FDSL) synthetic datasets with medical imaging to enhance pre-training for segmentation tasks. We implemented a custom fractal dataset, Style Fractals, capable of generating high-resolution images, including images of 8k × 8k pixels. After pre-training, we fine-tuned on PAIP, a high-resolution, real-world pathology dataset focused on liver cancer. Using the SAM model for segmentation together with robust augmentation techniques, performance improved from 62.30% to 63.68%. Additionally, we present results using another synthetic dataset, SegRCDB, for comparative analysis.

Edgar Josafat Martinez-Noriega, Peng Chen, Truong Thao Nguyen, Rio Yokota, "Synthetic Dataset Pre-training for Precision Medical Segmentation Using Vision Transformers," in Electronic Imaging, 2025, pp. 176-1 to 176-7, https://doi.org/10.2352/EI.2025.37.12.HPCI-176
