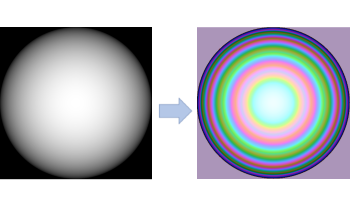

Recent advances in 3D data capture have enabled real-time acquisition of high-resolution 3D range data, even on mobile devices. However, this high bit-depth data remains difficult to transmit efficiently over a standard broadband connection. The most successful techniques for addressing this problem so far have been image-based depth encoding schemes that leverage modern image and video codecs. To our knowledge, no published work has directly optimized the end-to-end losses of a depth encoding scheme passing through a lossy image compression codec. Our compression-resilient neural depth encoding method does so: it uses deep learning to encode depth maps into 24-bit RGB representations that minimize end-to-end depth reconstruction errors when compressed with JPEG. The approach employs a fully differentiable pipeline, including a differentiable approximation of JPEG, which allows it to be trained end-to-end on the FlyingThings3D dataset with randomized JPEG qualities. On a Microsoft Azure Kinect depth recording, the neural depth encoding method significantly outperformed an existing state-of-the-art depth encoding method in both root-mean-square error (RMSE) and mean absolute error (MAE) across a wide range of image qualities, all with over 20% lower average file sizes. Our method offers an efficient solution for emerging 3D streaming and 3D telepresence applications, enabling high-quality 3D depth data storage and transmission.
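To make the problem setting concrete, the following is a minimal sketch of a naive depth-to-RGB packing and of the two error metrics named in the abstract. It is not the neural encoding described here, nor the state-of-the-art baseline it is compared against; the function names (`encode_depth_naive`, `decode_depth_naive`, `rmse`, `mae`) and the byte-splitting scheme are assumptions chosen only for illustration. The sketch shows why a naive packing is not compression-resilient: a one-level error in the high-byte channel, of the kind lossy compression can introduce, becomes a 256-unit depth error.

```python
import numpy as np

def encode_depth_naive(depth: np.ndarray) -> np.ndarray:
    """Hypothetical naive packing: 16-bit depth -> 24-bit RGB (R = high byte, G = low byte, B unused)."""
    d = depth.astype(np.uint16)
    rgb = np.zeros(d.shape + (3,), dtype=np.uint8)
    rgb[..., 0] = (d >> 8).astype(np.uint8)    # high byte
    rgb[..., 1] = (d & 0xFF).astype(np.uint8)  # low byte
    return rgb

def decode_depth_naive(rgb: np.ndarray) -> np.ndarray:
    """Inverse of encode_depth_naive."""
    high = rgb[..., 0].astype(np.uint16)
    low = rgb[..., 1].astype(np.uint16)
    return (high << 8) | low

def rmse(a: np.ndarray, b: np.ndarray) -> float:
    """Root-mean-square error between two depth maps."""
    diff = a.astype(np.float64) - b.astype(np.float64)
    return float(np.sqrt(np.mean(diff ** 2)))

def mae(a: np.ndarray, b: np.ndarray) -> float:
    """Mean absolute error between two depth maps."""
    diff = a.astype(np.float64) - b.astype(np.float64)
    return float(np.mean(np.abs(diff)))

# Toy depth map (arbitrary units), chosen so the high byte cannot overflow below.
depth = np.array([[1000, 2000], [3000, 4000]], dtype=np.uint16)
rgb = encode_depth_naive(depth)

# Stand-in for lossy compression: shift the high-byte channel by one level.
rgb_lossy = rgb.copy()
rgb_lossy[..., 0] += 1

lossless_rmse = rmse(depth, decode_depth_naive(rgb))
lossy_rmse = rmse(depth, decode_depth_naive(rgb_lossy))
lossy_mae = mae(depth, decode_depth_naive(rgb_lossy))
print(lossless_rmse, lossy_rmse, lossy_mae)
```

An encoding optimized end-to-end through the codec, as the abstract describes, aims to avoid this kind of error amplification.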

Stephen Siemonsma, Tyler Bell, "Neural Depth Encoding for Compression-Resilient 3D Compression," in Electronic Imaging, 2024, pp. 105-1–105-6, https://doi.org/10.2352/EI.2024.36.18.3DIA-105
