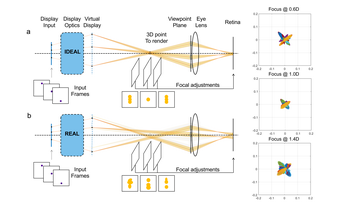

Aberrations in the optical system of a light field (LF) display can degrade its quality and impair the focusing effects in the retinal image, which is formed by the superposition of multiperspective LF views. To address this problem, we propose a method for calibrating and correcting aberrated LF displays. We use an optical model of the LF display to derive retinal image formation for a given LF input. This enables us to efficiently measure the actual viewpoint locations and the deformation of each perspective image by capturing focal-stack images during calibration. We then use the calibration coefficients to pre-warp the input images so that the aberrations are compensated. We demonstrate the effectiveness of our method on a simulated model of an aberrated near-eye LF display and show that it improves the display's optical quality and the accuracy of its focusing effects.
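The pre-warping step can be illustrated with a minimal sketch. It assumes (our assumption, not stated above) that calibration yields, for each perspective view, a dense per-pixel displacement field `dx`, `dy` in pixels describing where the aberrated optics move each displayed pixel. The function names are hypothetical and the views are grayscale NumPy arrays.

```python
import numpy as np

def bilinear_sample(img, xs, ys):
    """Sample a 2D image at float coordinates (xs, ys) with bilinear
    interpolation; coordinates outside the image are clamped to the border."""
    h, w = img.shape
    xs = np.clip(xs, 0, w - 1)
    ys = np.clip(ys, 0, h - 1)
    x0 = np.floor(xs).astype(int)
    y0 = np.floor(ys).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    fx, fy = xs - x0, ys - y0
    top = img[y0, x0] * (1 - fx) + img[y0, x1] * fx
    bot = img[y1, x0] * (1 - fx) + img[y1, x1] * fx
    return top * (1 - fy) + bot * fy

def prewarp(view, dx, dy):
    """Pre-warp one perspective view (hypothetical helper).

    Assumed forward model: the optics move displayed pixel p to p + d(p).
    Displaying I'(p) = I(p + d(p)) means the pixel landing at p + d(p)
    carries the value the undistorted view I expects at that location."""
    h, w = view.shape
    ys, xs = np.mgrid[0:h, 0:w].astype(float)
    return bilinear_sample(view, xs + dx, ys + dy)
```

Each perspective view would be pre-warped with its own measured field. Displacements that point outside the panel are clamped to the border here; a real pipeline might instead crop or mask those pixels.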

Robust multi-camera calibration is a fundamental task for all multi-view camera systems, leveraging discrete camera model fitting from sparse target observations. Stereo systems, photogrammetry and light-field arrays have all demonstrated the need for geometrically consistent calibrations to achieve higher levels of sub-pixel localization accuracy for improved depth estimation. This work presents a calibration target that leverages multi-directional features to achieve improved dense calibrations of camera systems. We begin by presenting a 2D target that uses an encoded feature set, each feature carrying 12 bits of uniqueness for flexible patterning and easy identification. These features combine orthogonal sets of straight and circular binary edges, along with Gaussian peaks. Our proposed feature extraction algorithm uses steerable filters for edge localization and ellipsoidal peak fitting for circle center estimation. Feature uniqueness is used to associate features across views, and these associations are combined into a 3D pose graph for nonlinear optimization. Existing camera models are used for intrinsic and extrinsic estimation; for stereo calibration, the mean re-projection error drops from 0.2 pixels with a traditional checkerboard to 0.01 pixels with the proposed target.
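Sub-pixel peak localization of this kind can be sketched with a quadratic surface fit. This is a stand-in for the ellipsoidal peak fitting named above, not necessarily the authors' model: a concave quadratic has elliptical level sets, so its vertex gives an ellipse-aware centre estimate. The function name and the use of a small patch around the brightest pixel are assumptions.

```python
import numpy as np

def subpixel_peak(patch):
    """Estimate a blob centre by least-squares fitting
    z = a x^2 + b xy + c y^2 + d x + e y + f to a small patch and
    returning the vertex (x, y) in patch coordinates.
    Assumes the patch contains a single peak (negative-definite fit)."""
    h, w = patch.shape
    ys, xs = np.mgrid[0:h, 0:w]
    x = xs.ravel().astype(float)
    y = ys.ravel().astype(float)
    # Design matrix for the six quadratic-surface coefficients
    A = np.column_stack([x * x, x * y, y * y, x, y, np.ones_like(x)])
    coef = np.linalg.lstsq(A, patch.ravel().astype(float), rcond=None)[0]
    a, b, c, d, e, _ = coef
    # Vertex where the gradient vanishes: [2a b; b 2c] [x, y]^T = -[d, e]^T
    H = np.array([[2 * a, b], [b, 2 * c]])
    cx, cy = np.linalg.solve(H, -np.array([d, e]))
    return cx, cy
```

Adding the patch offset to `(cx, cy)` gives the centre in image coordinates. A Gaussian or true ellipse model would fit real peaks more faithfully; the quadratic form is chosen here because it is linear in its coefficients and needs no iterative solver.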

A basic economic principle holds that productivity improvement is the main driving force for raising the living standard of the general public and companies' profit margins. By replacing analog devices with their digital counterparts, the last digital revolution has made a huge contribution to boosting labor productivity year after year despite reduced working hours. However, the labor productivity growth rate dropped to its lowest level during the Great Recession. Recognizing the long-term sustainability challenges of current economic development, a new societal paradigm has emerged in which artificial intelligence and connected devices take over well-defined tasks to achieve economies of scale and empower people to innovate and create value. Treating printing as a form of mass-customization manufacturing, we propose a dynamic imaging solution for an electrophotographic printing system with on-demand computational calibration in the spatial and tonal domains; this solution will be an enabling technology for the vision of autonomous printing.
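The tonal calibration is not detailed above. A common generic form, shown here only as a hedged sketch and not as the proposed system's method, is tone linearization: print a ramp of known input levels, measure the response, and invert the measured tone response curve into a lookup table. The function names, the normalization to [0, 1] and the strictly increasing measured response are our assumptions.

```python
import numpy as np

def build_tone_lut(levels, measured, n=256):
    """Return an n-entry LUT mapping a desired (linear) output tone to the
    input level that produces it, by inverting the measured tone response.

    levels:   input tone values printed on a test ramp, normalised to [0, 1]
    measured: normalised measured response for each patch, assumed strictly
              increasing so that the response curve is invertible
    Targets outside the measured range are clamped by np.interp."""
    levels = np.asarray(levels, dtype=float)
    measured = np.asarray(measured, dtype=float)
    targets = np.linspace(0.0, 1.0, n)
    return np.interp(targets, measured, levels)

def apply_tone_lut(image, lut):
    """Apply the LUT to an image with values in [0, 1] (nearest LUT entry)."""
    idx = np.rint(np.clip(image, 0.0, 1.0) * (len(lut) - 1)).astype(int)
    return lut[idx]
```

Re-measuring the ramp on request and rebuilding the LUT is one simple way to make such a calibration dynamic; spatial calibration would need a separate, position-dependent correction.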

Nonlinear CMOS image sensor (CIS) technology is capable of high/wide dynamic range imaging at high frame rates without motion artifacts. However, unlike linear CIS technology, nonlinear CIS technology has no generic method for colour correction. Instead, there are specific methods for specific nonlinear responses, e.g., the logarithmic response, that are based on legacy models. Inspired by recent work on generic methods for fixed pattern noise and photometric correction of nonlinear sensors, which depend only on a reasonable assumption of monotonicity, this paper proposes and validates a generic method for colour correction of nonlinear sensors. The method consists of a nonlinear colour correction, which employs cubic Hermite splines, followed by a linear colour correction. Calibration with a colour chart is required to estimate the relevant parameters. The proposed method is validated through simulation, combining experimental data from a monochromatic logarithmic CIS with spectral data, reported in the literature, for actual colour filter arrays and target colour patches.
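The two-stage structure (a per-channel nonlinear correction with cubic Hermite splines, then a linear colour correction) can be sketched as follows. The details are assumptions, not taken from the paper: the spline knots are pairs of raw sensor response and linear exposure derived from the chart, Fritsch-Carlson slopes keep the spline monotone in line with the monotonicity assumption, and the linear stage is a 3x3 matrix fitted by least squares to target colour values. All function names are hypothetical.

```python
import numpy as np

def monotone_slopes(xk, yk):
    """Fritsch-Carlson knot slopes for a monotone cubic Hermite spline.
    Assumes xk and yk are both strictly increasing."""
    xk = np.asarray(xk, dtype=float)
    yk = np.asarray(yk, dtype=float)
    delta = np.diff(yk) / np.diff(xk)          # secant slopes
    m = np.empty_like(yk)
    m[0], m[-1] = delta[0], delta[-1]
    m[1:-1] = 0.5 * (delta[:-1] + delta[1:])   # average neighbouring secants
    for k, d in enumerate(delta):              # limit slopes so each piece stays monotone
        a, b = m[k] / d, m[k + 1] / d
        s = a * a + b * b
        if s > 9.0:
            tau = 3.0 / np.sqrt(s)
            m[k], m[k + 1] = tau * a * d, tau * b * d
    return m

def hermite_eval(xk, yk, m, x):
    """Evaluate the cubic Hermite spline with knot values yk and slopes m at x."""
    xk = np.asarray(xk, dtype=float)
    yk = np.asarray(yk, dtype=float)
    k = np.clip(np.searchsorted(xk, x) - 1, 0, len(xk) - 2)
    h = xk[k + 1] - xk[k]
    t = (x - xk[k]) / h
    h00 = 2 * t**3 - 3 * t**2 + 1              # Hermite basis functions
    h10 = t**3 - 2 * t**2 + t
    h01 = -2 * t**3 + 3 * t**2
    h11 = t**3 - t**2
    return h00 * yk[k] + h10 * h * m[k] + h01 * yk[k + 1] + h11 * h * m[k + 1]

def fit_colour_matrix(lin_rgb, target):
    """Least-squares 3x3 matrix M with lin_rgb @ M ~ target (both N x 3)."""
    M, *_ = np.linalg.lstsq(lin_rgb, target, rcond=None)
    return M
```

In use, each channel's raw chart responses would first be linearized with `hermite_eval`, and the resulting N x 3 array passed to `fit_colour_matrix` together with the chart's reference colours.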

In factory and office workplaces, measuring the positions of workers is important for making the workplace visible, improving working efficiency, and avoiding misoperations and accidents. Conventional methods use a monocular fisheye camera to find and track people in the workplace, but it is difficult for these methods to obtain the 3D positions of workers. Stereo fisheye cameras have been used to measure 3D position, but calibrating these fisheye cameras is hard and time-consuming. We propose a new method to measure the 3D positions of people in a workplace using only one fisheye camera. A 360-degree fisheye image is projected onto a unit sphere, and several perspective projection images are generated to correct fisheye distortion. People are recognized in the corrected images using a machine learning method, and the recognition results are used to calculate their 3D positions in the workplace. Only a few markers are placed on the workplace floor to calibrate the mapping between the perspective projection images and the floor plane. People recognition and position measurement experiments were conducted in a workplace using Ricoh R 360-degree monocular fisheye cameras. A people recognition rate of 92.5%, a false positive rate of 0.2%, and a people position measurement accuracy of 6.6% were obtained. The evaluation results demonstrate the effectiveness of the proposed method.
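The floor calibration from a few markers maps naturally onto a plane-to-plane homography estimated with the direct linear transform (DLT). This is a standard technique offered as a sketch, not necessarily the authors' procedure; it assumes at least four markers whose pixel coordinates in one perspective projection image and whose floor coordinates are known. The function names are hypothetical.

```python
import numpy as np

def fit_homography(img_pts, floor_pts):
    """Direct linear transform: 3x3 homography H with floor ~ H @ [x, y, 1],
    estimated from >= 4 point correspondences given as N x 2 arrays."""
    rows = []
    for (x, y), (u, v) in zip(img_pts, floor_pts):
        # Each correspondence gives two linear constraints on the 9 entries of H
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    H = vt[-1].reshape(3, 3)          # right singular vector of the smallest singular value
    return H / H[2, 2]                # fix the arbitrary scale (assumes H[2, 2] != 0)

def image_to_floor(H, x, y):
    """Map an image point to floor-plane coordinates via homogeneous division."""
    u, v, w = H @ np.array([x, y, 1.0])
    return u / w, v / w
```

A person's floor position could then be taken as the mapped bottom centre of their detection box, assuming their feet touch the floor. With noisy marker detections, normalizing the coordinates before the SVD (Hartley normalization) improves conditioning.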

For several decades, many CMOS Image Sensors (CIS) with a small pixel size have used single-slope column-parallel ADCs (SS-ADCs). It is well known that the main drawback of this ADC is its conversion speed. This paper presents several ADC architectures that improve the speed of an SS-ADC, and compares them in terms of ADC resolution, power consumption, noise and conversion time.
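The speed limitation follows from the counting principle: an N-bit single-slope conversion needs up to 2^N clock cycles, and because the ramp is shared by all columns, every column waits for the full ramp. The behavioural sketch below contrasts this with an idealized two-step (coarse/fine) single-slope scheme that needs 2^M + 2^(N-M) cycles. The two-step scheme is a commonly cited example chosen only for illustration and is not necessarily one of the architectures in this paper; comparator offset, ramp nonlinearity and the overlap correction used in real two-step designs are ignored, and the function names are hypothetical.

```python
def single_slope_adc(vin, vref, n_bits):
    """Ideal single-slope conversion for 0 <= vin < vref. The ramp rises by one
    LSB per clock and the count is latched when the ramp would exceed vin,
    giving floor(vin / LSB) clamped to the code range. Returns (code, cycles);
    cycles is the full ramp length because the ramp is shared by all columns."""
    full_scale = 2 ** n_bits
    lsb = vref / full_scale
    code = 0
    while code < full_scale - 1 and (code + 1) * lsb <= vin:
        code += 1
    return code, full_scale


def two_step_adc(vin, vref, n_bits, coarse_bits):
    """Ideal two-step single-slope conversion: a coarse ramp resolves the top
    `coarse_bits`, then a fine ramp spanning one coarse step resolves the rest.
    Returns (code, cycles) with cycles = 2**M + 2**(N - M)."""
    fine_bits = n_bits - coarse_bits
    coarse_lsb = vref / 2 ** coarse_bits
    coarse = min(int(vin // coarse_lsb), 2 ** coarse_bits - 1)
    residue = vin - coarse * coarse_lsb          # input left for the fine ramp
    fine_lsb = coarse_lsb / 2 ** fine_bits
    fine = min(int(residue // fine_lsb), 2 ** fine_bits - 1)
    code = (coarse << fine_bits) | fine
    return code, 2 ** coarse_bits + 2 ** fine_bits


def conversion_time_us(cycles, f_clk_hz):
    """Conversion time in microseconds for a given number of counter clocks."""
    return cycles / f_clk_hz * 1e6
```

For a 12-bit conversion, the single-slope ramp takes 4096 cycles (8.192 µs at a 500 MHz counter clock), whereas the idealized two-step scheme with M = 6 takes 64 + 64 = 128 cycles, at the cost of extra column circuitry and tighter matching between the coarse and fine ramps.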