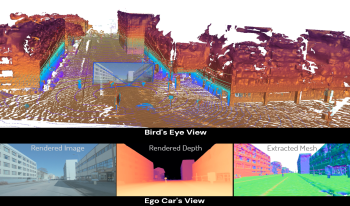

Dense 3D reconstruction has many applications in automated driving, including automated annotation validation, multi-modal data augmentation, providing ground truth annotations for systems lacking LiDAR, and enhancing auto-labeling accuracy. LiDAR provides highly accurate but sparse depth, whereas camera images enable dense depth estimation that is noisy, particularly at long ranges. In this paper, we harness the strengths of both sensors and propose a multimodal 3D scene reconstruction framework that combines neural implicit surfaces and radiance fields. In particular, our method estimates dense and accurate 3D structures and creates an implicit map representation based on signed distance fields, which can be further rendered into RGB images and depth maps. A mesh can be extracted from the learned signed distance field and culled based on occlusion. Dynamic objects are efficiently filtered on the fly during sampling using 3D object detection models. We demonstrate qualitative and quantitative results on challenging automotive scenes.
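To illustrate how a signed distance field can be rendered into a depth value, the following is a minimal sketch. It is not the method of the paper: it assumes an analytic sphere as a stand-in for the learned signed distance field and uses plain sphere tracing, whereas the abstract does not state which rendering scheme is used. The names `sphere_sdf` and `render_depth` are hypothetical.

```python
import math

def sphere_sdf(p, center=(0.0, 0.0, 5.0), radius=1.0):
    """Analytic SDF of a sphere (assumed stand-in for a learned SDF)."""
    return math.dist(p, center) - radius

def render_depth(origin, direction, sdf, max_steps=128, eps=1e-4, far=100.0):
    """Sphere tracing: step along the ray by the SDF value until the surface is hit.

    Returns the distance along the ray to the surface, or None on a miss.
    """
    norm = math.sqrt(sum(c * c for c in direction))
    unit = tuple(c / norm for c in direction)
    t = 0.0
    for _ in range(max_steps):
        point = tuple(o + t * c for o, c in zip(origin, unit))
        dist = sdf(point)
        if dist < eps:
            return t  # close enough to the zero level set
        t += dist  # the SDF value is a safe step size
        if t > far:
            break
    return None

# A ray along +z hits the sphere; a ray along +y misses it.
depth_hit = render_depth((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), sphere_sdf)
depth_miss = render_depth((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), sphere_sdf)
```

Repeating this for every pixel ray would give a dense depth map, which is the property that makes a signed distance representation attractive next to sparse LiDAR returns.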

Imitation learning is widely used in autonomous driving to train networks that predict steering commands from frames, using annotated data collected by an expert driver. Assuming that frames from a front-facing camera fully mimic the driver’s eyes raises the question of how the eyes and the attention mechanisms of the complex human vision system perceive the scene. This paper proposes incorporating eye gaze information with the frames into an end-to-end deep neural network for the lane-following task. The proposed novel architecture, GG-Net, is composed of a spatial transformer network (STN) and a multitask network that predicts the steering angle as well as the gaze map for the input frame. Experimental results show a 36% improvement in steering angle prediction accuracy over the baseline, with an inference time of 0.015 seconds per frame (66 fps) on an NVIDIA K80 GPU, enabling the proposed model to operate in real time. We argue that incorporating gaze maps enhances the model’s generalization capability to unseen environments. Additionally, a novel course-steering angle conversion algorithm with a complementing mathematical proof is proposed.

In this paper, we present an overview of automotive image quality challenges and link them to the physical properties of image acquisition. This process shows that detection probability based KPIs are a helpful tool to link image quality to the SAE-classified supported and automated driving tasks. We develop questions around the challenges of automotive image quality and show that color separation probability (CSP) and contrast detection probability (CDP) in particular are key enablers for improving the understanding and overview of the image quality optimization problem. Next, we introduce a proposal for color separation probability as a new KPI, which is based on the random effects of photon shot noise and the properties of light spectra that cause color metamerism. This allows us to demonstrate the image quality influences related to color at different stages of the image generation pipeline. In the second part, we investigate the previously presented KPI contrast detection probability and show how it links to different metrics of automotive imaging such as HDR, low-light performance and detectivity of an object. In conclusion, this paper summarizes the standardization status of these detection probability based KPIs within IEEE P2020 and outlines the next steps for these work packages.
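A detection probability based KPI of this kind can be sketched with a small Monte Carlo simulation. The sketch below rests on several assumptions that are not stated in the abstract: CDP is read as the probability that the measured contrast between two patches stays within a tolerance band around the nominal contrast, contrast is taken as Michelson contrast, and photon shot noise is approximated by a Gaussian with variance equal to the mean signal. The function names and the default tolerance are hypothetical.

```python
import math
import random

def michelson(a, b):
    """Michelson contrast between two positive signal levels."""
    return abs(a - b) / (a + b)

def estimate_cdp(mean_a, mean_b, tolerance=0.5, trials=20000, seed=0):
    """Estimate the fraction of noisy measurements whose contrast stays
    within +/- tolerance (relative) of the nominal contrast."""
    rng = random.Random(seed)
    nominal = michelson(mean_a, mean_b)
    low, high = nominal * (1 - tolerance), nominal * (1 + tolerance)
    hits = 0
    for _ in range(trials):
        # Gaussian approximation of shot noise (assumption): std = sqrt(mean).
        a = max(rng.gauss(mean_a, math.sqrt(mean_a)), 1e-9)
        b = max(rng.gauss(mean_b, math.sqrt(mean_b)), 1e-9)
        if low <= michelson(a, b) <= high:
            hits += 1
    return hits / trials

# Same nominal contrast, very different photon counts.
cdp_bright = estimate_cdp(10000, 5000)
cdp_dim = estimate_cdp(10, 5)
```

Under these assumptions the two cases share the same nominal contrast, yet the dim case yields a much lower probability, which is the kind of link between low-light performance and detectability that the abstract describes.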

Modern automobile accidents occur mostly due to inattentive driver behavior, which is why driver gaze estimation is becoming a critical component in the automotive industry. Gaze estimation poses many challenges due to the nature of the surrounding environment, such as changes in illumination, the driver’s head motion, partial face occlusion, or eye decorations. Previous work in this field includes explicit extraction of hand-crafted features, such as eye corners and pupil center, to estimate gaze, and appearance-based methods such as Convolutional Neural Networks, which implicitly extract features from an image and directly map them to the corresponding gaze angle. In this work, a multitask Convolutional Neural Network architecture is proposed to predict the subject’s gaze yaw and pitch angles, along with the head pose as an auxiliary task, making the model robust to head pose variations without needing any complex preprocessing or hand-crafted feature extraction. The network’s output is then clustered into nine gaze classes relevant to the driving scenario. The model achieves 95.8% accuracy on the test set and 78.2% accuracy in cross-subject testing, demonstrating the model’s generalization capability and robustness to head pose variation.
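The step from continuous gaze angles to nine gaze classes can be pictured with a short sketch. The abstract does not say how the clustering is performed, so the fixed 3 x 3 grid over yaw and pitch used here is purely an assumption, as are the thresholds and the function name `gaze_zone`.

```python
def gaze_zone(yaw_deg, pitch_deg, yaw_edge=15.0, pitch_edge=10.0):
    """Map a (yaw, pitch) gaze estimate in degrees to one of nine zones (0..8).

    Zones are numbered row by row on a 3 x 3 grid; zone 4 is the center.
    The grid layout and both thresholds are hypothetical.
    """
    def bin3(angle, edge):
        if angle < -edge:
            return 0
        if angle > edge:
            return 2
        return 1

    col = bin3(yaw_deg, yaw_edge)
    row = bin3(pitch_deg, pitch_edge)
    return row * 3 + col

# Example predictions as (yaw, pitch) pairs in degrees.
zones = [gaze_zone(y, p) for y, p in [(0, 0), (-30, -20), (30, 20), (30, 0)]]
```

A learned clustering, for example one fitted to labeled gaze targets in the cabin, would replace the fixed thresholds but keep the same interface of angles in and class index out.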

In autonomous driving applications, cameras are a vital sensor, as they can provide structural, semantic and navigational information about the environment of the vehicle. While image quality is a concept well understood for human viewing applications, it is not well defined for computer vision. As a result, for systems in which one video stream feeds both human viewing and computer vision, the subjective experience for human viewing has historically dominated over computer vision performance when tuning the image signal processor. However, the rise in prominence of autonomous driving and computer vision brings to the fore research on the impact of image quality in camera-based applications. In this paper, we provide results quantifying the accuracy impact of sharpening and contrast on two image feature registration algorithms and pedestrian detection. We obtain encouraging results that illustrate the merits of tuning image signal processor parameters for vision algorithms.

Semantic segmentation is an essential aspect of modern autonomous driving systems, since a precise understanding of the environment is crucial for navigation. We investigate the suitability of novel snapshot hyperspectral cameras, which capture a whole spectrum in one shot, for road scene classification. Hyperspectral data brings an advantage, as it allows a better analysis of the material properties of objects in the scene. Unfortunately, most classifiers suffer from the Hughes effect when dealing with high-dimensional hyperspectral data. Therefore, we propose a new framework of hyperspectral-based feature extraction and classification. The framework utilizes a deep autoencoder network with additional regularization terms which focus on the modeling of latent space rather than the reconstruction error to learn a new dimension-reduced representation. This new dimension-reduced spectral feature space allows the use of deep learning architectures already established on RGB datasets.