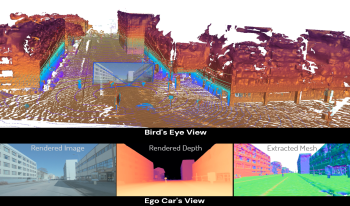

Dense 3D reconstruction has many applications in automated driving, including automated annotation validation, multimodal data augmentation, providing ground truth annotations for systems lacking LiDAR, and enhancing auto-labeling accuracy. LiDAR provides highly accurate but sparse depth, whereas camera images enable dense depth estimation that is noisy, particularly at long range. In this paper, we harness the strengths of both sensors and propose a multimodal 3D scene reconstruction framework that combines neural implicit surfaces and radiance fields. In particular, our method estimates dense and accurate 3D structure and creates an implicit map representation based on signed distance fields, which can be further rendered into RGB images and depth maps. A mesh can be extracted from the learned signed distance field and culled based on occlusion. Dynamic objects are efficiently filtered on the fly during sampling using 3D object detection models. We demonstrate qualitative and quantitative results on challenging automotive scenes.
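The abstract does not specify how dynamic objects are filtered during sampling. As a rough illustration only, one plausible reading is that ray samples falling inside detected 3D bounding boxes are discarded before they contribute to training. The sketch below assumes a hypothetical box format (center, full extents, yaw about the vertical axis) and hypothetical names `Box3D`, `inside_box`, and `filter_samples`; none of these come from the paper.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


@dataclass
class Box3D:
    """Hypothetical detector output: box center, full extents, yaw about z."""
    cx: float
    cy: float
    cz: float
    length: float  # full extent along the box's local x axis
    width: float   # full extent along the box's local y axis
    height: float  # full extent along z
    yaw: float     # rotation about z, in radians


def inside_box(p: Point, box: Box3D) -> bool:
    """Return True if point p lies inside the yaw-oriented box."""
    dx, dy, dz = p[0] - box.cx, p[1] - box.cy, p[2] - box.cz
    c, s = math.cos(box.yaw), math.sin(box.yaw)
    # Rotate the offset by -yaw to express it in the box's local frame.
    lx = c * dx + s * dy
    ly = -s * dx + c * dy
    return (
        abs(lx) <= box.length / 2
        and abs(ly) <= box.width / 2
        and abs(dz) <= box.height / 2
    )


def filter_samples(
    origin: Point,
    direction: Point,
    depths: Sequence[float],
    boxes: Sequence[Box3D],
) -> List[float]:
    """Keep only the sample depths whose 3D point is outside every box."""
    kept = []
    for t in depths:
        p = (
            origin[0] + t * direction[0],
            origin[1] + t * direction[1],
            origin[2] + t * direction[2],
        )
        if not any(inside_box(p, b) for b in boxes):
            kept.append(t)
    return kept


# A ray along +x passing through a detected vehicle centered 10 m ahead.
car = Box3D(cx=10.0, cy=0.0, cz=0.0, length=4.0, width=2.0, height=2.0, yaw=0.0)
static_depths = filter_samples((0.0, 0.0, 0.0), (1.0, 0.0, 0.0),
                               [1.0, 5.0, 9.0, 10.0, 11.0, 15.0], [car])
```

Here the samples at 9, 10, and 11 m fall inside the box and are dropped, while the remaining samples are kept. A practical system would likely vectorize this test over all rays and boxes on the GPU; the per-point loop is only for readability.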

Shihao Shen, Louis Kerofsky, Varun Ravi Kumar, Senthil Yogamani, "Neural Rendering Based Urban Scene Reconstruction For Autonomous Driving," in Electronic Imaging, 2024, pp. 365-1 to 365-6, https://doi.org/10.2352/EI.2024.36.17.AVM-365
