As 3D imaging for cultural heritage continues to evolve, it is important to step back and assess both the objective and the subjective attributes of image quality. The delivery and interchange of 3D content today is reminiscent of the early days of the analog-to-digital photography transition, when practitioners struggled to maintain quality for online and print representations. Traditional 2D photographic documentation techniques have matured thanks to decades of collective photographic knowledge and the development of international standards that support global archiving and interchange. Because of this maturity, still photography techniques and existing standards play a key role in shaping 3D standards for delivery, archiving and interchange. This paper outlines specific techniques for leveraging ISO 19264-1 objective image quality analysis to validate 3D color rendition, and methods for translating important aesthetic camera and lighting techniques from physical photographic studio sets to rendered 3D scenes. Creating high-fidelity reference still photography of collection objects, as a benchmark for assessing the image quality of 3D renders and online representations, has helped and will continue to help bridge the current gaps between 2D and 3D imaging practice. The accessible techniques outlined in this paper have vastly improved the rendition of online 3D objects and will be presented in a companion workshop.
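The color-validation idea can be illustrated with a per-patch color-difference check between a reference photograph of a color target and a 3D render of the same target. This is a simplified stand-in, not the ISO 19264-1 procedure: it uses the basic CIE76 ΔE*ab (Euclidean distance in CIELAB) rather than the metric and tolerances the standard specifies, and it assumes both images have already been measured and converted to CIELAB. The patch names and values are hypothetical.

```python
import math
from typing import Dict, Tuple

# A CIELAB color as (L*, a*, b*).
Lab = Tuple[float, float, float]


def delta_e76(reference: Lab, measured: Lab) -> float:
    """CIE76 color difference: Euclidean distance between two CIELAB values."""
    return math.sqrt(sum((r - m) ** 2 for r, m in zip(reference, measured)))


def compare_patches(reference: Dict[str, Lab], rendered: Dict[str, Lab]) -> Dict[str, float]:
    """Summarize color error over target patches present in both measurements.

    `reference` holds values measured from the still reference photograph and
    `rendered` holds values measured from the 3D render (hypothetical inputs).
    """
    shared = sorted(set(reference) & set(rendered))
    if not shared:
        raise ValueError("no patches in common between reference and render")
    errors = [delta_e76(reference[p], rendered[p]) for p in shared]
    return {
        "patches": float(len(errors)),
        "mean_delta_e": sum(errors) / len(errors),
        "max_delta_e": max(errors),
    }


if __name__ == "__main__":
    # Hypothetical measurements for two neutral patches of a color target.
    ref = {"A1": (50.0, 0.0, 0.0), "B1": (60.0, 0.0, 0.0)}
    render = {"A1": (50.0, 3.0, 4.0), "B1": (60.0, 0.0, 0.0)}
    print(compare_patches(ref, render))
```

Pass/fail thresholds would come from the standard or a project's own aim values; the sketch only reports the numbers.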

In this paper we introduce MISHA3D, a set of tools that enable 3D surface capture using the MISHA multispectral imaging system. MISHA3D uses a novel multispectral photometric stereo algorithm to estimate normal, height, and RGB albedo maps as part of the standard multispectral imaging workflow. The maps can be visualized and analyzed directly, or rendered as realistic, interactive digital surrogates using standard graphics APIs. Our hope is that these tools will significantly increase the usefulness of the MISHA system for librarians, curators, and scholars studying historical and cultural heritage artifacts.
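For readers unfamiliar with photometric stereo, the classic single-band Lambertian formulation (Woodham's method) is sketched below. It is not the MISHA3D multispectral algorithm, which the abstract describes as novel; it only shows how per-pixel normals and albedo follow from images lit from known directions. It assumes at least three images taken under known, non-coplanar unit light directions, a Lambertian surface, and no shadows or highlights. Integrating the normals into a height map is not shown.

```python
import numpy as np


def lambertian_photometric_stereo(images: np.ndarray, light_dirs: np.ndarray):
    """Estimate unit normals and albedo from K images under K known lights.

    images:     array of shape (K, H, W), linear intensities.
    light_dirs: array of shape (K, 3), unit light direction vectors.
    Returns (normals of shape (H, W, 3), albedo of shape (H, W)).
    """
    k, h, w = images.shape
    intensities = images.reshape(k, -1).astype(float)  # (K, H*W)
    # Lambertian model: I = L @ g, with g = albedo * normal for each pixel.
    g, *_ = np.linalg.lstsq(light_dirs, intensities, rcond=None)  # (3, H*W)
    albedo = np.linalg.norm(g, axis=0)
    # Normalize g to unit normals, leaving zero-albedo pixels as zero vectors.
    normals = np.divide(g, albedo, out=np.zeros_like(g), where=albedo > 0)
    return normals.T.reshape(h, w, 3), albedo.reshape(h, w)


if __name__ == "__main__":
    # Synthetic check: one tilted normal, constant albedo 0.8, three lights.
    lights = np.array([[0.0, 0.0, 1.0], [1.0, 0.0, 1.0], [0.0, 1.0, 1.0]])
    lights /= np.linalg.norm(lights, axis=1, keepdims=True)
    n_true = np.array([0.3, 0.2, 1.0]) / np.linalg.norm([0.3, 0.2, 1.0])
    shading = np.clip(lights @ n_true, 0.0, None)
    imgs = 0.8 * shading[:, None, None] * np.ones((3, 2, 2))
    normals, albedo = lambertian_photometric_stereo(imgs, lights)
    print(normals[0, 0], albedo[0, 0])
```

With more than three lights the least-squares solve averages over the extra observations, which is one reason many-image capture setups help.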

In 2024, the VR180 3D short film “Love Letter to Skating” was produced as part of a Curtin University HIVE Summer Internship project undertaken by Curtin University student Cassandra Edwards (Cass for short). The film explores Cass’s fascination with skating since childhood. It was shot in Hyde Park, a beautiful inner-city park in Perth, Western Australia, with extensive gardens and large overhanging trees. The production was filmed using a Canon R5C camera fitted with a Canon Dual Fisheye lens. This paper focuses on the stereoscopic post-production workflow. All stereoscopic content filmed natively with dual-lens cameras has some level of stereoscopic alignment error. In post-production, the native dual-fisheye 8K footage from the camera was converted to equirectangular format using the Canon EOS VR Utility software. The equirectangular VR180 3D footage was then rectified using Stereoscopic Movie Maker V2. The rectified footage was imported into Adobe Premiere, where it was edited and combined with sound, music and graphics for the final production; computer graphics were composited into the film at the correct depth within Premiere. The film premiered on 8 November 2024 at MINA 2024, the 13th International Mobile Innovation Screening and Smartphone Film Festival.
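Stereo rectification mainly removes vertical disparity between the two eyes, since horizontal disparity carries the depth and must be preserved. As a simplified, hypothetical sketch (not the algorithm used by Stereoscopic Movie Maker or Canon EOS VR Utility), the vertical misalignment can be modeled as a small roll, a vertical scale and a vertical offset, fitted by least squares to matched feature points. The matched points are assumed to come from a separate feature matcher, and for equirectangular footage this planar model is only an approximation.

```python
import numpy as np


def _design_matrix(right_pts: np.ndarray) -> np.ndarray:
    # Columns: x_right (small-roll term), y_right (vertical scale), 1 (offset).
    return np.column_stack([right_pts[:, 0], right_pts[:, 1], np.ones(len(right_pts))])


def fit_vertical_alignment(left_pts, right_pts) -> np.ndarray:
    """Fit y_left ~= a * x_right + b * y_right + c over matched points.

    left_pts, right_pts: arrays of shape (N, 2) holding (x, y) pixel positions
    of the same features in the left and right eye. Returns (a, b, c).
    """
    left = np.asarray(left_pts, float)
    right = np.asarray(right_pts, float)
    coeffs, *_ = np.linalg.lstsq(_design_matrix(right), left[:, 1], rcond=None)
    return coeffs


def vertical_disparity(left_pts, right_pts, coeffs=None) -> np.ndarray:
    """Per-match vertical disparity, raw (coeffs=None) or after correction."""
    left = np.asarray(left_pts, float)
    right = np.asarray(right_pts, float)
    predicted = right[:, 1] if coeffs is None else _design_matrix(right) @ coeffs
    return left[:, 1] - predicted


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    right = rng.uniform(0, 4000, size=(50, 2))
    left = right.copy()
    left[:, 0] += rng.uniform(-40, 40, size=50)  # horizontal (depth) disparity, kept
    left[:, 1] = 0.002 * right[:, 0] + 1.001 * right[:, 1] + 3.5  # simulated misalignment
    coeffs = fit_vertical_alignment(left, right)
    print(coeffs, np.abs(vertical_disparity(left, right, coeffs)).max())
```

Only the y coordinate is modeled, so the fitted correction leaves horizontal disparity, and therefore perceived depth, untouched.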

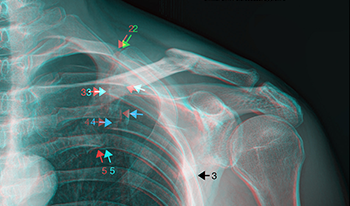

We present several stereoscopic 3D radiographs obtained in a clinical setting using a technique that requires minimal operator training and no new technology. Reviewing the known perceptual advantages of stereoscopic imaging, we argue for its benefits in diagnosis and treatment planning, primarily in orthopedics, with opportunities likely extending to rheumatology, oncology, and angiology (vascular medicine/surgery). These advantages come at the marginal cost of capturing just two or three supplementary radiographs using the proposed method. At present, computed tomography (CT) scanning is the standard for obtaining 3D imagery. We discuss the relative advantages of stereoscopic 3D radiography (3DSR) in imaging resolution, cost, availability, and radiation dose. We also describe obstacles and challenges likely to be encountered in the clinical implementation of 3DSR, which we propose to mitigate through targeted training of clinicians and technicians. Further research is needed to explore and empirically validate the potential value of 3DSR. We hope to pave the way for this more accessible and cost-effective 3D imaging solution, enhancing diagnostic capabilities and treatment planning, especially in resource-constrained settings. (All 3D radiographs presented in this paper are in red/cyan anaglyph format. 3D anaglyph glasses are commonly available online or in most comic book stores. This report’s images are also available in left-right stereo-pair format, suitable for viewing with a 3D screen or stereoscope, at this URL: https://www.starosta.com/3DSR/)
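A red/cyan anaglyph of a grayscale stereo pair can be composed by placing the left-eye image in the red channel and the right-eye image in the green and blue (cyan) channels. The minimal sketch below assumes two already aligned, same-sized 8-bit grayscale radiographs; it illustrates the anaglyph format and is not necessarily how the images in this paper were produced.

```python
import numpy as np


def red_cyan_anaglyph(left: np.ndarray, right: np.ndarray) -> np.ndarray:
    """Combine a grayscale stereo pair into an (H, W, 3) red/cyan anaglyph.

    The red lens of the glasses (left eye) passes the red channel; the cyan
    lens (right eye) passes the green and blue channels.
    """
    left = np.asarray(left)
    right = np.asarray(right)
    if left.ndim != 2 or left.shape != right.shape:
        raise ValueError("expected two grayscale images of identical shape")
    return np.stack([left, right, right], axis=-1)
```

The result keeps the input dtype, so a uint8 pair yields a uint8 RGB array that an imaging library such as Pillow (`Image.fromarray`) can save directly.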

This paper will present the story of a collaborative project between the Imaging Department and the Paintings Conservation Department of the Metropolitan Museum of Art to use 3D imaging technology to restore missing and broken elements of an intricately carved giltwood frame from the late 18th century.

Advancements in accurate digitization of 3D objects through photogrammetry are ongoing in the cultural heritage space, for the purposes of digital archiving and worldwide access. This paper outlines and documents several user-driven enhancements to the photogrammetry pipeline that improve the fidelity of digitizations. In particular, we introduce Kintsugi 3D, a new platform for capturing empirically based specularity of 3D models, and visually compare traditional photogrammetry results with this new technique. Kintsugi 3D is a free and open-source package that can, among other things, generate a set of textures for a 3D model, including normal and specularity maps, based empirically on ground-truth observations from a flash-on-camera image set. We hope that the ongoing development of Kintsugi 3D will improve public access for institutions interested in sharing high-fidelity photogrammetry.
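With a flash mounted on the camera, light and view directions coincide, so the Blinn-Phong half vector equals the view vector and both the diffuse and specular terms depend only on n·v. The hypothetical sketch below uses this to fit diffuse and specular coefficients for one surface point from several flash observations, solving a linear least-squares problem for each exponent on a grid. It is not Kintsugi 3D's algorithm; it assumes intensities that are already linear and corrected for flash distance and exposure, and the Blinn-Phong lobe is chosen purely for illustration.

```python
import numpy as np


def fit_flash_specular(cos_nv, intensity, exponents=range(1, 201)):
    """Fit I ~= kd * cos + ks * cos**m for a co-located flash and camera.

    cos_nv:    cosine between surface normal and view direction per observation.
    intensity: observed linear intensity per observation (same length).
    Returns (kd, ks, m) minimizing the squared error over the exponent grid.
    """
    c = np.clip(np.asarray(cos_nv, float), 0.0, 1.0)
    y = np.asarray(intensity, float)
    best = None
    for m in exponents:
        # For a fixed exponent the model is linear in kd and ks.
        a = np.column_stack([c, c ** m])
        coeffs, *_ = np.linalg.lstsq(a, y, rcond=None)
        err = float(np.sum((a @ coeffs - y) ** 2))
        if best is None or err < best[0]:
            best = (err, float(coeffs[0]), float(coeffs[1]), m)
    _, kd, ks, m = best
    return kd, ks, m
```

Observations need to span a range of n·v values; a flat patch photographed from a single viewpoint gives one cosine and cannot separate the diffuse and specular terms.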

Simplification of 3D meshes is a fundamental part of most 3D workflows, reducing the amount of data to make it more manageable for users. Unprocessed data contains many redundancies and small errors introduced during 3D acquisition, which can often be removed safely without jeopardizing the data's function. Several algorithmic approaches are used across 3D applications, each with its own benefits and drawbacks, and there is currently no standardized simplification algorithm for cultural heritage. This investigation statistically evaluates how geometric primitive shapes behave under different simplification approaches, and assesses what information might be lost in Heritage Building Information Modeling (HBIM) or change-monitoring processes for cultural heritage if each approach is applied to more complex manifolds.
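To make the kind of information loss at stake concrete, the sketch below implements one of the simplest simplification approaches, uniform-grid vertex clustering, and reports how far original vertices moved. It is an illustrative choice, not necessarily one of the algorithms evaluated in this investigation. The reported maximum vertex shift is only a crude proxy; a proper evaluation would use surface-to-surface measures such as the Hausdorff distance.

```python
import numpy as np


def vertex_cluster_simplify(vertices, faces, cell_size):
    """Simplify a triangle mesh by uniform-grid vertex clustering.

    vertices: (N, 3) float array; faces: (M, 3) int array of vertex indices.
    All vertices falling in the same grid cell are merged into their centroid.
    Returns (new_vertices, new_faces, max_shift), where max_shift is the largest
    distance any original vertex moved.
    """
    v = np.asarray(vertices, float)
    f = np.asarray(faces, np.int64)
    cells = np.floor(v / cell_size).astype(np.int64)
    _, labels = np.unique(cells, axis=0, return_inverse=True)
    labels = labels.reshape(-1)  # keep 1-D across NumPy versions
    counts = np.bincount(labels)
    new_v = np.zeros((counts.size, 3))
    np.add.at(new_v, labels, v)  # sum of vertices per cluster
    new_v /= counts[:, None]     # cluster centroids
    new_f = labels[f]
    # Drop triangles that collapsed to an edge or a point.
    keep = (new_f[:, 0] != new_f[:, 1]) & (new_f[:, 1] != new_f[:, 2]) & (new_f[:, 0] != new_f[:, 2])
    new_f = new_f[keep]
    if len(new_f):
        # Remove duplicate triangles while preserving the original winding.
        _, first = np.unique(np.sort(new_f, axis=1), axis=0, return_index=True)
        new_f = new_f[np.sort(first)]
    max_shift = float(np.linalg.norm(v - new_v[labels], axis=1).max())
    return new_v, new_f, max_shift
```

Any feature smaller than `cell_size`, such as a chamfered edge on a primitive, is merged into a single vertex, which is the kind of loss that matters for HBIM and change monitoring.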

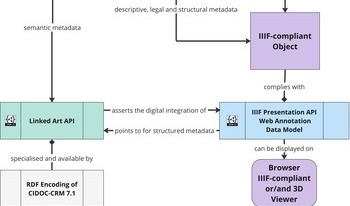

This paper addresses a concern of the digital heritage field: 3D objects are increasingly prevalent but still lack a standardized workflow for long-term preservation and dissemination. We set out a series of recommendations for establishing such a workflow, basing our approach on interdisciplinary collaborations and a comprehensive literature review. We provide a set of heuristics consisting of six components: data acquisition; data preservation; data description; data curation and processing; data dissemination; and data interoperability, analysis and exploration. Each component is supplemented by suggested standards and tools that are either already common in 3D practice or show high potential but still need consensus to formalize a 3D environment fit for the Humanities, such as the efforts carried out by the International Image Interoperability Framework (IIIF). We then present a conceptual high-level 3D workflow that relies heavily on standards adhering to the Linked Open Usable Data (LOUD) design principles.
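As a small illustration of the data preservation and data description components, the sketch below builds a JSON sidecar record with a SHA-256 fixity value for a 3D file. The field names are hypothetical and follow no particular standard; a real workflow would map them onto the community schemas and LOUD-aligned vocabularies recommended here. `model/gltf-binary` is the registered media type for binary glTF.

```python
import hashlib
import json
from datetime import datetime, timezone


def preservation_record(filename: str, data: bytes,
                        media_type: str = "model/gltf-binary",
                        description: str = "") -> dict:
    """Build a minimal, hypothetical preservation/description record for a 3D file."""
    return {
        "filename": filename,
        "mediaType": media_type,                     # media type of the file
        "sizeBytes": len(data),
        "sha256": hashlib.sha256(data).hexdigest(),  # fixity value for later audits
        "description": description,
        "recordedAt": datetime.now(timezone.utc).isoformat(),
    }


if __name__ == "__main__":
    record = preservation_record("example.glb", b"abc", description="test payload")
    print(json.dumps(record, indent=2))
```

For a real file the bytes would be read with, for example, `pathlib.Path(path).read_bytes()`; recomputing the hash later and comparing it with the stored value detects silent corruption.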

We describe a novel method for monocular view synthesis. The goal of our work is to create a visually pleasing set of horizontally spaced views from a single image, which can be applied to view synthesis for virtual reality and glasses-free 3D displays. Previous methods produce realistic results on images that show a clear distinction between a foreground object and the background. We aim to create novel views of more general, crowded scenes in which there is no such distinction. Our main contribution is a computationally efficient method for realistic occlusion inpainting and blending, especially in complex scenes. Our method can be applied effectively to arbitrary images, as we show both qualitatively and quantitatively on a large dataset of stereo images. It performs natural disocclusion inpainting and maintains the shape and edge quality of foreground objects.
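The disocclusion problem can be seen in the basic depth-image-based rendering (DIBR) step. The sketch below is a generic baseline, not the method described here. It assumes a per-pixel disparity map is already available (for example from a monocular depth estimator, not shown). Pixels are forward-warped horizontally with a z-buffer so that nearer pixels win, and exposed holes are filled from the neighboring pixel that lies farther away, since disoccluded regions belong to the background. The `shift` parameter, a hypothetical per-view offset, would be varied to produce a set of horizontally spaced views.

```python
import numpy as np


def forward_warp(image, disparity, shift):
    """Move pixels horizontally by shift * disparity; nearer pixels (larger
    disparity) overwrite farther ones. Returns (warped, warped_disp, holes)."""
    h, w = disparity.shape
    warped = np.zeros_like(image)
    warped_disp = np.full((h, w), -np.inf)
    for y in range(h):
        for x in range(w):
            d = disparity[y, x]
            xt = int(np.floor(x + shift * d + 0.5))  # round to nearest column
            if 0 <= xt < w and d > warped_disp[y, xt]:
                warped_disp[y, xt] = d
                warped[y, xt] = image[y, x]
    holes = np.isneginf(warped_disp)
    return warped, warped_disp, holes


def fill_from_background(warped, warped_disp, holes):
    """Fill each hole with the nearest valid pixel on the background side."""
    out = warped.copy()
    h, w = holes.shape
    for y in range(h):
        for x in np.flatnonzero(holes[y]):
            left = x - 1
            while left >= 0 and holes[y, left]:
                left -= 1
            right = x + 1
            while right < w and holes[y, right]:
                right += 1
            candidates = [c for c in (left, right) if 0 <= c < w]
            if candidates:
                # Smaller disparity means farther away, i.e. background.
                src = min(candidates, key=lambda c: warped_disp[y, c])
                out[y, x] = warped[y, src]
    return out
```

Naive row-wise filling like this produces the streaked backgrounds and ragged foreground edges that more careful inpainting and blending are meant to avoid, particularly in crowded scenes.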