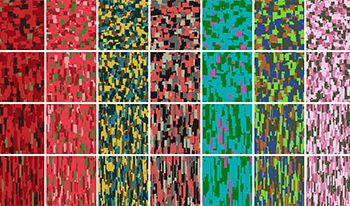

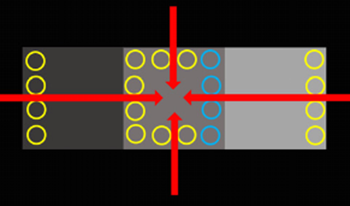

The authors explore the influence of the structure of a texture image on the perception of its color composition through a series of psychophysical studies. They estimate the color composition of a texture by extracting its dominant colors and the associated percentages. They then synthesize new textures with the same color composition but different geometric structural patterns. They conduct empirical studies in the form of two-alternative forced choice tests to determine the influence of two structural factors, pattern scale and shape, on the perceived amount of target color. The results of their studies indicate that (a) participants are able to consistently assess differences in color composition for textures of similar shape and scale, and (b) the perception of color composition is nonveridical. Pattern scale and shape have a strong influence on perceived color composition: the larger the scale, the higher the perceived amount of the target color, and the more elongated the shape, the lower the perceived amount of the target color. The authors also present a simple model that is consistent with the results of their empirical studies by accounting for the reduced visibility of the pixels near the color boundaries. In addition to a better understanding of human perception of color composition, their findings will contribute to the development of color texture similarity metrics.
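The reported direction of the scale and shape effects can be illustrated with a toy calculation. The sketch below is not the authors' model: it assumes, purely for illustration, that a target-colored pixel contributes nothing if any of its four neighbors has a different color, and full weight otherwise. The helper names (`make_grid`, `physical_fraction`, `interior_fraction`) and the 8x8 test patterns are hypothetical.

```python
def make_grid(size, rects, target=1, background=0):
    """Build a size x size grid and paint half-open rectangles (r0, r1, c0, c1) with the target color."""
    grid = [[background] * size for _ in range(size)]
    for r0, r1, c0, c1 in rects:
        for r in range(r0, r1):
            for c in range(c0, c1):
                grid[r][c] = target
    return grid


def physical_fraction(grid, target):
    """Fraction of all pixels that carry the target color."""
    total = sum(len(row) for row in grid)
    return sum(row.count(target) for row in grid) / total


def interior_fraction(grid, target):
    """Fraction of all pixels that are target-colored and have no
    4-neighbor of a different color (assumed boundary discount)."""
    rows, cols = len(grid), len(grid[0])
    count = 0
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != target:
                continue
            on_boundary = False
            for rr, cc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
                inside = 0 <= rr < rows and 0 <= cc < cols
                if inside and grid[rr][cc] != target:
                    on_boundary = True
            if not on_boundary:
                count += 1
    return count / (rows * cols)


# Three patterns with the same physical amount of target color (16 of 64 pixels).
large_square = make_grid(8, [(2, 6, 2, 6)])
small_squares = make_grid(8, [(1, 3, 1, 3), (1, 3, 5, 7), (5, 7, 1, 3), (5, 7, 5, 7)])
bar = make_grid(8, [(3, 5, 0, 8)])

for name, grid in (("large square", large_square),
                   ("small squares", small_squares),
                   ("elongated bar", bar)):
    print(name, physical_fraction(grid, 1), interior_fraction(grid, 1))
```

Under this assumed discount, the large square keeps some interior pixels while the small squares and the elongated bar keep none, which matches the direction of the reported effects (larger scale raises, and elongation lowers, the perceived amount).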

Humans are adept at perceiving biological motion for purposes such as the discrimination of gender. Observers classify the gender of a walker at significantly above chance levels from a point-light distribution of joint trajectories. However, performance drops to chance level or below for vertically inverted stimuli, a phenomenon known as the inversion effect. This lack of robustness may reflect either a generic learning mechanism that has been exposed to insufficient instances of inverted stimuli or the activation of specialized mechanisms that are pre-tuned to upright stimuli. To address this issue, the authors compare the psychophysical performance of humans with the computational performance of neuromimetic machine-learning models in the classification of gender from gait, using the same biological motion stimulus set for both. Experimental results demonstrate significant similarities. First, kinematic motion cues predominate over structural cues in determining classification accuracy. Second, learning is expressed in the presence of the inversion effect in the models as in humans, suggesting that humans may use generic learning systems in the perception of biological motion in this task. Finally, modifications based on human perception are applied to the model, mitigating the inversion effect and improving accuracy. The study proposes a paradigm for the investigation of human gender perception from gait and makes use of perceptual characteristics to develop a robust artificial gait classifier for potential applications such as clinical movement analysis.

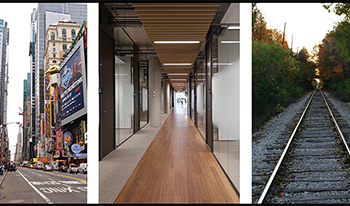

For the visual world in which we operate, the core issue is to conceptualize how its three-dimensional structure is encoded through the neural computation of multiple depth cues and their integration into a unitary depth structure. One approach to this issue is the full Bayesian model of scene understanding, but this is shown to require selection from an implausibly large number of possible scenes. An alternative approach is to propagate the implied depth structure solution for the scene through the “belief propagation” algorithm on general probability distributions. Here, a more efficient model of local slant propagation is developed instead.

The overall depth percept must be derived from the combination of all available depth cues, but a simple linear summation rule across, say, a dozen different depth cues, would massively overestimate the perceived depth in the scene in cases where each cue alone provides a close-to-veridical depth estimate. On the other hand, a Bayesian averaging or “modified weak fusion” model for depth cue combination does not provide for the observed enhancement of perceived depth from weak depth cues. Thus, the current models do not account for the empirical properties of perceived depth from multiple depth cues.
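The two failure modes described above can be made concrete with a small numerical sketch. It assumes the standard textbook forms of the two rules (a plain sum, and an inverse-variance weighted average as a stand-in for the Bayesian or "modified weak fusion" rule). The function names, cue values, and noise levels are illustrative assumptions and are not taken from the paper.

```python
def linear_sum(estimates):
    """Simple linear summation of the depth signalled by each cue."""
    return sum(estimates)


def reliability_weighted_average(estimates, sigmas):
    """Inverse-variance weighted average of the cue estimates
    (assumed form of the averaging rule)."""
    weights = [1.0 / (s * s) for s in sigmas]
    weighted = sum(w * e for w, e in zip(weights, estimates))
    return weighted / sum(weights)


true_depth = 1.0

# Twelve cues, each close to veridical on its own.
strong_cues = [true_depth] * 12
print(linear_sum(strong_cues))                                  # 12x the true depth
print(reliability_weighted_average(strong_cues, [0.1] * 12))    # stays at the true depth

# Three weak cues, each signalling only 20% of the true depth.
weak_cues = [0.2, 0.2, 0.2]
print(linear_sum(weak_cues))                                    # grows with each added cue
print(reliability_weighted_average(weak_cues, [0.5] * 3))       # never exceeds a single cue
```

Summation overshoots when the individual cues are already accurate, while averaging can never exceed the largest single estimate, so it cannot produce enhancement when weak cues are added.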

The present analysis shows that these problems can be addressed by an asymptotic, or hyperbolic Minkowski, approach to cue combination. With appropriate parameters, this first-order rule gives strong summation for a few depth cues, but the effect of an increasing number of cues beyond that remains too weak to account for the available degree of perceived depth magnitude. Finally, an accelerated asymptotic rule is proposed to match the empirical strength of perceived depth as measured, with appropriate behavior for any number of depth cues.

One of the primary functions of visual perception is to represent, estimate, and evaluate the properties of material surfaces in the visual environment. One such property is surface color, which can convey important information about ecologically relevant object characteristics such as the ripeness of fruit and the emotional reactions of humans in social interactions. This paper further develops and applies a neural model (Rudd, 2013, 2017) of how the human visual system represents the light/dark dimension of color—known as lightness—and computes the colors of achromatic material surfaces in real-world spatial contexts. Quantitative lightness judgments conducted with real surfaces viewed under Gelb (i.e., spotlight) illumination are analyzed and simulated using the model. According to the model, luminance ratios form the inputs to ON- and OFF-cells, which encode local luminance increments and decrements, respectively. The response properties of these cells are here characterized by physiologically motivated equations in which different parameters are assumed for the two cell types. Under non-saturating conditions, ON-cells respond in proportion to a compressive power law of the local incremental luminance in the image that causes them to respond, while OFF-cells respond linearly to local decremental luminance. ON- and OFF-cell responses to edges are log-transformed at a later stage of neural processing and then integrated across space to compute lightness via an edge integration process that can be viewed as a neurally elaborated version of Land’s retinex model (Land & McCann, 1971). 
It follows from the model assumptions that the perceptual weights (interpreted as neural gain factors) that the model observer applies to steps in log luminance at edges during edge integration are the product of two factors. The first is a polarity-dependent factor (factor 1), by which incremental steps in log luminance are weighted by a value less than 1.0 and decremental steps are weighted by 1.0. The second is a distance-dependent factor (factor 2), whose edge weightings are estimated by fitting perceptual data. The model accounts quantitatively (to within experimental error) for the following: lightness constancy failures observed when the illumination level on a simultaneous contrast display is changed (Zavagno, Daneyko, & Liu, 2018); the degree of dynamic range compression in the staircase-Gelb paradigm (Cataliotti & Gilchrist, 1995; Zavagno, Annan, & Caputo, 2004); partial releases from compression that occur when the staircase-Gelb papers are reordered (Zavagno, Annan, & Caputo, 2004); and the larger compression release that occurs when the display is surrounded by a white border (Gilchrist & Cataliotti, 1994).
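The weighting scheme described above (a polarity factor multiplied by a distance factor, applied to steps in log luminance) can be sketched as a weighted sum over edges. This is a simplified illustration rather than the published model: it omits the ON- and OFF-cell response stage, and the increment weight of 0.5, the uniform default distance weights, the base-10 logarithm, and the convention that regions are ordered from the most remote one to the target are all assumptions made for the example.

```python
import math


def edge_integrated_log_lightness(luminances, increment_weight=0.5, distance_weights=None):
    """Weighted sum of log-luminance steps along a path of regions.

    luminances: region luminances ordered from the most remote region to
        the target (last element); this ordering is an assumed convention.
    increment_weight: hypothetical polarity weight (< 1.0) for incremental steps;
        decremental steps are weighted by 1.0.
    distance_weights: one hypothetical weight per edge; defaults to 1.0 each.
    """
    if len(luminances) < 2:
        raise ValueError("need at least two regions to form an edge")
    n_edges = len(luminances) - 1
    if distance_weights is None:
        distance_weights = [1.0] * n_edges
    if len(distance_weights) != n_edges:
        raise ValueError("need exactly one distance weight per edge")

    total = 0.0
    for i in range(n_edges):
        # Step in log luminance across edge i, directed toward the target.
        step = math.log10(luminances[i + 1] / luminances[i])
        polarity = increment_weight if step > 0 else 1.0
        total += polarity * distance_weights[i] * step
    return total


# A single incremental edge is compressed; a decremental edge of equal size is not.
print(edge_integrated_log_lightness([10.0, 100.0]))
print(edge_integrated_log_lightness([100.0, 10.0]))
```

Because incremental steps receive a smaller weight than decremental steps of the same size, a sum of this form compresses the range of computed lightness for targets brighter than their surrounds, which is the kind of asymmetry the abstract relates to dynamic range compression.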