Rapid economic development and shifts in consumption place higher demands on the efficient circulation of industrial products in transit. Speeding up goods circulation while ensuring the safety and quality of finished products has become an important issue, yet relevant research is still lacking. To address missing barcodes, box damage, and brand errors in industrial products within the park, this paper proposes an industrial packaging surface barcode and damage detection method based on the GoogLeNet network. First, Delta Machine Vision (DMV) products capture and quickly read barcodes from six directions. Second, a corresponding training set is created through sample and data collection. The training set categorizes damage into three types: holes, cracks, and indentations. Image enhancement and data expansion are then applied to the dataset. Finally, an improved GoogLeNet model is designed by combining the GoogLeNet architecture with regularization, aiming to facilitate feature extraction and training on images under the interference of packaging surface patterns. This design achieves a damage identification accuracy of 96.63%, which is 14.66%, 3.72%, 14.05%, and 12.78% higher than that of the convolutional neural networks AlexNet, GoogLeNet, VGG, and ResNet, respectively.
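
The abstract does not say which data expansion operations were used. As a minimal sketch, assuming simple label-preserving flips and a 90-degree rotation (the function names and the tiny 2x2 sample are hypothetical), expanding a labelled damage dataset could look like this:

```python
from typing import List, Tuple

Image = List[List[int]]  # grayscale image stored as rows of pixel values


def flip_horizontal(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def flip_vertical(img: Image) -> Image:
    """Mirror the image top to bottom."""
    return [row[:] for row in img[::-1]]


def rotate_90_clockwise(img: Image) -> Image:
    """Rotate by 90 degrees clockwise: reverse the row order, then transpose."""
    return [list(col) for col in zip(*img[::-1])]


def expand_dataset(samples: List[Tuple[Image, str]]) -> List[Tuple[Image, str]]:
    """Return each original sample plus three transformed copies with the same label."""
    expanded = []
    for img, label in samples:
        variants = (
            img,
            flip_horizontal(img),
            flip_vertical(img),
            rotate_90_clockwise(img),
        )
        for variant in variants:
            expanded.append((variant, label))
    return expanded


# Hypothetical 2x2 "image" labelled with one of the three damage types.
samples = [([[1, 2], [3, 4]], "crack")]
augmented = expand_dataset(samples)
print(len(augmented))
```

Each source image yields four training samples here. Whether a given transform is appropriate depends on the packaging images, so the choice of transforms is an assumption rather than the paper's recipe.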

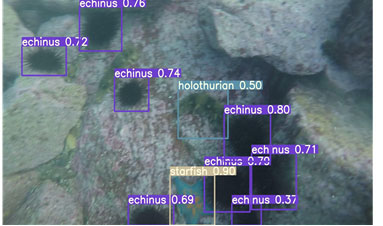

This paper proposes an underwater object detection algorithm based on lightweight structure optimization to address the low detection accuracy and difficult deployment in underwater robot dynamic inspection caused by low light, blurriness, and low contrast. The algorithm builds upon YOLOv7 by incorporating the attention mechanism of the convolutional module into the backbone network to enhance feature extraction in low light and blurred environments. Furthermore, the feature fusion enhancement module is optimized to control the shortest and longest gradient paths for fusion, improving the feature fusion ability while reducing network complexity and size. The output module of the network is also optimized to improve convergence speed and detection accuracy for underwater fuzzy objects. Experimental verification using real low-light underwater images demonstrates that the optimized network improves the object detection accuracy (mAP) by 11.7%, the detection rate by 2.9%, and the recall rate by 15.7%. Moreover, it reduces the model size by 20.2 MB with a compression ratio of 27%, making it more suitable for deployment in underwater robot applications.
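
The size figures can be made concrete with a short calculation. This sketch assumes that "compression ratio of 27%" means the 20.2 MB saving is 27% of the original model size; the abstract does not define the term, so the implied sizes are approximate.

```python
def implied_sizes(reduction_mb: float, compression_ratio: float) -> tuple:
    """Return (original, optimized) model sizes in MB.

    Assumes compression_ratio = reduction / original size.
    """
    original_mb = reduction_mb / compression_ratio
    optimized_mb = original_mb - reduction_mb
    return original_mb, optimized_mb


original_mb, optimized_mb = implied_sizes(20.2, 0.27)
print(f"original ~ {original_mb:.1f} MB, optimized ~ {optimized_mb:.1f} MB")
# prints "original ~ 74.8 MB, optimized ~ 54.6 MB"
```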

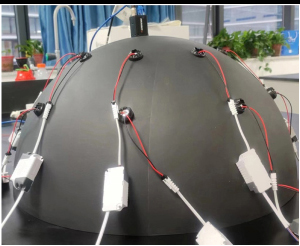

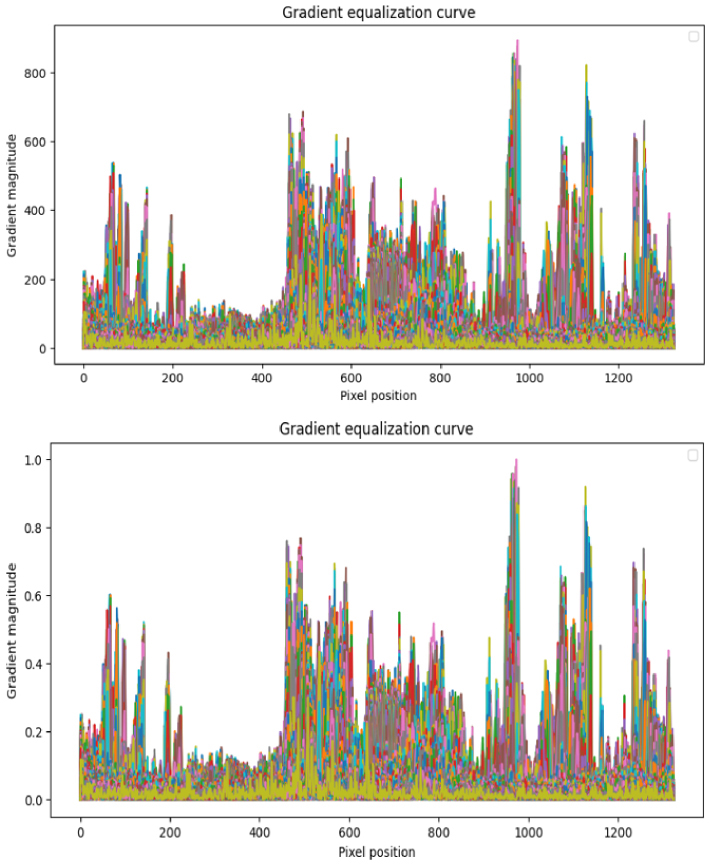

As quality requirements in industrial production rise, surface inspection has gradually become an indispensable step in workpiece production. Traditional methods for textured paper inspection suffer from low efficiency and large error. To address this, we propose a machine-vision-based composite inspection method: “photometric stereo vision + fast Fourier enhancement + feature fusion”. First, because images from a traditional CCD camera contain obvious noise and scratches that are difficult to distinguish from the background texture, we use a photometric stereo vision measurement algorithm to obtain the surface gradient information of the textured paper, and then apply a secondary enhancement of the image through the Fourier transform in the spatial and frequency domains. Second, because scratches on textured paper are difficult to detect, their features are difficult to extract, and the threshold boundary is difficult to define, we propose dynamic threshold segmentation based on multi-feature fusion to detect surface scratches. Experiments on more than 300 different textured papers show that the proposed composite inspection method is feasible and advantageous.
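
To illustrate how photometric stereo yields surface gradient information, here is a minimal per-pixel sketch. It assumes a Lambertian surface and exactly three known light directions, which is the textbook formulation and not necessarily the paper's setup; the light directions, albedo, and function names are hypothetical.

```python
import math


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a, b):
    """Solve the 3x3 system a @ x = b with Cramer's rule."""
    d = det3(a)
    if abs(d) < 1e-12:
        raise ValueError("light directions must be linearly independent")
    solution = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        solution.append(det3(m) / d)
    return solution


def normal_and_albedo(lights, intensities):
    """Lambertian model: intensity_k = albedo * dot(light_k, normal)."""
    g = solve3(lights, intensities)           # g = albedo * normal
    albedo = math.sqrt(sum(v * v for v in g))
    return [v / albedo for v in g], albedo


def surface_gradient(normal):
    """For a surface z(x, y), the normal is proportional to (-dz/dx, -dz/dy, 1)."""
    nx, ny, nz = normal
    return -nx / nz, -ny / nz


# Synthetic pixel: known normal and albedo rendered under three unit light vectors.
lights = [[0.0, 0.0, 1.0], [0.6, 0.0, 0.8], [0.0, 0.6, 0.8]]
raw = [-0.1, 0.2, 1.0]
norm = math.sqrt(sum(v * v for v in raw))
true_normal = [v / norm for v in raw]
true_albedo = 0.5
intensities = [true_albedo * sum(l * n for l, n in zip(light, true_normal))
               for light in lights]

normal, albedo = normal_and_albedo(lights, intensities)
p, q = surface_gradient(normal)
print(round(albedo, 3), round(p, 3), round(q, 3))
```

The recovered gradients `p` and `q` are the kind of per-pixel information that can make shallow scratches stand out from printed texture, since a scratch changes surface slope while a printed pattern mostly changes albedo.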

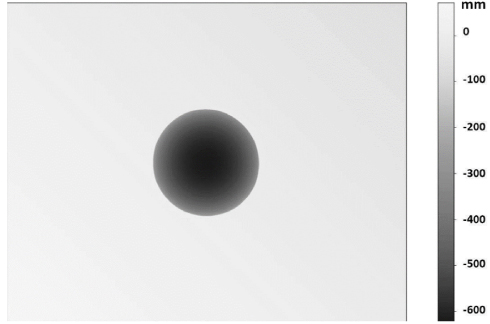

The 3D acquisition of an indoor scene with colorimetric information is currently achieved by vision systems using structured light. Existing solutions are specific to small scenes at short distances. For larger scenes, where the analysed objects are more than 4 m from the acquisition system, these solutions do not enable scene acquisition with a measurement error of the order of a millimetre. Building on existing algorithms in the literature, we propose Bi-Frequency and Gray Code Phase Shifting (B-GCPS), a method that combines two structured light algorithms. The main idea is to use several reference planes to minimize the measurement error. The method uses the Gray Code + Phase Shifting (GC+PS) algorithm to assist in acquiring the reference planes and selecting the most relevant ones. Then, the Bi-frequency algorithm estimates the 3D coordinates of the scene with a very low measurement error, thanks to the reference planes acquired previously. The proposed method makes it possible to acquire long-distance scenes larger than 2 m in width and length with a very low measurement error. It reduces the measurement error on the three axes (X, Y, Z) by at least 400, to the order of a millimetre.
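
The GC+PS building block can be sketched for a single pixel. This is the generic textbook combination, assuming a four-step phase shift of pi/2 per pattern and a Gray code that indexes the fringe period; it does not reproduce the reference-plane selection or the Bi-frequency stage of B-GCPS, and all names and values are illustrative.

```python
import math


def gray_encode(n: int) -> int:
    """Binary-reflected Gray code of a non-negative integer."""
    return n ^ (n >> 1)


def gray_decode(g: int) -> int:
    """Inverse of gray_encode."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def wrapped_phase(i0: float, i1: float, i2: float, i3: float) -> float:
    """Four-step phase shifting with I_k = A + B * cos(phi + k * pi / 2).

    Then I_3 - I_1 = 2B sin(phi) and I_0 - I_2 = 2B cos(phi).
    """
    return math.atan2(i3 - i1, i0 - i2) % (2 * math.pi)


def absolute_phase(wrapped: float, gray_code: int) -> float:
    """Unwrap using the fringe order decoded from the Gray code patterns."""
    return wrapped + 2 * math.pi * gray_decode(gray_code)


# Synthetic pixel: fringe order 5, phase 1.2 rad inside that fringe.
true_order, true_phi = 5, 1.2
offset, amplitude = 100.0, 50.0
shots = [offset + amplitude * math.cos(true_phi + k * math.pi / 2) for k in range(4)]

phi = wrapped_phase(*shots)
total = absolute_phase(phi, gray_encode(true_order))
print(round(phi, 6), round(total, 6))
```

The phase-shifted images give a precise but periodic phase, and the Gray code resolves which period the pixel belongs to, which is why the two algorithms are commonly paired.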

Obtaining a large number of unqualified product samples in industrial production is an arduous task, and learning features from few-shot object images is challenging. To work with a limited number of original images, we developed a transfer learning method called LDFISB (Large-scale Dataset to Few-Shot Image with Similar Background). LDFISB is first trained on a large-scale dataset such as CIFAR100; the model is then fine-tuned from the pre-trained model and parameters to perform classification on a new auto part surface dataset (APSD). Batch normalization, padding, and Weighted Cross Entropy Loss are employed during training. Hyper-parameters are configured according to Hyper-table to enhance prediction accuracy. CIFAR10, CIFAR100, and ImageNet were considered as pre-training datasets, and the LDFISB method is capable of accurately predicting the flaw area of the product image. It achieves its highest accuracy with CIFAR100 as the pre-training dataset.
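
A weighted cross-entropy loss is a common way to compensate for scarce defect samples. The sketch below is a plain-Python illustration, assuming per-class weights and normalization by the sum of the weights of the samples in the batch; the weights and logits are made up, and the paper's exact formulation is not given in the abstract.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over one list of logits."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum for z in logits]


def weighted_cross_entropy(batch_logits, targets, class_weights):
    """Weighted mean of -w[y] * log p(y), divided by the sum of the weights used."""
    total, weight_sum = 0.0, 0.0
    for logits, target in zip(batch_logits, targets):
        w = class_weights[target]
        total += -w * log_softmax(logits)[target]
        weight_sum += w
    return total / weight_sum


# Hypothetical two-class batch: class 0 = qualified, class 1 = flawed (rare).
batch_logits = [[2.0, 0.0], [2.0, 0.0]]   # the model favours class 0 both times
targets = [0, 1]                           # the second sample is actually flawed

unweighted = weighted_cross_entropy(batch_logits, targets, [1.0, 1.0])
weighted = weighted_cross_entropy(batch_logits, targets, [1.0, 5.0])
print(round(unweighted, 4), round(weighted, 4))
```

With the rare class weighted five times higher, the misclassified flawed sample dominates the loss, which pushes fine-tuning to pay more attention to the few defect images.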

Forest fires wreak havoc on natural ecosystems and represent a grave threat to environmental stability. Establishing a rapid and efficient network for the early detection of forest fires remains a critical challenge and a focal point of research. In response to this problem, this paper proposes Fire & Smoke - You Only Look Once (FS-YOLO) for real-time forest fire detection. FS-YOLO significantly enhances fire detection performance through the integration of three innovative modules: Mixed Attention Cross Stage Partial (MACSP), Cross Stage Feature Pyramid Network (CSFPN), and Scalable Spatial Pyramid Pooling (SSPP). First, the MACSP module targets diverse colors and shapes characteristic of forest fires. By combining channel attention with local spatial attention, it precisely weights the network’s features, achieving greater accuracy in capturing fire characteristics. Second, the CSFPN method merges high-level semantic information with low-level detail via both top-down and bottom-up pathways, creating multi-scale feature maps that boast expanded receptive fields. Lastly, the SSPP method enhances the network’s focus on fire targets across varied scenes through scaling factors, bolstering the model’s robustness. Additionally, this paper organizes and annotates a forest fire dataset. The experimental results show that compared to the baseline model, FS-YOLO achieves an 8% improvement in mean average precision, and the average precision values for flames and smoke increase by 10.1% and 5.7%, respectively, indicating a significant overall performance improvement of the model. Compared to other object detection algorithms, FS-YOLO consistently achieves optimal performance.
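
The reported gains can be cross-checked with a short calculation. Assuming the dataset has exactly two classes (flames and smoke) and that the gains are absolute percentage points, both of which are assumptions, the mAP gain should equal the mean of the per-class AP gains, because the mean is linear.

```python
def mean_average_precision(ap_per_class: dict) -> float:
    """mAP is the unweighted mean of per-class average precision values."""
    return sum(ap_per_class.values()) / len(ap_per_class)


# Per-class AP improvements quoted in the abstract, in percentage points.
ap_gain = {"flame": 10.1, "smoke": 5.7}

# The difference of two means equals the mean of the per-class differences.
map_gain = mean_average_precision(ap_gain)
print(f"{map_gain:.1f}")
```

The result is about 7.9, consistent with the stated 8% improvement in mean average precision after rounding.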

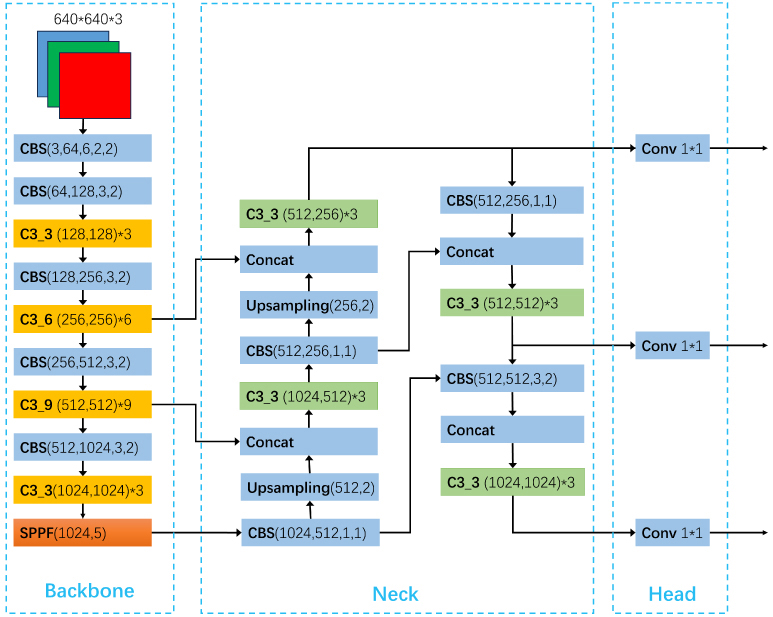

Object detection has been used in a wide range of industries. For example, in autonomous driving, the task of object detection is to accurately and efficiently identify and locate a large number of predefined classes of object instances (vehicles, pedestrians, traffic signs, etc.) from road videos. In robotics, an industrial robot needs to recognize specific machine elements. In the security field, a camera should accurately recognize people’s faces. With the wide application of deep learning, the accuracy and efficiency of object detection have greatly improved, but object detection based on deep learning still faces challenges. Different applications have different requirements, including highly accurate detection, multi-category object detection, real-time detection, and robustness to occlusions. To address these challenges, and based on extensive literature research, this paper analyzes methods for improving and optimizing mainstream object detection algorithms from the perspective of the evolution of one-stage and two-stage object detection algorithms. Furthermore, it proposes methods for improving object detection accuracy from the perspective of changing receptive fields. The new model is based on the original YOLOv5 (You Only Look Once) with some modifications: the structure of the head of YOLOv5 is modified by adding asymmetrical pooling layers. As a result, the accuracy of the algorithm is improved while its speed is maintained. The performance of the new model is compared with that of the original YOLOv5 model and analyzed using several parameters. In addition, the new model is evaluated under four scenarios. Finally, a summary and outlook on open problems and future research directions are presented.
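
To show what an asymmetrical pooling layer does to the receptive field, here is a minimal plain-Python sketch of max pooling with a non-square window. It assumes stride 1 and no padding; the kernel sizes and the feature map are illustrative and are not taken from the paper.

```python
def max_pool2d(feature_map, kernel_h, kernel_w):
    """Max pooling with a kernel_h x kernel_w window, stride 1, no padding."""
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    for r in range(rows - kernel_h + 1):
        pooled_row = []
        for c in range(cols - kernel_w + 1):
            window = [feature_map[r + dr][c + dc]
                      for dr in range(kernel_h)
                      for dc in range(kernel_w)]
            pooled_row.append(max(window))
        pooled.append(pooled_row)
    return pooled


feature_map = [
    [1, 5, 2, 0],
    [3, 1, 4, 6],
    [0, 2, 7, 1],
]

wide = max_pool2d(feature_map, 1, 3)   # 1x3 window: widens the horizontal receptive field
tall = max_pool2d(feature_map, 3, 1)   # 3x1 window: widens the vertical receptive field
print(wide)   # [[5, 5], [4, 6], [7, 7]]
print(tall)   # [[3, 5, 7, 6]]
```

A 1x3 window aggregates context along one axis only, so elongated objects can be covered without the cost of a full 3x3 window in both directions.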

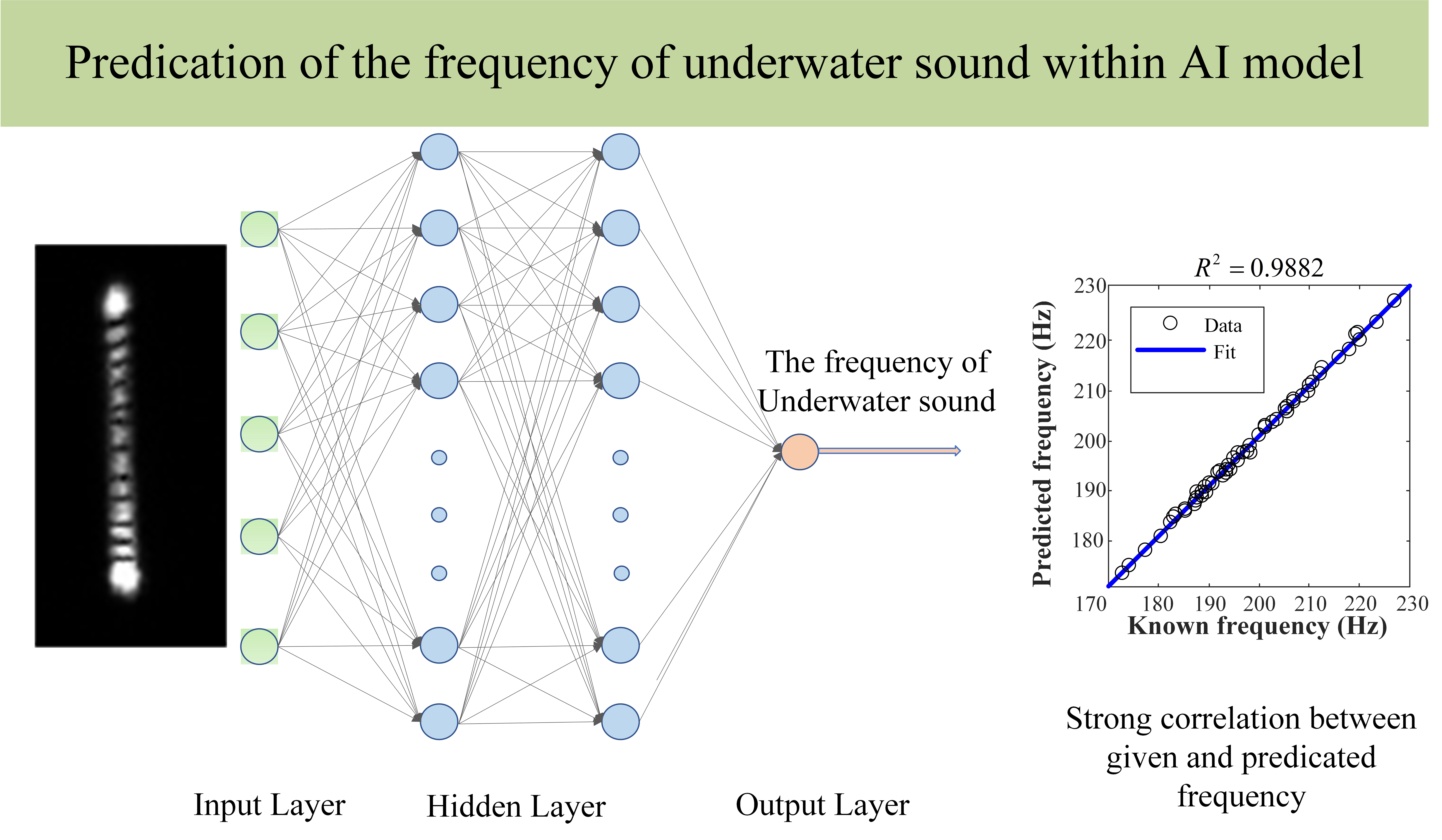

To evaluate underwater sound, a simple non-invasive optical technique based on self-interference to detect low-frequency underwater acoustic signals was demonstrated. Clear self-interference fringes of a laser beam reflected from the surface capillary wave transformed from a low-frequency underwater acoustic signal by a cylinder were observed. This study was also aimed at developing an artificial neural network (ANN) model with input from an optical pattern. The relationship between the optical pattern and the frequency of underwater sound was established, and an optimal combination of hyper-parameters was obtained. By analyzing the fringe region and the fringe interval, the frequency of the underwater acoustic signal and its relative amplitude were measured. A model based on physical optics modified the fringe distribution function, and the theoretical fit with the modified function was in good agreement with experimental observation. The BP-ANN model exhibited good performance in establishing the relationship between the optical pattern and underwater sound.

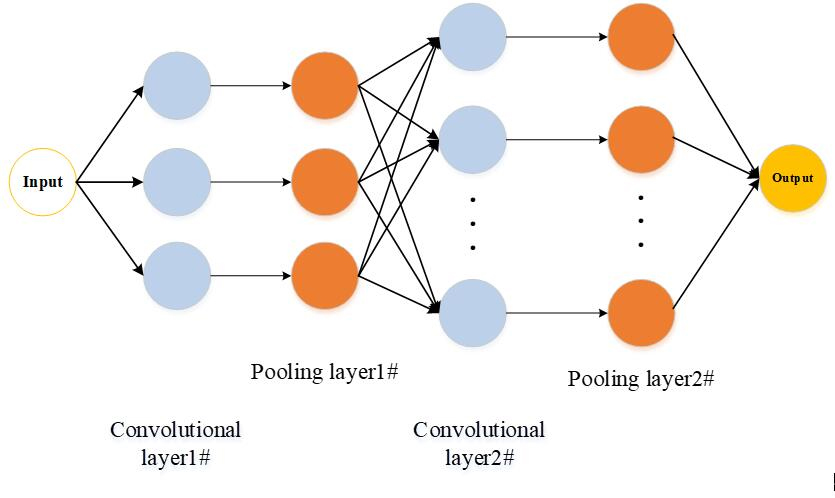

With advances in deep learning technology, the study and application of Human Action Recognition (HAR) systems in competitive sports have evolved, becoming more profound and diverse. These systems have demonstrated the potential to enhance athletes’ training and competitive performance while introducing innovation and progress into sports education and entertainment. This paper addresses the practical needs of sports training by designing a HAR system tailored to competitive sports scenarios, subsequently analyzing its recognition performance and applied models. The primary contribution of this paper lies in its exploration of HAR technology through Convolutional Neural Networks (CNNs) in the context of competitive sports. It systematically investigates and applies HAR requirements in competitive settings. Additionally, this paper evaluates the real-world performance of AlexNet and GoogleNet, constructs a CNN-based HAR system, and assesses its capabilities using publicly available datasets. These efforts provide valuable insights and technical support for the implementation of CNN-based HAR technology in competitive sports and other related fields, offering both academic and practical applications. The results indicate that different models achieve recognition accuracies of 94.45%, 95.04%, 93.01%, 93.23%, and 90.54% under five distinct decision-level fusion equations (A# ∼E#, respectively). Following fine-tuning and optimization, the recognition accuracy of AlexNet, GoogleNet, and ResNet networks significantly improved, with the model achieving a remarkable 99.94% accuracy in recognizing and analyzing the same athlete. In comparison to alternative algorithms, the designed HAR system prioritizes immediacy and interactivity while offering superior accuracy and broader application potential. It successfully fulfills its intended function, accurately recognizing human actions from video images, thereby proving invaluable for research in competitive sports.
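
The abstract does not define the five decision-level fusion equations. As an illustration of the general idea only, the sketch below fuses per-class scores from two models with a weighted average and with a normalized product. Both rules, the class names, and the scores are assumptions and may not correspond to any of A# to E#.

```python
import math


def fuse_average(score_lists, weights=None):
    """Weighted average of per-class scores across models."""
    if weights is None:
        weights = [1.0 / len(score_lists)] * len(score_lists)
    n_classes = len(score_lists[0])
    return [sum(w * scores[c] for w, scores in zip(weights, score_lists))
            for c in range(n_classes)]


def fuse_product(score_lists):
    """Per-class product of scores across models, renormalized to sum to 1."""
    n_classes = len(score_lists[0])
    fused = [math.prod(scores[c] for scores in score_lists) for c in range(n_classes)]
    total = sum(fused)
    return [v / total for v in fused]


def predict(fused_scores):
    """Index of the class with the highest fused score."""
    return max(range(len(fused_scores)), key=fused_scores.__getitem__)


actions = ["run", "jump", "throw"]       # hypothetical action classes
model_a = [0.7, 0.2, 0.1]                # e.g. softmax output of one CNN
model_b = [0.3, 0.6, 0.1]                # e.g. softmax output of another CNN

avg = fuse_average([model_a, model_b])
prod = fuse_product([model_a, model_b])
print(actions[predict(avg)], actions[predict(prod)])
```

Decision-level fusion combines the final class scores of separately trained models, so different fusion rules can be compared without retraining the underlying networks.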