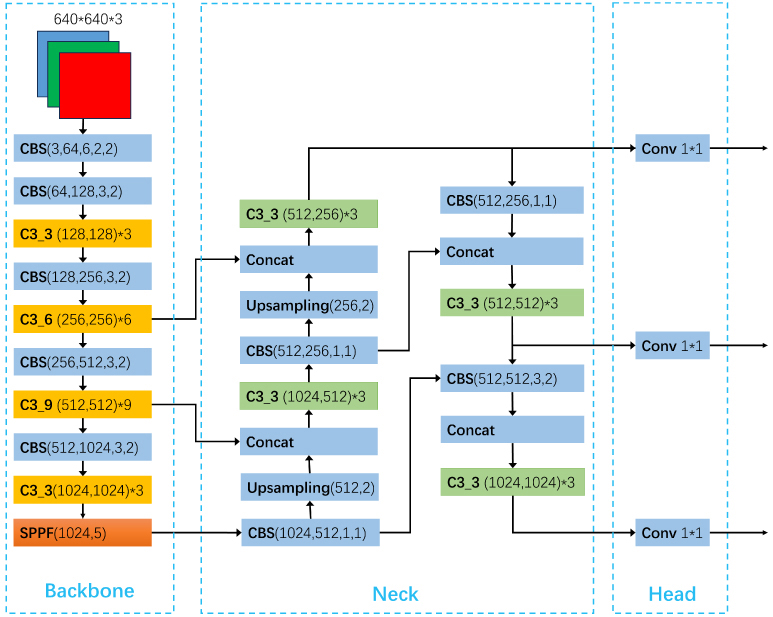

Object detection is used in a wide range of industries. In autonomous driving, for example, object detection must accurately and efficiently identify and locate instances of a large number of predefined object classes (vehicles, pedestrians, traffic signs, etc.) in road videos. In robotics, industrial robots need to recognize specific machine elements. In security, cameras must accurately recognize people’s faces. The wide adoption of deep learning has greatly improved the accuracy and efficiency of object detection, but deep-learning-based detection still faces challenges. Different applications impose different requirements, including high detection accuracy, multi-category detection, real-time performance, and robustness to occlusion. To address these challenges, this paper draws on an extensive literature review to analyze methods for improving and optimizing mainstream object detection algorithms, tracing the evolution of one-stage and two-stage detectors. Furthermore, it proposes a way to improve detection accuracy by changing the shape of receptive fields. The new model is a modified version of YOLOv5 (You Only Look Once) in which asymmetrical pooling layers are added to the head. As a result, accuracy improves while detection speed is maintained. The new model is compared with the original YOLOv5 using several evaluation metrics and is further evaluated under four scenarios. Finally, the paper summarizes the open problems and outlines directions for future research.
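The abstract does not specify the exact pooling configuration used in the modified head. As a rough illustration of how asymmetrical pooling changes the shape of a receptive field, the following pure-Python sketch (not the authors' implementation) applies stride-1 max pooling with a square k×k window, a horizontal 1×k window, and a vertical k×1 window to the same feature map. The helper names `max_pool2d` and `multi_shape_pool` and the window size `k` are hypothetical. In an actual YOLOv5 head, such layers would more likely be PyTorch `nn.MaxPool2d` modules with a rectangular `kernel_size` and matching `padding`.

```python
from typing import Dict, List

Grid = List[List[float]]


def max_pool2d(fmap: Grid, kh: int, kw: int) -> Grid:
    """Stride-1 max pooling with a kh x kw window and 'same' output size.

    Positions outside the map are ignored (equivalent to padding with -inf),
    so the output has the same height and width as the input.
    """
    if kh % 2 == 0 or kw % 2 == 0:
        raise ValueError("this sketch assumes odd window sizes")
    h, w = len(fmap), len(fmap[0])
    ph, pw = kh // 2, kw // 2
    out: Grid = []
    for i in range(h):
        row = []
        for j in range(w):
            # Collect every value inside the (clipped) window centred at (i, j).
            window = [
                fmap[y][x]
                for y in range(max(0, i - ph), min(h, i + ph + 1))
                for x in range(max(0, j - pw), min(w, j + pw + 1))
            ]
            row.append(max(window))
        out.append(row)
    return out


def multi_shape_pool(fmap: Grid, k: int = 5) -> Dict[str, Grid]:
    """Hypothetical helper: pool one map with three differently shaped windows."""
    return {
        "square": max_pool2d(fmap, k, k),      # isotropic receptive field
        "horizontal": max_pool2d(fmap, 1, k),  # wide, flat receptive field
        "vertical": max_pool2d(fmap, k, 1),    # tall, narrow receptive field
    }


if __name__ == "__main__":
    # A single strong activation in the centre of a 5x5 map.
    fmap = [[0.0] * 5 for _ in range(5)]
    fmap[2][2] = 1.0
    for name, pooled in multi_shape_pool(fmap, k=3).items():
        print(name)
        for row in pooled:
            print(row)
```

With `k=3`, the square window spreads the central activation over a 3×3 block, the horizontal window spreads it only along its row, and the vertical window only along its column. Each output position therefore "sees" a differently shaped region of the input, which is the intuition behind combining multi-shape receptive fields for elongated objects.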

Pengfei Li, Wei Wei, Yu Yan, Rong Zhu, LokHin Fung, Muchen Li, "Accurate Object Detection on Feature Maps with Multi-Shape Receptive Field," in Journal of Imaging Science and Technology, 2024, pp. 1-10, https://doi.org/10.2352/J.ImagingSci.Technol.2024.68.3.030403
