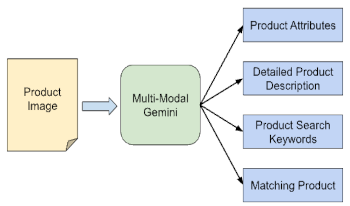

We present the application of a Multimodal Large Language Model, specifically Gemini, in automating product image analysis for the retail industry. We demonstrate how Gemini's ability to generate text based on mixed image-text prompts enables two key applications: 1) Product Attribute Extraction, where various attributes of a product in an image are extracted using open or closed vocabularies and used for downstream analytics by retailers, and 2) Product Recognition, where a product in a user-provided image is identified, and its corresponding product information is retrieved from a retailer's search index and returned to the user. In both cases, Gemini acts as a powerful and easily customizable recognition engine, simplifying the processing pipeline for retailers' developer teams. Traditionally, these tasks required multiple models (object detection, OCR, attribute classification, embedding, etc.) working together, as well as extensive custom data collection and domain expertise. With Gemini, these tasks are reduced to writing a set of prompts and straightforward logic to connect their outputs.
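
The closed-vocabulary attribute extraction described above can be illustrated with a minimal sketch. It only builds a text prompt and validates a model's JSON answer against the allowed values; it does not call any model. The function names, the prompt wording, and the JSON answer format are assumptions made for illustration and are not taken from the paper.

```python
import json


def build_attribute_prompt(vocab):
    """Build the text part of a mixed image-text prompt (hypothetical wording).

    vocab maps an attribute name to its list of allowed values (closed vocabulary).
    """
    lines = [
        "Extract the following attributes from the product image.",
        "Answer with a single JSON object. Use null when an attribute is not visible.",
    ]
    for attr, allowed in vocab.items():
        lines.append(f"- {attr}: one of {', '.join(allowed)}")
    return "\n".join(lines)


def parse_attributes(response_text, vocab):
    """Keep only values that belong to the closed vocabulary; everything else becomes None."""
    data = json.loads(response_text)
    if not isinstance(data, dict):
        data = {}
    return {
        attr: (data.get(attr) if data.get(attr) in allowed else None)
        for attr, allowed in vocab.items()
    }
```

Validating the answer against the vocabulary is one simple form of the "straightforward logic" that connects prompt outputs to downstream analytics: values outside the vocabulary are dropped rather than passed on.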

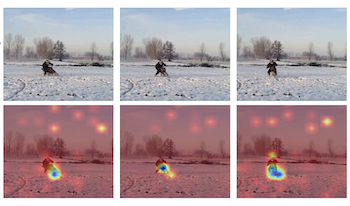

Pre-trained vision-language models, exemplified by CLIP, have exhibited promising zero-shot capabilities across various downstream tasks. Trained on image-text pairs, CLIP is naturally extendable to video-based action recognition, due to the similarity between processing images and video frames. To leverage this inherent synergy, numerous efforts have been directed towards adapting CLIP for action recognition tasks in videos. However, the specific methodologies for this adaptation remain an open question. Common approaches include prompt tuning and fine-tuning with or without extra model components on video-based action recognition tasks. Nonetheless, such adaptations may compromise the generalizability of the original CLIP framework and also necessitate the acquisition of new training data, thereby undermining its inherent zero-shot capabilities. In this study, we propose zero-shot action recognition (ZAR) by adapting the CLIP pre-trained model without the need for additional training datasets. Our approach leverages the entropy minimization technique, utilizing the current video test sample and augmenting it with varying frame rates. We encourage the model to make consistent decisions, and use this consistency to dynamically update a prompt learner during inference. Experimental results demonstrate that our ZAR method achieves state-of-the-art zero-shot performance on the Kinetics-600, HMDB51, and UCF101 datasets.
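
The consistency objective described above can be sketched as follows, assuming NumPy. Several views of one test video are sampled at different frame rates, each view is scored against the class prompts, and the entropy of the averaged prediction serves as the quantity to minimize. The function names, the stride-based sampling, and the choice of marginal entropy over averaged predictions are illustrative assumptions, not the authors' implementation; the prompt-learner update itself is omitted.

```python
import numpy as np


def softmax(logits, axis=-1):
    """Numerically stable softmax."""
    z = logits - logits.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def sample_frames(num_frames, stride, clip_len):
    """Frame indices for one view: every `stride`-th frame, clipped to the last frame."""
    idx = np.arange(clip_len) * stride
    return np.minimum(idx, num_frames - 1)


def marginal_entropy(view_logits):
    """Entropy of the class distribution averaged over views.

    view_logits has shape (num_views, num_classes), one row per frame-rate view.
    Low values mean the views agree on a confident prediction.
    """
    probs = softmax(view_logits, axis=-1)
    mean_probs = probs.mean(axis=0)
    return float(-(mean_probs * np.log(mean_probs + 1e-12)).sum())
```

In a test-time adaptation loop, the gradient of this entropy with respect to the prompt parameters would drive the update, so views that disagree push the prompts toward a consistent decision.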

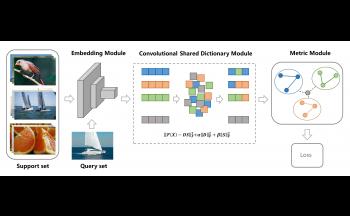

Few-shot learning has attracted considerable attention in recent years as an effective approach when training data are limited. Few-shot learning methods based on metric learning mainly measure the similarity of feature embeddings between each query set sample and each class of support set samples. Therefore, how to design a CNN-based feature extractor is a crucial problem. Existing feature extractors are obtained by training standard convolutional networks (e.g., ResNet), which focus merely on the information inside each image. However, the relations among samples may also help improve few-shot learning performance. This paper proposes a Convolutional Shared Dictionary Module (CSDM) to find the hidden structural information among samples for few-shot learning and to reduce the dimension of sample features to remove redundant information. As a result, the learned dictionary adapts more easily to novel classes, and the reconstructed features are more discriminative. Moreover, the CSDM is a plug-and-play module that integrates the dictionary learning algorithm into the feature embedding. Experimental results on several benchmark datasets demonstrate the effectiveness of the proposed CSDM.
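
The metric-learning step described above, comparing a query embedding with each support class, can be sketched as follows, assuming NumPy. Representing each class by the mean of its support embeddings and using cosine similarity are assumptions made for illustration; the abstract does not state which metric or class representation is used, and the CSDM itself is not shown.

```python
import numpy as np


def class_prototypes(support_feats, support_labels, num_classes):
    """Mean support embedding per class (assumed class representation)."""
    return np.stack(
        [support_feats[support_labels == c].mean(axis=0) for c in range(num_classes)]
    )


def cosine_classify(query_feats, prototypes):
    """Assign each query to the class whose prototype has the highest cosine similarity."""
    q = query_feats / np.linalg.norm(query_feats, axis=1, keepdims=True)
    p = prototypes / np.linalg.norm(prototypes, axis=1, keepdims=True)
    sims = q @ p.T  # shape (num_queries, num_classes)
    return sims.argmax(axis=1), sims
```

Any feature extractor, with or without a plug-in module, can feed this step: only the embeddings change, while the similarity-based decision rule stays the same.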

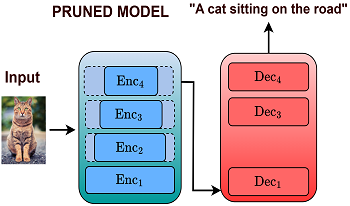

Deep learning models have advanced significantly, leading to substantial improvements in image captioning performance over the past decade. However, these improvements have come with increased model complexity and higher computational costs. Contemporary captioning models typically consist of three components: a pre-trained CNN encoder, a transformer encoder, and a decoder. Although research has extensively explored network pruning for captioning models, it has not specifically addressed the pruning of these three individual components. As a result, existing methods lack the generalizability required for models that deviate from the traditional configuration of image captioning systems. In this study, we introduce a pruning technique designed to optimize each component of the captioning model individually, thus broadening its applicability to models that share similar components, such as encoder and decoder networks, even if their overall architectures differ from conventional captioning models. Additionally, we implement a novel modification to the cross-entropy loss during pruning of the decoder, which significantly improves the performance of the image captioning model. We train and validate our approach on the Flickr8k dataset and evaluate its performance using the CIDEr and ROUGE-L metrics.
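
Pruning each component individually can be illustrated with a minimal sketch, assuming NumPy. The abstract does not state the pruning criterion, so unstructured magnitude pruning is swapped in here as a stand-in; the component names and per-component sparsity levels are hypothetical.

```python
import numpy as np


def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of the entries.

    Assumption: magnitude is the importance criterion. Entries tied with the
    threshold are also pruned, so slightly more than the target may be removed.
    """
    k = int(round(sparsity * weights.size))
    if k == 0:
        return weights.copy(), np.ones_like(weights, dtype=bool)
    threshold = np.sort(np.abs(weights), axis=None)[k - 1]
    mask = np.abs(weights) > threshold
    return weights * mask, mask


def prune_components(model_weights, sparsity_per_component):
    """Apply a separate sparsity level to each named component (names are hypothetical)."""
    return {
        name: magnitude_prune(w, sparsity_per_component.get(name, 0.0))[0]
        for name, w in model_weights.items()
    }
```

Treating the CNN encoder, transformer encoder, and decoder as separate entries lets each receive its own sparsity level, which is the property that carries over to other architectures built from similar components.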

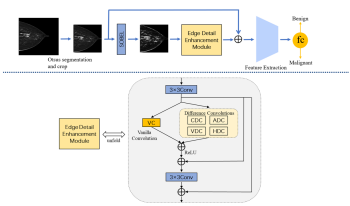

Breast cancer is the leading malignant tumor worldwide, and early diagnosis is crucial for effective treatment. Computer-aided diagnostic models based on deep learning have significantly improved the accuracy and efficiency of medical diagnosis. Tumor edge features are critical for distinguishing benign from malignant tumors, yet existing methods underutilize this edge information, which limits their capacity for early diagnosis. To improve the learning of breast lesion features, we propose the enhanced edge feature learning network (EEFL-Net) for mammogram classification. EEFL-Net enhances the learning of pathology features through a Sobel edge detection module and an edge detail enhancement module (EDEM). The Sobel edge detection module identifies and enhances the key edge information. The image then enters the EDEM, which further refines the processing and enhances detailed features, thus improving the classification results. Experiments on two public datasets (INbreast and CBIS-DDSM) show that EEFL-Net performs better than previous advanced mammography image classification methods.
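
The Sobel operator that underlies the edge detection module can be sketched as follows, assuming NumPy and a single-channel image. This shows only the standard 3x3 Sobel gradient magnitude; how EEFL-Net integrates it with the network and the EDEM is not specified in the abstract and is not modeled here.

```python
import numpy as np

# Standard 3x3 Sobel kernels for horizontal and vertical intensity changes.
SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T


def filter3x3(image, kernel):
    """Cross-correlate a 2D image with a 3x3 kernel over the valid region.

    The output has shape (H - 2, W - 2) because no padding is applied.
    """
    h, w = image.shape
    out = np.zeros((h - 2, w - 2))
    for i in range(3):
        for j in range(3):
            out += kernel[i, j] * image[i:i + h - 2, j:j + w - 2]
    return out


def sobel_magnitude(image):
    """Gradient magnitude: large values mark strong edges, zero marks flat regions."""
    gx = filter3x3(image, SOBEL_X)
    gy = filter3x3(image, SOBEL_Y)
    return np.hypot(gx, gy)
```

On a mammogram, the resulting map highlights lesion boundaries, which is the kind of signal an edge-aware classifier can consume as an additional input.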

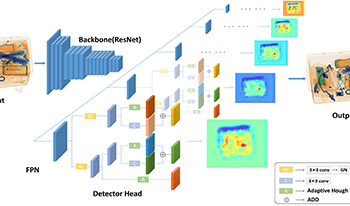

Recently, X-ray prohibited item detection has been widely used for security inspection. In practical applications, items in luggage often overlap severely, leading to the problem of occlusion. In this paper, we address prohibited item detection under occlusion from the perspective of the compositional model. To this end, we propose a novel VotingNet for occluded prohibited item detection. VotingNet incorporates an Adaptive Hough Voting Module (AHVM), based on the generalized Hough transform, into widely used detectors. AHVM consists of an Attention Block (AB) and a Voting Block (VB). AB divides the voting area into multiple regions and leverages an extended Convolutional Block Attention Module (CBAM) to learn adaptive weights for inter-region and intra-region features. In this way, the information from unoccluded areas of the prohibited items is fully exploited. VB collects votes from the feature maps of different regions given by AB. To improve performance in the presence of occlusion, we combine AHVM with the original convolutional branches, taking full advantage of the robustness of the compositional model and the powerful representation capability of convolution. Experimental results on the OPIXray and PIDray datasets show that VotingNet improves widely used detectors (including representative anchor-based and anchor-free detectors).
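
The voting idea behind the generalized Hough transform can be illustrated with a minimal sketch, assuming NumPy. Each visible part casts a weighted vote for the object center, and the accumulator peak gives the detected location. The fixed offsets and hand-set weights are assumptions for illustration; in AHVM the weights are learned by the attention block, which is not modeled here.

```python
import numpy as np


def hough_vote(part_positions, offsets, weights, grid_shape):
    """Accumulate weighted center votes on a 2D grid and return the peak.

    part_positions: (row, col) of each detected part.
    offsets: assumed displacement from each part to the object center.
    weights: vote strength per part, e.g. low for occluded regions.
    """
    acc = np.zeros(grid_shape)
    centers = np.asarray(part_positions) + np.asarray(offsets)
    for (r, c), w in zip(centers, weights):
        # Votes that fall outside the grid are ignored.
        if 0 <= r < grid_shape[0] and 0 <= c < grid_shape[1]:
            acc[int(r), int(c)] += w
    peak = np.unravel_index(acc.argmax(), acc.shape)
    return acc, (int(peak[0]), int(peak[1]))
```

Because the peak depends on agreement among votes, a few unoccluded parts with high weights can still locate the object when other parts are hidden or misleading.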

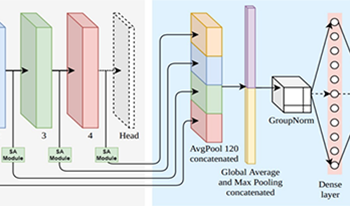

This work addresses the challenge of identifying the provenance of illicit cultural artifacts, a task often hindered by the lack of specialized expertise among law enforcement and customs officials. To facilitate immediate assessments, we propose an improved deep learning model based on a pre-trained ResNet model, fine-tuned for archaeological artifact recognition through transfer learning. Our model uniquely integrates multi-level feature extraction, capturing both textural and structural features of artifacts, and incorporates self-attention mechanisms to enhance contextual understanding. In addition, we developed two different artifact datasets: a dataset with mixed types of earthenware and a dataset for coins. Both datasets are categorized according to the age and region of artifacts. Evaluations of the proposed model on these datasets demonstrate improved recognition accuracy thanks to the enhanced feature representation.

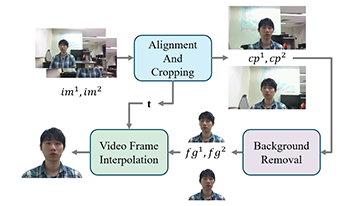

In this paper, we propose a new solution for synthesizing frontal human images in video conferencing, aimed at enhancing immersive communication. Traditional methods such as center staging, gaze correction, and background replacement improve the user experience, but they do not fully address the issue of off-center camera placement. We introduce a system that utilizes two arbitrary cameras positioned on the top bezel of a display monitor to capture left and right images of the video participant. A facial landmark detection algorithm identifies key points on the participant’s face, from which we estimate the head pose. A segmentation model is employed to remove the background, isolating the user. The core component of our method is a video frame interpolation technique that synthesizes a realistic frontal view of the participant by leveraging the two captured angles. This method not only enhances visual alignment between users but also maintains natural facial expressions and gaze direction, resulting in a more engaging and life-like video conferencing experience.

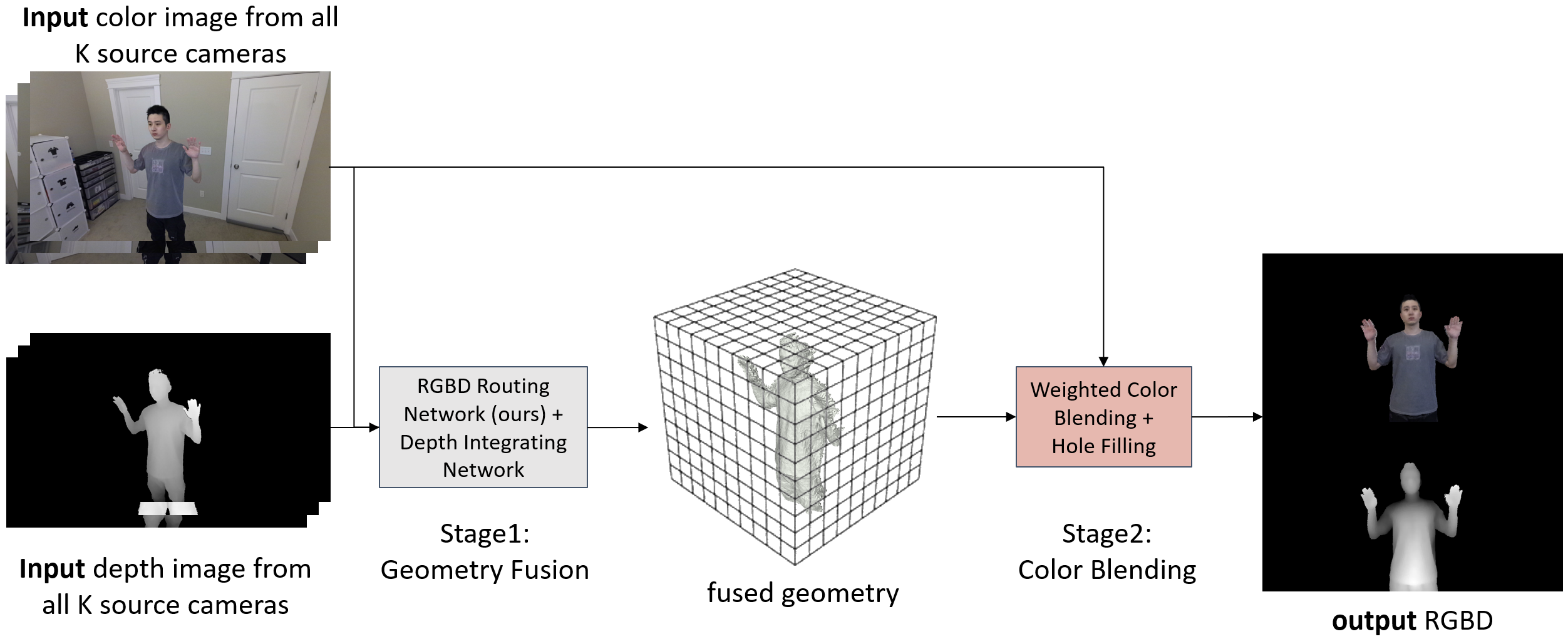

With the widespread use of video conferencing technology for remote communication in the workforce, there is an increasing demand for face-to-face communication between participants. To address the difficulty of acquiring frontal face images, multiple RGB-D cameras have been used to capture and render the frontal faces of target subjects. However, the noise of depth cameras can lead to geometry and color errors in the reconstructed 3D surfaces. In this paper, we propose RGBD Routed Blending, a novel two-stage pipeline for video conferencing that fuses multiple noisy RGB-D images in 3D space and renders virtual color and depth output images from a new camera viewpoint. The first stage is the geometry fusion stage, consisting of an RGBD Routing Network followed by a Depth Integrating Network, which fuses the RGB-D input images into a 3D volumetric geometry. As an intermediate product, this fused geometry is sent to the second stage, the color blending stage, along with the input color images to render a new color image from the target viewpoint. We quantitatively evaluate our method on two datasets, a synthetic dataset (DeformingThings4D) and a newly collected real dataset, and show that our proposed method outperforms state-of-the-art baseline methods in both geometry accuracy and color quality.