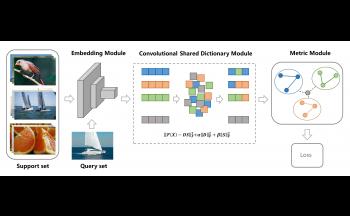

Few-shot learning has attracted considerable attention in recent years as a powerful approach for settings with limited training data. Few-shot learning methods based on metric learning mainly measure the similarity of feature embeddings between a query-set sample and each class of support-set samples. Therefore, the design of the CNN-based feature extractor is the most crucial problem. Existing feature extractors are obtained by training standard convolutional networks (e.g., ResNet), which focus only on the information inside each image. However, the relations among samples may also help improve performance on the few-shot learning task. This paper proposes a Convolutional Shared Dictionary Module (CSDM) that finds the hidden structural information among samples for few-shot learning and reduces the dimension of sample features to remove redundant information. As a result, the learned dictionary adapts more easily to novel classes, and the reconstructed features are more discriminative. Moreover, CSDM is a plug-and-play module that integrates the dictionary learning algorithm into the feature embedding. Experimental results on several benchmark datasets demonstrate the effectiveness of the proposed CSDM.
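The metric-learning pipeline described above (compare a query embedding with each class of support embeddings) combined with features reconstructed from a dictionary shared across all samples can be illustrated with a minimal pure-Python sketch. This is not the authors' CSDM: here the dictionary is fixed, hypothetical, and orthonormal, so the least-squares code of a feature is simply its inner product with each atom, whereas CSDM learns a convolutional dictionary inside the network. The helper names `encode`, `reconstruct`, `mean`, `cosine`, and `classify`, the class-mean prototypes, and the use of cosine similarity are all illustrative assumptions.

```python
import math
from typing import Dict, List

Vector = List[float]


def encode(x: Vector, atoms: List[Vector]) -> Vector:
    """Least-squares code of x over ORTHONORMAL atoms: a_k = <d_k, x>.

    Assumption: atoms are orthonormal; general dictionaries need a real solver.
    """
    return [sum(d_i * x_i for d_i, x_i in zip(d, x)) for d in atoms]


def reconstruct(code: Vector, atoms: List[Vector]) -> Vector:
    """Rebuild a feature as a linear combination of atoms: sum_k a_k * d_k."""
    dim = len(atoms[0])
    return [sum(a * d[i] for a, d in zip(code, atoms)) for i in range(dim)]


def mean(vectors: List[Vector]) -> Vector:
    """Element-wise mean, used here as the class prototype (an assumption)."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def classify(query: Vector, support: Dict[str, List[Vector]], atoms: List[Vector]) -> str:
    """Nearest-prototype classification on dictionary-reconstructed features."""

    def project(x: Vector) -> Vector:
        # Every sample (support or query) goes through the same shared dictionary.
        return reconstruct(encode(x, atoms), atoms)

    prototypes = {label: mean([project(x) for x in feats]) for label, feats in support.items()}
    q = project(query)
    return max(prototypes, key=lambda label: cosine(q, prototypes[label]))


# Hypothetical fixed dictionary: 2 orthonormal atoms for 4-D features.
# (CSDM learns its dictionary; this one is chosen by hand for illustration.)
atoms = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
support = {
    "class_a": [[1.0, 0.1, 0.5, -0.3], [0.9, 0.0, -0.4, 0.2]],
    "class_b": [[0.1, 1.0, 0.3, 0.6], [0.0, 0.9, -0.5, -0.2]],
}
print(classify([0.8, 0.2, 0.9, 0.9], support, atoms))  # class_a
```

Because the atoms are orthonormal, comparing the 2-dimensional codes gives the same cosine similarity as comparing the 4-dimensional reconstructions, so the code plays the role of the reduced-dimension feature; the components outside the shared dictionary's span are discarded before any similarity is computed.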

Tong Zhou, Changyin Dong, Zhen Wang, Bo Chang, Junshu Song, Bin Shen, Baodi Liu, Pengfei He, "Convolutional Shared Dictionary Module for Few-shot Learning" in Electronic Imaging, 2025, pp. 265-1–265-7, https://doi.org/10.2352/EI.2025.37.8.IMAGE-265
