Domain Adaptation (DA) techniques aim to overcome the domain shift between a source domain used for training and a target domain used for testing. In recent years, vision transformers have emerged as a preferred alternative to Convolutional Neural Networks (CNNs) for various computer vision tasks. When used as backbones for DA, these attention-based architectures have been found to be more powerful than standard ResNet backbones. However, vision transformers incur greater computational overhead because of their model size. In this paper, we demonstrate the superiority of attention-based architectures for domain generalization and source-free unsupervised domain adaptation. We further improve the performance of ResNet-based unsupervised DA models using knowledge distillation from a larger teacher model to the student ResNet model. We explore the efficacy of two frameworks and answer the question: is it better to distill and then adapt, or to adapt and then distill? Our experiments on two popular datasets show that adapt-to-distill is the preferred approach.
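
As a rough illustration of the teacher-to-student transfer mentioned above, the following minimal sketch computes a temperature-softened distillation loss between teacher and student logits. The abstract does not say which distillation objective is used, so the soft-target KL formulation, the function names, and the default temperature are all assumptions made for illustration. Only the soft-target term is shown, since target-domain labels are unavailable in unsupervised DA.

```python
import math

def log_softmax(logits, temperature=1.0):
    """Log of the temperature-scaled softmax, computed stably."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max before exponentiating
    log_norm = m + math.log(sum(math.exp(s - m) for s in scaled))
    return [s - log_norm for s in scaled]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2.

    Hypothetical helper: the T^2 factor follows the common soft-target
    recipe and is not taken from the paper.
    """
    log_p = log_softmax(teacher_logits, temperature)  # teacher
    log_q = log_softmax(student_logits, temperature)  # student
    kl = sum(math.exp(lp) * (lp - lq) for lp, lq in zip(log_p, log_q))
    return temperature ** 2 * kl

# Example: the loss vanishes when the student matches the teacher.
teacher = [2.0, 0.5, -1.0]
print(distillation_loss(teacher, teacher))          # approximately 0
print(distillation_loss([0.0, 0.0, 0.0], teacher))  # strictly positive
```

In an adapt-then-distill pipeline, the teacher logits would come from the already adapted large model evaluated on target-domain images; in distill-then-adapt, they would come from the source-trained teacher.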

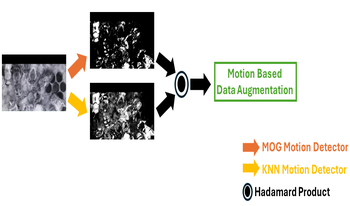

In this paper, we address the task of detecting honey bees inside a beehive using computer vision, with the goal of monitoring their activity. Conventionally, beekeepers monitor the activities of honey bees by watching colony entrances or by opening their colonies and examining bee movement and behavior during inspections. However, these methods either miss important information or alter honey bee behavior. Therefore, we installed simple cameras and IR lighting in honey bee colonies for a proof-of-concept study of whether deep-learning techniques could assist in-hive observations. However, lighting conditions vary widely across beehives, which changes the appearance of both the beehive backgrounds and the honey bees. This variation significantly degrades the detection performance of Deep Neural Networks. In this paper, we propose to apply domain randomization based on motion to train honey bee detectors for use inside the beehive. Our experiments were conducted on images captured from beehives both seen and unseen during training. The results show that our proposed method boosts the performance of honey bee detection, especially for small bees, which are more likely to be affected by the lighting conditions.

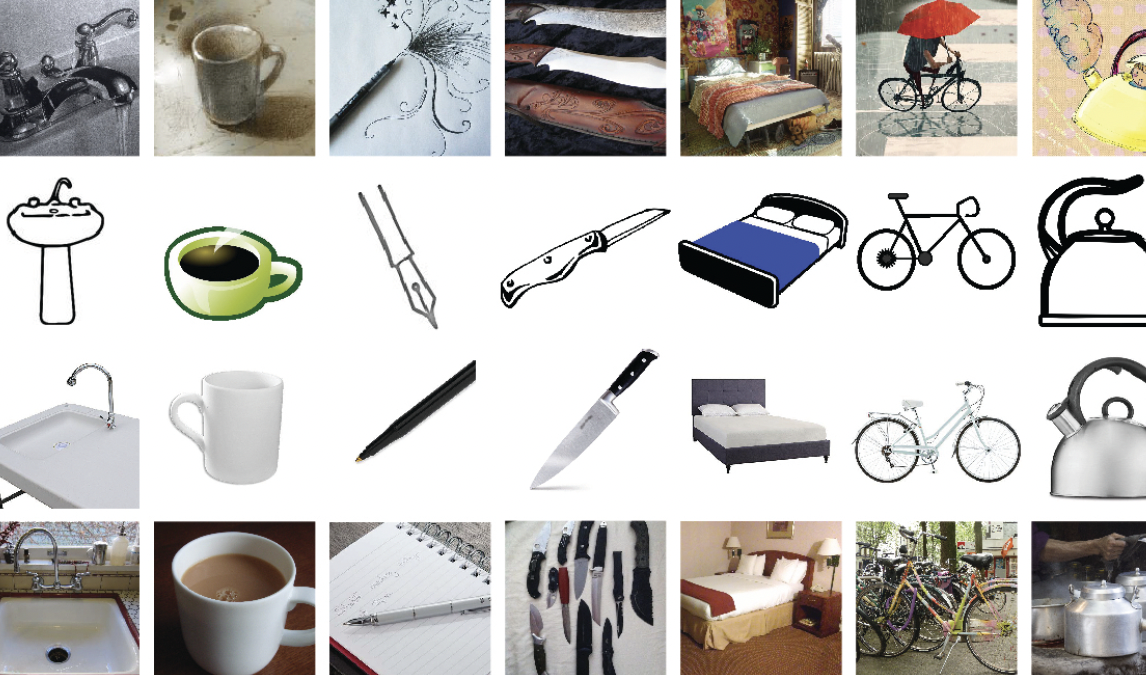

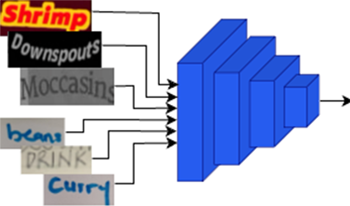

In this paper, we introduce a unified handwriting and scene-text recognition model tailored to recognize both printed and handwritten text images. Our primary contribution is the incorporation of the self-attention mechanism, a salient feature of the transformer architecture. This incorporation leads to two significant advantages: 1) a substantial improvement in recognition accuracy for both scene text and handwritten text, and 2) a notable decrease in inference time, addressing a prevalent challenge faced by modern recognizers that use sequence-based decoding with attention.
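
For readers unfamiliar with the mechanism, the following minimal sketch shows single-head scaled dot-product self-attention over a short feature sequence. It is not the recognizer described above: the function names, the plain-list matrix representation, and the identity projection matrices in the example are assumptions chosen to keep the sketch self-contained.

```python
import math

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def softmax_rows(matrix):
    """Apply a numerically stable softmax to each row."""
    result = []
    for row in matrix:
        m = max(row)
        exps = [math.exp(v - m) for v in row]
        total = sum(exps)
        result.append([e / total for e in exps])
    return result

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x is a (sequence length x feature dim) matrix; w_q, w_k, w_v are
    hypothetical projection matrices standing in for learned weights.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose of k
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    weights = softmax_rows(scores)  # each row sums to 1
    return matmul(weights, v), weights

# Example: two positions with identity projections.
identity = [[1.0, 0.0], [0.0, 1.0]]
features = [[1.0, 0.0], [0.0, 1.0]]
output, weights = self_attention(features, identity, identity, identity)
print(weights)
```

Because the attention weights for every position are computed in one pass, this kind of layer does not need to step through the sequence one position at a time, unlike sequence-based decoding.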

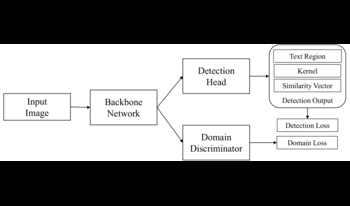

In this paper, we present a deep-learning approach that unifies handwriting and scene-text detection in images. Specifically, we adopt adversarial domain generalization to improve text detection across different domains and extend the conventional dice loss to provide extra training guidance. Furthermore, we build a new benchmark dataset that comprehensively captures various handwritten and scene text scenarios in images. Our extensive experimental results demonstrate the effectiveness of our approach in generalizing detection across both handwriting and scene text.
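
For reference, the conventional dice loss that the approach extends can be sketched as below for a flattened probability map and a binary text mask. The abstract does not describe the extension, so only the standard form is shown; the function name and the smoothing constant `eps` are assumptions, and some variants use squared terms in the denominator.

```python
def dice_loss(pred, target, eps=1e-6):
    """Conventional dice loss: 1 - 2|P ∩ G| / (|P| + |G|).

    pred holds per-pixel text probabilities in [0, 1]; target holds the
    binary ground-truth mask. eps (an assumed smoothing constant) avoids
    division by zero when both are empty.
    """
    intersection = sum(p * t for p, t in zip(pred, target))
    denominator = sum(pred) + sum(target)
    return 1.0 - (2.0 * intersection + eps) / (denominator + eps)

# Example: a perfect prediction gives a loss near 0,
# while a fully disjoint prediction gives a loss near 1.
mask = [1.0, 0.0, 1.0, 0.0]
print(dice_loss(mask, mask))
print(dice_loss([0.0, 1.0, 0.0, 1.0], mask))
```

Because the loss depends on the overlap ratio rather than a per-pixel average, it is less dominated by the large background regions typical of text detection maps.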

Intelligence assistance applications hold enormous potential to extend the range of tasks people can perform, increase the speed and accuracy of task performance, and provide high-quality documentation for record keeping. However, modern perception and reasoning techniques based on massive foundation models are too computationally complex to run on devices at the edge. A remote server can be used to offload computation, but latency and security concerns often rule this out. Distillation and quantization can compress networks, but we still face the challenge of obtaining sufficient training data for all possible task executions. We propose a hybrid ensemble architecture that combines intelligent switching of special-purpose networks and a symbolic reasoner to provide assistance on modest hardware while still allowing robust and sophisticated reasoning. The rich reasoner representations can also be used to identify mistakes in complex procedures. Since system inferences are still imperfect, users can be confused about what the system expects and get frustrated. An interface that makes the capabilities and limitations of perception and reasoning transparent to users dramatically improves the usability of the system. Importantly, our interface provides feedback without compromising situational awareness, through well-designed audio cues and compact icon-based feedback.