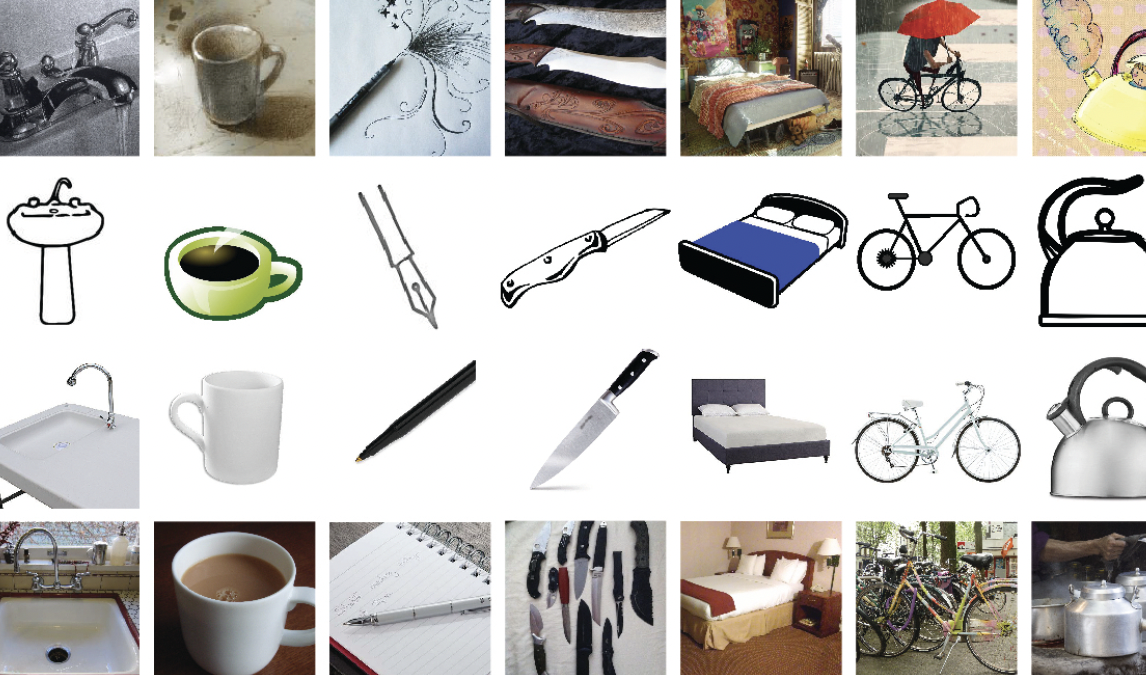

Domain Adaptation (DA) techniques aim to overcome the domain shift between a source domain used for training and a target domain used for testing. In recent years, vision transformers have emerged as a preferred alternative to Convolutional Neural Networks (CNNs) for various computer vision tasks. When used as backbones for DA, these attention-based architectures have been found to be more powerful than standard ResNet backbones. However, vision transformers incur greater computational overhead because of their larger model size. In this paper, we demonstrate the superiority of attention-based architectures for domain generalization and source-free unsupervised domain adaptation. We further improve the performance of ResNet-based unsupervised DA models by using knowledge distillation from a larger teacher model to the student ResNet model. We explore the efficacy of two frameworks and answer the question: is it better to distill and then adapt, or to adapt and then distill? Our experiments on two popular datasets show that adapt-to-distill is the preferred approach.
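The abstract does not state which distillation objective is used. As a minimal sketch of teacher-to-student knowledge distillation in general, assuming the common formulation that matches temperature-softened class distributions with a KL divergence, the loss could look like the following. The function names, the temperature value, and the example logits are illustrative assumptions, not details taken from the paper.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2.

    Assumed generic distillation loss; the paper's exact objective may differ.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(
        p * (math.log(p) - math.log(q))
        for p, q in zip(p_teacher, p_student)
        if p > 0.0
    )
    return kl * temperature ** 2


# Hypothetical logits for one target-domain sample with three classes.
teacher = [1.0, 2.0, 3.0]
matching_student = [1.0, 2.0, 3.0]
mismatched_student = [3.0, 2.0, 1.0]

loss_match = distillation_loss(matching_student, teacher)
loss_mismatch = distillation_loss(mismatched_student, teacher)
```

Under this sketch, a student that reproduces the teacher's logits incurs zero loss, while a student that disagrees incurs a positive loss. In an adapt-then-distill setting the teacher logits would come from a teacher that has already been adapted to the target domain; in distill-then-adapt, from a teacher trained on the source domain only.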

Georgi Thomas, Andreas Savakis, "Adapt to Distill or Distill to Adapt" in Electronic Imaging, 2024, pp 238-1 - 238-6, https://doi.org/10.2352/EI.2024.36.8.IMAGE-238
