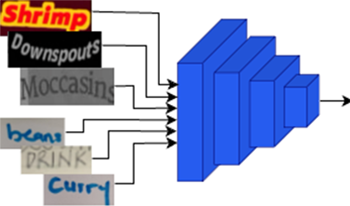

In this paper, we introduce a unified handwriting and scene-text recognition model designed to recognize both printed and handwritten text images. Our primary contribution is the incorporation of self-attention, a key component of the transformer architecture. This brings two significant advantages: 1) a substantial improvement in recognition accuracy for both scene text and handwritten text, and 2) a notable decrease in inference time, addressing a common bottleneck of modern recognizers that rely on attention-based sequential decoding.
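The abstract does not give architectural details, so the block below is only background. It is a minimal sketch of generic scaled dot-product self-attention, softmax(QK^T / sqrt(d_k)) V, as used in standard transformers. It is not the authors' model. The function names and the projection matrices `w_q`, `w_k`, `w_v` are hypothetical inputs chosen for illustration. Pure Python is used so the sketch has no dependencies.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of an (n x m) and an (m x p) matrix."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(m: Matrix) -> Matrix:
    return [list(row) for row in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    # Subtract the max for numerical stability before exponentiating.
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(
    x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix
) -> Tuple[Matrix, Matrix]:
    """Generic single-head self-attention over a sequence of feature vectors.

    x: (n x d) sequence, e.g. n visual feature columns of a text image.
    w_q, w_k, w_v: hypothetical (d x d_k) projection matrices.
    Returns the (n x d_k) attended features and the (n x n) attention weights.
    """
    q = matmul(x, w_q)
    k = matmul(x, w_k)
    v = matmul(x, w_v)
    d_k = len(k[0])
    # Every position attends to every other position in a single pass.
    scores = matmul(q, transpose(k))
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    weights = [softmax(row) for row in scaled]
    return matmul(weights, v), weights


if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]
    features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    out, attn = self_attention(features, identity, identity, identity)
    print(out)
    print(attn)
```

Each output row is computed from all input positions at once, with no step-by-step recurrence. This is one commonly cited reason self-attention can be efficient at inference. The abstract does not say whether this is the source of the reported speedup.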

Gaurav Patel, Taewook Kim, Qian Lin, Jan P. Allebach, Qiang Qiu, "Self-Attention Enhanced Recognition: A Unified Model for Handwriting and Scene-text Recognition with Improved Inference," in Electronic Imaging, 2024, pp. 241-1–241-6, https://doi.org/10.2352/EI.2024.36.8.IMAGE-241
