In recent years, deep learning has achieved excellent results in applications across many fields. However, as deep learning models grow in scale, their training time increases dramatically. Furthermore, hyperparameters strongly influence training results, so selecting them efficiently is essential. In this study, the orthogonal array of the Taguchi method is used to find the best experimental combination of hyperparameters. Three hyperparameters of the You Only Look Once version 3 (YOLOv3) detector and five data augmentation hyperparameters serve as the control factors of the Taguchi method, and the results are analyzed with the traditional signal-to-noise ratio (S/N ratio) method using the larger-the-better (LB) characteristic.

Experimental results show that the mean average precision (mAP) on the blood cell count and detection dataset is 84.67%, which is better than the results previously reported in the literature. The proposed method can provide a fast and effective search strategy for optimizing hyperparameters in deep learning.
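The sketch below shows the Taguchi steps described above: compute the larger-the-better S/N ratio, S/N = -10 log10((1/n) Σ 1/y_i²), for each run of an orthogonal array, average it per factor level, and pick the level with the highest mean. The abstract does not say which orthogonal array was used for its eight control factors. As a toy assumption, the sketch uses the standard L9(3^4) array with four hypothetical three-level factors, and any responses you pass in (for example mAP values) stand in for real training results.

```python
import math

# Standard L9(3^4) orthogonal array: 9 runs, 4 factors, 3 levels (0-indexed).
# Toy choice for illustration; the paper's array and factor levels are not given.
L9 = [
    (0, 0, 0, 0),
    (0, 1, 1, 1),
    (0, 2, 2, 2),
    (1, 0, 1, 2),
    (1, 1, 2, 0),
    (1, 2, 0, 1),
    (2, 0, 2, 1),
    (2, 1, 0, 2),
    (2, 2, 1, 0),
]


def sn_larger_the_better(values):
    """Taguchi larger-the-better S/N ratio: -10 * log10(mean(1 / y^2))."""
    if any(v <= 0 for v in values):
        raise ValueError("LB S/N ratio needs strictly positive responses")
    return -10.0 * math.log10(sum(1.0 / v ** 2 for v in values) / len(values))


def main_effects(array, sn_per_run):
    """Response table: mean S/N ratio at each level of each factor."""
    n_factors = len(array[0])
    n_levels = max(max(row) for row in array) + 1
    table = []
    for f in range(n_factors):
        means = []
        for level in range(n_levels):
            sns = [sn for row, sn in zip(array, sn_per_run) if row[f] == level]
            means.append(sum(sns) / len(sns))
        table.append(means)
    return table


def best_levels(table):
    """For an LB characteristic, choose the level with the highest mean S/N per factor."""
    return [max(range(len(means)), key=means.__getitem__) for means in table]
```

Usage: train once (or several times) per row of `L9` and pass each run's responses to `sn_larger_the_better`. Then call `best_levels(main_effects(L9, sn_values))` to get the predicted best combination, which is usually confirmed with one more training run.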

Detecting urban appearance violations in unmanned aerial vehicle (UAV) imagery faces several challenges. To address this problem, an optimized YOLOv8n-based urban appearance violation detection model is proposed. Because no sufficient dataset is available, a custom dataset with four classes is created. The Convolutional Block Attention Module (CBAM) is applied to improve the model's feature extraction ability. A small-target detection head is added to capture the characteristics and context information of small targets more effectively. The Wise Intersection over Union (WIoU) loss function is applied to improve bounding box regression and detection robustness. Experimental results show that, compared with the YOLOv8n model, the Precision, Recall, mAP0.5, and mAP0.5:0.95 of the optimized method increase by 3.8%, 2.1%, 3.3%, and 4.8%, respectively. In addition, an intelligent urban appearance violation detection system is developed, which generates warning messages and delivers them via the WeChat official account platform.
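The next sketch illustrates the kind of penalty the Wise-IoU loss adds to the plain IoU loss. Wise-IoU exists in several versions (v1 to v3), and the abstract does not say which one the model uses. The sketch assumes v1, L = R_WIoU · (1 − IoU) with R_WIoU = exp(((x − x_gt)² + (y − y_gt)²) / (W_g² + H_g²)), where (x, y) are box centres and W_g, H_g are the size of the smallest enclosing box. It works on plain floats, so there is no autograd here; in training, the denominator is detached from the gradient.

```python
import math
from typing import Tuple

# (x1, y1, x2, y2) with x2 > x1 and y2 > y1 (boxes must have positive area).
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def wiou_v1_loss(pred: Box, gt: Box) -> float:
    """Wise-IoU v1 (assumed version): distance-based attention times the IoU loss."""
    # Centre points of the predicted and ground-truth boxes.
    px, py = (pred[0] + pred[2]) / 2, (pred[1] + pred[3]) / 2
    gx, gy = (gt[0] + gt[2]) / 2, (gt[1] + gt[3]) / 2
    # Width and height of the smallest box enclosing both boxes.
    wg = max(pred[2], gt[2]) - min(pred[0], gt[0])
    hg = max(pred[3], gt[3]) - min(pred[1], gt[1])
    # R_WIoU >= 1 grows with centre distance; in training, (wg^2 + hg^2) is detached.
    r_wiou = math.exp(((px - gx) ** 2 + (py - gy) ** 2) / (wg ** 2 + hg ** 2))
    return r_wiou * (1.0 - iou(pred, gt))
```

Because R_WIoU is at least 1, the loss can only amplify the IoU loss of boxes whose centres are off-target. The later versions add a dynamic focusing coefficient on top of this.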

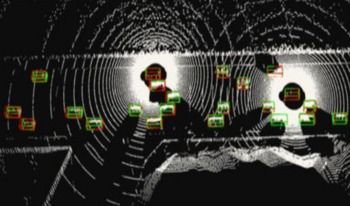

Collaborative perception for autonomous vehicles aims to overcome the limitations of individual perception. Sharing information among multiple agents addresses several problems, such as occlusion, limited sensor range, and blind spots. One of the biggest challenges is finding the right trade-off between perception performance and communication bandwidth. This article proposes a new cooperative perception pipeline based on the Where2comm algorithm, with optimization strategies that reduce the amount of data transmitted between agents. These strategies include a data reduction module in the encoder that efficiently selects the most important features, and a new representation for messages exchanged in a V2X manner that transmits a vector of information and its positions instead of a high-dimensional feature map. Our approach is evaluated on two simulated datasets, OPV2V and V2XSet. Accuracy (AP@50) increases by around 7% on both datasets, and the communication volume is reduced by 89.77% on V2XSet and 92.19% on OPV2V.
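The following sketch contrasts the message representation described above with a dense feature map. It keeps only the locations where a spatial confidence map (the mechanism Where2comm uses to decide where to communicate) exceeds a threshold. It then sends each kept location's (row, column) position together with its C-dimensional feature vector, and the receiver scatters the vectors back into a dense map. The function names, the fixed threshold, and the cost model of two scalars per position are hypothetical. This is not the paper's data reduction module.

```python
import numpy as np


def encode_sparse_message(features, confidence, threshold):
    """Keep feature vectors only where confidence exceeds the threshold (hypothetical helper).

    features:   (C, H, W) feature map of the sending agent
    confidence: (H, W) spatial confidence map
    Returns positions (K, 2) as row/col indices and vectors (K, C).
    """
    rows, cols = np.nonzero(confidence > threshold)
    positions = np.stack([rows, cols], axis=1)
    vectors = features[:, rows, cols].T  # (C, K) -> (K, C)
    return positions, vectors


def decode_sparse_message(positions, vectors, shape):
    """Scatter received vectors into a dense (C, H, W) map; unsent locations stay zero."""
    dense = np.zeros(shape, dtype=vectors.dtype)
    dense[:, positions[:, 0], positions[:, 1]] = vectors.T
    return dense


def payload_sizes(shape, num_selected):
    """Scalars transmitted: full dense map versus (position, vector) pairs."""
    c, h, w = shape
    dense = c * h * w
    sparse = num_selected * (c + 2)  # two indices plus C feature values per location
    return dense, sparse
```

The saving comes from how sparse the confidence map is. If only K of the H·W locations are sent, the payload falls from C·H·W to K·(C + 2) scalars, so positions must be a small fraction of the map before the index overhead stops mattering.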

Object detection and single-frame video detection have advanced substantially in recent years, and deep-learning-based approaches in particular have shown strong performance. However, these detectors often struggle in practical scenarios such as analyzing video frames captured by unmanned aerial vehicles. Existing detectors perform poorly especially on objects that are small, vary widely in scale, are densely distributed, or suffer from motion blur. To address these challenges, we propose a new feature extraction network, the Attention-based Weighted Fusion Network. Our method incorporates the Self-Attention Residual Block to enhance feature extraction. To accurately locate and identify objects of interest, we introduce the Mixed Attention Module, which significantly improves detection accuracy. Additionally, we assign an adaptive learnable weight to each feature map so that feature maps of different resolutions contribute in learned proportions during feature fusion. Our method is evaluated on two datasets, PASCAL VOC and VisDrone2019. Experimental results demonstrate that it outperforms the baseline and other detectors. It achieves 87.1% mean average precision on the PASCAL VOC 2007 test set, surpassing the baseline by 3.1% AP50. It also exhibits lower false detection and missed detection rates than other detectors.
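The sketch below shows one common way to give each feature map an adaptive learnable weight during fusion. It uses the "fast normalized fusion" popularized by EfficientDet's BiFPN: clamp the raw weights to be non-negative, normalize them, and take the weighted sum of same-resolution maps. The abstract does not state how its weights are normalized, so this scheme and the helper names are assumptions. The weights are plain numbers here; during training they would be learnable parameters.

```python
import numpy as np


def upsample_nearest(x, factor):
    """Nearest-neighbour upsampling of the last two axes (H, W) by an integer factor."""
    return np.repeat(np.repeat(x, factor, axis=-2), factor, axis=-1)


def fast_normalized_fusion(feature_maps, raw_weights, eps=1e-4):
    """Weighted sum of same-shaped feature maps with non-negative, normalized weights.

    feature_maps: list of arrays already resized to a common resolution
    raw_weights:  one unconstrained scalar per input (learnable during training)
    """
    if len(feature_maps) != len(raw_weights):
        raise ValueError("need one weight per feature map")
    w = np.maximum(np.asarray(raw_weights, dtype=float), 0.0)  # ReLU keeps weights >= 0
    w = w / (w.sum() + eps)  # normalize so the weights sum to roughly 1
    fused = sum(wi * fm for wi, fm in zip(w, feature_maps))
    return fused, w
```

The ReLU-plus-sum normalization avoids a softmax over the weights. A weight driven below zero simply switches off that resolution's contribution.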

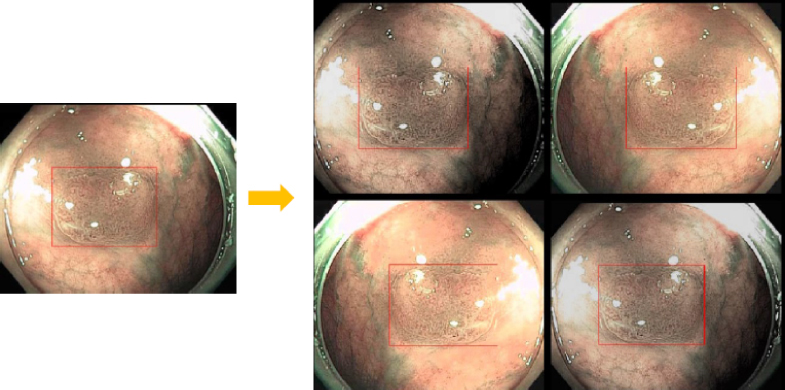

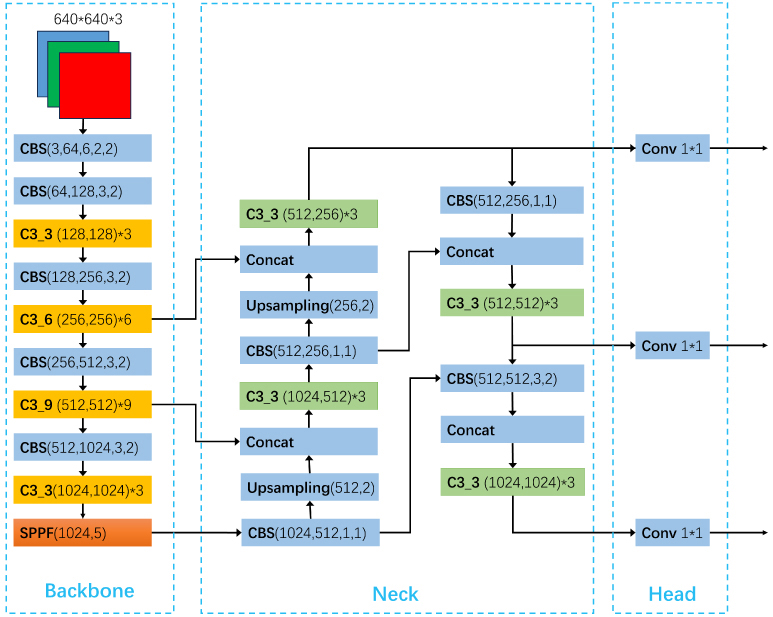

Object detection is used in a wide range of industries. For example, in autonomous driving, the task of object detection is to accurately and efficiently identify and locate many instances of predefined object classes (vehicles, pedestrians, traffic signs, etc.) in road videos. In robotics, industrial robots need to recognize specific machine elements. In the security field, cameras should accurately recognize people's faces. With the wide application of deep learning, the accuracy and efficiency of object detection have greatly improved, but deep-learning-based object detection still faces challenges. Different applications of object detection have different requirements, including high detection accuracy, multi-category detection, real-time detection, and robustness to occlusion. To address these challenges, and based on an extensive literature review, this paper analyzes methods for improving and optimizing mainstream object detection algorithms from the perspective of the evolution of one-stage and two-stage detectors. Furthermore, this paper proposes methods for improving object detection accuracy by changing receptive fields. The new model is based on the original YOLOv5 (You Only Look Once) with some modifications: the head of YOLOv5 is modified by adding asymmetrical pooling layers. As a result, the accuracy of the algorithm improves while its speed is maintained. The new model is compared with the original YOLOv5 model using several metrics and is evaluated under four scenarios. Finally, open problems and future research directions are summarized.
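To make "changing receptive fields with asymmetrical pooling" concrete, the sketch applies stride-1 max pooling with a 1×k (horizontal) and a k×1 (vertical) kernel. Each output location therefore aggregates context along one axis only, and the two pooled copies are concatenated with the input along the channel axis. The abstract does not give the kernel sizes, where in the head the layers sit, or how their outputs are combined. The channel concatenation, the default k = 5, and the function names are all hypothetical choices for illustration.

```python
import numpy as np


def max_pool_same(x, kh, kw):
    """Stride-1 max pooling on a (C, H, W) map with odd kernel (kh, kw); keeps H and W."""
    if kh % 2 == 0 or kw % 2 == 0:
        raise ValueError("odd kernel sizes keep the output aligned with the input")
    x = np.asarray(x, dtype=float)
    c, h, w = x.shape
    ph, pw = kh // 2, kw // 2
    # Pad with -inf so padding never wins the max.
    padded = np.pad(x, ((0, 0), (ph, ph), (pw, pw)), constant_values=-np.inf)
    out = np.full(x.shape, -np.inf)
    for dy in range(kh):
        for dx in range(kw):
            out = np.maximum(out, padded[:, dy:dy + h, dx:dx + w])
    return out


def asymmetric_pool_block(x, k=5):
    """Concatenate the input with 1xk (horizontal) and kx1 (vertical) max-pooled copies."""
    x = np.asarray(x, dtype=float)
    horizontal = max_pool_same(x, 1, k)  # receptive field widened along W only
    vertical = max_pool_same(x, k, 1)    # receptive field widened along H only
    return np.concatenate([x, horizontal, vertical], axis=0)
```

Pooling adds no parameters, which fits the claim that accuracy improves without sacrificing speed. In a real head, a 1×1 convolution would typically follow to bring the tripled channel count back down.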

The usefulness of mobile devices has increased greatly in recent years, allowing users to perform more tasks in daily life. Mobile devices and applications provide many benefits for users, perhaps most significantly increased access to point-of-use tools, navigation, and alert systems. This paper presents a prototype of a cross-platform mobile augmented reality (AR) system whose core purpose is to find a better way to keep the campus community secure and connected. The mobile AR system consists of four core functionalities: an events system, a policing system, a directory system, and a notification system. The events system keeps the community up to date on current and upcoming events on campus. The policing system keeps the campus resources that help community members stay secure within arm's reach. The directory system serves as a one-stop shop for campus resources, giving staff, faculty, and students a convenient and efficient way to access pertinent information about campus departments. The mobile AR system also includes an integrated guided navigation system that users can use to get directions to various destinations on campus, namely its buildings and departments. The application will help students and visitors navigate the campus efficiently and will send alerts and notifications in emergencies, allowing campus police to respond quickly. The mobile AR system was designed using the Unity game engine and the Vuforia Engine for object detection and classification. The Google Maps API was integrated for GPS support to provide location-based services. Our contribution lies in creating a user-specific, customizable navigation and alert system to improve users' safety at their workplace.
Specifically, the paper describes the design and implementation of the proposed mobile AR system and reports the results of a pilot study conducted to evaluate its perceived ease of use and usability.

This research explores a new approach to selecting and weighting classical image features for infrared object detection and target-like clutter rejection. Traditional statistical techniques are used to calculate individual features, while modern supervised machine learning techniques are used to rank the features by predictive value. This paper describes the use of decision trees to determine which features best predict the correct binary target/non-target class. This work is unique in its focus on infrared imagery and its use of interpretable machine learning techniques to select hand-crafted features for integration into a pre-screening algorithm.
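As a minimal illustration of ranking hand-crafted features by their value for the binary target/non-target decision, the sketch scores each feature by the largest Gini impurity decrease achievable with a single threshold split. This is a one-level decision tree (a decision stump) per feature. It is a simplified stand-in: impurity-based importance in a full decision tree (for example scikit-learn's `feature_importances_`) sums the weighted decrease over every split in the tree. The feature names used with it are hypothetical.

```python
from typing import List, Sequence


def gini(labels: Sequence[int]) -> float:
    """Gini impurity of binary labels (1 = target, 0 = clutter)."""
    n = len(labels)
    if n == 0:
        return 0.0
    p = sum(labels) / n
    return 1.0 - p ** 2 - (1.0 - p) ** 2


def best_split_gain(values: Sequence[float], labels: Sequence[int]) -> float:
    """Largest weighted Gini decrease from one threshold split on a single feature."""
    parent = gini(labels)
    n = len(labels)
    pairs = sorted(zip(values, labels))
    best = 0.0
    for i in range(1, n):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold separates equal feature values
        left = [lab for _, lab in pairs[:i]]
        right = [lab for _, lab in pairs[i:]]
        child = (len(left) * gini(left) + len(right) * gini(right)) / n
        best = max(best, parent - child)
    return best


def rank_features(rows: List[Sequence[float]], labels: Sequence[int], names: Sequence[str]):
    """Rank features by best single-split Gini gain, highest first."""
    gains = {name: best_split_gain([r[j] for r in rows], labels) for j, name in enumerate(names)}
    return sorted(gains.items(), key=lambda kv: kv[1], reverse=True)
```

Because each score comes from an explicit threshold on one feature, the ranking stays interpretable. That matches the emphasis on interpretable feature selection for a pre-screening stage.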

In this paper, we propose a video analytics system to identify the behavior of turkeys. Turkey behavior provides evidence for assessing turkey welfare, which can be harmed by uncomfortable ambient temperatures and various diseases. In particular, healthy and sick turkeys differ in the duration and frequency of activities such as eating, drinking, preening, and aggressive interactions. Our system incorporates recent advances in object detection and tracking to automate the identification and analysis of turkey behavior captured by commercial-grade cameras. We combine deep learning and traditional image processing methods to address the challenges of this practical agricultural problem. The system also includes a web-based user interface for visualizing the automated analysis results. Together, these components provide an improved tool that lets turkey researchers assess turkey welfare without time-consuming and labor-intensive manual inspection.
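Since welfare is judged from the duration and frequency of activities, one downstream step is turning per-frame behavior labels into bout statistics. The sketch assumes that the detection and tracking stages already assign one behavior label per frame to each tracked bird, which is an assumption about the pipeline. Consecutive frames with the same label count as one bout. The function name and label strings are hypothetical.

```python
from itertools import groupby
from typing import Dict, List


def behavior_bouts(frame_labels: List[str], fps: float) -> Dict[str, Dict[str, float]]:
    """Summarize one tracked bird's per-frame behavior labels.

    Consecutive frames with the same label form one bout. For each behavior,
    'bouts' is how often it occurred (frequency) and 'seconds' is the total time
    spent in it (duration), using the video frame rate to convert frames to time.
    """
    summary: Dict[str, Dict[str, float]] = {}
    for label, run in groupby(frame_labels):
        n_frames = sum(1 for _ in run)
        stats = summary.setdefault(label, {"bouts": 0, "seconds": 0.0})
        stats["bouts"] += 1
        stats["seconds"] += n_frames / fps
    return summary
```

In practice, per-frame classifiers flicker, so very short runs may need to be smoothed or merged before counting. Otherwise a single misclassified frame splits one bout into two and inflates the frequency.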