Ubiquitous throughout the history of photography, white borders on photo prints and vintage Polaroids remain useful as new technologies including augmented reality emerge for general use. In contemporary optical see-through augmented reality (OST-AR) displays, physical transparency limits the visibility of dark stimuli. However, recent research shows that two simple image manipulations, white borders and outer glows, have a strong visual effect, making dark objects appear darker and more opaque. In this work, the practical value of known, inter-related effects including lightness induction, glare illusion, Cornsweet illusion, and simultaneous contrast is explored. The results show promising improvements to visibility and visual quality in future OST-AR interfaces.

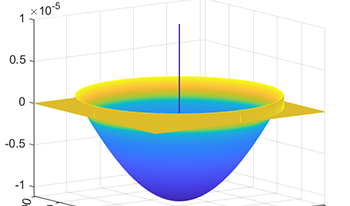

Rudd and Zemach analyzed brightness/lightness matches performed with disk/annulus stimuli under four contrast polarity conditions, in which the disk was either a luminance increment or decrement with respect to the annulus, and the annulus was either an increment or decrement with respect to the background. In all four cases, the disk brightness, measured by the luminance of a matching disk, exhibited a parabolic dependence on the annulus luminance when plotted on a log-log scale. Rudd further showed that the shape of this parabolic relationship can be influenced by instructions to match the disk’s brightness (perceived luminance), brightness contrast (perceived disk/annulus luminance ratio), or lightness (perceived reflectance) under different assumptions about the illumination. Here, I compare the results of those experiments to results of other recent experiments in which the match disk used to measure the target disk appearance was not surrounded by an annulus. I model the entire body of data with a neural model involving edge integration and contrast gain control in which top-down influences controlling the weights given to edges in the edge integration process act either before or after the contrast gain control stage of the model, depending on the stimulus configuration and the observer’s assumptions about the nature of the illumination.
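As a rough illustration of what a parabolic dependence on a log-log scale means, the sketch below fits log10(match luminance) as a quadratic function of log10(annulus luminance) by ordinary least squares. The function name `fit_log_parabola`, the luminance values, and the coefficients are all hypothetical and chosen only for the demonstration; they are not data or parameters from the experiments described above, and the fit is not the neural model itself.

```python
import math

def fit_log_parabola(annulus_lum, match_lum):
    """Least-squares fit of log10(match) = a + b*x + c*x**2, with x = log10(annulus)."""
    xs = [math.log10(v) for v in annulus_lum]
    ys = [math.log10(v) for v in match_lum]
    # Normal equations for the polynomial basis (1, x, x^2).
    s = [sum(x ** k for x in xs) for k in range(5)]
    t = [sum(y * x ** k for x, y in zip(xs, ys)) for k in range(3)]
    m = [[s[i + j] for j in range(3)] + [t[i]] for i in range(3)]
    # Gauss-Jordan elimination with partial pivoting on the 3x4 augmented matrix.
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(3):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [vr - factor * vc for vr, vc in zip(m[r], m[col])]
    return tuple(m[i][3] / m[i][i] for i in range(3))

# Synthetic example: generate matches from a known parabola, then recover it.
annulus = [1, 2, 5, 10, 20, 50, 100]   # hypothetical annulus luminances
true_a, true_b, true_c = 0.5, 0.8, -0.1  # hypothetical coefficients
matches = [10 ** (true_a + true_b * math.log10(v) + true_c * math.log10(v) ** 2)
           for v in annulus]
a, b, c = fit_log_parabola(annulus, matches)
print(a, b, c)
```

In such a fit, the quadratic coefficient `c` captures the curvature of the relationship, which is the quantity that would change if instructions alter the shape of the parabola.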

There are a variety of computational formulations of retinex, but it is the center/surround convolutional variant that is of interest to us here. In convolutional retinex, an image is filtered by a center/surround operator that is designed to mitigate the effects of shading, which in turn compresses the dynamic range. The parameters that define the shape and extent of these filters are tuned to give the “best” results. In their 1988 paper, Hurlbert & Poggio showed that the problem can be formulated as a regression, where corresponding pairs of images with and without the effects of shading are related by a center/surround convolution filter that is found by solving an optimization. This paper starts with the observation that finding a sufficiently large, representative set of image pairs with and without shading is difficult. This leads us to reformulate the Hurlbert & Poggio approach so that we analytically integrate over whole sets of shadings and albedos, which means that no sampling is required. Rather nicely, the derived filters are found in closed form and have a smooth shape, unlike the filters derived by the prior art. Experiments validate our method.
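To make the center/surround idea concrete, here is a minimal one-dimensional sketch that filters a log-luminance scanline with a narrow Gaussian center minus a wide Gaussian surround. The difference-of-Gaussians shape, the sigma values, and all function names are assumptions made for illustration; this is a generic hand-tuned filter, not the closed-form filter derived in the paper nor the regression-based filter of Hurlbert & Poggio.

```python
import math

def gaussian_kernel(sigma, radius):
    """Normalized 1D Gaussian kernel with 2*radius + 1 taps."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def convolve_clamped(signal, kernel):
    """Filter a 1D signal, replicating the edge samples at the boundaries.
    The kernel is symmetric, so correlation and convolution coincide."""
    radius = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)
            acc += w * signal[j]
        out.append(acc)
    return out

def center_surround(log_signal, sigma_center=1.0, sigma_surround=20.0):
    """Center minus surround response on a log-luminance signal (assumed DoG shape)."""
    center = convolve_clamped(
        log_signal, gaussian_kernel(sigma_center, int(3 * sigma_center)))
    surround = convolve_clamped(
        log_signal, gaussian_kernel(sigma_surround, int(3 * sigma_surround)))
    return [c - s for c, s in zip(center, surround)]

# Synthetic scanline: an albedo step multiplied by a smooth shading gradient.
albedo = [0.2] * 50 + [0.8] * 50
shading = [1.0 - 0.8 * i / 99 for i in range(100)]
log_image = [math.log(a * s) for a, s in zip(albedo, shading)]
response = center_surround(log_image)
```

Because both kernels sum to one and the filter operates on log values, scaling the whole scanline by a constant illumination factor leaves the response unchanged, while the albedo step still produces a signed response at the edge.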

Predicting the final appearance of a print is crucial in the graphic industry. The aim of this work is to build a mathematical model to predict the visual gloss of 2D printed samples. We conducted a psychophysical experiment in which observers judged the gloss of samples with different colours and different gloss values. For the psychophysical experiment, a new reference scale was built. Using the results from the psychophysical experiment, a mathematical prediction model for the visual assessment of gloss has been developed. Principal Component Analysis was used to explain and predict the perceived gloss, reducing the model to three dimensions: specular gloss measured at 60°, Lightness (L*) and Distinctness of Image (DOI).

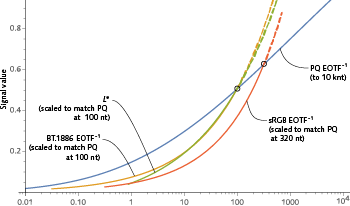

High dynamic range (HDR) technology enables a much wider range of luminances – both relative and absolute – than standard dynamic range (SDR). HDR extends black to lower levels, and white to higher levels, than SDR. HDR enables higher absolute luminance at the display to be used to portray specular highlights and direct light sources, a capability that was not available in SDR. In addition, HDR programming is mastered with wider color gamut, usually DCI P3, wider than the BT.1886 (“BT.709”) gamut of SDR. The capabilities of HDR strain the usual SDR methods of specifying color range. New methods are needed. A proposal has been made to use CIELAB to quantify HDR gamut. We argue that CIE L* is only appropriate for applications having contrast range not exceeding 100:1, so CIELAB is not appropriate for HDR. In practice, L* cannot accurately represent lightness that significantly exceeds diffuse white – that is, L* cannot reasonably represent specular reflections and direct light sources. In brief: L* is inappropriate for HDR. We suggest using metrics based upon ST 2084/BT.2100 PQ and its associated color encoding, IC<sub>T</sub>C<sub>P</sub>.
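For readers who want to compare the two scales numerically, the sketch below evaluates the standard CIE 1976 L* formula, which is defined relative to a reference white, alongside the ST 2084 PQ inverse EOTF, which is defined on absolute luminance up to 10,000 cd/m². The function names and the choice of 100 cd/m² as diffuse white are assumptions made for this illustration; they are not taken from the text above.

```python
import math

def cie_lightness(y_rel):
    """CIE 1976 L* from luminance relative to the reference white (Y / Yn)."""
    delta = 6.0 / 29.0
    if y_rel > delta ** 3:
        f = y_rel ** (1.0 / 3.0)
    else:
        # Linear segment near black.
        f = y_rel / (3.0 * delta ** 2) + 4.0 / 29.0
    return 116.0 * f - 16.0

def pq_encode(luminance_cd_m2):
    """ST 2084 (PQ) inverse EOTF: absolute luminance in cd/m^2 to a signal in [0, 1]."""
    m1 = 2610.0 / 16384.0
    m2 = 2523.0 / 4096.0 * 128.0
    c1 = 3424.0 / 4096.0
    c2 = 2413.0 / 4096.0 * 32.0
    c3 = 2392.0 / 4096.0 * 32.0
    y = max(luminance_cd_m2, 0.0) / 10000.0  # normalize to the PQ peak
    y_m1 = y ** m1
    return ((c1 + c2 * y_m1) / (1.0 + c3 * y_m1)) ** m2

DIFFUSE_WHITE = 100.0  # assumed diffuse-white luminance in cd/m^2 (illustrative only)

for lum in (0.01, 1.0, 100.0, 1000.0, 10000.0):
    l_star = cie_lightness(lum / DIFFUSE_WHITE)
    print(f"{lum:>8} cd/m^2  L* = {l_star:7.2f}  PQ = {pq_encode(lum):.4f}")
```

With this assumed anchoring, luminances above diffuse white map to L* values above 100, outside the range the formula is normally used for, whereas the PQ signal stays within [0, 1] across the whole range.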

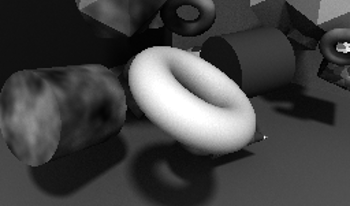

Research on human lightness perception has revealed important principles of how we perceive achromatic surface color, but has resulted in few image-computable models. Here we examine the performance of a recent artificial neural network architecture in a lightness matching task. We find similarities between the network’s behaviour and human perception. The network has human-like levels of partial lightness constancy, and its log reflectance matches are an approximately linear function of log illuminance, as is the case with human observers. We also find that previous computational models of lightness perception have much weaker lightness constancy than is typical of human observers. We briefly discuss some challenges and possible future directions for using artificial neural networks as a starting point for models of human lightness perception.

Translucency optically results from subsurface light transport and plays a considerable role in how objects and materials appear. Absorption and scattering coefficients parametrize the distance a photon travels inside the medium before it gets absorbed or scattered, respectively. Stimuli produced by a material for a distinct viewing condition are perceptually non-uniform with respect to these coefficients. In this work, we use multi-grid optimization to embed a non-perceptual absorption-scattering space into a perceptually more uniform space for translucency and lightness. In this process, we rely on A (alpha) as a perceptual translucency metric. Small Euclidean distances in the new space are roughly proportional to lightness and apparent translucency differences measured with A. This makes picking A more practical and predictable, and is a first step toward a perceptual translucency space.
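As background for how the coefficients relate to travel distance, the relations below state the usual exponential-attenuation picture for a homogeneous medium. The symbols $\sigma_a$ and $\sigma_s$ for the absorption and scattering coefficients and $d$ for path length are notation assumed here; the text above does not define them.

$$
P_{\text{not absorbed}}(d) = e^{-\sigma_a d}, \qquad
P_{\text{not scattered}}(d) = e^{-\sigma_s d}, \qquad
\bar{d}_a = \frac{1}{\sigma_a}, \qquad
\bar{d}_s = \frac{1}{\sigma_s}
$$

Under this assumption, the mean distance before absorption or scattering is the reciprocal of the corresponding coefficient, so equal steps in a coefficient correspond to unequal steps in mean free path, which is one way to see why the raw coefficient space need not be perceptually uniform.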

Computer simulations of an extended version of a neural model of lightness perception [1,2] are presented. The model provides a unitary account of several key aspects of spatial lightness phenomenology, including contrast and assimilation, and asymmetries in the strengths of lightness and darkness induction. It does this by invoking mechanisms that have also been shown to account for the overall magnitude of dynamic range compression in experiments involving lightness matches made to real-world surfaces [2]. The model assumptions are derived partly from parametric measurements of the visual responses of ON and OFF cells in the lateral geniculate nucleus of the macaque monkey [3,4] and partly from human quantitative psychophysical measurements. The model’s computations and architecture are consistent with the properties of human visual neurophysiology as they are currently understood. The neural model's predictions and behavior are contrasted through the simulations with those of other lightness models, including Retinex theory [5] and the lightness filling-in models of Grossberg and his colleagues [6].