With the advancement of digital printing technology, the rapid detection of inkjet defects has become a critical research area in OnePass inkjet printing. These defects can disrupt the continuity and smoothness of the image, leading to a decrease in print quality. To overcome these issues, this study proposes an inkjet defect detection method based on printing characterization. Image processing is used to obtain the printing characterization information of the printer and to extract the ink point positions. The allocation matrix is optimized with the Sinkhorn algorithm and combined with the Robust Point Matching algorithm to construct the transmission objective function, which yields the optimal point set matching model. This model serves two purposes: diagnosing the nozzle function status of each printhead and quantifying the alignment errors between printheads. Experiments demonstrate the high precision of this detection method. We analyze the impact of related parameters on the model's performance and assess changes in image quality under different alignment errors. This approach provides a new solution for optimizing printer maintenance.

Computer vision has become increasingly important in smart farming applications, including scheduling crop irrigation. A combination of various remote sensing devices enables continuous monitoring of a crop and non-destructive prediction of irrigation time. Appropriately scheduled and precisely targeted irrigation enables sustainable use of water, a limited resource. In agriculture, absorption-based and thermal-based imagery are used to monitor plant conditions through indices such as the Normalized Difference Water Index (NDWI) and the Crop Water Stress Index (CWSI). This paper provides an overview of the concept and components of monitoring systems for automated irrigation scheduling. It explains the potential and limitations of applying computer vision-based systems for plant stress detection, providing insights to advance understanding in this growing field.
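The two indices named above can be sketched per pixel as follows. This is a minimal example under stated assumptions: NDWI is written in its near-infrared/shortwave-infrared form, and CWSI in the simplified form that uses wet and dry reference temperatures. The paper may rely on other variants, and the function and parameter names are hypothetical.

```python
def ndwi(nir, swir):
    """Normalized Difference Water Index from NIR and SWIR reflectance."""
    total = nir + swir
    if total == 0:
        return 0.0  # Avoid division by zero for empty pixels.
    return (nir - swir) / total


def cwsi(canopy_temp, wet_ref_temp, dry_ref_temp):
    """Crop Water Stress Index from canopy and reference temperatures.

    0 corresponds to a fully transpiring (unstressed) canopy and
    1 to a non-transpiring (fully stressed) canopy.
    """
    span = dry_ref_temp - wet_ref_temp
    if span <= 0:
        raise ValueError("dry reference must be warmer than wet reference")
    value = (canopy_temp - wet_ref_temp) / span
    return min(1.0, max(0.0, value))  # Clamp to the valid range.


# Hypothetical readings for a single pixel.
water_index = ndwi(nir=0.5, swir=0.25)
stress_index = cwsi(canopy_temp=30.0, wet_ref_temp=25.0, dry_ref_temp=35.0)
```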

Single image dehazing is important in intelligent vision systems. The dark channel prior (DCP) is invalid in bright areas such as the sky, which causes severe distortion in those areas of the recovered image. We therefore propose a novel dehazing method based on transmission map segmentation and prior knowledge. First, we divide the hazy input into bright and non-bright areas; we then estimate the transmission map via DCP in the non-bright areas and propose a transmission map compensation function for correction in the bright areas. Next, we fuse the DCP and the bright channel prior (BCP) to accurately estimate the atmospheric light, and finally restore the clear image according to the physical model. Experiments show that our method resolves the DCP distortion problem in bright regions of images and is competitive with state-of-the-art methods.
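For context, the baseline DCP pipeline that the method builds on can be sketched as below. This shows only the standard dark channel, transmission estimate, and haze-model inversion, not the proposed segmentation or compensation function. The values `omega=0.95` and `t_min=0.1`, the patch radius, and all function names are assumptions for illustration; pixels are RGB tuples in the range 0 to 1.

```python
def dark_channel(image, radius=1):
    """Minimum over RGB channels, then minimum over a square patch."""
    h, w = len(image), len(image[0])
    min_rgb = [[min(px) for px in row] for row in image]
    dark = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dark[y][x] = min(
                min_rgb[j][i]
                for j in range(max(0, y - radius), min(h, y + radius + 1))
                for i in range(max(0, x - radius), min(w, x + radius + 1))
            )
    return dark


def estimate_transmission(image, atmospheric_light, omega=0.95, radius=1):
    """t = 1 - omega * dark_channel(I / A)."""
    normalized = [[tuple(c / a for c, a in zip(px, atmospheric_light))
                   for px in row] for row in image]
    return [[1.0 - omega * d for d in row]
            for row in dark_channel(normalized, radius)]


def recover(image, transmission, atmospheric_light, t_min=0.1):
    """Invert the haze model I = J * t + A * (1 - t) for J."""
    return [[tuple((c - a) / max(t, t_min) + a
                   for c, a in zip(px, atmospheric_light))
             for px, t in zip(row, t_row)]
            for row, t_row in zip(image, transmission)]


# A sky-like pixel next to a darker, colorful pixel (hypothetical values).
hazy = [[(0.9, 0.9, 0.9), (0.5, 0.1, 0.3)]]
airlight = (1.0, 1.0, 1.0)
transmission = estimate_transmission(hazy, airlight, radius=0)
```

The sky-like pixel receives a very low transmission estimate even though it may contain little haze, so dividing by that estimate amplifies noise and color shifts. This is the bright-region failure that a compensation step is meant to correct.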

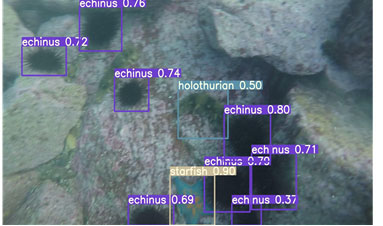

This paper proposes an underwater object detection algorithm based on lightweight structure optimization to address the low detection accuracy and difficult deployment in underwater robot dynamic inspection caused by low light, blurriness, and low contrast. The algorithm builds upon YOLOv7 by incorporating the attention mechanism of the convolutional module into the backbone network to enhance feature extraction in low light and blurred environments. Furthermore, the feature fusion enhancement module is optimized to control the shortest and longest gradient paths for fusion, improving the feature fusion ability while reducing network complexity and size. The output module of the network is also optimized to improve convergence speed and detection accuracy for underwater fuzzy objects. Experimental verification using real low-light underwater images demonstrates that the optimized network improves the object detection accuracy (mAP) by 11.7%, the detection rate by 2.9%, and the recall rate by 15.7%. Moreover, it reduces the model size by 20.2 MB with a compression ratio of 27%, making it more suitable for deployment in underwater robot applications.

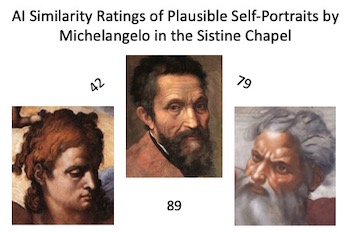

Three of the faces in Michelangelo's Sistine Chapel frescoes are recognized as portraits: his own sardonic self-portrait in the flayed skin held by St Bartholomew, who is himself a portrait of the scathing satirist Pietro Aretino, and the depiction of Mary as a portrait of his spiritual soulmate Vittoria Colonna. The first analysis was of the faces of God and of the patriarch Jacob, which AI-based facial ratings scored as highly similar to each other and to a portrait of Michelangelo. A second set of young faces (Jesus, Adam and Sebastian) was also rated as highly similar to God and to each other. These ratings suggest that Michelangelo depicted himself as all these central figures in the Sistine Chapel frescoes. Similar ratings of several young women across the ceiling suggest that they were further portraits of Vittoria Colonna, and that she had posed for Michelangelo as a model for the Sistine Chapel personages in her younger years.

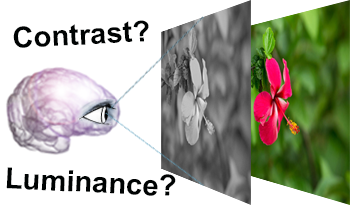

Grayscale images are essential in image processing and computer vision tasks. They effectively emphasize luminance and contrast, highlighting important visual features, while also being easily compatible with other algorithms. Moreover, their simplified representation makes them efficient for storage and transmission. While preserving contrast is important for maintaining visual quality, other factors, such as preserving information relevant to the specific application or task at hand, may be more critical for achieving optimal performance. To evaluate and compare different decolorization algorithms, we designed a psychological experiment. During the experiment, participants were instructed to imagine color images in a hypothetical "colorless world" and select the grayscale image that best resembled their mental visualization. We compared two types of algorithms: (i) perception-based simple color space conversion algorithms, and (ii) spatial contrast-based algorithms, including iteration-based methods. Our experimental findings indicate that CIELAB exhibited superior performance on average, providing further evidence for the effectiveness of perception-based decolorization algorithms. The spatial contrast-based algorithms, by contrast, performed relatively poorly, possibly due to factors such as DC-offset and artificial contrast generation. However, these algorithms were associated with shorter selection times. Notably, no single algorithm consistently outperformed the others across all test images. In this paper, we discuss the significance of contrast and luminance in color-to-grayscale mapping based on our experimental results and analysis.
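A perception-based color space conversion of the kind compared above can be sketched by keeping only the CIELAB lightness L* of each pixel. The abstract does not state how the CIELAB conversion was implemented, so the sRGB input, the D65 white point, and the function names below are assumptions.

```python
def srgb_to_lightness(r, g, b):
    """Map an 8-bit sRGB triple to CIELAB lightness L* (0 to 100)."""
    def linearize(channel):
        # Undo the sRGB transfer curve to get linear light.
        c = channel / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    # Relative luminance Y for sRGB primaries with a D65 white point.
    y = (0.2126 * linearize(r)
         + 0.7152 * linearize(g)
         + 0.0722 * linearize(b))

    # CIELAB companding: cube root above a small threshold, linear below.
    delta = 6 / 29
    f = y ** (1 / 3) if y > delta ** 3 else y / (3 * delta ** 2) + 4 / 29
    return 116 * f - 16


def lightness_to_gray(lightness):
    """Scale L* in [0, 100] to an 8-bit gray level."""
    return round(lightness / 100 * 255)


# Saturated green maps to a much lighter gray than saturated blue.
green_gray = lightness_to_gray(srgb_to_lightness(0, 255, 0))
blue_gray = lightness_to_gray(srgb_to_lightness(0, 0, 255))
```

Because each pixel is mapped independently, a conversion like this cannot create contrast between two colors of equal lightness. Spatial contrast-based algorithms target exactly that case.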

Training a learning-based prediction model typically requires large datasets. One of the major restrictions of machine learning applications that use customized databases is the cost of human labor. Previous papers [3, 4, 5] demonstrated experimentally that thin-film nitrate sensor performance correlates with surface texture. They also explored several methods for extracting texture features from sensor images, applied repeated cross-validation and a hyperparameter auto-tuning method, and built several machine learning models to improve prediction accuracy. This paper presents a new way to achieve the same accuracy with a much smaller labeled dataset by using an active learning structure.
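The abstract does not specify the query strategy behind the active learning structure. As one common possibility, the sketch below uses least-confidence sampling: among the unlabeled samples, the ones whose most likely class has the lowest predicted probability are sent for labeling first. The function name, batch size, and probabilities are hypothetical.

```python
def least_confident(probabilities, batch_size):
    """Return indices of the samples the model is least sure about.

    `probabilities` holds one list of class probabilities per unlabeled
    sample, as produced by any probabilistic classifier.
    """
    ranked = sorted(range(len(probabilities)),
                    key=lambda i: max(probabilities[i]))
    return ranked[:batch_size]


# Hypothetical model outputs for four unlabeled sensor images.
pool_probabilities = [
    [0.95, 0.05],
    [0.55, 0.45],
    [0.10, 0.90],
    [0.48, 0.52],
]
to_label = least_confident(pool_probabilities, batch_size=2)
```

In a full loop, the selected samples would be labeled, added to the training set, and the model retrained before the next round of selection.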

We live in a visual world. The perceived quality of images is of crucial importance in industrial, medical, and entertainment application environments. Developments in camera sensors, image processing, 3D imaging, display technology, and digital printing are enabling new or enhanced possibilities for creating and conveying visual content that informs or entertains. Wireless networks and mobile devices expand the ways to share imagery, and autonomous vehicles bring image processing into new aspects of society. The power of imaging rests directly on the visual quality of the images and the performance of the systems that produce them. As the images are generally intended to be viewed by humans, a deep understanding of human visual perception is key to the effective assessment of image quality.

The COVID-19 virus induces infection in both the upper respiratory tract and the lungs. Chest X-rays (CXR) are widely used to diagnose various lung diseases. Considering chest X-ray and CT images, we explore deep-learning-based models, namely AlexNet, VGG16, VGG19, ResNet50, and ResNet101V2, to classify images as representing either COVID-19 infection or normal health. We analyze and present the impact of transfer learning, normalization, resizing, augmentation, and shuffling on the performance of these models. We also explore the vision transformer (ViT) model to classify the CXR images. The ViT model incorporates multi-headed attention to capture more global information at lower layers, in contrast to CNN models. This mechanism leads to quantitatively diverse features. The ViT model renders consolidated intermediate representations considering the training data. For experimental analysis, we use two standard datasets and report the following performance metrics: accuracy, precision, recall, and F1-score. The ViT model, driven by the self-attention mechanism and long-range context learning, outperforms the other models.
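The four reported metrics follow directly from the confusion counts of a binary classifier. The sketch below treats COVID-19 as the positive class (label 1); the function name and the sample labels are made up for illustration and are not results from the paper.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for labels in {0, 1}."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

    accuracy = (tp + tn) / len(y_true)
    # Guard against empty denominators when a class is never predicted.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Illustrative labels only: 1 = COVID-19, 0 = normal.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
metrics = binary_metrics(y_true, y_pred)
```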

Recent advances in convolutional neural networks and vision transformers have brought about a revolution in the area of computer vision. Studies have shown that the performance of deep learning-based models is sensitive to image quality. The human visual system is trained to infer semantic information from poor quality images, but deep learning algorithms may find it challenging to perform this task. In this paper, we study the effect of image quality and color parameters on deep learning models trained for the task of semantic segmentation. One of the major challenges in benchmarking robust deep learning-based computer vision models is the lack of challenging data covering different quality and color parameters. We generated data from a subset of the standard semantic segmentation benchmark dataset (ADE20K) with the goal of studying the effect of different quality and color parameters on the semantic segmentation task. To the best of our knowledge, this is one of the first attempts to benchmark semantic segmentation algorithms under different color and quality parameters, and we expect this study to motivate further research in this direction.