Hyperspectral imaging (HSI) has been widely used in conservation studies of various cultural heritage (CH) objects, e.g., paintings, murals, and handwritten historical manuscripts. In this work, HSI is used to study painted historical maps, namely five maps of the Scandinavia region from the Ortelius collection preserved at the National Library of Norway in Oslo. Drawing on knowledge of how colour was applied and used on these maps, HSI-based pigment identification is performed under several spectral mixing assumptions: pure pigments, subtractive mixing, and additive mixing. The results are discussed and show that both the pure pigment and the subtractive mixing models are suitable for pigment identification in the case of watercolour applied on a paper substrate.
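The three mixing assumptions can be illustrated with a minimal sketch that matches a pixel spectrum against a small reference library. It assumes the following, none of which the abstract specifies:

- Reflectance spectra are already resampled to a common wavelength grid.
- The pure pigment model uses the spectral angle.
- The additive model is a linear combination of two reference reflectances.
- The subtractive model is a linear combination of absorbances (-log R), i.e. a weighted geometric mean of reflectances.

The names `match_pure`, `match_mixture`, and `EPS` are hypothetical. The weights are unconstrained least-squares estimates; a real pipeline would likely enforce non-negativity.

```python
import numpy as np
from itertools import combinations

EPS = 1e-6  # hypothetical offset that avoids log(0) for very dark samples


def spectral_angle(a, b):
    """Spectral angle (radians) between two spectra; insensitive to overall scaling."""
    cos = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))
    return float(np.arccos(np.clip(cos, -1.0, 1.0)))


def match_pure(pixel, library):
    """Pure-pigment model: name of the reference spectrum closest in spectral angle."""
    return min(library, key=lambda name: spectral_angle(pixel, library[name]))


def _fit_pair(target, r1, r2):
    """Unconstrained least-squares weights for target ~ w0*r1 + w1*r2, plus residual norm."""
    A = np.column_stack([r1, r2])
    w, *_ = np.linalg.lstsq(A, target, rcond=None)
    return w, float(np.linalg.norm(A @ w - target))


def match_mixture(pixel, library, model="subtractive"):
    """Best two-pigment mixture as ((name1, name2), weights, residual)."""
    best = None
    for n1, n2 in combinations(library, 2):
        r1, r2 = library[n1], library[n2]
        if model == "additive":
            # Additive: reflectances combine linearly.
            w, err = _fit_pair(pixel, r1, r2)
        else:
            # Subtractive: absorbances (-log R) combine linearly, i.e. R is a
            # weighted geometric mean of the pigment reflectances.
            w, err = _fit_pair(-np.log(pixel + EPS), -np.log(r1 + EPS), -np.log(r2 + EPS))
        if best is None or err < best[2]:
            best = ((n1, n2), w, err)
    return best
```

The library is a plain dict mapping pigment names to reflectance arrays. The pair with the smallest residual is reported, so comparing residuals across models gives a rough indication of which mixing assumption fits a region better.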

This work presents insights into the imaging workflows of cultural heritage (CH) domain experts, gathered through an online survey. Non-invasive 2D imaging technology has become a cornerstone in the analysis and documentation of cultural heritage artefacts. Techniques such as hyperspectral imaging (HSI) and X-ray fluorescence (XRF) can reveal material properties, artistic processes, and conservation states. Existing analysis and visualisation tools offer functionality for specific data types but lack the integration needed for holistic multimodal analysis. To address these limitations, we conducted a structured survey targeting researchers and practitioners in CH who work with imaging technology. The survey explores their workflows, imaging technology usage, and software preferences. This study identifies key trends, challenges, and feature requirements.

Mobile phone cameras are imaging tools that are rapidly being adopted by various industries due to their portability and ease of use. Although they are not yet an established imaging tool for cultural heritage, interest in their potential use within the field has grown. To better understand how cultural heritage professionals regard mobile phone cameras as tools for various types of documentation, a survey was designed and sent to cultural heritage groups involved in imaging. Its goal was to determine whether these cameras are practical imaging devices in circumstances where a studio or a DSLR may not be readily available. Initial results show a variety of responses and indicate that mobile phones are already being used for various types of documentation.

A continuing challenge for preservation is the lack of objective data on which to base informed collection decisions. In a shared national print system, this challenge affects withdrawal and retention decisions, since catalog partners may not have data on the condition of one another's volumes. This conundrum led to a national research initiative funded by the Mellon Foundation, "Assessing the Physical Condition of the National Collection." The project captured and analyzed condition data from 500 "identical" volumes from five American research libraries to explore the following questions: What is the condition of book collections from 1840–1940? Can condition be predicted from catalog or physical parameters? What assessment tools might indicate a book's life expectancy? Filling gaps in knowledge about the physicality of our collections is helping to identify at-risk collections and to explain, through the impact of paper composition, why nominally "same" volumes differ in condition. Predictive modeling and assessment tools are also being used to improve understanding of what is typical for specific eras.

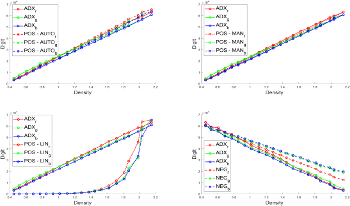

Size reduction of a point cloud or triangulated mesh is an intrinsic part of a three-dimensional (3D) documentation process. It reduces the data volume and filters out erroneous and redundant data obtained during acquisition. Further reduction, however, affects the geometric accuracy of the 3D data relative to the tangible object. For 3D objects used in cultural heritage applications, small geometric details are as important as large ones. In this paper, we investigate several simplification algorithms and examine how relevant various geometric features are to geometric accuracy as a 3D object's data size is reduced. We also ask whether any of these features is particularly related to the results of a given algorithmic approach. Different simplification algorithms have been applied to several primitive geometric shapes at several reduction stages, and measured values for geometric features and accuracy have been tracked across every stage. We then compute and analyze the correlations between these values to determine the effect each algorithm has on different geometries. Finally, we assess whether some algorithms are better suited to a simplification process based on the geometric features of a 3D object.
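The tracking-and-correlation procedure can be sketched as follows, under several assumptions:

- Voxel-grid clustering stands in for "a simplification algorithm". It is one common example, not necessarily one of the algorithms studied.
- The retained-point fraction stands in for a tracked geometric feature.
- The RMS nearest-point deviation stands in for the accuracy measure.

All function names and parameter values are hypothetical.

```python
import numpy as np


def voxel_downsample(points, voxel):
    """Voxel-grid simplification: replace the points in each occupied voxel by their centroid."""
    keys = np.floor(points / voxel).astype(np.int64)
    _, inverse = np.unique(keys, axis=0, return_inverse=True)
    inverse = inverse.ravel()  # flatten: some NumPy versions return a 2-D inverse here
    counts = np.bincount(inverse)
    sums = np.zeros((counts.size, points.shape[1]))
    np.add.at(sums, inverse, points)
    return sums / counts[:, None]


def rms_deviation(original, simplified):
    """RMS distance from each original point to its nearest simplified point (brute force)."""
    d2 = ((original[:, None, :] - simplified[None, :, :]) ** 2).sum(axis=2)
    return float(np.sqrt(d2.min(axis=1).mean()))


def track_stages(points, voxel_sizes):
    """Retained-point fraction and RMS deviation at each reduction stage."""
    fractions, errors = [], []
    for v in voxel_sizes:
        simplified = voxel_downsample(points, v)
        fractions.append(len(simplified) / len(points))
        errors.append(rms_deviation(points, simplified))
    return np.array(fractions), np.array(errors)


# Example primitive: 800 random points on a unit sphere.
rng = np.random.default_rng(0)
sphere = rng.normal(size=(800, 3))
sphere /= np.linalg.norm(sphere, axis=1, keepdims=True)

fractions, errors = track_stages(sphere, [0.05, 0.1, 0.2, 0.4])
# Pearson correlation between the tracked feature and the accuracy measure.
r = np.corrcoef(fractions, errors)[0, 1]
```

The same loop can be repeated per algorithm and per primitive (plane, cylinder, cube, and so on). Comparing the resulting correlation coefficients then shows which algorithm degrades which geometry fastest.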

Since the advent of the Digital Intermediate (DI) and the Cineon system, motion picture film preservation and restoration practices have undergone an enormous change, driven by the possibility of digitizing and digitally restoring film materials. Today, film materials are mostly scanned with commercial film scanners, which encode the frames in the Academy Color Encoding Specification (ACES) and provide proprietary LUTs for negative-to-positive conversion, image enhancement, and color correction. The processing performed by these scanner systems is not always openly documented. The variety of digitization hardware and software can lead to different approaches and workflows in motion picture film preservation and restoration, resulting in inconsistencies among archives and laboratories. This work presents an overview of the main approaches and systems used to digitize and encode motion picture film frames, in order to explain their potential and limits.
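As a concrete reference point for what "encoding" means here, the sketch below shows the conventional conversion between 10-bit Cineon log code values and linear values. It assumes the commonly cited reference parameters: reference black 95, reference white 685, 0.002 density per code value, and a negative gamma of 0.6. Individual scanners and their proprietary LUTs may use different values, which is one source of the inconsistency described above. The function names are hypothetical.

```python
import numpy as np

# Commonly cited Cineon reference parameters (assumed; scanners may differ).
REF_BLACK = 95          # 10-bit code value mapped to linear 0
REF_WHITE = 685         # 10-bit code value mapped to linear 1
DENSITY_PER_CV = 0.002  # printing density per code value
NEG_GAMMA = 0.6         # assumed negative film gamma

_BLACK = 10.0 ** ((REF_BLACK - REF_WHITE) * DENSITY_PER_CV / NEG_GAMMA)


def cineon_to_linear(cv):
    """Map Cineon log code values to linear values (0 at ref black, 1 at ref white)."""
    cv = np.asarray(cv, dtype=float)
    lin = 10.0 ** ((cv - REF_WHITE) * DENSITY_PER_CV / NEG_GAMMA)
    return (lin - _BLACK) / (1.0 - _BLACK)


def linear_to_cineon(lin):
    """Inverse of cineon_to_linear."""
    lin = np.asarray(lin, dtype=float)
    return REF_WHITE + np.log10(lin * (1.0 - _BLACK) + _BLACK) * NEG_GAMMA / DENSITY_PER_CV
```

Code values above reference white map to linear values greater than 1. This headroom is what lets a log scan retain highlight detail from the negative.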

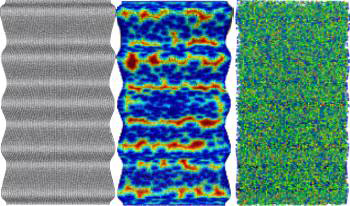

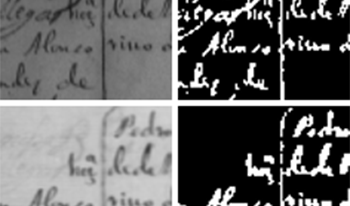

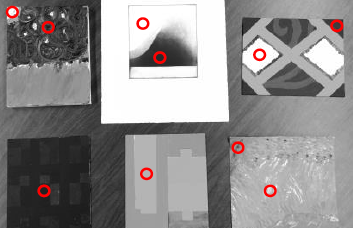

The purpose of this work is to present a new dataset of hyperspectral images of historical documents, consisting of 66 historical family tree samples from the 16th and 17th centuries imaged in two spectral ranges: VNIR (400-1000 nm) and SWIR (900-1700 nm). In addition, we evaluate different binarization algorithms, applied both to single spectral bands and to false RGB images generated from the hyperspectral cube.
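Both input types can be illustrated with a minimal sketch that uses Otsu's method as one example binarization algorithm. It assumes the following:

- The cube has shape (height, width, bands), with a matching wavelength array.
- Ink is darker than the substrate.
- The false RGB bands default to 700, 550, and 450 nm. This is a hypothetical choice; SWIR cubes would need other bands.

The names `otsu_threshold`, `binarize`, and `false_rgb` are illustrative, not the paper's code.

```python
import numpy as np


def otsu_threshold(img, bins=256):
    """Otsu's method: threshold that maximizes between-class variance of the histogram."""
    hist, edges = np.histogram(img.ravel(), bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(hist)                     # pixel count of the dark class per candidate
    w1 = w0[-1] - w0                         # pixel count of the bright class
    s0 = np.cumsum(hist * centers)
    m0 = s0 / np.maximum(w0, 1)              # dark-class mean
    m1 = (s0[-1] - s0) / np.maximum(w1, 1)   # bright-class mean
    between = w0 * w1 * (m0 - m1) ** 2
    return edges[1:][np.argmax(between)]     # upper edge of the last dark bin


def binarize(gray):
    """Ink mask: True where the pixel is at or below the Otsu threshold."""
    return gray <= otsu_threshold(gray)


def false_rgb(cube, wavelengths, bands=(700.0, 550.0, 450.0)):
    """Pick the bands nearest the requested wavelengths and scale each channel to [0, 1]."""
    idx = [int(np.argmin(np.abs(wavelengths - b))) for b in bands]
    rgb = cube[:, :, idx].astype(float)
    lo = rgb.min(axis=(0, 1))
    hi = rgb.max(axis=(0, 1))
    return (rgb - lo) / np.maximum(hi - lo, 1e-12)
```

A single band is binarized directly with `binarize(cube[:, :, k])`. A false RGB image can be reduced to grey first, e.g. `binarize(false_rgb(cube, wl).mean(axis=2))`.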

Simplification of 3D meshes is a fundamental part of most 3D workflows, reducing the amount of data to make it more manageable for a user. The unprocessed data include many redundancies and small errors introduced during the 3D acquisition process, which can often be removed safely without jeopardizing the model's function. Several algorithmic approaches are used across applications of 3D data, each with its own benefits and drawbacks, and there is currently no standardized algorithm for cultural heritage. This investigation statistically evaluates how geometric primitive shapes behave under different simplification approaches. It also assesses what information might be lost in an HBIM (Heritage Building Information Modeling) or change-monitoring process for cultural heritage if each of these approaches were applied to more complex manifolds.
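To make "simplification approach" concrete for triangle meshes, here is a minimal sketch of vertex clustering, one well-known approach that is not necessarily among those evaluated. It is applied to a hypothetical flat grid primitive. Vertices falling in the same cubic cell are merged into their centroid. Faces whose corners collapse into fewer than three clusters are dropped, as are duplicate faces. All names and the cell size are illustrative assumptions.

```python
import numpy as np


def grid_mesh(n):
    """Flat n x n vertex grid on [0, 1]^2 (z = 0), two triangles per cell."""
    xs = np.linspace(0.0, 1.0, n)
    X, Y = np.meshgrid(xs, xs, indexing="ij")
    V = np.column_stack([X.ravel(), Y.ravel(), np.zeros(n * n)])
    i, j = np.meshgrid(np.arange(n - 1), np.arange(n - 1), indexing="ij")
    a = (i * n + j).ravel()  # vertex (i, j)
    b = a + n                # vertex (i + 1, j)
    c = a + 1                # vertex (i, j + 1)
    d = b + 1                # vertex (i + 1, j + 1)
    F = np.concatenate([np.column_stack([a, b, d]), np.column_stack([a, d, c])])
    return V, F


def vertex_clustering(V, F, cell):
    """Merge vertices per cubic cell, then drop collapsed and duplicate faces."""
    keys = np.floor(V / cell).astype(np.int64)
    _, inv = np.unique(keys, axis=0, return_inverse=True)
    inv = inv.ravel()
    counts = np.bincount(inv)
    newV = np.zeros((counts.size, 3))
    np.add.at(newV, inv, V)
    newV /= counts[:, None]
    newF = inv[F]
    # A face survives only if its three corners landed in three different clusters.
    ok = (newF[:, 0] != newF[:, 1]) & (newF[:, 1] != newF[:, 2]) & (newF[:, 0] != newF[:, 2])
    newF = newF[ok]
    if newF.size == 0:
        return newV, newF.reshape(0, 3)
    # Remove faces that now reference the same vertex triple in any order.
    _, keep = np.unique(np.sort(newF, axis=1), axis=0, return_index=True)
    return newV, newF[np.sort(keep)]
```

On a plane nothing geometric is lost. On curved primitives or fine surface detail, every feature smaller than `cell` is averaged away. This is the kind of loss that would matter for HBIM or change monitoring.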

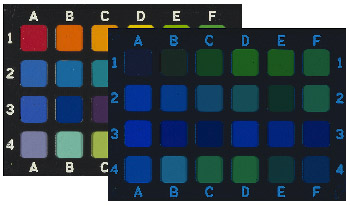

Cultural-heritage imaging is a critical part of the efforts to preserve world treasures. The field is so demanding of color accuracy that the inherent limitations of RGB imaging often become an issue. Imaging systems of increasing complexity have been proposed, up to and including those that report full spectral reflectance for each pixel. These systems improve color accuracy, but their complexity and slow operation hamper their widespread use in this field. A simpler and faster system combining bi-color lighting with dual-RGB processing is proposed; it improves the color accuracy achieved on profiling and verification targets. The system can be used with any off-the-shelf RGB camera, including prosumer models.
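The core idea of dual-RGB processing can be sketched as follows. Two RGB captures under different lights give six values per patch, and a transform is fitted from those six channels to reference colorimetry. This sketch assumes the following, none of which the abstract specifies:

- Linear, dark-corrected camera values.
- Known reference XYZ values for each target patch (D50, Y = 1).
- A plain 6x3 least-squares matrix fitted as the transform.
- CIE76 ΔE for verification.

The proposed system's actual processing may differ, and the function names are hypothetical.

```python
import numpy as np

D50_WHITE = np.array([0.96422, 1.0, 0.82521])  # XYZ of the D50 reference white (Y = 1)


def fit_dual_rgb(six_channel, xyz_ref):
    """Least-squares 6x3 matrix M with six_channel @ M ~ xyz_ref over the target patches."""
    M, *_ = np.linalg.lstsq(six_channel, xyz_ref, rcond=None)
    return M


def xyz_to_lab(xyz, white=D50_WHITE):
    """CIE 1976 L*a*b* from XYZ given on the same scale as the white point."""
    t = np.asarray(xyz, dtype=float) / white
    delta = 6.0 / 29.0
    f = np.where(t > delta ** 3, np.cbrt(t), t / (3 * delta ** 2) + 4.0 / 29.0)
    L = 116.0 * f[..., 1] - 16.0
    a = 500.0 * (f[..., 0] - f[..., 1])
    b = 200.0 * (f[..., 1] - f[..., 2])
    return np.stack([L, a, b], axis=-1)


def delta_e76(xyz_a, xyz_b):
    """Per-patch CIE76 color difference between two sets of XYZ values."""
    return np.linalg.norm(xyz_to_lab(xyz_a) - xyz_to_lab(xyz_b), axis=-1)
```

In use, the six channels would be `np.hstack([rgb_light_a, rgb_light_b])` for the profiling target. The matrix is then checked with `delta_e76(six_verif @ M, xyz_verif)` on a separate verification target. Fitting a 3x3 matrix to one capture alone gives the single-RGB baseline for comparison.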

The dynamic range that traditional image capture devices can record is limited by their design. Although an image sensor cannot capture, in a single exposure, the entire dynamic range that the human eye can see, imaging techniques have been developed to help accomplish this. Incorporating high dynamic range (HDR) imaging increases the range of contrast captured, which helps improve color accuracy. Cultural heritage institutions face limitations when trying to capture color-accurate reproductions of cultural heritage objects and materials. To mitigate this, a team of software engineers at RIT has developed a software application, BeyondRGB, that enables colorimetric and spectral processing of six-channel spectral images. This work aims to incorporate HDR imaging into the BeyondRGB computational pipeline to further improve color accuracy.
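A common way to combine bracketed exposures is a weighted average of per-frame radiance estimates (pixel value divided by exposure time). The sketch below assumes linear sensor data scaled to [0, 1], known exposure times, and a simple hat-shaped weight that ignores clipped pixels. It works element-wise, so each frame may be a six-channel array of shape (H, W, 6). How BeyondRGB actually integrates HDR is not described here, and `merge_hdr` and its `saturation` parameter are hypothetical.

```python
import numpy as np


def merge_hdr(frames, exposure_times, saturation=0.98):
    """Merge linear bracketed frames (values in [0, 1]) into a relative radiance map."""
    num = np.zeros_like(np.asarray(frames[0], dtype=float))
    den = np.zeros_like(num)
    for img, t in zip(frames, exposure_times):
        img = np.asarray(img, dtype=float)
        # Hat weight: trust mid-tones most, down-weight noisy dark values,
        # and give zero weight to clipped (saturated) values.
        w = 1.0 - np.abs(2.0 * img - 1.0)
        w[img >= saturation] = 0.0
        num += w * img / t  # each frame's radiance estimate is value / exposure time
        den += w
    # Pixels clipped in every frame get no valid estimate and end up as 0 here.
    return num / np.maximum(den, 1e-12)
```

For example, three frames at exposure times 1, 0.1, and 0.01 of a scene with relative radiances 0.1, 1.0, and 5.0 give back those radiances. The brightest pixel is recovered from the short exposures even though it clips in the longest one.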