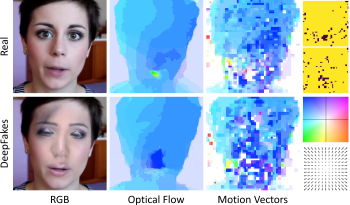

Video DeepFakes are fake media created with Deep Learning (DL) that manipulate a person’s expression or identity. Most current DeepFake detection methods analyze each frame independently, ignoring inconsistencies and unnatural movements between frames. Some newer methods employ optical flow models to capture this temporal aspect, but they are computationally expensive. In contrast, we propose using the related but often ignored Motion Vectors (MVs) and Information Masks (IMs) from the H.264 video codec to detect temporal inconsistencies in DeepFakes. Our experiments show that this approach is effective and adds minimal computational cost compared with per-frame RGB-only methods. This could lead to new real-time, temporally aware DeepFake detection methods for video calls and streaming.

In this paper, we propose a multimodal unsupervised video learning algorithm designed to incorporate information from any number of modalities present in the data. We cooperatively train one network per modality: at each stage of training, one of these networks is selected and trained using the outputs of the other networks. To verify our algorithm, we train a model using RGB, optical flow, and audio. We then evaluate the effectiveness of our unsupervised model through action classification and nearest-neighbor retrieval on a supervised dataset. We compare this three-modality model with contrastive learning models that use one or two modalities. Compared with using only RGB and optical flow, using all three modalities in tandem improves UCF101 classification accuracy by 1.5%, R@1 retrieval recall by 1.4%, R@5 retrieval recall by 3.5%, and R@10 retrieval recall by 2.4%, demonstrating the merit of using as many modalities as possible in a cooperative learning model.
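The R@K figures above are nearest-neighbor retrieval recalls. A common protocol for UCF101 retrieval, assumed here because the abstract does not spell it out, uses each test clip as a query against the training clips and counts a hit when any of the K nearest training embeddings shares the query's class. The minimal sketch below uses cosine similarity; the function names are hypothetical.

```python
import math
from typing import Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def recall_at_k(query_emb: Sequence[Sequence[float]], query_labels: Sequence[str],
                gallery_emb: Sequence[Sequence[float]], gallery_labels: Sequence[str],
                k: int) -> float:
    """Fraction of queries whose top-k gallery neighbors include the query's class."""
    hits = 0
    for q, label in zip(query_emb, query_labels):
        # Rank gallery items by decreasing similarity to this query.
        ranked = sorted(range(len(gallery_emb)),
                        key=lambda i: cosine(q, gallery_emb[i]),
                        reverse=True)
        if any(gallery_labels[i] == label for i in ranked[:k]):
            hits += 1
    return hits / len(query_emb)


gallery = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
gallery_labels = ["a", "b", "a"]
queries = [[1.0, 0.05], [0.1, 1.0]]
query_labels = ["a", "a"]
print(recall_at_k(queries, query_labels, gallery, gallery_labels, 1))  # 0.5
print(recall_at_k(queries, query_labels, gallery, gallery_labels, 2))  # 1.0
```

Because a larger K can only add neighbors, R@K is non-decreasing in K, which is why R@5 and R@10 are reported alongside R@1.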

In recent years, we have seen significant progress in advanced image upscaling techniques, sometimes called super-resolution, ML-based, or AI-based upscaling. Such algorithms are now available not only in the form of specialized software but also in drivers and SDKs supplied with modern graphics cards; the upscaling functions in the NVIDIA Maxine SDK are one recent example. However, to take advantage of this functionality in video streaming applications, one needs to (a) quantify the impact of super-resolution techniques on perceived visual quality, (b) implement video rendering that incorporates super-resolution upscaling, and (c) implement new bitrate and resolution adaptation algorithms in streaming players, enabling them to deliver better quality of experience (QoE), better efficiency (e.g., reduced bandwidth usage), or both. Towards this end, we propose several techniques that may be helpful to the implementation community. First, we offer a model quantifying the impact of super-resolution upscaling on perceived quality. Our model is based on the Westerink-Roufs model, which connects the true resolution of images and videos to perceived quality, extended with several additional parameters that allow it to be tuned to specific implementations of super-resolution techniques. We verify this model using several recent datasets that include MOS scores measured for several conventional upscaling and super-resolution algorithms. Then, we propose an improved adaptation logic for video streaming players that considers video bitrates, encoded video resolutions, player size, and the upscaling method. This logic relies on our modified Westerink-Roufs model to predict perceived quality and suggests the renditions that would deliver the best quality for given display and upscaling-method characteristics.
Finally, we study the impact of the proposed techniques and show that they can deliver practically appreciable QoE improvements and bandwidth savings.
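The selection step of such an adaptation logic can be sketched as "among the renditions that fit the available bandwidth, pick the one with the highest predicted quality, preferring the cheaper one on ties". The sketch below assumes that structure. `toy_quality` is a deliberately simple, hypothetical placeholder, not the modified Westerink-Roufs model: it treats the upscaler as multiplying usable resolution by an assumed `upscale_gain`, capped at the player height.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class Rendition:
    bitrate_kbps: int  # encoded bitrate of this rung of the ladder
    height: int        # encoded vertical resolution in pixels


def toy_quality(r: Rendition, display_height: int, upscale_gain: float) -> float:
    """HYPOTHETICAL placeholder quality in [0, 1]; not the paper's model.

    The upscaler is assumed to raise usable resolution by `upscale_gain`,
    and nothing beyond the player's own height is visible.
    """
    effective = min(r.height * upscale_gain, display_height)
    return effective / display_height


def choose_rendition(renditions: Sequence[Rendition], bandwidth_kbps: float,
                     predict_quality: Callable[[Rendition], float]) -> Rendition:
    """Highest predicted quality within bandwidth; ties go to the lower bitrate."""
    feasible = [r for r in renditions if r.bitrate_kbps <= bandwidth_kbps]
    if not feasible:
        # Nothing fits the bandwidth: fall back to the cheapest rendition.
        return min(renditions, key=lambda r: r.bitrate_kbps)
    return max(feasible, key=lambda r: (predict_quality(r), -r.bitrate_kbps))


ladder = [Rendition(2000, 540), Rendition(3500, 720), Rendition(6000, 1080)]
print(choose_rendition(ladder, 6000, lambda r: toy_quality(r, 720, 1.0)).height)  # 720
print(choose_rendition(ladder, 6000, lambda r: toy_quality(r, 720, 1.5)).height)  # 540
```

With a 720-line player, the 1080p rung adds nothing under either setting. Under the assumed gain of 1.5, the 540p rung is predicted to look as good as 720p, so the tie-break picks it and saves bandwidth, which is the kind of saving the abstract describes. A real player would plug its calibrated quality model in place of `toy_quality`.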

Virtual backgrounds have become an increasingly important feature of online video conferencing due to the popularity of remote work in recent years. To enable a virtual background, a segmentation mask of the participant must be extracted from the real-time video input. Most previous works have focused on image-based methods for portrait segmentation. However, portrait video segmentation poses additional challenges due to complicated backgrounds, body motion, and the need for inter-frame consistency. In this paper, we use temporal guidance to improve video segmentation and propose several methods to address these challenges, including a prior mask, optical flow, and visual memory. We incorporate these temporally guided methods into an existing portrait segmentation model, PortraitNet. Experimental results show that our methods achieve improved segmentation performance on portrait videos with minimal latency.
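One common way to combine a prior mask with optical flow, assumed here rather than taken from the paper, is to warp the previous frame's mask into the current frame and feed it to the segmentation network as an extra input channel. The pure-Python sketch below uses nearest-neighbor backward warping; `flow[y][x] = (dx, dy)` is a hypothetical backward flow pointing from each current-frame pixel to its location in the previous frame, and both function names are illustrative.

```python
from typing import List, Sequence, Tuple

Mask = List[List[float]]
Flow = Sequence[Sequence[Tuple[float, float]]]


def warp_prior_mask(prev_mask: Mask, flow: Flow) -> Mask:
    """Backward-warp the previous frame's mask into the current frame (nearest neighbor)."""
    h, w = len(prev_mask), len(prev_mask[0])
    warped = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = flow[y][x]
            # Source location in the previous frame, rounded and clamped to the image.
            sx = min(max(int(round(x + dx)), 0), w - 1)
            sy = min(max(int(round(y + dy)), 0), h - 1)
            warped[y][x] = prev_mask[sy][sx]
    return warped


def add_prior_channel(rgb: Sequence[Sequence[Sequence[float]]], prior: Mask) -> List[List[List[float]]]:
    """Append the prior mask as a fourth channel to an H x W x 3 image."""
    return [[list(px) + [prior[y][x]] for x, px in enumerate(row)]
            for y, row in enumerate(rgb)]


# A one-row mask whose foreground pixel moved one step to the right.
print(warp_prior_mask([[1.0, 0.0, 0.0]], [[(-1.0, 0.0)] * 3]))  # [[1.0, 1.0, 0.0]]
```

Warping keeps the prior aligned with a moving subject, so the network receives a mask that is already close to the current frame; the duplicated left-edge value is an artifact of clamping at the image border.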

State-of-the-art smartphones have motion correction functions, such as electronic image stabilization, that let them record video without shaking. Because each manufacturer corrects motion in its own way, performance differs between devices, and it is difficult to distinguish clearly how well each one performs. This paper defines the Effective Angle of View and Motion for evaluating video motion correction performance. For motion, we characterize it by three parameters: motion volume, motion standard deviation, and motion frequency. The motion correction performance of an electronic device can be scored for each of these parameters. In this way, motion correction performance can be objectively modelled and evaluated.
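The abstract does not state how the three motion parameters are computed. As a sketch under stated assumptions, suppose the residual shake in a stabilized video has been measured as a per-frame displacement trajectory (for example, global frame shift in pixels). Volume, standard deviation, and frequency could then be summarized as shown here. The definitions (peak-to-peak volume, population standard deviation, dominant DFT frequency) and the function name are hypothetical.

```python
import cmath
import math
from statistics import pstdev
from typing import Dict, Sequence


def motion_parameters(displacement: Sequence[float], fps: float) -> Dict[str, float]:
    """Summarize a per-frame displacement trajectory sampled at `fps`.

    HYPOTHETICAL definitions: volume = peak-to-peak displacement,
    std = population standard deviation, frequency = dominant DFT frequency.
    """
    n = len(displacement)
    mean = sum(displacement) / n
    centered = [d - mean for d in displacement]
    best_k, best_mag = 0, -1.0
    # Naive DFT over the positive-frequency bins, skipping the DC bin (k = 0).
    for k in range(1, n // 2 + 1):
        coeff = sum(c * cmath.exp(-2j * math.pi * k * t / n)
                    for t, c in enumerate(centered))
        if abs(coeff) > best_mag:
            best_k, best_mag = k, abs(coeff)
    return {
        "volume": max(displacement) - min(displacement),
        "std": pstdev(displacement),
        "frequency_hz": best_k * fps / n,  # bin index -> Hz
    }


# One second of a 3 Hz, 2-pixel-amplitude shake sampled at 30 fps.
shake = [2.0 * math.sin(2 * math.pi * 3 * t / 30) for t in range(30)]
print(motion_parameters(shake, 30.0)["frequency_hz"])  # 3.0
```

Scoring a device would then compare these numbers before and after stabilization. A smaller residual volume and standard deviation indicate better correction, and the frequency shows which shake band the stabilizer leaves behind.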

There is a surging need across the world for protection against gun violence. We have identified three main areas that are challenging in research aimed at curbing gun violence: temporal localization of gunshots, gun type prediction, and gun source (shooter) detection. Our task is gun source detection and muzzle head detection, where the muzzle head is the round opening at the firing end of the gun. We aim to visually locate the muzzle head of the gun in the video and identify who fired the shot. In our formulation, we turn the problem of muzzle head detection into two sub-problems: human object detection and gun smoke detection. Our assumption is that the muzzle head typically lies between the gun smoke caused by the shot and the shooter. We obtain promising results both in bounding the shooter and in detecting the gun smoke. In our experiments, we successfully detect the muzzle head by detecting the gun smoke and the shooter.

The ease of counterfeiting both the origin and the content of a video necessitates a reliable method to identify the source of a media file, a crucial part of forensic investigation. One of the most widely accepted solutions for identifying the source of a digital image involves comparing its photo-response non-uniformity (PRNU) fingerprint. For videos, however, prevalent methods are not as efficient as image source identification techniques, because the fingerprint is affected by the post-processing steps used to generate the video. In this paper, we answer affirmatively the question of whether images can be used to generate the reference fingerprint pattern for identifying a video's source. We introduce an approach called “Hybrid G-PRNU” that provides a scale-invariant solution for video source identification by matching a video's fingerprint with one extracted from images. Another goal of our work is to find the parameters that yield the best identification rate. Experiments over several test cases demonstrate a higher identification rate when asymmetrically comparing video PRNU with the reference pattern generated from images. Furthermore, the fingerprint extractor used in this paper is being made freely available to scholars and researchers in the field.
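The PRNU comparison this work builds on can be sketched with the widely used maximum-likelihood fingerprint estimate K ≈ Σ W_i I_i / Σ I_i², where W_i = I_i − F(I_i) is the noise residual of image I_i and F is a denoiser. A query is then scored by the normalized cross-correlation between its residual and I·K. This is the generic image-based PRNU pipeline, not the paper's Hybrid G-PRNU. The 3×3 mean filter standing in for the usual wavelet denoiser, and all function names, are assumptions.

```python
import math
from typing import List, Sequence

Image = List[List[float]]


def box_denoise(img: Image) -> Image:
    """3x3 mean filter: a simple stand-in (assumption) for the usual wavelet denoiser F."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[j][i]
                    for j in range(max(0, y - 1), min(h, y + 2))
                    for i in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = sum(vals) / len(vals)
    return out


def residual(img: Image) -> Image:
    """Noise residual W = I - F(I)."""
    den = box_denoise(img)
    return [[p - d for p, d in zip(row, drow)] for row, drow in zip(img, den)]


def estimate_fingerprint(images: Sequence[Image]) -> Image:
    """Per-pixel maximum-likelihood PRNU estimate K = sum(W_i * I_i) / sum(I_i ** 2)."""
    h, w = len(images[0]), len(images[0][0])
    num = [[0.0] * w for _ in range(h)]
    den = [[0.0] * w for _ in range(h)]
    for img in images:
        res = residual(img)
        for y in range(h):
            for x in range(w):
                num[y][x] += res[y][x] * img[y][x]
                den[y][x] += img[y][x] ** 2
    return [[n / d if d else 0.0 for n, d in zip(nrow, drow)]
            for nrow, drow in zip(num, den)]


def ncc(a: Image, b: Image) -> float:
    """Normalized cross-correlation of two equally sized images."""
    fa = [v for row in a for v in row]
    fb = [v for row in b for v in row]
    ma, mb = sum(fa) / len(fa), sum(fb) / len(fb)
    da = [v - ma for v in fa]
    db = [v - mb for v in fb]
    norm = math.sqrt(sum(v * v for v in da) * sum(v * v for v in db))
    return sum(x * y for x, y in zip(da, db)) / norm if norm else 0.0


def match_score(query: Image, fingerprint: Image) -> float:
    """Correlate the query's noise residual with the expected PRNU term I * K."""
    expected = [[p * k for p, k in zip(row, krow)]
                for row, krow in zip(query, fingerprint)]
    return ncc(residual(query), expected)
```

A query from the camera that produced the reference images should score close to 1, while a query from another camera should score near 0. Video frames are typically compressed and often rescaled, which weakens this correlation. That is the gap the abstract's scale-invariant, image-referenced approach targets.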

The most common sensor arrangement for 360° panoramic video cameras is a radial design in which a number of sensors look outward like spokes on a wheel. The cameras are typically spaced at approximately the human interocular distance, with high overlap. We present a novel method that uses small-form-factor camera units arranged in stereo pairs and interleaved to achieve a fully panoramic view with fully parallel sensor pairs. This arrangement requires less keystone correction to obtain depth information, and the discontinuity between images that must be stitched together is smaller than in the radial design. The primary benefit of this arrangement is its small form factor combined with a large number of sensors, which enables high resolving power. We highlight the mechanical considerations, system performance, and software capabilities of two manufactured and tested imaging units: one based on Raspberry Pi cameras, and a second, 16-camera system using 8 pairs of 13-megapixel AR1335 cell phone sensors. In addition, several variations of the conceptual design were simulated with synthetic projections to compare the stitching difficulty of the rendered scenes.
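Because each pair in the interleaved design is parallel, depth follows the standard rectified-stereo relation Z = f·B/d, with focal length f in pixels, baseline B, and disparity d in pixels. This requires less keystone correction than pairing neighbors in a radial design. The sketch below assumes that the 16-camera unit's 8 pairs point in evenly spaced directions, which the abstract does not state, and uses illustrative values for f and B (6.5 cm, roughly a human interocular distance). The function names are hypothetical.

```python
from typing import List


def pair_headings(num_pairs: int) -> List[float]:
    """Viewing direction in degrees of each stereo pair, assumed evenly spaced around 360."""
    return [i * 360.0 / num_pairs for i in range(num_pairs)]


def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in meters for a rectified, parallel stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the pair")
    return focal_px * baseline_m / disparity_px


print(pair_headings(8))  # [0.0, 45.0, 90.0, 135.0, 180.0, 225.0, 270.0, 315.0]
print(round(depth_from_disparity(1000.0, 0.065, 13.0), 6))  # 5.0
```

Under the even-spacing assumption, each of the 8 pairs must cover at least 45° horizontally, plus whatever overlap the stitcher needs. This is the kind of constraint the synthetic-projection simulations of design variations would explore.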