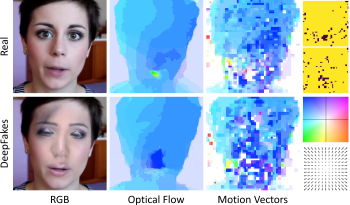

Video DeepFakes are fake media created with Deep Learning (DL) that manipulate a person’s expression or identity. Most current DeepFake detection methods analyze each frame independently, ignoring inconsistencies and unnatural movements between frames. Some newer methods employ optical flow models to capture this temporal aspect, but they are computationally expensive. In contrast, we propose using the related but often ignored Motion Vectors (MVs) and Information Masks (IMs) from the H.264 video codec to detect temporal inconsistencies in DeepFakes. Our experiments show that this approach is effective and adds minimal computational cost compared with per-frame RGB-only methods. This could lead to new, real-time, temporally-aware DeepFake detection methods for video calls and streaming.
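The abstract does not say how MVs and IMs are turned into detector input. As a rough illustration only, the sketch below assumes per-block motion vectors have already been exported by the decoder (FFmpeg can export them as `AVMotionVector` side data; the hypothetical `BlockMV` fields loosely mirror that struct, with `dst_x`/`dst_y` taken as the block centre). It rasterizes them into two dense per-pixel motion maps plus a binary mask. Treating the mask as "pixel covered by a block that carries a motion vector" is our assumption about what an Information Mask encodes, not the paper's definition, and the names `BlockMV` and `rasterize_mvs` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

Grid = List[List[float]]


@dataclass
class BlockMV:
    """Hypothetical per-block motion vector, loosely modeled on FFmpeg's AVMotionVector."""
    w: int                 # block width in pixels (e.g. 16 or 8 in H.264)
    h: int                 # block height in pixels
    dst_x: int             # assumed: block centre x in the current frame
    dst_y: int             # assumed: block centre y in the current frame
    motion_x: int          # displacement in units of 1 / motion_scale pixels
    motion_y: int
    motion_scale: int = 1  # e.g. 4 for quarter-pel H.264 vectors


def rasterize_mvs(mvs: List[BlockMV], width: int, height: int) -> Tuple[Grid, Grid, Grid]:
    """Spread block-level motion vectors over the pixels each block covers.

    Returns (flow_x, flow_y, mask) as height x width nested lists. Pixels not
    covered by any exported vector (e.g. intra-coded blocks) keep zero motion
    and a mask value of 0; this mask semantics is an assumption.
    """
    flow_x = [[0.0] * width for _ in range(height)]
    flow_y = [[0.0] * width for _ in range(height)]
    mask = [[0.0] * width for _ in range(height)]
    for mv in mvs:
        # Convert the block centre into its top-left corner.
        x0 = mv.dst_x - mv.w // 2
        y0 = mv.dst_y - mv.h // 2
        # Sub-pixel vectors are stored as integers scaled by motion_scale.
        dx = mv.motion_x / mv.motion_scale
        dy = mv.motion_y / mv.motion_scale
        # Clip the block to the frame, since vectors may lie near the border.
        for y in range(max(0, y0), min(height, y0 + mv.h)):
            for x in range(max(0, x0), min(width, x0 + mv.w)):
                flow_x[y][x] = dx
                flow_y[y][x] = dy
                mask[y][x] = 1.0
    return flow_x, flow_y, mask


if __name__ == "__main__":
    # One 16x16 macroblock in the top-left corner of a 32x16 frame,
    # moving by (1.0, -0.5) pixels in quarter-pel units.
    demo = [BlockMV(w=16, h=16, dst_x=8, dst_y=8, motion_x=4, motion_y=-2, motion_scale=4)]
    fx, fy, m = rasterize_mvs(demo, width=32, height=16)
    print(sum(map(sum, m)))  # 256.0 pixels covered by the single block
```

Because the vectors come for free from decoding, producing these maps costs only a pass over the block list. This is consistent with the abstract's point that MVs are far cheaper than running an optical flow model. How the maps would then be combined with RGB frames in a detector is left open here.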

Peter Grönquist, Yufan Ren, Qingyi He, Alessio Verardo, Sabine Süsstrunk, "Efficient Temporally-aware DeepFake Detection using H.264 Motion Vectors," in Electronic Imaging, 2024, pp. 335-1 to 335-9, https://doi.org/10.2352/EI.2024.36.4.MWSF-335
