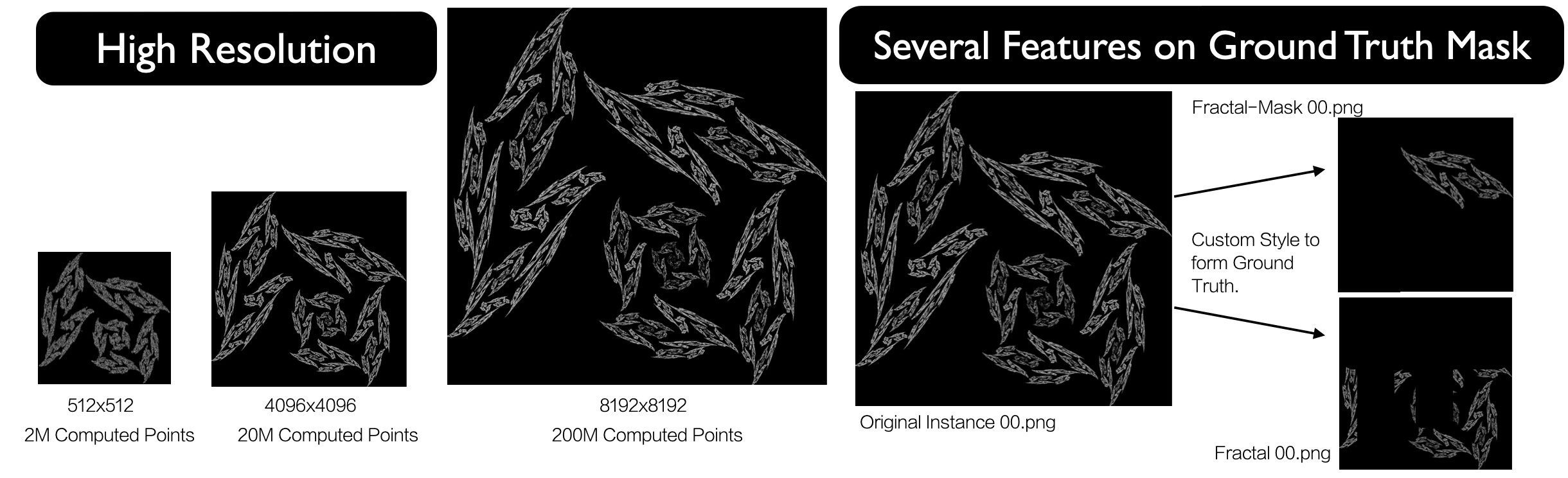

In medical image segmentation, acquiring high-quality labeled data remains a significant challenge because expert annotation is costly and time-consuming. Variability in imaging conditions, patient diversity, and the use of different imaging devices further complicate model training. The high dimensionality of medical images also imposes considerable computational demands, while small lesions or abnormalities create class imbalance that hinders segmentation accuracy. Pre-training on synthetic datasets may enable Vision Transformers (ViTs) to develop robust feature representations even when high-quality labeled data for fine-tuning is limited. In this work, we propose integrating Formula-Driven Supervised Learning (FDSL) synthetic datasets with medical imaging to enhance pre-training for segmentation tasks. We implemented a custom fractal dataset, Style Fractals, capable of generating high-resolution images, including images of 8k × 8k pixels. Using the SAM model for segmentation together with robust augmentation techniques, synthetic pre-training followed by fine-tuning on the PAIP dataset, a high-resolution, real-world pathology dataset focused on liver cancer, improved performance from 62.30% to 63.68%. Additionally, we present results using another synthetic dataset, SegRCDB, for comparative analysis.
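
To make the idea of a formula-driven fractal image concrete, the following is a minimal sketch of one common way to render a fractal from a formula: an iterated function system (IFS) sampled with the chaos game. It is an illustration only and is not the Style Fractals generator, whose construction is not described here. The function names, the number of maps, the contraction bound of 0.8, and the 64 × 64 output size are all assumptions chosen for the example.

```python
import math
import random


def random_contractive_map(rng, max_norm=0.8):
    """Sample an affine map (a, b, c, d, e, f) whose linear part is contractive.

    The Frobenius norm bounds the spectral norm from above, so capping it
    below 1 guarantees the map shrinks distances (assumed bound: 0.8).
    """
    a, b, c, d = (rng.uniform(-1.0, 1.0) for _ in range(4))
    norm = math.sqrt(a * a + b * b + c * c + d * d)
    if norm > max_norm:
        scale = max_norm / norm
        a, b, c, d = a * scale, b * scale, c * scale, d * scale
    e, f = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)
    return a, b, c, d, e, f


def render_fractal(num_maps=4, num_points=20000, size=64, seed=0):
    """Render a binary size x size image of a random IFS attractor."""
    rng = random.Random(seed)
    maps = [random_contractive_map(rng) for _ in range(num_maps)]

    # Chaos game: repeatedly apply a randomly chosen map to the current point.
    burn_in = 20  # discard early points that are not yet near the attractor
    x, y = 0.0, 0.0
    points = []
    for i in range(num_points + burn_in):
        a, b, c, d, e, f = rng.choice(maps)
        x, y = a * x + b * y + e, c * x + d * y + f
        if i >= burn_in:
            points.append((x, y))

    # Rasterize: rescale the bounding box of the points onto the pixel grid.
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    min_x, min_y = min(xs), min(ys)
    span_x = (max(xs) - min_x) or 1.0
    span_y = (max(ys) - min_y) or 1.0
    image = [[0] * size for _ in range(size)]
    for px, py in points:
        col = min(size - 1, int((px - min_x) / span_x * size))
        row = min(size - 1, int((py - min_y) / span_y * size))
        image[row][col] = 1
    return image
```

Because the image is fully determined by the seed and the sampled map coefficients, the generating parameters can double as a free label, which is the property that makes this kind of data attractive for supervised pre-training without manual annotation.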

This paper presents a method for synthesizing 2D and 3D sensor data for various machine vision tasks. Depending on the task, different processing steps can be applied to a 3D model of an object. For object detection, segmentation, and pose estimation, random object arrangements are generated automatically. In addition, objects can be virtually deformed to create realistic images of non-rigid objects. For automatic visual inspection, synthetic defects are introduced into the objects. Thus, sensor-realistic datasets with typical object defects can be created for quality control applications, even in the absence of defective parts. Realistic images are simulated using physically based rendering techniques, and the 3D models take material properties and different lighting situations into account. The resulting tuples of 2D images and their ground truth annotations can be used to train a machine learning model, which is subsequently applied to real data. To minimize the reality gap, a random parameter set is selected for each image, resulting in a highly varied set of images. For the use cases of damage detection and object detection, it is shown that a machine learning model trained only on synthetic data can also achieve very good results on real data.
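
The per-image random parameter selection described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the method's actual implementation: the parameter names (`light_intensity`, `camera_distance_m`, and so on), their ranges, and the 50% defect probability are hypothetical, and the rendering step itself is left out.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneParams:
    """Hypothetical parameter set drawn once per synthetic image."""
    light_intensity: float    # relative lamp power (assumed range)
    light_azimuth_deg: float  # direction of the light source
    camera_distance_m: float  # distance from camera to object
    roughness: float          # material roughness in [0, 1]
    num_objects: int          # objects in the random arrangement
    defect_present: bool      # whether a synthetic defect is applied


def sample_scene_params(rng: random.Random) -> SceneParams:
    """Draw one independent parameter set; all ranges are illustrative."""
    return SceneParams(
        light_intensity=rng.uniform(0.5, 2.0),
        light_azimuth_deg=rng.uniform(0.0, 360.0),
        camera_distance_m=rng.uniform(0.3, 1.5),
        roughness=rng.uniform(0.0, 1.0),
        num_objects=rng.randint(1, 10),
        defect_present=rng.random() < 0.5,
    )


def sample_dataset_params(num_images: int, seed: int = 0) -> list[SceneParams]:
    """Return one parameter set per image, reproducible for a given seed."""
    rng = random.Random(seed)
    return [sample_scene_params(rng) for _ in range(num_images)]
```

Each `SceneParams` instance would be handed to a renderer together with the 3D model, and storing it alongside the rendered image keeps the ground truth (for example, whether a defect is present) available without manual labeling.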