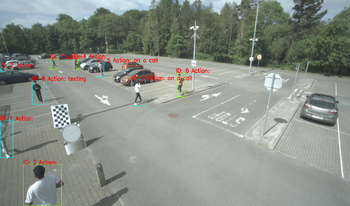

In this paper, we present a database consisting of annotations of videos showing a number of people performing several actions in a parking lot. The chosen actions represent situations in which the pedestrian could be distracted and not fully aware of her surroundings: “looking behind”, “on a call”, and “texting”. A fourth label, “no action”, is used when the person performs none of these actions. In addition to the action, the speed of the person is labeled with one of three possible values: “standing”, “walking”, or “running”. Bounding boxes of the people present in each frame are also provided, along with a unique identifier for each person. The main goal is to provide the research community with examples of actions that can be of interest for surveillance or safe autonomous driving. The speed label gives researchers richer information: for example, “running” can be treated as a more dangerous behavior than “walking” when the person is “on a call” or “looking behind”.
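As an illustration of the labels described above, a single per-person, per-frame annotation could be represented as follows. This is a minimal sketch, not the database's actual file format: the class and field names, the `frame` index, and the `(x, y, width, height)` bounding-box layout are assumptions made for the example. Only the action and speed label values come from the text.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Tuple


class Action(Enum):
    # Label values taken from the text above.
    NO_ACTION = "no action"
    LOOKING_BEHIND = "looking behind"
    ON_A_CALL = "on a call"
    TEXTING = "texting"


class Speed(Enum):
    STANDING = "standing"
    WALKING = "walking"
    RUNNING = "running"


@dataclass(frozen=True)
class PersonAnnotation:
    frame: int                       # frame index in the video (assumed field)
    person_id: int                   # unique identifier of the person
    bbox: Tuple[int, int, int, int]  # (x, y, width, height) in pixels (assumed layout)
    action: Action
    speed: Speed

    def is_distracted(self) -> bool:
        """True when any of the three distraction actions is labeled."""
        return self.action is not Action.NO_ACTION


example = PersonAnnotation(
    frame=0,
    person_id=7,
    bbox=(120, 80, 40, 110),
    action=Action.TEXTING,
    speed=Speed.WALKING,
)
```

Using enumerations rather than free strings makes a misspelled label fail at load time instead of silently creating a new class.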

The demand for object tracking (OT) applications has been increasing for the past few decades in many areas of interest, including security, surveillance, intelligence gathering, and reconnaissance. Lately, newly defined requirements for unmanned vehicles have heightened the interest in OT. Advancements in machine learning, data analytics, and AI/deep learning have improved the recognition and tracking of objects of interest; however, continuous tracking remains a problem of interest in many research projects. [1] In our past research, we proposed a system that continuously tracks an object and predicts its trajectory based on its previous pathway, even when the object is partially or fully concealed for a period of time. The second phase of this system proposed developing common knowledge among a mesh of fixed cameras, akin to a real-time panorama. This paper discusses the method used to align the cameras' views to a common frame of reference so that the object's location is known to all participants in the network.
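One common way to bring several fixed cameras into a shared frame of reference is to give each camera a planar homography that maps its pixel coordinates onto a common plane. The abstract does not state which method the paper uses, so the sketch below is only an illustration under that assumption. The function name and both matrices are hypothetical.

```python
from typing import Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]


def to_common_frame(H: Matrix3, pixel: Tuple[float, float]) -> Tuple[float, float]:
    """Map a pixel (x, y) from one camera into the common frame using a 3x3 homography H."""
    x, y = pixel
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if w == 0:
        raise ValueError("point maps to infinity under this homography")
    # Divide by the homogeneous coordinate to get back to 2-D.
    return (u / w, v / w)


# Hypothetical calibration: camera A already matches the common frame,
# camera B sees the same scene shifted by 100 pixels along x.
H_CAM_A = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
H_CAM_B = [[1.0, 0.0, -100.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

seen_by_a = to_common_frame(H_CAM_A, (250.0, 40.0))
seen_by_b = to_common_frame(H_CAM_B, (350.0, 40.0))
```

With these example matrices, both detections map to the same common-frame point, which is what allows every camera in the mesh to agree on where the object is.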

We present a scheme for securely communicating data over close distances in public settings. Exploiting the ubiquity of cameras in modern-day devices and the high resolution of displays, our approach provides secure data communication over short distances by using specialized 2-D barcodes along with an adaptive protocol. Specifically, the barcodes carry public data compatible with their conventional design and, additionally, private data through specialized orientation modulation in the barcode modules. The private data is reliably decoded when the barcodes are captured at close distances but not from farther away, a property that we call “proximal privacy”. The adaptive protocol dynamically modifies the strength of the orientation modulation until it is just recoverable by the capture camera. We validate our approach via simulations and by using physical devices to display and capture the specialized barcodes.
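The adaptive step can be pictured as a feedback loop that searches for the weakest modulation the receiving camera can still decode. The abstract does not specify the search direction, step size, or feedback channel, so everything in this sketch is an assumption: `adapt_strength`, the discrete strength levels, and the simulated receiver are hypothetical stand-ins, not the paper's protocol.

```python
from typing import Callable, Optional


def adapt_strength(can_decode: Callable[[float], bool], levels: int = 20) -> Optional[float]:
    """Return the weakest modulation strength in (0, 1] that the receiver decodes.

    Strength is raised in equal steps from weak to strong, so the first
    success is the setting that is "just recoverable" at this step size.
    """
    for k in range(1, levels + 1):
        strength = k / levels
        if can_decode(strength):
            return strength
    return None  # the receiver cannot decode even at full strength


def simulated_receiver(threshold: float) -> Callable[[float], bool]:
    """Stand-in for a capture camera: decoding succeeds at or above a threshold."""
    return lambda strength: strength >= threshold


chosen = adapt_strength(simulated_receiver(0.33))
```

In this simulation, a nearby camera would correspond to a low threshold and a distant one to a high threshold, so stopping at the first successful level leaves as little margin as possible for a farther observer.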

The demand for object tracking (OT) applications has been increasing for the past few decades in many areas of interest: security, surveillance, intelligence gathering, and reconnaissance. Lately, newly defined requirements for unmanned vehicles have heightened the interest in OT. Advancements in machine learning, data analytics, and deep learning have facilitated the recognition and tracking of objects of interest; however, continuous tracking remains a problem of interest to many research projects. This paper presents a system that continuously tracks an object and predicts its trajectory based on its previous pathway, even when the object is partially or fully concealed for a period of time. The system is composed of six main subsystems: Image Processing, Detection Algorithm, Image Subtractor, Image Tracking, Tracking Predictor, and Feedback Analyzer. Combined, these subsystems allow for reasonable object continuity in the face of object concealment.
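To make the idea of predicting a trajectory from the previous pathway concrete, the following sketch extrapolates an object's position while it is concealed. It assumes a constant-velocity model based on the last two observations. The abstract does not describe the Tracking Predictor's actual model, so this is a simple stand-in, and `predict_positions` is a hypothetical name.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def predict_positions(track: List[Point], steps: int = 1) -> List[Point]:
    """Extrapolate future positions from the last two observed positions.

    Assumes the object keeps the velocity it had between its last two
    observations (constant-velocity model).
    """
    if len(track) < 2:
        raise ValueError("need at least two observations to estimate velocity")
    (x0, y0), (x1, y1) = track[-2], track[-1]
    vx, vy = x1 - x0, y1 - y0  # displacement per frame
    return [(x1 + vx * k, y1 + vy * k) for k in range(1, steps + 1)]


# Positions observed before the object disappears behind an obstacle.
observed = [(0.0, 0.0), (2.0, 1.0), (4.0, 2.0)]
predicted = predict_positions(observed, steps=3)
```

When the object reappears, its detected position can be compared with the prediction to decide whether it is the same object, which is one way a feedback stage could maintain continuity.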

Overweight vehicles are a common source of pavement and bridge damage. Mobile cranes in particular often exceed legal per-axle weight limits because they carry their lifting blocks and ballast on the vehicle instead of on a separate trailer. To prevent road deterioration, the detection of overweight cranes is desirable for law enforcement. As the source of crane weight is visible, we propose a camera-based detection system based on convolutional neural networks. We iteratively label our dataset to vastly reduce labeling effort, and we extensively investigate the impact of image resolution, network depth, and dataset size in order to choose optimal parameters during iterative labeling. We show that iterative labeling with intelligently chosen image resolutions and network depths can vastly improve (by up to 70×) the speed at which data can be labeled to train classification systems for practical surveillance applications. The experiments provide an estimate of the optimal amount of data required to train an effective classification system, which is valuable for classification problems in general. The proposed system achieves an AUC of 0.985 for distinguishing cranes from other vehicles, and AUCs of 0.92 and 0.77 for lifting block and ballast classification, respectively. The proposed classification system enables effective road monitoring for semi-automatic law enforcement and is attractive for rare-class extraction in general surveillance classification problems.
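For readers unfamiliar with the AUC metric reported above, the sketch below computes the area under the ROC curve as the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, with ties counted as half. The labels and scores are made-up illustration data, not results from the paper, and `roc_auc` is a hypothetical helper name.

```python
from typing import List


def roc_auc(labels: List[int], scores: List[float]) -> float:
    """Area under the ROC curve via pairwise comparison of positives and negatives."""
    positives = [s for label, s in zip(labels, scores) if label == 1]
    negatives = [s for label, s in zip(labels, scores) if label == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # positive correctly ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(positives) * len(negatives))


# Made-up example: 1 = crane, 0 = other vehicle.
labels = [1, 1, 0, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.3, 0.6, 0.2]
auc = roc_auc(labels, scores)
```

Because the metric depends only on how positives rank against negatives, it stays informative when the positive class is rare, which matches the rare-class setting described above.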