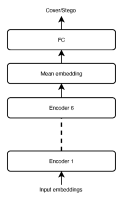

In batch steganography, the sender communicates a secret message by hiding it in a bag of cover objects. The adversary performs so-called pooled steganalysis: she inspects the entire bag to detect the presence of secrets. This is typically realized by using a detector trained to detect secrets within a single object, applying it to all objects in the bag, and feeding the detector outputs to a pooling function to obtain the final detection statistic. This paper deals with the problem of building the pooler while keeping in mind that the Warden will need to detect steganography in variable-size bags carrying variable payloads. We propose a flexible machine learning solution to this challenge in the form of a Transformer Encoder Pooler, which is easily trained to be agnostic to the bag size and payload and offers better detection accuracy than previously proposed poolers.
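The pooling step described above can be illustrated with a minimal sketch. It is not the Transformer Encoder Pooler proposed in the paper: it shows only two simple baseline poolers (average and maximum) applied to per-image soft outputs. The function name `pool_bag` and the example scores are hypothetical.

```python
def pool_bag(soft_outputs, pooler="mean"):
    """Combine per-image detector outputs into one bag-level statistic.

    soft_outputs: list of floats, one per image in the bag (any bag size).
    pooler: "mean" or "max"; both are simple baselines assumed here for
            illustration, not the learned pooler from the paper.
    """
    if not soft_outputs:
        raise ValueError("bag must contain at least one detector output")
    if pooler == "mean":
        return sum(soft_outputs) / len(soft_outputs)
    if pooler == "max":
        return max(soft_outputs)
    raise ValueError(f"unknown pooler: {pooler}")


# Hypothetical soft outputs of a single-image detector (higher = more suspicious).
cover_bag = [0.10, 0.05, 0.20, 0.15]
stego_bag = [0.10, 0.90, 0.20, 0.80, 0.15]  # bags may differ in size

print(pool_bag(cover_bag), pool_bag(stego_bag))
```

Both poolers accept bags of any size, but neither adapts to the payload spread across the bag, which is the gap a trained pooler is meant to close.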

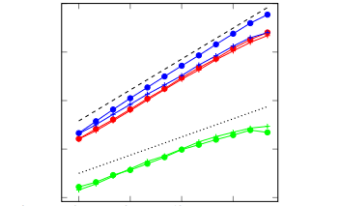

Assuming that Alice commits to an embedding method and the Warden to a detector, we study how much information Alice can communicate at a constant level of statistical detectability over potentially infinitely many uses of the stego channel. When Alice is allowed to allocate her payload across multiple cover objects, we find that certain payload allocation strategies that are informed by a steganography detector exhibit super-square root secure payload (scaling exponent 0.85) for at least tens of thousands of uses of the stego channel. We analyze our experiments with a source model of soft outputs of the detector across images and show how the model determines the scaling of the secure payload.
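A scaling exponent such as the one quoted above is commonly estimated as the slope of a straight line fitted in log-log coordinates. The sketch below assumes a power law of the form payload = c · n^α and uses synthetic data; the function name and the numbers are hypothetical and do not reproduce the paper's experiments.

```python
import math


def fit_scaling_exponent(num_uses, payloads):
    """Least-squares slope of log(payload) against log(number of channel uses)."""
    if len(num_uses) != len(payloads) or len(num_uses) < 2:
        raise ValueError("need at least two (uses, payload) pairs")
    xs = [math.log(n) for n in num_uses]
    ys = [math.log(p) for p in payloads]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxx == 0:
        raise ValueError("channel-use counts must not all be equal")
    return sxy / sxx


# Synthetic secure payloads following an assumed power law with exponent 0.85.
uses = [100, 1000, 10000]
payloads = [3.0 * n ** 0.85 for n in uses]

print(round(fit_scaling_exponent(uses, payloads), 3))
```

An exponent of 0.5 would correspond to square root scaling, so any fitted value above 0.5 indicates super-square root growth over the range of channel uses examined.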

Practical steganalysis inevitably involves the necessity to deal with a diverse cover source. In the JPEG domain, one key element of the diversification is the JPEG quality factor, or, more generally, the JPEG quantization table used for compression. This paper investigates experimentally the scalability of various steganalysis detectors w.r.t. JPEG quality. In particular, we report that CNN detectors as well as older feature-based detectors have the capacity to contain the complexity of multiple JPEG quality factors within a single model when the quality factors are properly grouped based on their quantization tables. Detectors trained on multiple JPEG qualities show no loss of detection accuracy when compared with dedicated detectors trained for a specific JPEG quality factor. We also demonstrate that CNNs (but not so much feature-based classifiers) trained on multiple qualities generalize better to unseen custom quantization tables than detectors trained for specific JPEG qualities. Generalizing to very different quantization tables, however, remains challenging. A semi-metric comparing quantization tables is introduced and used to interpret our results.

Image steganography can have legitimate uses, for example, augmenting an image with a watermark for copyright reasons, but can also be utilized for malicious purposes. We investigate the detection of malicious steganography using neural network-based classification when images are transmitted through a noisy channel. Noise makes detection harder because the classifier must not only detect perturbations in the image but also decide whether they are due to malicious steganographic modifications or to natural noise. Our results show that reliable detection is possible even for state-of-the-art steganographic algorithms that insert stego bits without affecting an image’s visual quality. The detection accuracy is high (above 85%) if the payload, or the amount of steganographic content in an image, exceeds a certain threshold. At the same time, noise critically affects the steganographic information being transmitted, both through desynchronization (destruction of the information about which bits of the image contain steganographic content) and by flipping these bits themselves. This will force the adversary to use a redundant encoding with a substantial number of error-correction bits for reliable transmission, making detection feasible even for small payloads.

The goal of this article is the construction of steganalyzers capable of detecting a variety of embedding algorithms and possibly identifying the steganographic method. Since deep learning today can achieve markedly better performance than other machine learning tools, our detectors are deep residual convolutional neural networks. We explore binary classifiers trained as cover versus all stego, multi-class detectors, and bucket detectors in a feature space obtained as a concatenation of features extracted by networks trained on individual stego algorithms. The accuracy of these detectors in identifying steganography is compared with that of dedicated detectors trained for a specific embedding algorithm. While the loss of detection accuracy grows only slightly with the number of steganographic algorithms as long as the embedding schemes are known, generalizing to previously unseen steganography remains challenging.

The number and availability of steganographic embedding algorithms continue to grow. Many traditional blind steganalysis frameworks require training examples from every embedding algorithm, but collecting, storing and processing representative examples of each algorithm can quickly become untenable. Our motivation for this paper is to create a straightforward, non-data-intensive framework for blind steganalysis that only requires examples of cover images and a single embedding algorithm for training. Our blind steganalysis framework addresses the case of algorithm mismatch, where a classifier is trained on one algorithm and tested on another, with four spatial embedding algorithms: LSB matching, MiPOD, S-UNIWARD and WOW. We use RAW image data from the BOSSbase database and data collected from six iPhone devices. Ensemble Classifiers with Spatial Rich Model features are trained on a single embedding algorithm and tested on each of the four algorithms. Classifiers trained on MiPOD, S-UNIWARD and WOW data achieve decent error rates when tested on all four algorithms. Most notably, an Ensemble Classifier with an adjusted decision threshold trained on LSB matching data achieves decent detection results on MiPOD, S-UNIWARD and WOW data.

In this paper, we present a new reference dataset simulating digital evidence for image (photographic) steganography. Steganography detection is a digital image forensic topic that is relatively unknown in practical forensics, although stego app use in the wild is on the rise. This paper introduces the first database consisting of mobile phone photographs and stego images produced from mobile stego apps, including a rich set of side information, offering simulated digital evidence. StegoAppDB, a steganography apps forensics image database, contains over 810,000 innocent and stego images using a minimum of 10 different phone models from 24 distinct devices, with detailed provenance data comprising a wide range of ISO and exposure settings, EXIF data, message information, embedding rates, etc. We develop a camera app, Cameraw, specifically for data acquisition, with multiple images per scene, saving simultaneously in both DNG and high-quality JPEG formats. Stego images are created from these original images using selected mobile stego apps through a careful process of reverse engineering. StegoAppDB contains cover-stego image pairs, including for apps that change the dimensions of the stego image. We retain the original devices and continue to enlarge the database, and encourage the image forensics community to use StegoAppDB. While the database was designed for steganography, we discuss uses of this publicly available resource for other digital image forensic topics.

Convolutional neural networks offer much more accurate detection of steganography than the outgoing paradigm of classifiers trained on rich representations of images. While training a CNN is scalable with respect to the size of the training set, one cannot directly train on images that are too large due to the memory limitations of current GPUs. Most leading network architectures for steganalysis today require the input image to be a small tile with 256 × 256 or 512 × 512 pixels. Because detecting the presence of steganographic embedding changes really means detecting a very weak noise signal added to the cover image, resizing an image before presenting it to a CNN would be highly suboptimal. Applying the tile detector to disjoint segments of a larger image and fusing the results raises the new problem of how to properly fuse the outputs. In this paper, we propose a different solution to this problem based on modifying an existing leading network architecture for steganalysis in the spatial domain, the YeNet, to output statistical moments of feature maps to the fully-connected classifier part of the network. In experiments in which we adjust the payload with image size according to the square root law for constant statistical detectability, we demonstrate that the proposed architecture can be trained to steganalyze images of various sizes with no loss, or only a small loss, with respect to detectors trained for a fixed image size.
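The idea of feeding statistical moments of feature maps to the classifier can be sketched as follows. The abstract does not say which moments are used, so the choice here (mean, variance, minimum, maximum per feature map) is an assumption, and `moment_pool` is a hypothetical name. The point of the sketch is only that the output length depends on the number of feature maps, not on their spatial size.

```python
def moment_pool(feature_maps):
    """Reduce each 2-D feature map to a few summary statistics.

    feature_maps: list of 2-D lists (rows of floats); maps may have any
                  height and width.
    Returns a flat list with 4 values per map: mean, variance, min, max
    (an assumed set of statistics chosen for illustration).
    """
    pooled = []
    for fmap in feature_maps:
        values = [v for row in fmap for v in row]
        if not values:
            raise ValueError("feature map must not be empty")
        mean = sum(values) / len(values)
        variance = sum((v - mean) ** 2 for v in values) / len(values)
        pooled.extend([mean, variance, min(values), max(values)])
    return pooled


# Two hypothetical "images" of different sizes, each with two feature maps.
small = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 8.0]]]
large = [[[1.0, 2.0, 3.0]] * 5, [[0.5] * 7] * 4]

print(len(moment_pool(small)), len(moment_pool(large)))
```

Because both inputs yield a vector of the same length, the same fully-connected classifier can follow the pooling step regardless of image size.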

In natural steganography, the secret message is hidden by adding to the cover image a noise signal that mimics the heteroscedastic noise introduced naturally during acquisition. The method requires the cover image to be available in its RAW form (the sensor capture). To bring this idea closer to a practical embedding method, in this paper we embed the message in quantized DCT coefficients of a JPEG file by adding independent realizations of the heteroscedastic noise to pixels to make the embedding resemble the same cover image acquired at a larger sensor ISO setting (the so-called cover source switch). To demonstrate the feasibility and practicality of the proposed method and to validate our simplifying assumptions, we work with two digital cameras, one using a monochrome sensor and a second one equipped with a color sensor. We then explore several versions of the embedding algorithm depending on the model of the added noise in the DCT domain and the possible use of demosaicking to convert the raw image values. These experiments indicate that the demosaicking step has a significant impact on statistical detectability for high JPEG quality factors when making independent embedding changes to DCT coefficients. Additionally, for monochrome sensors or low JPEG quality factors, a very large payload can be embedded with high empirical security.
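The cover source switch can be illustrated in the RAW pixel domain with a minimal sketch. It assumes the commonly used heteroscedastic sensor noise model in which the noise variance is linear in the pixel intensity, σ² = a·μ + b, with (a, b) depending on the ISO setting. The abstract does not state this model, all parameter values below are hypothetical, and the sketch does not cover the DCT-domain embedding studied in the paper.

```python
import random


def switch_noise_variance(mu, params_low, params_high):
    """Variance of the noise that moves a pixel from the low-ISO model to the
    high-ISO model, assuming variance = a * mu + b at each ISO setting."""
    a_low, b_low = params_low
    a_high, b_high = params_high
    return (a_high - a_low) * mu + (b_high - b_low)


def simulate_source_switch(pixels, params_low, params_high, rng):
    """Add independent zero-mean Gaussian noise to each RAW pixel value."""
    noisy = []
    for mu in pixels:
        var = switch_noise_variance(mu, params_low, params_high)
        if var < 0:
            raise ValueError("target ISO must be noisier than the source ISO")
        noisy.append(mu + rng.gauss(0.0, var ** 0.5))
    return noisy


# Hypothetical (a, b) noise parameters for a low and a high ISO setting.
iso_low = (0.5, 2.0)
iso_high = (1.5, 5.0)

rng = random.Random(0)  # fixed seed so the sketch is reproducible
print(simulate_source_switch([10.0, 200.0, 1000.0], iso_low, iso_high, rng))
```

Brighter pixels receive noise of larger variance under this model, which is what makes the added signal heteroscedastic rather than uniform across the image.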

Deep learning and convolutional neural networks (CNNs) have been used intensively in many image processing topics in recent years. As far as steganalysis is concerned, the use of CNNs allows reaching state-of-the-art results. The performance of such networks often relies on the size of their learning database. An obvious preliminary assumption would be that the bigger the database, the better the results. However, it appears that caution is needed when increasing the database size if one desires to improve the classification accuracy, i.e., to enhance the steganalysis efficiency. To our knowledge, no study has been performed on the impact of enriching a learning database on steganalysis performance. What kind of images can be added to the initial learning set? What are the sensitive criteria: the camera models used for acquiring the images, the processing applied to the images, the proportions of cameras in the database, etc.? This article continues the work carried out in a previous paper in submission [1], and explores ways to improve the performance of CNNs. It aims at studying the effects of base augmentation on the performance of steganalysis using a CNN. We present the results of this study using various experimental protocols and various databases to define good practices in base augmentation for steganalysis.