The challenges of film restoration demand versatile tools, making machine learning (ML)—through training custom models—an ideal solution. This research demonstrates that custom models effectively restore color in deteriorated films, even without direct references, and recover spatial features using techniques like gauge and analog video reference recovery. A key advantage of this approach is its ability to address restoration tasks that are difficult or impossible with traditional methods, which rely on spatial and temporal filters. While general-purpose video generation models like Runway, Sora, and Pika Labs have advanced significantly, they often fall short in film restoration due to limitations in temporal consistency, artifact generation, and lack of precise control. Custom ML models offer a solution by providing targeted restoration and overcoming the inherent limitations of conventional filtering techniques. Results from employing these local models are promising; however, developing highly specific models tailored to individual restoration scenarios is crucial for greater efficiency.

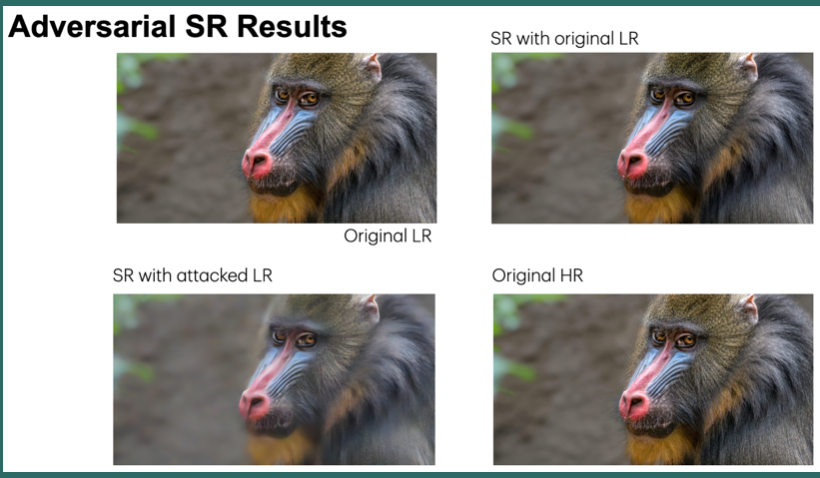

Machine learning and image enhancement models are prone to adversarial attacks, in which inputs are manipulated to cause misclassifications. While previous research has focused on techniques like Generative Adversarial Networks (GANs), there has been limited exploration of using GANs and the Synthetic Minority Oversampling Technique (SMOTE) to perform adversarial attacks on image super-resolution models and on text and image classification models. Our study addresses this gap by training various machine learning models and using GANs and SMOTE to generate additional data points aimed at attacking super-resolution and classification algorithms. We extend our investigation to face recognition models, training a Convolutional Neural Network (CNN) and subjecting it to adversarial attacks with fast gradient sign perturbations on key features identified by GradCAM, a technique that highlights the image regions a CNN relies on for classification. Our experiments reveal a significant vulnerability in classification models. Specifically, we observe a 20% decrease in accuracy for the top-performing text classification models post-attack, along with a 30% decrease in facial recognition accuracy.
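
The fast gradient sign perturbation mentioned above can be illustrated with a minimal sketch. It is not the study's implementation: a tiny logistic-regression model stands in for the CNN, and the `mask` argument is a hypothetical 0/1 list standing in for a saliency map such as one derived from GradCAM. The weights, input, and `epsilon` are made-up values.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic-regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return sigmoid(z)

def sign(v):
    # Returns -1, 0, or 1.
    return (v > 0) - (v < 0)

def fgsm_perturb(weights, bias, x, y_true, epsilon, mask=None):
    """Return x + epsilon * sign(dLoss/dx), restricted to masked features.

    For binary cross-entropy with a logistic model, dLoss/dx = (p - y) * w.
    `mask` is a hypothetical stand-in for a saliency map; None perturbs
    every feature.
    """
    p = predict_proba(weights, bias, x)
    grad = [(p - y_true) * w for w in weights]
    if mask is None:
        mask = [1] * len(x)
    return [xi + epsilon * m * sign(g) for xi, m, g in zip(x, mask, grad)]

# Illustrative values only (assumptions, not data from the study).
weights = [2.0, -1.0, 0.5]
bias = 0.0
x = [0.5, 0.2, 0.1]

# Perturb only the first two features, as if they were the salient ones.
x_adv = fgsm_perturb(weights, bias, x, y_true=1, epsilon=0.3, mask=[1, 1, 0])

p_clean = predict_proba(weights, bias, x)
p_adv = predict_proba(weights, bias, x_adv)
print(f"clean p(class 1) = {p_clean:.3f}, adversarial p(class 1) = {p_adv:.3f}")
```

Restricting the perturbation to masked features mirrors the idea of attacking only the regions a saliency method marks as important; with a real CNN the gradient would come from automatic differentiation rather than a closed form.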

Cancer treatment involves complex decision-making processes. A better understanding of recurrence risk at diagnosis, as well as prediction of treatment response (e.g., to choose the most cost-effective treatment path in an informed manner), is needed to produce the best possible outcomes at the patient level. Prior work shows that the forward/backward (F/B) ratio calculated from Second Harmonic Generation (SHG) imagery can be indicative of risk of recurrence if relevant tissue sub-regions are selected. The choice of which sub-regions to image is currently made by human experts, which is subjective and labor intensive. In this paper, we investigate machine learning methods to automatically identify tissue sub-regions that are most relevant to the prediction of breast cancer recurrence. We formulate the task as a multi-class classification problem and use support vector machine (SVM) classifiers as the inference engine. Given the limited amount of data available, we focus on exploring the feature extraction stage. To that end, we evaluate methods leveraging handcrafted features, deep features extracted from pre-trained models, and features extracted via transfer learning. The results show a steady improvement in classification accuracy as the features become more data-driven and customized to the task at hand. This indicates that larger amounts of labeled data could further improve automated classification methods. The best results, achieved using features learned via transfer learning from ResNet-101, correspond to 85% accuracy in a 3-class problem and 94% accuracy in a binary classification problem.

Working in protected workshops places supervisors in a field of competing targets. On the one hand, workers with disabilities require a safe space that meets their special requirements; on the other hand, customers expect time and quality standards comparable to those of regular industry while maintaining cost pressure. We propose a technical solution to support supervisors with quality control. We developed a flexible assistance system for people with disabilities working in protected workshops that is based on a Raspberry Pi 4 and uses cameras for perception. It is applicable to packaging and picking processes and is supported by additional step-by-step guidance so that it can reach as many protected workshops as possible. The system supports supervisors in quality control and notifies them when action is required, freeing time for interpersonal matters. An automatic pick-by-light system that uses hand recognition is included. To ensure good speed, we used image processing and verified the detections with a machine learning approach for robustness against lighting conditions. In this paper, we present the system, which is available as open source, along with its features and the development of the machine learning algorithm.

Developing machine learning models for image classification problems involves various tasks, such as model selection, layer design, and hyperparameter tuning, to improve model performance. However, for deep learning models, insufficient interpretability makes it difficult to understand how they make predictions. To facilitate model interpretation, performance analysis at the class and instance levels with model visualization is essential. We herein present an interactive visual analytics system that provides a wide range of performance evaluations of different machine learning models for image classification. The proposed system aims to overcome these challenges by providing visual performance analysis at different levels and visualizing misclassified instances. The system, which comprises five views, including ranking, projection, matrix, and instance list views, enables the comparison and analysis of different models through user interaction. Several use cases of the proposed system are described, and its application to MNIST data is explained. Our demo app is available at https://chanhee13p.github.io/VisMlic/.
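
The class-level and instance-level analysis described above rests on a few simple quantities. The following is a minimal sketch of them, not the system's code: the function names and the toy labels for two hypothetical models are assumptions made for illustration.

```python
from collections import Counter

def confusion_matrix(y_true, y_pred, labels):
    """Rows are true classes, columns are predicted classes."""
    counts = Counter(zip(y_true, y_pred))
    return [[counts[(t, p)] for p in labels] for t in labels]

def per_class_recall(matrix):
    """Fraction of each true class that was predicted correctly."""
    return [row[i] / sum(row) if sum(row) else 0.0
            for i, row in enumerate(matrix)]

def misclassified_instances(y_true, y_pred):
    """List of (index, true label, predicted label) for every error."""
    return [(i, t, p) for i, (t, p) in enumerate(zip(y_true, y_pred)) if t != p]

# Toy data: predictions from two hypothetical models on the same 7 samples.
labels = [0, 1, 2]
y_true = [0, 0, 1, 1, 2, 2, 2]
model_a = [0, 1, 1, 1, 2, 0, 2]
model_b = [0, 0, 1, 2, 2, 2, 2]

for name, y_pred in [("model_a", model_a), ("model_b", model_b)]:
    matrix = confusion_matrix(y_true, y_pred, labels)
    print(name, "confusion matrix:", matrix)
    print(name, "per-class recall:", per_class_recall(matrix))
    print(name, "errors:", misclassified_instances(y_true, y_pred))
```

A matrix view would render the confusion matrix, while an instance list view would be driven by something like the error list; comparing both across models shows which classes each model struggles with.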

Hyperspectral image classification has received more attention from researchers in recent years. Hyperspectral imaging systems utilize sensors that acquire data mostly from the visible through the near-infrared wavelength ranges and capture tens to hundreds of spectral bands. The detailed spectral information increases the possibility of accurately classifying materials. Unfortunately, conventional spectral camera sensors use spatial or spectral scanning during acquisition, which is only suitable for static scenes such as earth observation. In dynamic scenarios, such as autonomous driving applications, the acquisition of the entire hyperspectral cube in one step is mandatory. To allow hyperspectral classification and enhance terrain drivability analysis for autonomous driving, we investigate the eligibility of novel mosaic-snapshot-based hyperspectral cameras. These cameras capture an entire hyperspectral cube without requiring moving parts or line-scanning. The sensor is mounted on a vehicle in a driving scenario in rough terrain with dynamic scenes. The captured hyperspectral data is used for terrain classification utilizing machine learning techniques. A major problem, however, is the presence of shadows in captured scenes, which degrades the classification results. We present and test methods to automatically detect shadows by taking advantage of the near-infrared (NIR) part of the spectrum to build shadow maps. By utilizing these shadow maps, a classifier may be able to produce better results and avoid misclassifications due to shadows. The approaches are tested on our new hand-labeled hyperspectral dataset, acquired by driving through suburban areas with several hyperspectral snapshot-mosaic cameras.
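
As a rough illustration of how an NIR band can help build a shadow map, the sketch below marks a pixel as shadow only when it is dark in both the visible and the NIR band. This is a simple thresholding heuristic chosen for illustration, not the methods evaluated in the paper; the function name, the thresholds, and the toy reflectance values are all assumptions.

```python
def shadow_map(visible, nir, vis_thresh=0.25, nir_thresh=0.25):
    """Return a boolean map that is True where a pixel looks shadowed.

    `visible` and `nir` are equally sized 2D lists of values in [0, 1].
    A pixel counts as shadow only if it is dark in BOTH bands, so that
    surfaces that are dark in the visible range but bright in NIR
    (vegetation, for example) are not flagged.
    """
    return [
        [v < vis_thresh and n < nir_thresh for v, n in zip(vis_row, nir_row)]
        for vis_row, nir_row in zip(visible, nir)
    ]

# Toy 2x3 scene (illustrative values only).
visible = [[0.8, 0.1, 0.1],
           [0.6, 0.2, 0.7]]
nir = [[0.7, 0.10, 0.6],
       [0.5, 0.15, 0.9]]

mask = shadow_map(visible, nir)
print(mask)  # the middle column is flagged; the dark-but-NIR-bright pixel is not
```

A downstream classifier could then ignore, reweight, or separately handle the flagged pixels.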

An RGB-IR sensor combines the capabilities of an RGB sensor and an IR sensor in a single sensor. However, the additional IR pixel in the RGB-IR sensor reduces the effective number of pixels allocated to the visible region, introducing aliasing artifacts during demosaicing. In addition, the presence of IR content in the R, G, and B channels poses new challenges for accurate color reproduction. Sharpness and color reproduction are very important image quality factors for visual aesthetics as well as for computer vision algorithms. The demosaicing and color correction modules are integral parts of any color image processing pipeline and are responsible for sharpness and color reproduction, respectively. The image processing pipeline has not been fully explored for RGB-IR sensors. We propose a neural network-based approach to demosaicing and color correction for RGB-IR patterned image sensors. Our experimental results show that our learning-based approach performs better than existing demosaicing and color correction methods.

Interferometric tomography can reconstruct 3D refractive index distributions through phase-shift measurements for different beam angles. To reconstruct a complex refractive index distribution, many projections along different directions are required. To increase the number of projections, we earlier proposed a beam-angle-controllable interferometer with mechanical stages; however, some of the phase images extracted from the interferograms contained large errors because the background fringes could not be precisely controlled. In this study, we propose to apply machine learning to phase extraction, which has generally been performed by a sequence of several rule-based algorithms. To estimate a phase-shift image, we employ supervised learning in which the input is an interferogram and the output is the phase-shift image, both of which are simulation data. As a result, the trained network can estimate phase-shift images almost correctly from interferograms, a task that was difficult for the rule-based algorithms.
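
To make the idea of simulated training pairs concrete, the sketch below generates one interferogram and its ground-truth phase-shift image. It is an illustration under stated assumptions rather than the simulation used in the study: it assumes the common two-beam fringe model I = 0.5 + 0.5 cos(2π(f_x x + f_y y) + φ(x, y)) with a linear carrier fringe, and a Gaussian phase object; the function name and all parameter values are hypothetical.

```python
import math

def simulate_pair(size, carrier_fx, carrier_fy, peak_phase, sigma):
    """Return (interferogram, phase) as size x size nested lists.

    phase: a Gaussian bump with height `peak_phase` (radians) at the centre.
    interferogram: carrier fringes modulated by that phase, scaled to [0, 1].
    """
    c = (size - 1) / 2.0
    phase = [
        [peak_phase * math.exp(-((x - c) ** 2 + (y - c) ** 2) / (2 * sigma ** 2))
         for x in range(size)]
        for y in range(size)
    ]
    interferogram = [
        [0.5 + 0.5 * math.cos(2 * math.pi * (carrier_fx * x + carrier_fy * y)
                              + phase[y][x])
         for x in range(size)]
        for y in range(size)
    ]
    return interferogram, phase

# One training pair; varying the carrier frequencies between samples would
# mimic background fringes that are not precisely controlled.
interferogram, phase = simulate_pair(size=33, carrier_fx=0.1, carrier_fy=0.02,
                                     peak_phase=3.0, sigma=6.0)
print(len(interferogram), len(interferogram[0]), phase[16][16])
```

A supervised network would take `interferogram` as input and be trained to reproduce `phase`.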

For decades, image quality analysis pipelines have used filters derived from the human visual system. Although this paradigm captures the basic aspects of human vision, it falls short of characterizing the complex human perception of visual appearance and image quality. In this work, we propose a new framework that leverages the image recognition capabilities of convolutional neural networks to distinguish the visual differences between uniform halftone target samples that are printed on different media using the same printing technology. First, for each scanned target sample, a pre-trained Residual Neural Network is used to generate a 2,048-dimensional visual feature vector. Then, Principal Component Analysis is used to reduce the dimension to 48 components, which are then used to train a Support Vector Machine to classify the target images. Our model has been tested on various classification and regression tasks and shows very good performance. Further analysis shows that our neural-network-based image quality model learns to make decisions based on the frequencies of color variations within the target image, and that it is capable of characterizing the visual differences under different printer settings.
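
The dimensionality-reduction step of this pipeline can be sketched as follows. This is a minimal NumPy illustration of PCA from 2,048 to 48 dimensions, not the authors' code: random vectors stand in for the ResNet features, the sample count of 200 is arbitrary, and the function name `pca_reduce` is hypothetical. The Support Vector Machine stage is omitted.

```python
import numpy as np

def pca_reduce(features, n_components):
    """Project row-vector features onto their top principal components.

    Returns (reduced, components, mean). `components` has one principal
    direction per row, ordered by decreasing explained variance.
    """
    mean = features.mean(axis=0)
    centered = features - mean
    # SVD of the centered data: the rows of vt are the principal directions.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]
    return centered @ components.T, components, mean

# Stand-in for 200 scanned samples with 2,048-dimensional feature vectors.
rng = np.random.default_rng(0)
features = rng.normal(size=(200, 2048))

reduced, components, mean = pca_reduce(features, n_components=48)
print(reduced.shape)  # (200, 48)
```

New samples would be projected with `(new_features - mean) @ components.T` before being passed to the classifier.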

Hyperspectral imaging increases the amount of information captured per pixel in comparison to normal color cameras. Conventional hyperspectral sensors, as used in satellite imaging, utilize spatial or spectral scanning during acquisition, which is only suitable for static scenes. In dynamic scenarios, such as autonomous driving applications, the acquisition of the entire hyperspectral cube at the same time is mandatory. In this work, we investigate the eligibility of novel snapshot hyperspectral cameras in such dynamic scenarios. These new sensors capture a hyperspectral cube containing 16 or 25 spectral bands without requiring moving parts or line-scanning. The sensors were mounted on land vehicles and used in several driving scenarios in rough terrain and dynamic scenes. We captured several hundred gigabytes of hyperspectral data, which were used for terrain classification. We propose a random-forest classifier based on hyperspectral and spatial features, combined with fully connected conditional random fields, ensuring local consistency and context-aware semantic scene segmentation. The classification is evaluated against a novel hyperspectral ground truth dataset specifically created for this purpose.