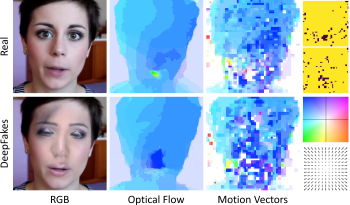

Video DeepFakes are fake media created with Deep Learning (DL) that manipulate a person’s expression or identity. Most current DeepFake detection methods analyze each frame independently, ignoring inconsistencies and unnatural movements between frames. Some newer methods employ optical flow models to capture this temporal aspect, but they are computationally expensive. In contrast, we propose using the related but often ignored Motion Vectors (MVs) and Information Masks (IMs) from the H.264 video codec to detect temporal inconsistencies in DeepFakes. Our experiments show that this approach is effective and adds minimal computational cost compared with per-frame RGB-only methods. This could lead to new, real-time, temporally aware DeepFake detection methods for video calls and streaming.
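To make the notion of a motion vector concrete, the following is a minimal sketch of exhaustive block matching, the basic idea behind the vectors that an H.264 encoder stores in the bitstream. It is not the detection method evaluated in this work; the function names, the block size, and the search range are illustrative assumptions, and frames are assumed to be grayscale lists of rows.

```python
def sad(prev, curr, top, left, dy, dx, block):
    """Sum of absolute differences between a block in `curr` and the
    block displaced by (dy, dx) in `prev`."""
    total = 0
    for y in range(block):
        for x in range(block):
            total += abs(curr[top + y][left + x]
                         - prev[top + dy + y][left + dx + x])
    return total


def estimate_motion_vector(prev, curr, top, left, block=4, search=2):
    """Return the displacement (dy, dx) into `prev` that best matches the
    block of `curr` whose top-left corner is (top, left).

    Hypothetical helper for illustration: a real encoder uses far more
    elaborate searches, sub-pixel precision, and variable block sizes.
    """
    height, width = len(prev), len(prev[0])
    best = (0, 0)
    best_cost = sad(prev, curr, top, left, 0, 0, block)
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            # Skip candidates whose reference block leaves the frame.
            if not (0 <= top + dy and top + dy + block <= height):
                continue
            if not (0 <= left + dx and left + dx + block <= width):
                continue
            cost = sad(prev, curr, top, left, dy, dx, block)
            if cost < best_cost:
                best, best_cost = (dy, dx), cost
    return best
```

With this convention, content that moved one row down and two columns to the right between `prev` and `curr` yields the vector `(-1, -2)`, because the vector points from the current block back to its match in the previous frame. Since the vectors are already present in an encoded H.264 stream, reading them avoids running a separate search or optical flow model.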

Robust multi-camera calibration is a fundamental task for all multi-view camera systems, leveraging discrete camera model fitting from sparse target observations. Stereo systems, photogrammetry and light-field arrays have all demonstrated the need for geometrically consistent calibrations to achieve higher levels of sub-pixel localization accuracy for improved depth estimation. This work presents a calibration target that leverages multi-directional features to achieve improved dense calibrations of camera systems. We begin by presenting a 2D target that uses an encoded feature set, each feature carrying 12 bits of uniqueness for flexible patterning and easy identification. These features combine orthogonal sets of straight and circular binary edges, along with Gaussian peaks. Our proposed feature extraction algorithm uses steerable filters for edge localization and ellipsoidal peak fitting for circle center estimation. Feature uniqueness is used for association across views, and the associated features are combined into a 3D pose graph for nonlinear optimization. Existing camera models are leveraged for intrinsic and extrinsic estimates, demonstrating a reduction in mean re-projection error for stereo calibration from 0.2 pixels with a traditional checkerboard to 0.01 pixels with the proposed target.
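For readers unfamiliar with the metric, mean re-projection error can be sketched as follows. This is a minimal illustration that assumes an ideal pinhole camera without lens distortion and 3D points already expressed in the camera frame; the function names and the parameters `fx`, `fy`, `cx`, `cy` are illustrative and are not taken from this work.

```python
import math


def project(point, fx, fy, cx, cy):
    """Project a 3D point (camera coordinates) to pixel coordinates
    with an ideal pinhole model (assumption: no lens distortion)."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")
    return (fx * x / z + cx, fy * y / z + cy)


def mean_reprojection_error(points_3d, observed_px, fx, fy, cx, cy):
    """Average Euclidean distance, in pixels, between the projected
    points and the feature locations observed in the image."""
    if not points_3d or len(points_3d) != len(observed_px):
        raise ValueError("need equally many, at least one, points and observations")
    total = 0.0
    for point, (u_obs, v_obs) in zip(points_3d, observed_px):
        u, v = project(point, fx, fy, cx, cy)
        total += math.hypot(u - u_obs, v - v_obs)
    return total / len(points_3d)
```

A calibration optimizer adjusts the intrinsic and extrinsic parameters to drive this quantity down, so more precisely localized target features translate directly into a lower achievable error.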

In the competitive online fashion marketplace, it is common for sellers to add artificial elements to their product images in the hope of improving the aesthetic quality of their products. Among the numerous types of artificial elements, this paper focuses on detecting artificial frames in fashion images, and we propose a novel algorithm based on traditional image processing techniques for this purpose. Although deep learning methods have been powerful and effective in many image processing tasks in recent years, they have drawbacks in some cases that render them less effective than our method for this particular task. Experimental results on 1000 test images show that our algorithm has performance comparable to some of the state-of-the-art deep learning models that have been used for classification.