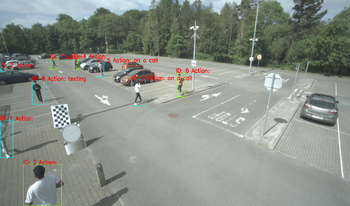

In this paper, we present a database of annotated videos showing a number of people performing several actions in a parking lot. The chosen actions represent situations in which the pedestrian could be distracted and not fully aware of her surroundings: “looking behind”, “on a call”, and “texting”, plus a “no action” label for when the person performs none of these. In addition to the action, the speed of the person is labeled with one of three values: “standing”, “walking”, or “running”. Bounding boxes of the people present in each frame are also provided, along with a unique identifier for each person. The main goal is to provide the research community with examples of actions that can be of interest for surveillance or safe autonomous driving. The speed label gives researchers richer information: for example, “running” while “on a call” or “looking behind” can be treated as more dangerous behavior than “walking”.
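As an illustration of how one annotation from such a database might be represented, the following minimal sketch encodes the labels listed above. The label strings come from the abstract; the class name, field names, and the bounding-box layout are hypothetical and do not describe the actual file format of the database.

```python
from dataclasses import dataclass

# Label sets taken from the abstract.
ACTIONS = ("looking behind", "on a call", "texting", "no action")
SPEEDS = ("standing", "walking", "running")


@dataclass(frozen=True)
class PedestrianAnnotation:
    """One labeled person in one frame (hypothetical record layout)."""
    frame: int
    person_id: int   # unique identifier of the person
    box: tuple       # assumed layout: (x_min, y_min, x_max, y_max) in pixels
    action: str
    speed: str

    def __post_init__(self):
        # Reject labels outside the annotated vocabulary.
        if self.action not in ACTIONS:
            raise ValueError(f"unknown action: {self.action!r}")
        if self.speed not in SPEEDS:
            raise ValueError(f"unknown speed: {self.speed!r}")
        x_min, y_min, x_max, y_max = self.box
        if x_max <= x_min or y_max <= y_min:
            raise ValueError("bounding box must have positive width and height")


def is_distracted(annotation):
    """True for any label other than 'no action'."""
    return annotation.action != "no action"
```

A record built this way can be filtered by action and speed together, for example to select every frame in which a person is "texting" while "running".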

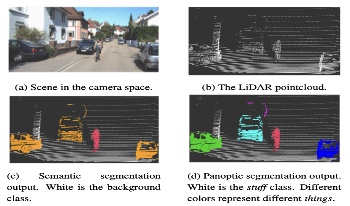

This survey provides a comprehensive overview of LiDAR-based panoptic segmentation methods for autonomous driving. We motivate the importance of panoptic segmentation in autonomous vehicle perception, emphasizing its advantages over traditional 3D object detection in capturing a more detailed and comprehensive understanding of the environment. We summarize and categorize 42 panoptic segmentation methods based on their architectural approaches, with a focus on the kind of clustering utilized: machine learned or non-learned heuristic clustering. We discuss direct methods, most of which use single-stage architectures to predict binary masks for each instance, and clustering-based methods, most of which predict offsets to object centers for efficient clustering. We also highlight relevant datasets, evaluation metrics, and compile performance results on SemanticKITTI and panoptic nuScenes benchmarks. Our analysis reveals trends in the field, including the effectiveness of attention mechanisms, the competitiveness of center-based approaches, and the benefits of sensor fusion. This survey aims to guide practitioners in selecting suitable architectures and to inspire researchers in identifying promising directions for future work in LiDAR-based panoptic segmentation for autonomous driving.

The design and evaluation of complex systems can benefit from a software simulation, sometimes called a digital twin. The simulation can be used to characterize system performance or to test that performance under conditions that are difficult to measure (e.g., nighttime for automotive perception systems). We describe the image system simulation software tools that we use to evaluate the performance of image systems for object (automobile) detection. We describe experiments with 13 different cameras with a variety of optics and pixel sizes. To measure the impact of camera spatial resolution, we designed a collection of driving scenes with cars at many different distances. We quantified system performance by measuring average precision, and we report a trend relating system resolution and object detection performance. We also quantified the large performance degradation under nighttime conditions, compared to daytime, for all cameras and a COCO pre-trained network.
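For readers unfamiliar with the metric, average precision can be sketched as follows. This is the simple non-interpolated variant, and the abstract does not state which variant the authors used. The function name and arguments are hypothetical, and matching detections to ground-truth cars (for example by an overlap threshold) is assumed to have been done beforehand.

```python
def average_precision(scores, is_true_positive, num_ground_truth):
    """Non-interpolated average precision for one object class.

    scores           -- detection confidences
    is_true_positive -- for each detection, whether it matched a ground-truth object
    num_ground_truth -- number of ground-truth objects in the evaluated scenes
    """
    if num_ground_truth <= 0:
        raise ValueError("num_ground_truth must be positive")
    # Rank detections from most to least confident.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    true_positives = 0
    precision_sum = 0.0
    for rank, idx in enumerate(order, start=1):
        if is_true_positive[idx]:
            true_positives += 1
            # Precision at the rank of each correctly detected object.
            precision_sum += true_positives / rank
    # Missed objects contribute zero, which penalizes low recall.
    return precision_sum / num_ground_truth
```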

Measuring vehicle locations relative to a driver's vehicle is a critical component in the analysis of driving data, both in post-analysis (such as in naturalistic driving studies) and in autonomous vehicle navigation. In this work we describe a method to estimate vehicle positions from a forward-looking video camera using intrinsic camera calibration, estimates of extrinsic parameters, and a convolutional neural network trained to detect and locate vehicles in video data. We compare the measurements achieved with this method against ground truth and against radar data available from a naturalistic driving study. We identify regions where video is preferred and regions where radar is preferred, and we explore trade-offs between the two methods in regions where the preference is more ambiguous. We describe applications of these measurements for transportation analysis.
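One common way to turn a detection into a position, given intrinsic and extrinsic parameters, is to back-project the pixel where the vehicle touches the road onto a flat ground plane. The sketch below illustrates that idea under strong assumptions (pinhole camera, no lens distortion, flat road, zero roll and yaw). It is not necessarily the method used in this work, and all names and parameters are hypothetical.

```python
import math


def pixel_to_ground(u, v, fx, fy, cx, cy, cam_height, pitch=0.0):
    """Back-project an image point onto a flat road plane.

    (u, v)     -- pixel, e.g. bottom-center of a detected vehicle's bounding box
    fx, fy     -- focal lengths in pixels (intrinsics)
    cx, cy     -- principal point in pixels (intrinsics)
    cam_height -- camera height above the road in meters (extrinsic)
    pitch      -- downward camera tilt in radians (extrinsic)

    Returns (lateral, forward) in meters, or None if the ray never hits the road.
    """
    # Ray direction in camera coordinates: x right, y down, z forward.
    dx = (u - cx) / fx
    dy = (v - cy) / fy
    dz = 1.0
    # Rotate the ray by the camera pitch into road-aligned coordinates.
    down = math.cos(pitch) * dy + math.sin(pitch) * dz
    forward = -math.sin(pitch) * dy + math.cos(pitch) * dz
    if down <= 0.0:
        return None  # pixel is at or above the horizon
    # Scale the ray so that it descends exactly cam_height.
    scale = cam_height / down
    return (scale * dx, scale * forward)
```

Because the forward distance scales with the inverse of the pixel's offset below the horizon, a one-pixel error matters far more for distant vehicles than for near ones, which is one reason a comparison against radar by region is informative.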

Hyperspectral image classification has received more attention from researchers in recent years. Hyperspectral imaging systems utilize sensors that acquire data mostly from the visible through the near-infrared wavelength ranges and capture from tens up to hundreds of spectral bands. This detailed spectral information increases the possibility of accurately classifying materials. Unfortunately, conventional spectral camera sensors use spatial or spectral scanning during acquisition, which is only suitable for static scenes such as earth observation. In dynamic scenarios, such as in autonomous driving applications, the acquisition of the entire hyperspectral cube in one step is mandatory. To allow hyperspectral classification and enhance terrain drivability analysis for autonomous driving, we investigate the eligibility of novel mosaic-snapshot based hyperspectral cameras. These cameras capture an entire hyperspectral cube without requiring moving parts or line-scanning. The sensor is mounted on a vehicle in a driving scenario in rough terrain with dynamic scenes. The captured hyperspectral data is used for terrain classification utilizing machine learning techniques. A major problem, however, is the presence of shadows in captured scenes, which degrades the classification results. We present and test methods to automatically detect shadows by taking advantage of the near-infrared (NIR) part of the spectrum to build shadow maps. By utilizing these shadow maps, a classifier may be able to produce better results and avoid misclassifications due to shadows. The approaches are tested on our new hand-labeled hyperspectral dataset, acquired by driving through suburban areas with several hyperspectral snapshot-mosaic cameras.

In this paper we propose a data-driven model for an autonomous highway pilot. The model is split into two basic parts, an acceleration/deceleration model and a lane change model. For modeling the acceleration, a Bayesian Network is used. For the lane change model, we apply a Hidden Markov Model. The lane change model delivers only discrete lane change events, such as staying in the lane or changing to the left or right, but no exact trajectories. The model is trained with simulated traffic data and validated in two different scenarios. In the first scenario, a single model-controlled vehicle is embedded into a simulated highway scenario. In the second scenario, all vehicles on a highway are controlled by the model. The proposed model shows reasonable driving behavior in both test scenarios.
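To make the idea of discrete lane change events from a Hidden Markov Model concrete, the sketch below decodes the most likely event sequence with the Viterbi algorithm. The three hidden states follow the events named in the abstract. The observation symbols and every probability are invented for illustration and are not the trained parameters of the proposed model.

```python
import math

STATES = ("keep", "left", "right")  # stay in lane, change left, change right

# All numbers below are hypothetical, chosen only to make the example run.
START = {"keep": 0.8, "left": 0.1, "right": 0.1}
TRANS = {
    "keep":  {"keep": 0.90, "left": 0.05, "right": 0.05},
    "left":  {"keep": 0.30, "left": 0.69, "right": 0.01},
    "right": {"keep": 0.30, "left": 0.01, "right": 0.69},
}
EMIT = {
    "keep":  {"drift_left": 0.10, "centered": 0.80, "drift_right": 0.10},
    "left":  {"drift_left": 0.80, "centered": 0.15, "drift_right": 0.05},
    "right": {"drift_left": 0.05, "centered": 0.15, "drift_right": 0.80},
}


def viterbi(observations):
    """Return the most likely hidden state sequence for the observations."""
    # Log-probabilities avoid numerical underflow on long sequences.
    score = {s: math.log(START[s]) + math.log(EMIT[s][observations[0]])
             for s in STATES}
    backpointers = []
    for obs in observations[1:]:
        new_score, pointer = {}, {}
        for s in STATES:
            # Best predecessor state for arriving in s at this step.
            prev = max(STATES, key=lambda p: score[p] + math.log(TRANS[p][s]))
            pointer[s] = prev
            new_score[s] = (score[prev] + math.log(TRANS[prev][s])
                            + math.log(EMIT[s][obs]))
        score = new_score
        backpointers.append(pointer)
    # Trace back from the best final state.
    state = max(STATES, key=lambda s: score[s])
    path = [state]
    for pointer in reversed(backpointers):
        state = pointer[state]
        path.append(state)
    return path[::-1]
```

The decoded path contains only the discrete events, which matches the abstract's point that no exact trajectories are produced.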

Training autonomous vehicles requires many driving sequences covering all situations [1]. Typically, a simulation environment (software-in-the-loop, SiL) accompanies real-world test drives to systematically vary environmental parameters. A missing piece in the optical model of those SiL simulations is the sharpness, given in linear system theory by the point-spread function (PSF) of the optical system. We present a novel numerical model for the PSF of an optical system that can efficiently model both experimental measurements and lens design simulations of the PSF. The numerical basis for this model is a non-linear regression of the PSF with an artificial neural network (ANN). The novelty lies in the portability and the parameterization of this model, which allow it to be applied in basically any conceivable optical simulation scenario, e.g. inserting a measured lens into a computer game to train autonomous vehicles. We present a lens measurement series, yielding a numerical function for the PSF that depends only on the parameters defocus, field and azimuth. By convolving existing images and videos with this PSF model, we apply the measured lens as a transfer function, thereby generating an image as if it were seen through the measured lens itself. This method can be applied in any optical scenario, but we focus on the context of autonomous driving, where the quality of the detection algorithms depends directly on the optical quality of the camera system used. With the parameterization of the optical model, we present a method to validate the functional and safety limits of camera-based ADAS based on the real, measured lens actually used in the product.
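The convolution step can be sketched in a few lines. The example below applies a single, shift-invariant PSF kernel to a grayscale image stored as nested lists. This is a simplification: the model described above depends on defocus, field and azimuth, so a faithful implementation would vary the kernel across the image. The function name and the zero-padding at the borders are assumptions made for illustration.

```python
def apply_psf(image, psf):
    """Convolve a grayscale image with a PSF kernel (odd kernel size assumed).

    image -- list of rows of pixel intensities
    psf   -- list of rows of non-negative kernel weights
    Pixels outside the image are treated as zero.
    """
    height, width = len(image), len(image[0])
    k_height, k_width = len(psf), len(psf[0])
    center_y, center_x = k_height // 2, k_width // 2
    # Normalize so the PSF redistributes light without adding or removing it.
    total = sum(sum(row) for row in psf)
    output = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            acc = 0.0
            for j in range(k_height):
                for i in range(k_width):
                    # True convolution: the kernel is flipped relative to the image.
                    src_y = y - (j - center_y)
                    src_x = x - (i - center_x)
                    if 0 <= src_y < height and 0 <= src_x < width:
                        acc += image[src_y][src_x] * psf[j][i]
            output[y][x] = acc / total
    return output
```

Convolving a single bright pixel with the kernel reproduces the normalized kernel at that location, which is a convenient sanity check for any PSF implementation.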

Autonomous driving has the potential to positively impact the daily life of humans. Techniques such as image processing, computer vision, and remote sensing have been highly involved in creating reliable and secure robotic cars. However, the interaction between human perception and autonomous driving has not been deeply explored. Therefore, the analysis of human perception during the cognitive driving task, while making critical driving decisions, may provide great benefits for the study of autonomous driving. To achieve such an analysis, eye movement data of human drivers was collected with a mobile eye-tracker while driving in an automotive simulator built around an actual physical car that mimics a realistic driving experience. Initial experiments have been performed to investigate the potential correlation between the driving behaviors and fixation patterns of the human driver.

Hyperspectral imaging increases the amount of information incorporated per pixel in comparison to normal color cameras. Conventional hyperspectral sensors, as used in satellite imaging, utilize spatial or spectral scanning during acquisition, which is only suitable for static scenes. In dynamic scenarios, such as in autonomous driving applications, the acquisition of the entire hyperspectral cube at the same time is mandatory. In this work, we investigate the eligibility of novel snapshot hyperspectral cameras in dynamic scenarios such as in autonomous driving applications. These new sensors capture a hyperspectral cube containing 16 or 25 spectral bands without requiring moving parts or line-scanning. These sensors were mounted on land vehicles and used in several driving scenarios in rough terrain and dynamic scenes. We captured several hundred gigabytes of hyperspectral data, which were used for terrain classification. We propose a random-forest classifier based on hyperspectral and spatial features, combined with fully connected conditional random fields ensuring local consistency and context-aware semantic scene segmentation. The classification is evaluated against a novel hyperspectral ground truth dataset specifically created for this purpose.