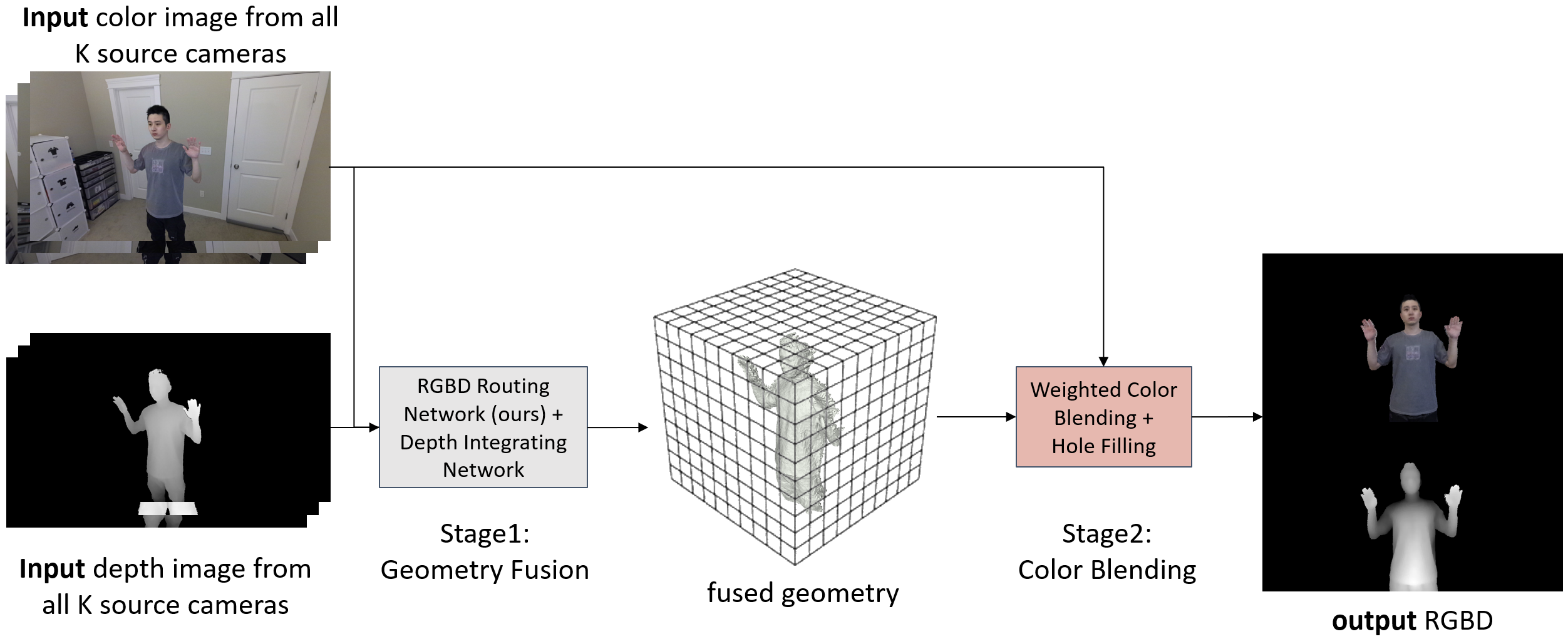

With the widespread use of video conferencing for remote communication in the workforce, there is an increasing demand for face-to-face communication between the two parties. Because frontal face images are difficult to acquire directly, multiple RGB-D cameras have been used to capture and render the frontal faces of target objects. However, depth camera noise can lead to geometry and color errors in the reconstructed 3D surfaces. In this paper, we propose RGBD Routed Blending, a novel two-stage pipeline for video conferencing that fuses multiple noisy RGB-D images in 3D space and renders virtual color and depth output images from a new camera viewpoint. The first stage, geometry fusion, consists of an RGBD Routing Network followed by a Depth Integrating Network, which together fuse the RGB-D input images into a 3D volumetric geometry. This fused geometry, an intermediate product, is passed along with the input color images to the second stage, color blending, which renders a new color image from the target viewpoint. We quantitatively evaluate our method on two datasets, a synthetic dataset (DeformingThings4D) and a newly collected real dataset, and show that it outperforms state-of-the-art baseline methods in both geometry accuracy and color quality.
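For orientation, the idea of fusing several noisy depth observations into one volumetric geometry can be illustrated with the classical weighted-average truncated signed distance (TSDF) update. This is a minimal sketch of that classical baseline, not the learned RGBD Routing Network or Depth Integrating Network described above; the function name `tsdf_update`, the truncation distance, and the single-ray voxel layout are assumptions made for illustration.

```python
import numpy as np

def tsdf_update(tsdf, weight, voxel_depth, measured_depth, trunc=0.05):
    """Fuse one depth measurement into a column of voxels along a camera ray.

    Illustrative only: classical running-average TSDF fusion, with a
    hypothetical truncation distance `trunc` (same units as the depths).
    """
    sdf = measured_depth - voxel_depth       # positive in front of the surface
    mask = sdf > -trunc                      # ignore voxels far behind the surface
    obs = np.clip(sdf / trunc, -1.0, 1.0)    # truncated, normalized observation
    new_weight = weight + mask               # each valid observation has weight 1
    fused = np.where(
        mask,
        (tsdf * weight + obs) / np.maximum(new_weight, 1),
        tsdf,
    )
    return fused, new_weight

# Three noisy readings of a surface near depth 1.0 along a single ray.
voxel_depth = np.linspace(0.9, 1.1, 21)
tsdf = np.ones_like(voxel_depth)
weight = np.zeros_like(voxel_depth)
for z in (0.98, 1.02, 1.00):
    tsdf, weight = tsdf_update(tsdf, weight, voxel_depth, z)
```

Averaging moves the zero crossing of the fused field toward the mean of the noisy readings, which is the behavior a learned fusion stage aims to improve on when the noise is not zero-mean.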

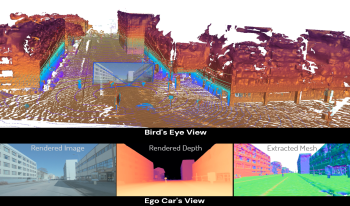

Dense 3D reconstruction has many applications in automated driving, including automated annotation validation, multi-modal data augmentation, providing ground truth annotations for systems lacking LiDAR, and enhancing auto-labeling accuracy. LiDAR provides highly accurate but sparse depth, whereas camera images enable dense depth estimation that is noisy, particularly at long ranges. In this paper, we harness the strengths of both sensors and propose a multimodal 3D scene reconstruction framework that combines neural implicit surfaces and radiance fields. In particular, our method estimates dense and accurate 3D structures and creates an implicit map representation based on signed distance fields, which can be further rendered into RGB images and depth maps. A mesh can be extracted from the learned signed distance field and culled based on occlusion. Dynamic objects are efficiently filtered on the fly during sampling using 3D object detection models. We demonstrate qualitative and quantitative results on challenging automotive scenes.
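To make concrete how a signed distance field can yield a depth value along a camera ray, the sketch below uses sphere tracing on an analytic sphere. Both choices are assumptions for illustration: the analytic `sphere_sdf` stands in for a learned field, and sphere tracing is a simple substitute for whatever rendering scheme the method itself uses, which the abstract does not specify.

```python
import numpy as np

# Hypothetical scene: a unit sphere five metres in front of the camera.
SPHERE_CENTER = np.array([0.0, 0.0, 5.0])
SPHERE_RADIUS = 1.0

def sphere_sdf(p):
    """Signed distance from point p to the sphere (negative inside)."""
    return np.linalg.norm(p - SPHERE_CENTER, axis=-1) - SPHERE_RADIUS

def sphere_trace(origin, direction, sdf, max_steps=64, eps=1e-4, far=100.0):
    """Return the distance along a unit-length ray to the first surface hit.

    At each step the SDF value is a safe step size, because no surface
    can be closer than that distance. Returns np.inf if nothing is hit.
    """
    t = 0.0
    for _ in range(max_steps):
        d = sdf(origin + t * direction)
        if d < eps:
            return t
        t += d
        if t > far:
            break
    return np.inf

# Depth along the optical axis of a camera at the origin looking down +z.
depth = sphere_trace(np.zeros(3), np.array([0.0, 0.0, 1.0]), sphere_sdf)
```

Repeating this per pixel gives a depth map; rays that never reach the surface are marked with `np.inf`.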

Passive stereo vision systems estimate 3D geometry from digital images, much as the human visual system does. In general, two cameras are situated at a known distance from the object and simultaneously capture images of the same scene from different views. This paper presents a comparative evaluation of the 3D scene geometries estimated by three disparity estimation algorithms: the hybrid stereo matching algorithm (HCS), the factor graph-based stereo matching algorithm (FGS), and a multi-resolution FGS algorithm (MR-FGS). Comparative studies were conducted using our stereo imaging system as well as hand-held, consumer-market digital cameras and camera phones of a variety of makes and models. Based on our experimental results, FGS and MR-FGS achieve higher 3D reconstruction accuracy than HCS. Compared with FGS, MR-FGS significantly improves the disparity contrast along depth boundaries, with minimal depth discontinuities.
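As background for how a disparity map becomes 3D geometry, the sketch below applies the standard rectified-stereo relation Z = f·B/d, where f is the focal length in pixels, B is the baseline between the two cameras, and d is the disparity in pixels. It assumes a rectified image pair and is independent of which algorithm (HCS, FGS, or MR-FGS) produced the disparities; the function name and the example camera parameters are hypothetical.

```python
import numpy as np

def disparity_to_depth(disparity, focal_px, baseline_m):
    """Convert a disparity map (pixels) to depth (metres), rectified stereo.

    Pixels with zero or negative disparity have no valid match and are
    assigned infinite depth.
    """
    disparity = np.asarray(disparity, dtype=np.float64)
    depth = np.full(disparity.shape, np.inf)
    valid = disparity > 0
    depth[valid] = focal_px * baseline_m / disparity[valid]
    return depth

# Example with assumed parameters: 500 px focal length, 10 cm baseline.
depth_map = disparity_to_depth([[10.0, 25.0, 0.0]], focal_px=500.0, baseline_m=0.1)
```

Because depth is inversely proportional to disparity, a fixed disparity error produces a depth error that grows with the square of the distance, which is why sharper disparity along depth boundaries matters for reconstruction accuracy.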