Image Aesthetic Assessment (IAA) has attracted increasing attention recently, but it remains challenging because of the high level of abstraction and complexity involved. In this paper, recent advancements in IAA are explored, emphasizing the goal and complexity of the task and the critical role it plays in improving visual content. Insights from our recent studies are combined to present a unified perspective on the state of IAA, focusing on methods based on genetic algorithms, language-based understanding, and composition-attribute guidance. These methods are examined for their potential in practical applications such as content selection and quality enhancement (e.g., autocropping). The discussion concludes with an overview of the challenges and future directions in this field.

High dynamic range (HDR) scenes are known to be challenging for most cameras. The most common artifacts of poor HDR scene rendition are clipped bright areas and noisy dark regions, which make images look unnatural and unpleasant. This paper introduces a novel methodology for automating the perceptual evaluation of detail rendition in these extreme regions of the histogram for images of natural scenes. The key contributions are 1) the construction of a robust database annotated with Just Objectionable Difference (JOD) scores, incorporating annotator outlier detection, and 2) the introduction of a multitask convolutional neural network (CNN) model that addresses the diverse contexts and region-of-interest challenges inherent in natural scenes. Our experimental evaluation demonstrates that the model's predictions align strongly with human evaluations. Its adaptability makes the model a valuable tool for consistent camera performance evaluation, contributing to the continuous evolution of smartphone technologies.
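The abstract does not say how the JOD scores are derived. In common practice, pairwise-comparison votes are scaled with a Thurstone Case V model so that a difference of 1 JOD corresponds to 75% of observers preferring one image over the other. The sketch below shows only that mapping for a single pair of conditions. The function names are hypothetical; a real scaling pipeline solves for all conditions jointly (e.g., by maximum likelihood) and would also perform the annotator outlier detection, which is omitted here.

```python
from statistics import NormalDist

# Standard normal distribution used for Thurstone Case V scaling.
_STD_NORMAL = NormalDist(mu=0.0, sigma=1.0)

# z-score at which one condition is preferred by 75% of observers;
# under the usual JOD convention this gap is defined as exactly 1 JOD.
_Z_75 = _STD_NORMAL.inv_cdf(0.75)


def preference_to_jod(p_prefer: float, eps: float = 1e-3) -> float:
    """Convert P(A preferred over B) into a JOD difference of A relative to B.

    p_prefer is clipped to [eps, 1 - eps] so unanimous votes stay finite.
    (Hypothetical helper for illustration.)
    """
    p = min(max(p_prefer, eps), 1.0 - eps)
    return _STD_NORMAL.inv_cdf(p) / _Z_75


def jod_from_counts(wins_a: int, wins_b: int) -> float:
    """JOD difference of A over B from raw pairwise-comparison vote counts."""
    total = wins_a + wins_b
    if total == 0:
        raise ValueError("no comparisons recorded")
    return preference_to_jod(wins_a / total)


if __name__ == "__main__":
    print(round(preference_to_jod(0.75), 6))  # 1.0
    print(round(jod_from_counts(15, 5), 6))   # 15/20 = 0.75 -> 1.0
```

Clipping is a simple stand-in for the priors that full JOD scaling tools use to keep unanimous comparisons from producing infinite distances.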

This study investigates how a specific imaging-pipeline distortion, read noise, affects the performance of an automatic license plate recognition algorithm. We first evaluated a pretrained three-stage license plate recognition algorithm on undistorted license plate images. We then applied 15 different levels of read noise using a well-known imaging pipeline simulation tool and assessed recognition performance on the distorted images. Our analysis reveals that recognition accuracy decreases as the read-noise level increases. Contrary to expectations, however, a small amount of noise can increase vehicle detection accuracy, particularly for the YOLO-based vehicle detection module. The optical character recognition (OCR) module is the part of the automatic license plate recognition system that is most prone to errors and most affected by read noise. These results highlight the importance of considering imaging pipeline distortions when designing and deploying automatic license plate recognition systems.
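The abstract names neither the simulation tool nor the 15 noise levels. As a rough illustration of the experimental idea, the sketch below models read noise as zero-mean Gaussian noise in electrons, added before linear quantization to digital numbers, and sweeps it over 15 levels. The full-well capacity, bit depth, level range, and all function names are hypothetical; shot noise, gain, and the rest of the image signal processor are deliberately omitted. Each noisy image would then be passed to the detection, plate-localization, and OCR stages, which are not shown.

```python
import numpy as np

# Hypothetical sensor parameters, chosen only for illustration.
FULL_WELL_E = 10_000.0  # full-well capacity in electrons
BIT_DEPTH = 8           # output bit depth in digital numbers (DN)


def add_read_noise(electrons: np.ndarray, read_noise_e: float,
                   rng: np.random.Generator) -> np.ndarray:
    """Add zero-mean Gaussian read noise with std `read_noise_e` (electrons)."""
    noisy = electrons + rng.normal(0.0, read_noise_e, size=electrons.shape)
    # Charge can be neither negative nor above the full-well capacity.
    return np.clip(noisy, 0.0, FULL_WELL_E)


def electrons_to_dn(electrons: np.ndarray) -> np.ndarray:
    """Linearly quantize an electron map to unsigned integer DN values."""
    max_dn = 2 ** BIT_DEPTH - 1
    dtype = np.uint8 if BIT_DEPTH <= 8 else np.uint16
    dn = np.round(electrons / FULL_WELL_E * max_dn)
    return np.clip(dn, 0, max_dn).astype(dtype)


def read_noise_sweep(electrons: np.ndarray, levels, seed: int = 0) -> dict:
    """Return {read-noise level: noisy quantized image} for each level."""
    rng = np.random.default_rng(seed)
    return {float(s): electrons_to_dn(add_read_noise(electrons, s, rng))
            for s in levels}


if __name__ == "__main__":
    scene = np.full((64, 64), 5_000.0)   # flat mid-grey scene, in electrons
    levels = np.linspace(0.0, 50.0, 15)  # 15 hypothetical read-noise levels
    for level, img in read_noise_sweep(scene, levels).items():
        print(f"{level:5.1f} e-  ->  DN std {img.std():.2f}")
```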

Retinex is a theory of colour vision, and it is also a well-known image enhancement algorithm. The Retinex algorithms reported in the literature are usually classified as either path-based or centre-surround. In the path-based approach, an image is processed by reintegrating along paths through proximate image regions and averaging the results over the paths. Centre-surround algorithms convolve an image (in log units) with a large-scale centre-surround-type operator. Both types of Retinex algorithm map a high dynamic range image to a lower-range counterpart suitable for display and, at the same time, have been proposed as a way to enhance an image for preference. In this paper, we reformulate one of the most common variants of the path-based approach and show that it can be recast as a centre-surround algorithm at multiple scales. Significantly, our new method processes images more quickly and is potentially biologically plausible. To the extent that path-based Retinex produces pleasing images, our method produces equivalent outputs. Experiments validate our method.
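For readers unfamiliar with the centre-surround form, the sketch below implements the generic multiscale version described above: take the log of the image, subtract a Gaussian-blurred copy of the log image (the surround) from each pixel (the centre), and average over several scales. This is the conventional construction, not the authors' reformulation of path-based Retinex, whose details the abstract does not give. The Gaussian surround, the scale values, and the min-max display stretch are assumptions; `scipy.ndimage.gaussian_filter` is used for the convolution.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def multiscale_retinex(image, sigmas=(15.0, 80.0, 250.0), eps=1e-6):
    """Centre-surround Retinex averaged over several Gaussian surround scales.

    image: float array, H x W (grey) or H x W x C (colour), linear values > 0.
    Returns the log-domain output (centre minus surround), averaged over scales.
    The default sigmas are illustrative, not taken from the paper.
    """
    img = np.asarray(image, dtype=np.float64)
    log_img = np.log(img + eps)  # work in log units
    out = np.zeros_like(log_img)
    for s in sigmas:
        # Blur only the two spatial axes; sigma 0 leaves the colour axis alone.
        axis_sigmas = (s, s) + (0.0,) * (img.ndim - 2)
        surround = gaussian_filter(log_img, sigma=axis_sigmas, mode="reflect")
        out += log_img - surround  # centre (the pixel itself) minus its surround
    return out / len(sigmas)


def to_display(retinex_out):
    """Min-max stretch the log-domain output to [0, 1] for viewing."""
    lo, hi = retinex_out.min(), retinex_out.max()
    if hi - lo < 1e-12:
        return np.zeros_like(retinex_out)
    return (retinex_out - lo) / (hi - lo)
```

A uniform image maps to zero everywhere, while an edge produces a positive response on its bright side and a negative one on its dark side, which is the local-contrast behaviour that both Retinex families share.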

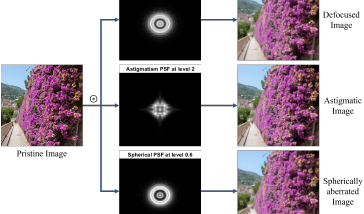

For image quality assessment, the availability of diverse databases is vital for developing and evaluating image quality metrics. Existing databases have played an important role in advancing the understanding of various types of distortion and in evaluating image quality metrics. However, a comprehensive representation of optical aberrations and their impact on image quality is lacking. This paper addresses this gap by introducing a novel image quality database focused on optical aberrations. We conduct a subjective experiment to capture human perceptual responses to a set of images with optical aberrations. We then test how well selected objective image quality metrics assess these aberrations. This approach not only ensures the relevance of our database to real-world scenarios but also provides an evaluation of the performance of the selected image quality metrics. The database is available for download at https://www.ntnu.edu/colourlab/software.
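The abstract does not state how metric performance is measured. A common convention is to correlate each metric's scores with the subjective mean opinion scores (MOS): Pearson's coefficient for linearity, and Spearman's and Kendall's for monotonicity. The sketch below computes these with `scipy.stats`; the function name is hypothetical, and the nonlinear (e.g., logistic) mapping that is often fitted before computing Pearson's coefficient is omitted.

```python
import numpy as np
from scipy.stats import kendalltau, pearsonr, spearmanr


def evaluate_metric(metric_scores, mos):
    """Correlate objective metric scores with mean opinion scores (MOS).

    Returns Pearson (linearity), Spearman and Kendall (monotonicity)
    coefficients. A negative sign only means the metric is a
    "lower is better" distance.
    """
    x = np.asarray(metric_scores, dtype=float)
    y = np.asarray(mos, dtype=float)
    if x.shape != y.shape or x.ndim != 1 or x.size < 3:
        raise ValueError("need two 1-D arrays of equal length (>= 3 images)")
    return {
        "PLCC": float(pearsonr(x, y)[0]),
        "SRCC": float(spearmanr(x, y)[0]),
        "KRCC": float(kendalltau(x, y)[0]),
    }
```

Reporting rank correlations alongside Pearson's coefficient keeps the comparison fair for metrics whose scales are monotonic but not linear in perceived quality.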

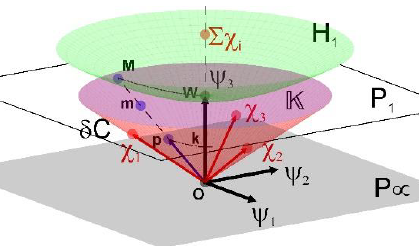

The visual system decomposes light entering the eye into an achromatic and a chromatic signal. Whether this decomposition is additive or multiplicative is still an open research question. Luminance has been found to be additive when measured physiologically but multiplicative in appearance. Demosaicing of multispectral single-shot images shows that additive decomposition is a linear solution to the inverse problem of mosaicing (sampling through a color/spectral filter array). For reflectance estimation, however, a multiplicative decomposition would be preferred. I will show that these two decompositions imply two different geometries that share the same vector space but not the same metric.
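As a toy illustration of the distinction (not the author's formulation), the sketch below splits a multichannel pixel either additively, as an achromatic level plus a zero-sum chromatic residual, or multiplicatively, as an achromatic level times a relative shape that averages to one. Taking the plain channel mean as the achromatic level is an assumption made for simplicity. Under a global intensity change the additive chromatic term scales with intensity while the multiplicative one is unchanged, which illustrates why a multiplicative form is attractive for reflectance estimation.

```python
import numpy as np


def additive_decomposition(x):
    """Split a multichannel pixel x into L * 1 + c, where c sums to zero.

    L is the plain channel mean (an assumption, not a photometric
    luminance); c is the additive chromatic residual.
    """
    x = np.asarray(x, dtype=float)
    lum = x.mean(axis=-1, keepdims=True)
    return lum, x - lum


def multiplicative_decomposition(x, eps=1e-12):
    """Split x into L * c, where c averages to one (a relative spectral shape)."""
    x = np.asarray(x, dtype=float)
    lum = x.mean(axis=-1, keepdims=True)
    return lum, x / (lum + eps)


if __name__ == "__main__":
    pixel = np.array([0.2, 0.4, 0.6])  # toy 3-channel pixel
    for k in (1.0, 3.0):               # k: global intensity scaling
        _, c_add = additive_decomposition(k * pixel)
        _, c_mul = multiplicative_decomposition(k * pixel)
        print(k, np.round(c_add, 3), np.round(c_mul, 3))
```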

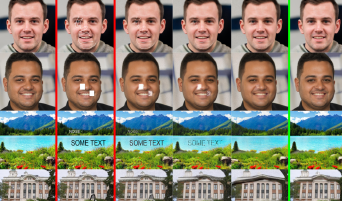

This paper introduces an innovative blind in-painting technique designed for image quality enhancement and noise removal. Using Monte Carlo simulations, the proposed method approximates the optimal mask needed for automatic image in-painting. This involves progressively constructing a noise removal mask, initially sampled at random from a binomial distribution. A confidence map is generated iteratively, providing a pixel-wise indicator of whether a particular pixel lies within the dataset domain. Notably, the proposed method eliminates the manual creation of a mask to remove noise, a time-consuming process, especially when noise is dispersed across the entire image. Furthermore, the method simplifies identifying the pixels involved in the in-painting process, excluding uncorrupted pixels and thereby preserving the integrity of the original image content. Computer simulations demonstrate the efficacy of this method in removing various types of noise, including brush painting and random salt-and-pepper noise. The proposed technique restores similarity between the original and normalized datasets, yielding a binary cross-entropy (BCE) of 0.69 and a peak signal-to-noise ratio (PSNR) of 20.069 dB. Owing to its versatility, the method can benefit diverse industrial and medical applications.
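The abstract reports results as BCE and PSNR but does not specify the data range or exactly which arrays were compared. For reference, the sketch below gives the standard definitions of both measures; the function names, the default 8-bit peak value of 255, and the clipping constant in the BCE are assumptions.

```python
import numpy as np


def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    ref = np.asarray(reference, dtype=np.float64)
    tst = np.asarray(test, dtype=np.float64)
    mse = np.mean((ref - tst) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


def binary_cross_entropy(target, predicted, eps=1e-7):
    """Mean BCE between binary targets and predicted probabilities.

    Predictions are clipped to [eps, 1 - eps] to avoid log(0).
    """
    t = np.asarray(target, dtype=np.float64)
    p = np.clip(np.asarray(predicted, dtype=np.float64), eps, 1.0 - eps)
    return float(-np.mean(t * np.log(p) + (1.0 - t) * np.log(1.0 - p)))
```

For example, an 8-bit image that differs from its reference by exactly one grey level at every pixel has a PSNR of about 48.13 dB, and predicting 0.5 everywhere gives a BCE of ln 2 regardless of the targets.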

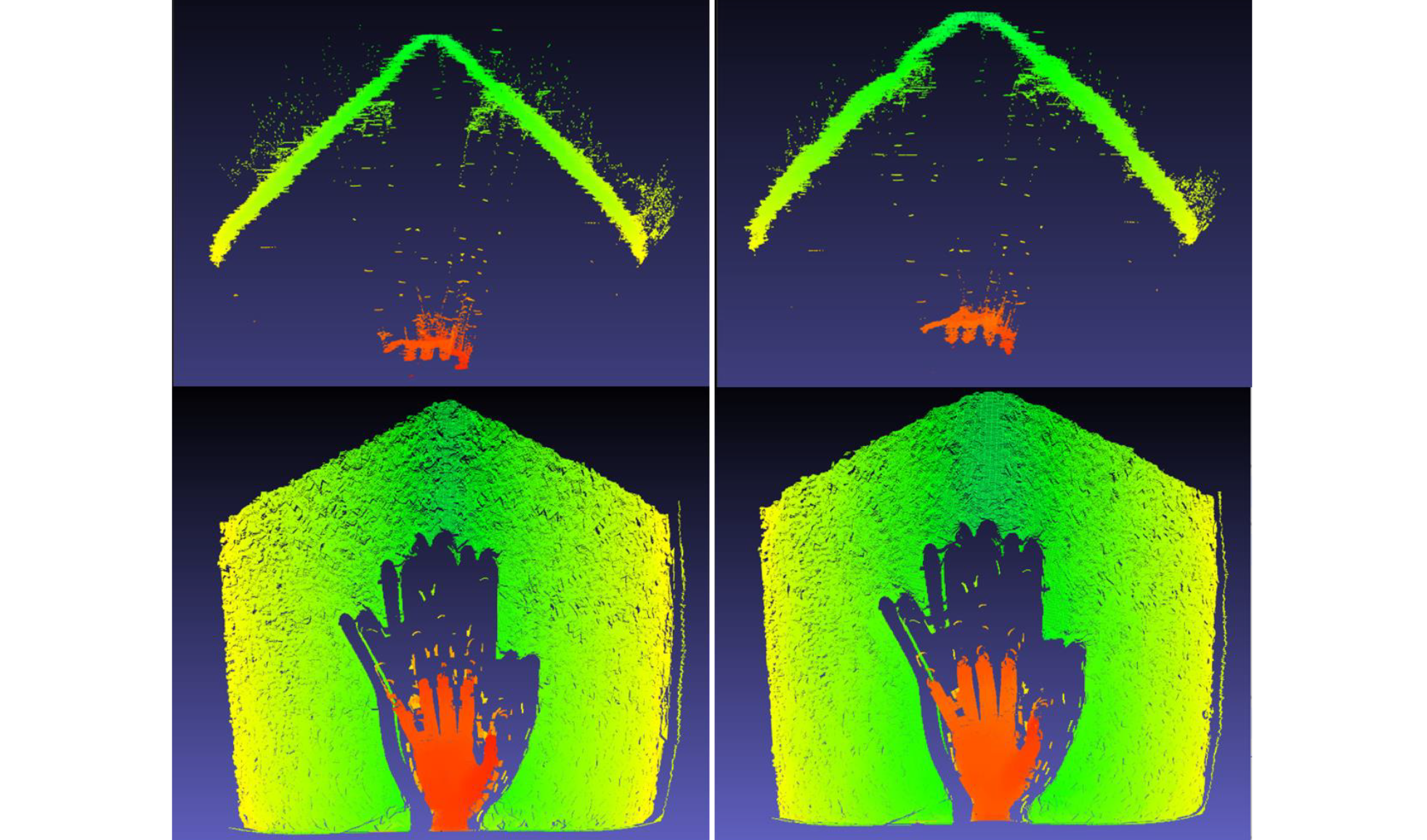

We introduce an innovative 3D depth sensing scheme that seamlessly integrates various depth sensing modalities and technologies into a single compact device. Our approach dynamically switches between depth sensing modes, including indirect time-of-flight (iToF) and structured light, enabling real-time fusion of depth images. We demonstrated iToF depth imaging free of multipath interference (MPI) while simultaneously achieving high image resolution (VGA) and high depth accuracy at a frame rate of 30 fps.
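The abstract does not describe the device's demodulation scheme. For context, the sketch below shows textbook four-phase continuous-wave iToF depth recovery: four correlation samples taken at 0, 90, 180, and 270 degrees give the phase delay, and the phase gives depth as d = c·φ / (4π·f_mod), unambiguous up to c / (2·f_mod). It assumes a single return per pixel, which is exactly what multipath interference violates, so it is not the paper's MPI-free pipeline or its structured-light fusion. The sample model, modulation frequency, and function names are assumptions.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def itof_depth(c0, c1, c2, c3, f_mod_hz):
    """Depth from four correlation samples at 0, 90, 180 and 270 degrees.

    Assumes the sample model c_k = B + A * cos(phi + k * pi / 2), so that
    c3 - c1 = 2A sin(phi) and c0 - c2 = 2A cos(phi). Single return only (no MPI).
    """
    phase = math.atan2(c3 - c1, c0 - c2) % (2.0 * math.pi)
    return SPEED_OF_LIGHT * phase / (4.0 * math.pi * f_mod_hz)


def unambiguous_range(f_mod_hz):
    """Maximum depth before the phase wraps around: c / (2 * f_mod)."""
    return SPEED_OF_LIGHT / (2.0 * f_mod_hz)


def simulate_samples(depth_m, f_mod_hz, amplitude=1.0, offset=2.0):
    """Ideal, noise-free correlation samples for a target at depth_m."""
    phi = 4.0 * math.pi * f_mod_hz * depth_m / SPEED_OF_LIGHT
    return tuple(offset + amplitude * math.cos(phi + k * math.pi / 2)
                 for k in range(4))


if __name__ == "__main__":
    f_mod = 20e6  # hypothetical 20 MHz modulation frequency
    print(itof_depth(*simulate_samples(2.0, f_mod), f_mod))
    print(unambiguous_range(f_mod))
```

A target beyond the unambiguous range wraps back into it, one reason practical systems combine several modulation frequencies or, as here, other sensing modalities.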