References

1ShashiranganaJ.PadmasiriH.MeedeniyaD.PereraC.2021Automated license plate recognition: A survey on methods and techniquesIEEE Access9112031122511203–2510.1109/ACCESS.2020.3047929

2PrajapatiR. K.BhardwajY.JainR. K.HiranK. K.A review paper on automatic number plate recognition using machine learning: An in-depth analysis of machine learning techniques in automatic number plate recognition: Opportunities and limitationsInt’l. Conf. on Computational Intelligence, Communication Technology and Networking (CICTN)2023IEEEPiscataway, NJ10.1109/CICTN57981.2023.10141318

3DuS.IbrahimM.ShehataM.BadawyW.2013Automatic license plate recognition (ALPR): A state-of-the-art reviewIEEE Trans. Circuits Syst. Video Technol.23311325311–2510.1109/TCSVT.2012.2203741

4LiH.WangP.ShenC.2017Towards end-to-end car license plate detection and recognition with deep neural networksIEEE Trans. Intell. Transp. Systems20112611361126–3610.1109/TITS.2018.2847291

5LiH.WangP.YouM.ShenC.2018Reading car license plates using deep neural networksImage Vis. Comput.72142314–2310.1016/j.imavis.2018.02.002

6MontazzolliS.JungC.Real-time Brazilian license plate detection and recognition using deep convolutional neural networksConf. on Graphics, Patterns and Images (SIBGRAPI)2017IEEEPiscataway, NJ556255–6210.1109/SIBGRAPI.2017.14

7XuZ.YangW.MengA.LuN.HuangH.YingC.HuangL.Towards end-to-end license plate detection and recognition: A large dataset and baselineEuropean Conf. on Computer Vision (ECCV) ECCV 20182018Vol. 11217SpringerCham10.1007/978-3-030-01261-8_16

8RedmonJ.DivvalaS.GirshickR.FarhadiA.You only look once: Unified, real-time object detectionIEEE/CVF Computer Vision and Pattern Recognition Conf. (CVPR)2016IEEEPiscataway, NJ779788779–8810.1109/CVPR.2016.91

9LiuW.AnguelovD.ErhanD.SzegedyC.ReedS.FuC.-Y.Berg.Alexander C.SSD: Single shot multibox detectorEuropean Conf. on Computer Vision2016SpringerCham10.1007/978-3-319-46448-0_2

10RenS.HeK.GirshickR.SunJ.2016Faster R-CNN: Towards real-time object detection with region proposal networksIEEE Trans. Pattern Analysis and Machine Int.39113711491137–4910.1109/TPAMI.2016.2577031

11JaderbergM.SimonyanK.ZissermanA.KavukcuogluK.Spatial transformer networksConf. on Neural Information Processing Systems (NeurIPS)2015Curran AssociatesRed Hook, NY

12SilvaS. M.JungC. R.License plate detection and recognition in unconstrained scenariosEuropean Conf. on Computer Vision (ECCV)2018SpringerCham10.1007/978-3-030-01258-8_36

13AwalgaonkarN.BratakkeP.ChauguleR.Automatic license plate recognition system using SSDInt’l. Symposium of Asian Control Association on Intelligent Robotics and Industrial Automation (IRIA)2021IEEEPiscataway, NJ394399394–910.1109/IRIA53009.2021.9588707

14SaidaniT.TouatiY. E.2021A vehicle plate recognition system based on deep learning algorithmsMultimedia Tools Appl.8010.1007/s11042-021-11233-z

15BakshiA.UdmaleS. S.ALPR: A method for identifying license plates using sequential informationComputer Analysis of Images and Patterns (CAIP)2023SpringerCham10.1007/978-3-031-44237-7_27

16FarhatA.HommosO.Al-ZawqariA.Al-QahtaniA.BensaaliF.AmiraA.ZhaiX.2018Optical character recognition on heterogeneous SoC for HD automatic number plate recognition systemJ. Image and Video Process.2018

17KakaniB. V.GandhiD.J.SagarImproved OCR base automatic vehicle number plate recognition using features trained neural networkInt’l. Conf. on Computing, Communication and Networking Technologies (ICCCNT)2017IEEEPiscataway, NJ10.1109/ICCCNT.2017.8203916

18LarocaR.SeveroE.ZanlorensiL. A.OliveiraL. S.GoncalvesG. R.SchwartzW. R.MenottiD.A robust real-time automatic license plate recognition based on the YOLO detectorInt’l. Joint Conf. on Neural Networks (IJCN)2018IEEEPiscataway, NJ10.1109/IJCNN.2018.8489629

19WeberM.PeronaP.CarsC.1999

20HsuG.-S.ChenJ.-C.ChungY.-Z.2012Application-oriented license plate recognitionIEEE Trans. Veh. Technol.62552561552–6110.1109/TVT.2012.2226218

21

22LiH.WangP.ShenC.2019Toward end-to-end car license plate detection and recognition with deep neural networksIEEE Trans. Intell. Transp. Syst.23112611361126–3610.1109/TITS.2018.2847291

23GeirhosR.TemmeC. R. M.RauberJ.SchuttH. H.BethgeM.WichmannF. A.Generalisation in humans and deep neural networksConf. on Neural Information Processing Systems (NeurIPS)2018Curran AssociatesRed Hook, NY

24MichaelisC.MitzkusB.GeirhosR.RusakE.BringmannO.EckerA. S.BethgeM.BrendelW.Bench-marking robustness in object detection: Autonomus driving when winter is comingConf. on Neural Information Processing Systems (NeurIPS)2019Curran AssociatesRed Hook, NY

25DengxinD.GoolL. V.Dark model adaptation: Semantic image segmentation from daytime to nighttimeInt’l. Conf. on Intelligent Transportation Systems (ITSC)2018IEEEPiscataway, NJ381938243819–2410.1109/ITSC.2018.8569387

26YimJ.SohnK.-A.Enhancing the performance of convolutional neural networks on quality degraded datasetsInt’l. Conf. on Digital Image Computing: Techniques and Applications (DICTA)2017IEEEPiscataway, NJ10.1109/DICTA.2017.8227427

27KongL.WenH.GuoL.WangQ.HanY.2015Improvement of linear filter in image denoisingProc. SPIE980898083F

28GaiS.BaoZ.2019New image denoising algorithm via improved deep convolutional neural network with perceptive lossExpert Syst. Appl.13811281510.1016/j.eswa.2019.07.032

29KimE.KimJ.LeeH.KimS.2021Adaptive data augmentation to achieve noise robustness and overcome data deficiency for deep learningAppl. Sci.11

30MomenyM.LatifA. M.SarramM. A.SheikhpourR.ZhangY. D.2021A noise robust convolutional neural network for image classificationResults Eng.1010022510.1016/j.rineng.2021.100225

31Rio-AlvarezA.de Andres-SuarezJ.Gonzales-RodriguezM.Fernandez-LanvinD.Lopez PerezB.2019Effects of challenging weather and illumination on learning-based license plate detection in noncontrolled environmentsSci. Program.689734516

32SpanhelJ.SochorJ.JuranekR.HeroutA.MarsikL.ZemcikP.Holistic recognition of low quality license plates by CNN using track annotated dataIEEE Int’l. Conf. on Advanced Video and Signal Based Surveillance (AVSS)2017IEEEPiscataway, NJ10.1109/AVSS.2017.8078501

33XuF.ChenC.ShangZ.PengY.LiX.2023A CRNN-based Method for chinese ship license plate recognitionIET Image Process.18

34YanJ.YuJ.XiaoX.2023HyperLPR3 - high performance license plate recognition framework

35

36RedmonJ.FarhadiA.YOLO9000: Better, faster, strongerIEEE Conf. on Computer Vision and Pattern Recognition (CVPR)2017IEEEPiscataway, NJ

37GuZ.SuY.LiuC.LyuY.JianY.LiH.CaoZ.WangL.2020Adversarial attacks on license plate recognition systemsComput. Mater. Continua65143714521437–5210.32604/cmc.2020.011834

38ZhaM.MengG.LinC.ZhouZ.ChenK.RoLMA: A practical adversarial attack against deep learning-based LPR systemsInformation Security and Cryptology. Inscrypt 2019Lecture Notes in Computer Science2020Vol. 12020SpringerCham10.1007/978-3-030-42921-8_6

39

40YuanY.ZouW.ZhaoY.WangX.HuX.KomodakisN.2017A robust and efficient approach to license plate detectionIEEE Trans. Image Process.26

41LarocaR.CardosoE. V.LucioD. R.EstevamV.MenottiD.On the cross-dataset generalization in license plate recognitionInt’l. Conf. on Computer Vision Theory and Applications (VIS- APP)2022

42ChenJ.TanC.-H.HouJ.ChauL.-P.LiH.Robust video content alignment and compensation for rain removal in a CNN frameworkIEEE/CVF Computer Vision and Pattern Recognition Conference (CVPR)2018IEEEPiscataway, NJ628662956286–9510.1109/CVPR.2018.00658

43BulanO.KozitskyV.RameshP.ShreveM.2017Segmentation- and annotation-free license plate recognition with deep localization and failure identificatonIEEE Trans. Intell. Transp. Syst.18235123632351–6310.1109/TITS.2016.2639020

44BakshiA.GulhaneS.SawantT.SambheV.UdmaleS. S.ALPR - An intelligent approach towards detection and recognition of license plates in uncontrolled environmentsInt’l. Conf. Distributed Computing and Intelligent Technology (ICDCIT)2023SpringerCham253269253–6910.1007/978-3-031-24848-1_18

45

46FarellJ. E.XiaoF.CatrysseP. B.WandellB. A.2003A simulation tool for evaluating digital camera image qualityProc. SPIE5294

47WeiK.FuY.ZhengY.YangJ.2023Physics-based noise modeling for extreme low-light photographyIEEE Trans. Pattern Anal. Mach. Intell.44852085378520–37

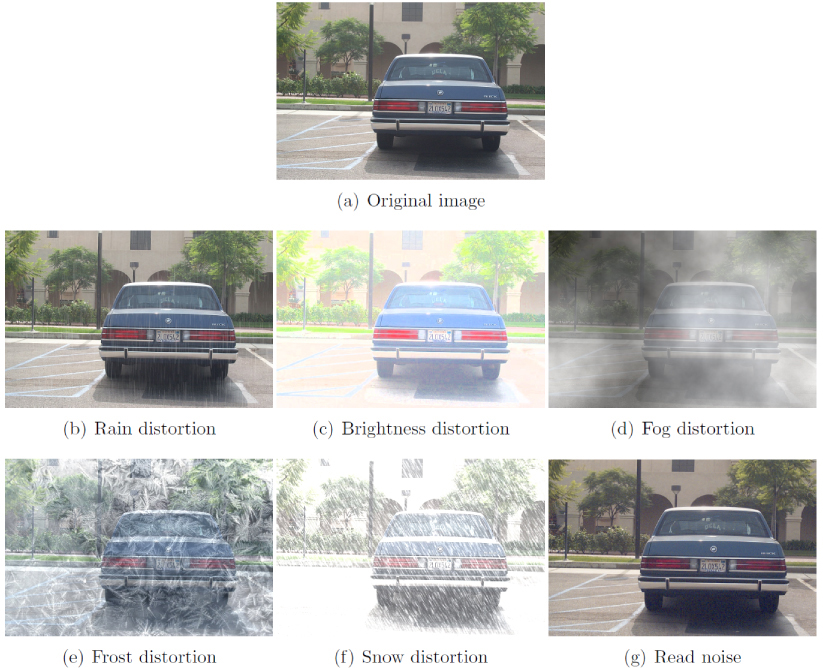

48PlavacN.AmirshahiS. A.PedersenM.TriantaphillidouS.2024The influence of read noise on an automatic license plate recognition systemLondon Imaging Meeting51210.2352/lim.2024.5.1.3

49KamannC.RotherC.Benchmarking the robustness of semantic segmentation modelsIEEE/CVF Computer Vision and Pattern Recognition Conf. (CVPR)2020IEEEPiscataway, NJ882588358825–3510.1109/CVPR42600.2020.00885

50RothmeierT.WachtelD.von dem Bussche-HunnefeldT.HuberW.I had a bad day: Challenges of object detection in bad visibility conditionsIEEE Intelligent Vehicles Symposium2023IEEEPiscataway, NJ161–610.1109/IV55152.2023.10186674

51KireevK.AndriuschchenkoM.FlammarionN.On the effectiveness of adversarial training against common corruptionsConf. on Uncertainty in Artificial Intelligence2021Microtome PublishingBrookline, MA

52TripathiA. K.MukhopadhyayS.2012Removal of fog from images: A reviewIETE Tech. Rev.29
