References

1MantiukR. K.MyszkowskiK.SeidelH.-P.High Dynamic Range Imaging2015Wiley Encyclopedia of Electrical and Electronics Engineering10.1002/047134608X.W8265

2DalyS. J.FengX.2003Bit-depth extension using spatiotemporal microdither based on models of the equivalent input noise of the visual systemProc. SPIE5008455466455–6610.1117/12.472016

3SongQ.SuG.-M.CosmanP. C.2016Hardware-efficient debanding and visual enhancement filter for inverse tone mapped high dynamic range images and videos2016 IEEE Int’l. Conf. on Image Processing (ICIP)329933033299–303IEEEPiscataway, NJ10.1109/ICIP.2016.7532970

4LuzardoG.AeltermanJ.LuongH.PhilipsW.OchoaD.2017Real-time false-contours removal for inverse tone mapped HDR contentProc. 25th ACM Int’l. Conf. on Multimedia147214791472–9ACMNew York, NY10.1145/3123266.3123400

5MukherjeeS.SuG.-M.ChengI.2018Adaptive dithering using curved Markov–Gaussian noise in the quantized domain for mapping SDR to HDR imageInt’l. Conf. on Smart Multimedia193203193–203SpringerCham10.1007/978-3-030-04375-9_17

6BanterleF.LeddaP.DebattistaK.ChalmersA.2006Inverse tone mappingProc. 4th Int’l. Conf. on Computer Graphics and Interactive Techniques in Australasia and Southeast Asia349356349–56ACMNew York, NY10.1145/1174429.1174489

7BanterleF.LeddaP.DebattistaK.ChalmersA.BlojM.2007A framework for inverse tone mappingVis. Comput.23467478467–7810.1007/s00371-007-0124-9

8De SimoneF.ValenziseG.LaugaP.DufauxF.BanterleF.2014Dynamic range expansion of video sequences: A subjective quality assessment study2014 IEEE Global Conf. on Signal and Information Processing (GlobalSIP)106310671063–7IEEEPiscataway, NJ10.1109/GlobalSIP.2014.7032284

9MasiaB.SerranoA.GutierrezD.2017Dynamic range expansion based on image statisticsMultimedia Tools Appl.76631648631–4810.1007/s11042-015-3036-0

10ZhangX.BrainardD. H.2004Estimation of saturated pixel values in digital color imagingJ. Opt. Soc. Am. A21230123102301–1010.1364/JOSAA.21.002301

11XuD.DoutreC.NasiopoulosP.2011Correction of clipped pixels in color imagesIEEE Trans. Vis. Comput. Graph.17333344333–4410.1109/TVCG.2010.63

12KangS. B.UyttendaeleM.WinderS.SzeliskiR.2003High dynamic range videoACM Trans. Graph. (TOG)22319325319–2510.1145/882262.882270

13KalantariN. K.RamamoorthiR.2017Deep high dynamic range imaging of dynamic scenesACM Trans. Graph.361121–1210.1145/3072959.3073609

14KalantariN. K.RamamoorthiR.2019Deep hdr video from sequences with alternating exposuresComputer Graphics ForumVol. 38193205193–205WileyHoboken, NJ10.1111/cgf.13630

15ChenG.ChenC.GuoS.LiangZ.WongK.-Y. K.ZhangL.2021HDR video reconstruction: A coarse-to-fine network and a real-world benchmark datasetProc. IEEE/CVF Int’l. Conf. on Computer Vision250225112502–11IEEEPiscataway, NJ10.1109/ICCV48922.2021.00250

16WuS.XuJ.TaiY.-W.TangC.-K.2018Deep high dynamic range imaging with large foreground motionsEuropean Conf. on Computer Vision ECCV 2018: Computer Vision – ECCV 2018120135120–35SpringerCham10.1007/978-3-030-01216-8_8

17YanQ.GongD.ShiQ.HengelA. v. d.ShenC.ReidI.ZhangY.2019Attention-guided network for ghost-free high dynamic range imagingProc. IEEE/CVF Conf. on Computer Vision and Pattern Recognition175117601751–60IEEEPiscataway, NJ10.1109/CVPR.2019.00185

18YanQ.ZhangL.LiuY.ZhuY.SunJ.ShiQ.ZhangY.2020Deep HDR imaging via a non-local networkIEEE Trans. Image Process.29430843224308–2210.1109/TIP.2020.2971346

19WangX.ChanK. C. K.YuK.DongC.LoyC. C.2019EDVR: Video restoration with enhanced deformable convolutional networksProc. IEEE/CVF Conf. on Computer Vision and Pattern Recognition Workshops195419631954–63IEEEPiscataway, NJ10.1109/CVPRW.2019.00247

20LedigC.TheisL.HuszárF.CaballeroJ.CunninghamA.AcostaA.AitkenA.TejaniA.TotzJ.WangZ.ShiW.2017Photo-realistic single image super-resolution using a generative adversarial networkProc. IEEE Conf. on Computer Vision and Pattern Recognition105114105–14IEEEPiscataway, NJ10.1109/CVPR.2017.19

21ZhaoH.GalloO.FrosioI.KautzJ.2016Loss functions for image restoration with neural networksIEEE Trans. Computational Imaging3475747–5710.1109/TCI.2016.2644865

22NayarS. K.MitsunagaT.2000High dynamic range imaging: Spatially varying pixel exposuresProc. IEEE Conf. on Computer Vision and Pattern Recognition. CVPR 2000 (Cat. No. PR00662)Vol. 1472479472–9IEEEPiscataway, NJ10.1109/CVPR.2000.855857

23NayarS. K.BranzoiV.BoultT. E.2004Programmable imaging using a digital micromirror arrayProc. 2004 IEEE Computer Society Conf. on Computer Vision and Pattern Recognition, 2004. CVPR 2004Vol. 1IEEEPiscataway, NJ10.1109/CVPR.2004.1315065

24TocciM. D.KiserC.TocciN.SenP.2011A versatile HDR video production systemACM Trans. Graph. (TOG)301101–1010.1145/2010324.1964936

25KronanderJ.GustavsonS.BonnetG.UngerJ.2013Unified HDR reconstruction from raw CFA dataIEEE Int’l. Conf. on Computational Photography (ICCP)191–9IEEEPiscataway, NJ10.1109/ICCPhot.2013.6528315

26FroehlichJ.GrandinettiS.EberhardtB.WalterS.SchillingA.BrendelH.2014Creating cinematic wide gamut HDR-video for the evaluation of tone mapping operators and HDR-displaysProc. SPIE9023279288279–8810.1117/12.2040003

27LuléT.KellerH.WagnerM.BöhmM.1999LARS II-a high dynamic range image sensor with a-si: H photo conversion layer1999 IEEE Workshop on Charge-Coupled Devices and Advanced Image Sensors, Nagano, Japan, CiteseerIEEEPiscataway, NJ

28SegerU.ApelU.HöfflingerB.1999HDRC-imagers for natural visual perceptionHandbook Comput. Vis. Appl.12

29KavadiasS.DierickxB.SchefferD.AlaertsA.UwaertsD.BogaertsJ.2000A logarithmic response CMOS image sensor with on-chip calibrationIEEE J. Solid-State Circuits35114611521146–5210.1109/4.859503

30ReinhardE.StarkM.ShirleyP.FerwerdaJ.2002Photographic tone reproduction for digital imagesProc. 29th Annual Conf. on Computer Graphics and Interactive Techniques267276267–76ACMNew York, NY10.1145/566654.566575

31MeylanL.DalyS.SüsstrunkS.2006The reproduction of specular highlights on high dynamic range displaysProc. IS&T/SID CIC14: Fourteenth Color Imaging Conf.333338333–8IS&TSpringfield, VA10.2352/CIC.2006.14.1.art00061

32RempelA. G.TrentacosteM.SeetzenH.YoungH. D.HeidrichW.WhiteheadL.WardG.2007Ldr2hdr: on-the-fly reverse tone mapping of legacy video and photographsACM Trans. Graph. (TOG)2610.1145/1276377.1276426

33BanterleF.LeddaP.DebattistaK.ChalmersA.2008Expanding low dynamic range videos for high dynamic range applicationsProc. 24th Spring Conf. on Computer Graphics334133–41ACMNew York, NY10.1145/1921264.1921275

34KovaleskiR. P.OliveiraM. M.2014High-quality reverse tone mapping for a wide range of exposures2014 27th SIBGRAPI Conf. on Graphics, Patterns and Images495649–56IEEEPiscataway, NJ10.1109/SIBGRAPI.2014.29

35DidykP.MantiukR.HeinM.SeidelH.-P.2008Enhancement of bright video features for HDR displaysComputer Graphics ForumVol. 27126512741265–74WileyHoboken, NJ10.1111/j.1467-8659.2008.01265.x

36EilertsenG.KronanderJ.DenesG.MantiukR. K.UngerJ.2017HDR image reconstruction from a single exposure using deep CNNsACM Trans. Graph. (TOG)361151–1510.1145/3130800.3130816

37EndoY.KanamoriY.MitaniJ.2017Deep reverse tone mappingACM Trans. Graph.3610.1145/3130800.3130834

38LiuY.-L.LaiW.-S.ChenY.-S.KaoY.-L.YangM.-H.ChuangY.-Y.HuangJ.-B.2020Single-image HDR reconstruction by learning to reverse the camera pipelineProc. IEEE/CVF Conf. on Computer Vision and Pattern Recognition165116601651–60IEEEPiscataway, NJ10.1109/CVPR42600.2020.00172

39MangiatS.GibsonJ.2010High dynamic range video with ghost removalProc. SPIE779877981210.1117/12.862492

40MangiatS.GibsonJ.2011Spatially adaptive filtering for registration artifact removal in HDR video2011 18th IEEE Int’l. Conf. on Image Processing131713201317–20IEEEPiscataway, NJ10.1109/ICIP.2011.6115678

41KalantariN. K.ShechtmanE.BarnesC.DarabiS.GoldmanD. B.SenP.2013Patch-based high dynamic range videoACM Trans. Graph.32181–810.1145/2508363.2508402

42GryaditskayaY.PouliT.ReinhardE.MyszkowskiK.SeidelH.-P.2015Motion aware exposure bracketing for HDR videoComputer Graphics ForumVol. 34119130119–30WileyHoboken, NJ10.1111/cgf.12684

43LiY.LeeC.MongaV.2016A maximum a posteriori estimation framework for robust high dynamic range video synthesisIEEE Trans. Image Process.26114311571143–5710.1109/TIP.2016.2642790

44EilertsenG.MantiukR. K.UngerJ.2019Single-frame regularization for temporally stable cnnsProc. IEEE/CVF Conf. on Computer Vision and Pattern Recognition111761118511176–85IEEEPiscataway, NJ10.1109/CVPR.2019.01143

45KimS. Y.OhJ.KimM.2020Jsi-gan: Gan-based joint super-resolution and inverse tone-mapping with pixel-wise task-specific filters for uhd hdr videoProc. AAAI Conf. on Artificial IntelligenceVol. 34112871129511287–95AAAIWashington, DC

46ZhangH.SongL.GanW.XieR.2023Multi-scale-based joint super-resolution and inverse tone-mapping with data synthesis for UHD HDR videoDisplays7910249210.1016/j.displa.2023.102492

47ChenX.ZhangZ.RenJ. S.TianL.QiaoY.DongC.2021A new journey from SDRTV to HDRTVProc. IEEE/CVF Int’l. Conf. on Computer Vision450045094500–9IEEEPiscataway, NJ10.1109/ICCV48922.2021.00446

48AnandM.HarilalN.KumarC.RamanS.2021HDRVideo-GAN: deep generative HDR video reconstructionProc. Twelfth Indian Conf. on Computer Vision, Graphics and Image Processing191–9ACMNew York, NY10.1145/3490035.3490266

49YangY.HanJ.LiangJ.SatoI.ShiB.

50YangQ.LiuY.YangJ.

51CogalanU.BemanaM.MyszkowskiK.SeidelH.-P.RitschelT.2022Learning HDR video reconstruction for dual-exposure sensors with temporally-alternating exposuresComput. Graph.105577257–7210.1016/j.cag.2022.04.008

52LiuZ.LiZ.ChenW.WuX.LiuZ.2023Unsupervised optical flow estimation for differently exposed images in ldr domainIEEE Trans. Circuits Syst. Video Technol.111–10.1109/TCSVT.2023.3252007

53MartorellO.BuadesA.2022Variational temporal optical flow for multi-exposure videoVISIGRAPP (4: VISAPP)666673666–73SciTePressSetubal

54JiangY.ChoiI.JiangJ.GuJ.

55CogalanU.BemanaM.MyszkowskiK.SeidelH.-P.RitschelT.2022Learning HDR video reconstruction for dual-exposure sensors with temporally-alternating exposuresComput. Graph.105577257–7210.1016/j.cag.2022.04.008

56DaiJ.QiH.XiongY.LiY.ZhangG.HuH.WeiY.2017Deformable convolutional networksProc. IEEE Int’l. Conf. on Computer Vision764773764–73IEEEPiscataway, NJ10.1109/ICCV.2017.89

57WooS.ParkJ.LeeJ.-Y.KweonI. S.2018Cbam: Convolutional block attention moduleProc. European Conf. on Computer Vision (ECCV)3193–19SpringerCham10.1007/978-3-030-01234-2_1

58LiX.WangW.HuX.YangJ.2019Selective kernel networksProc. IEEE/CVF Conf. on Computer Vision and Pattern Recognition510519510–9IEEEPiscataway, NJ10.1109/CVPR.2019.00060

59HowardA. G.ZhuM.ChenB.KalenichenkoD.WangW.WeyandT.AndreettoM.AdamH.

60TianY.ZhangY.FuY.XuC.2020Tdan: Temporally-deformable alignment network for video super-resolutionProc. IEEE/CVF Conf. on Computer Vision and Pattern Recognition336033693360–9IEEEPiscataway, NJ10.1109/CVPR42600.2020.00342

61ZhangY.TianY.KongY.ZhongB.FuY.2018Residual dense network for image super-resolutionProc. IEEE Conf. on Computer Vision and Pattern Recognition247224812472–81IEEEPiscataway, NJ10.1109/CVPR.2018.00262

62DebevecP. E.MalikJ.1997Recovering high dynamic range radiance maps from photographsSIGGRAPH ’97: Proc. 24th Annual Conf. on Computer Graphics and Interactive Techniques369378369–78ACMNew York, NY10.1145/258734.258884

63GlorotX.BengioY.2010Understanding the difficulty of training deep feedforward neural networksProc. Thirteenth Int’l. Conf. on Artificial Intelligence and Statistics, JMLR Workshop and Conf. Proc.249256249–56JMLRCambridge, MA

64MantiukR.DalyS.KerofskyL.2008Display adaptive tone mappingACM Trans. Graphics271101–1010.1145/1360612.1360667

65SjälanderM.JahreM.TufteG.ReissmannN.

66NarwariaM.Da SilvaM. P.Le CalletP.2015HDR-VQM: An objective quality measure for high dynamic range videoSignal Process., Image Commun.35466046–6010.1016/j.image.2015.04.009

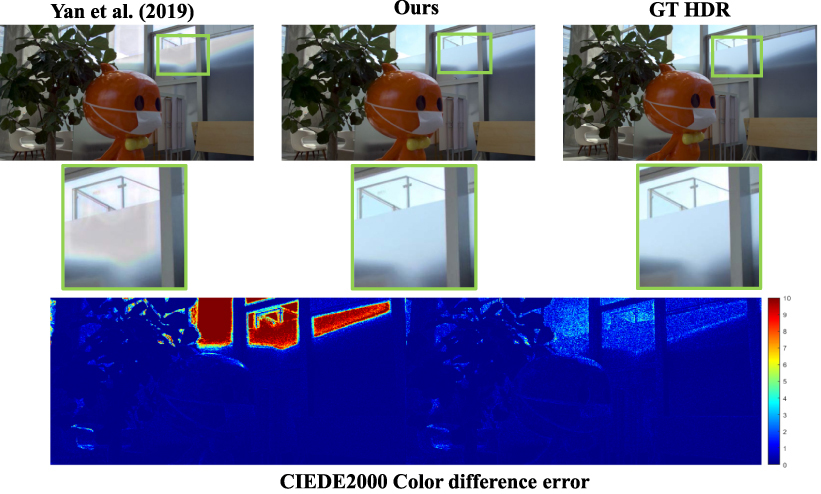

67LuoM. R.CuiG.RiggB.2001The development of the CIE 2000 colour-difference formula: CIEDE2000Color Res. Appl.26340350340–50

68KronanderJ.GustavsonS.BonnetG.YnnermanA.UngerJ.2014A unified framework for multi-sensor HDR video reconstructionSignal Process., Image Commun.29203215203–1510.1016/j.image.2013.08.018

69XueT.ChenB.WuJ.WeiD.T. FreemanW.2019Video enhancement with task-oriented flowInt. J. Comput. Vis.127110611251106–2510.1007/s11263-018-01144-2
