References

1

2. N. Tsumura, K. Baba, S. Yamamoto, and M. Sambongi, "Estimating reflectance property from refocused images and its application to auto material appearance balancing," J. Imaging Sci. Technol. 59, 030501-1–030501-6 (2015). doi:10.2352/J.ImagingSci.Technol.2015.59.3.030501

3

4. I. Motoyoshi, S. Nishida, L. Sharan, and E. H. Adelson, "Image statistics and the perception of surface qualities," Nature 447, 206–209 (2007). doi:10.1038/nature05724

5. M. Sawayama and S. Nishida, "Visual perception of surface wetness," J. Vis. 15, 937 (2015). doi:10.1167/15.12.937

6. H. Kobiki, R. Nonaka, and M. Baba, "Specular reflection control technology to increase glossiness of images," Toshiba Rev. 68, 38–41 (2013).

7. R. W. Fleming, "Visual perception of materials and their properties," Vis. Res. 94, 62–75 (2014). doi:10.1016/j.visres.2013.11.004

8. A. Mihálik and R. Durikovič, "Material appearance transfer between images," SCCG ’09: Proc. Spring Conf. on Computer Graphics (ACM, New York, NY, 2009), pp. 55–58.

9. C. H. Nguyen, T. Ritschel, K. Myszkowski, E. Eisemann, and H. P. Seidel, "3D material style transfer," Comput. Graph. Forum 31, 431–438 (2012). doi:10.1111/j.1467-8659.2012.03022.x

10. E. Reinhard, M. Ashikhmin, B. Gooch, and P. Shirley, "Color transfer between images," IEEE Comput. Graph. Appl. 21, 34–40 (2001). doi:10.1109/38.946629

11. D. L. Ruderman, T. W. Cronin, and C. C. Chiao, "Statistics of cone responses to natural images: implications for visual coding," J. Opt. Soc. Am. A 15, 2036–2045 (1998). doi:10.1364/JOSAA.15.002036

12. C. Gu, X. Lu, and C. Zhang, "Example-based color transfer with Gaussian mixture modeling," Pattern Recognit. 129, 108716 (2022). doi:10.1016/j.patcog.2022.108716

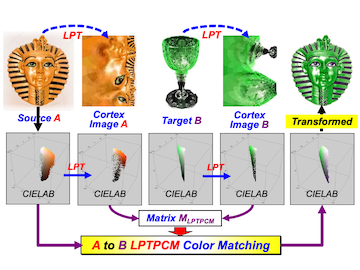

13. H. Kotera, "Material appearance transfer with visual cortex image," in Computational Color Imaging. CCIW 2019, edited by S. Tominaga, R. Schettini, A. Trémeau, and T. Horiuchi, Lecture Notes in Computer Science, Vol. 11418 (Springer, Cham, 2019), pp. 334–348. doi:10.1007/978-3-030-13940-7_25

14. H. Kotera, T. Morimoto, and R. Saito, "Object-oriented color matching by image clustering," Proc. IS&T/SID CIC6: Sixth Color Imaging Conf. (IS&T, Springfield, VA, 1998), pp. 154–158.

15. H. Kotera, M. Suzuki, and H. S. Chen, "Object-to-object color mapping by image segmentation," J. Electron. Imaging 10, 977–987 (2001). doi:10.1117/1.1407263

16. H. Kotera and T. Horiuchi, "Automatic interchange in scene colors by image segmentation," Proc. IS&T/SID CIC12: Twelfth Color Imaging Conf. (IS&T, Springfield, VA, 2004), pp. 93–99.

17. H. Kotera, Y. Matsusaki, T. Horiuchi, and R. Saito, "Automatic color interchange between images," Proc. Congress of the Int’l. Color Association (AIC 05) (2005), pp. 1019–1022.

18. H. Kotera, "Intelligent image processing," J. SID 14, 745–754 (2006).

19. F. Pitié and A. Kokaram, "The linear Monge-Kantorovitch colour mapping for example-based colour transfer," Proc. IET CVMP (IET, London, 2007), pp. 23–31.

20. E. L. Schwartz, "Spatial mapping in the primate sensory projection: analytic structure and relevance to perception," Biol. Cybern. 25, 181–194 (1977). doi:10.1007/BF01885636

Open access