A novel method, called the occluded image function (OIF), is presented for evaluating the efficacy of object recognition algorithms on occluded images. The OIF describes how a system behaves in occluded environments and thus gives qualitative insight into the mechanisms of the classifiers; metrics derived from the OIF can also be used to compare classifiers quantitatively. To showcase the utility of the OIF, an experiment is performed in which optical gait images from two biped robot models are obstructed and four binary machine learning classifiers are used to distinguish between the two models. OIF diagrams are created for each experiment, and the resulting insights about the classifiers are discussed. Using the OIF, it is shown that primitive classifiers can sometimes perform better under occlusion, possibly because uniform occlusions act as a pre-filter on the gait data. This result demonstrates that the OIF is a useful tool for classifier evaluation.
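The abstract does not define the OIF precisely. As a minimal sketch, assume the OIF is classification accuracy as a function of the occluded fraction of each image, with occlusion modeled as a uniform band covering the top rows. The names `occlude_top`, `oif_curve`, and `oif_area`, and the area-under-curve summary metric, are hypothetical illustrations rather than the authors' definitions.

```python
from typing import Callable, List, Sequence, Tuple

Image = List[List[float]]

def occlude_top(img: Image, fraction: float, fill: float = 0.0) -> Image:
    """Hypothetical uniform occlusion: overwrite the top `fraction` of rows with `fill`."""
    n = int(round(fraction * len(img)))
    return [[fill] * len(row) if i < n else list(row) for i, row in enumerate(img)]

def oif_curve(classify: Callable[[Image], int], images: Sequence[Image],
              labels: Sequence[int], fractions: Sequence[float]) -> List[Tuple[float, float]]:
    """Accuracy of `classify` at each occlusion fraction (assumed OIF definition)."""
    curve = []
    for f in fractions:
        correct = sum(classify(occlude_top(img, f)) == y for img, y in zip(images, labels))
        curve.append((f, correct / len(images)))
    return curve

def oif_area(curve: List[Tuple[float, float]]) -> float:
    """Trapezoidal area under the curve: one possible scalar metric derived from an OIF."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(curve, curve[1:]))
```

Because `classify` is any callable, the same loop can compare several classifiers on identical occlusion schedules; a scalar such as the area then ranks them, while the full curve shows where each one breaks down.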

Dichromats perceive color using only two of the three cone types (L, M, and S). To improve the ability of dichromats to distinguish colors, the authors propose a method that enlarges color differences as observed by dichromats. Whereas most methods analyze color in color space, their method analyzes it in image space: they compute the color differences between neighboring pixels in chromaticity space rather than in intensity space and formulate a Poisson equation from them. Solving this Poisson equation yields an image for dichromats in which the relative color differences between neighboring pixels are as close as possible to those of the image observed by trichromats.
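The core numerical step, recovering an image from target differences between neighboring pixels by solving a Poisson equation, can be sketched for a single channel. This is a generic gradient-domain reconstruction under stated assumptions, not the authors' formulation: the target differences `gx` and `gy` are simply given (in their method they would be derived from chromaticity differences), Neumann boundaries are assumed, and plain Gauss-Seidel iteration stands in for whatever solver they use. The name `poisson_reconstruct` is hypothetical.

```python
def poisson_reconstruct(gx, gy, mean=0.0, sweeps=2000):
    """Find an h x w image u whose neighbour differences best match the targets
    gx[i][j] ~ u[i][j+1] - u[i][j]   (h rows of w-1 values) and
    gy[i][j] ~ u[i+1][j] - u[i][j]   (h-1 rows of w values).

    The least-squares optimum satisfies a discrete Poisson equation with Neumann
    boundaries, solved here by Gauss-Seidel iteration. The solution is defined
    only up to an additive constant, which is fixed by shifting to `mean`.
    """
    h, w = len(gx), len(gx[0]) + 1
    u = [[0.0] * w for _ in range(h)]
    for _ in range(sweeps):
        for i in range(h):
            for j in range(w):
                total, deg = 0.0, 0
                if j + 1 < w:  # right neighbour: want u[i][j] = u[i][j+1] - gx[i][j]
                    total += u[i][j + 1] - gx[i][j]
                    deg += 1
                if j > 0:  # left neighbour: want u[i][j] = u[i][j-1] + gx[i][j-1]
                    total += u[i][j - 1] + gx[i][j - 1]
                    deg += 1
                if i + 1 < h:  # neighbour below
                    total += u[i + 1][j] - gy[i][j]
                    deg += 1
                if i > 0:  # neighbour above
                    total += u[i - 1][j] + gy[i - 1][j]
                    deg += 1
                if deg:
                    # Average of what each neighbour "predicts" for this pixel.
                    u[i][j] = total / deg
    offset = mean - sum(map(sum, u)) / (h * w)
    return [[v + offset for v in row] for row in u]
```

When the targets are the exact differences of some image, this recovers that image up to the chosen mean; when they are modified (for example, enlarged), it returns the closest image in the least-squares sense. For full-size images a sparse direct or multigrid solver would be far faster than plain Gauss-Seidel.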

Target detection when both the target and the photodetector platform are in flight poses three critical problems. First, the background is a joint sky–ground background. Second, when targets are detected from a long distance, the background moves slowly and the targets are small, numerous, and lacking in shape information. Third, as the platform approaches the target, it maneuvers violently to follow the target, so the background moves fast. This article consists of three parts. The first part is a sky–ground joint background separation algorithm, which extracts the boundary between the sky and ground backgrounds based on their different characteristics. The second part is an algorithm for the detection of small flying targets against a slow-moving background (DSFT-SMB): a double Gaussian background model is used to extract target pixels, missed targets are then recovered by correlating target trajectories, and false-alarm targets are filtered out using trajectory features. The third part is an algorithm for the detection of flying targets against a fast-moving background (DFT-FMB): a spectral residual model is used to extract target pixels, which serve as feature points for optical flow; the optical-flow speeds of these feature points are then computed separately in the sky and ground backgrounds, and targets are detected using a density clustering algorithm. Experimental results show that the proposed algorithms achieve excellent detection performance: in the DSFT-SMB, the recall is higher than 94%, the precision higher than 84%, and the F-measure higher than 89%; in the DFT-FMB, the recall is higher than 77%, the precision higher than 55%, and the F-measure higher than 65%.
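The last DFT-FMB step groups optical-flow feature points with a density clustering algorithm. The abstract does not name the algorithm, so the sketch below assumes DBSCAN; each point is a feature tuple (for example, position plus flow velocity), and the feature choice, `eps`, and `min_pts` are illustrative assumptions. Points labeled `-1` are noise, i.e., rejected candidates.

```python
import math

def dbscan(points, eps, min_pts):
    """Label each point with a cluster id (0, 1, ...) or -1 for noise.
    A point is a core point if at least `min_pts` points (itself included)
    lie within distance `eps`; clusters grow outward from core points."""
    labels = [None] * len(points)  # None = not yet visited

    def neighbours(i):
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        nbrs = neighbours(i)
        if len(nbrs) < min_pts:
            labels[i] = -1  # provisional noise; may later become a border point
            continue
        labels[i] = cluster
        queue = [j for j in nbrs if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point: joins cluster but is not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_nbrs = neighbours(j)
            if len(j_nbrs) >= min_pts:  # core point: keep expanding
                queue.extend(j_nbrs)
        cluster += 1
    return labels
```

Density clustering suits this step because the number of targets need not be known in advance, and isolated flow vectors from background motion fall out as noise instead of forming spurious clusters.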

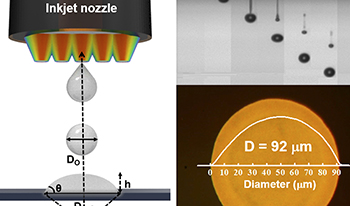

In this study, uniform films of nanocomposite glass frits (GFs) were printed on a ceramic surface by inkjet printing. To print uniform GF films, a theoretical model was used to predict the optimal ink-droplet pitch for printing uniform lines. The model predicted that uniform GF lines could be printed at droplet pitches smaller than 62 μm. Guided by this prediction, uniform, crack-free GF films 6 μm thick were printed in a single pass. The inkjet-printed GF films were sintered at 850 °C to increase film density and reduce surface roughness. After sintering, the film thickness was half that of the as-deposited film, and the root-mean-square roughness decreased from 43 nm for the as-printed film to 8 nm for the sintered film. This work opens up the possibility of inkjet printing not only GF coating layers but also complex GF patterns for various industrial applications.
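The abstract does not state which model produced the 62 μm limit. As an illustration only, a commonly used volume-conservation estimate is sketched here: a drop of in-flight diameter d0 spreads into a spherical cap with footprint diameter β·d0, and a continuous line needs each drop's volume to fill, over one pitch, a circular-segment cross-section at least that wide. The function names and the drop diameter and contact angle in the tests are hypothetical, not the paper's ink parameters.

```python
import math

def footprint_ratio(theta):
    """beta = footprint diameter / in-flight drop diameter for a spherical-cap
    drop with equilibrium contact angle theta (radians), by volume conservation."""
    t = math.tan(theta / 2)
    return (8.0 / (t * (3.0 + t * t))) ** (1.0 / 3.0)

def max_droplet_pitch(d0, theta_deg):
    """Largest drop spacing (same units as d0) at which the deposited volume can
    still fill a line as wide as one drop footprint. Wider spacings leave too
    little ink per unit length for a continuous line under this simple model."""
    theta = math.radians(theta_deg)
    beta = footprint_ratio(theta)
    # Cross-section of a circular-segment bead of width w is (w^2 / 4) * shape.
    shape = theta / math.sin(theta) ** 2 - math.cos(theta) / math.sin(theta)
    return 2.0 * math.pi * d0 / (3.0 * beta ** 2 * shape)
```

For instance, a hypothetical 30 μm drop with a 90° contact angle gives a bound of about 25 μm. A full treatment would also include a lower pitch limit, below which excess ink makes lines bulge.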

This study investigates the reverse image search performance of Google, in terms of average precision (AP) and average normalized recall (ANR) at various cut-off points, in finding similar images using fresh image queries (IQs) from five categories: “Fashion,” “Computer,” “Home,” “Sports,” and “Toys.” The aim is to gain insight into the retrieval effectiveness of Google's reverse image search, and thereby to motivate researchers and inform users. Five fresh IQs with different main concepts were created for each of the five categories. These 25 IQs were run on the search engine, and for each, the first 100 images retrieved were evaluated with binary relevance judgments. At the cut-off points 20, 40, 60, 80, and 100, both AP and ANR were calculated for each category and for all 25 IQs. AP ranges from 41.60% (Toys, cut-off point 100) to 71% (Home, cut-off point 20). ANR ranges from 47.21% (Toys, cut-off point 20) to 71.31% (Computer, cut-off point 100). Regardless of category, as more images were evaluated, performance in ranking relevant images higher increased, whereas performance in retrieving relevant images decreased. These results suggest that the retrieval effectiveness of Google's reverse image search needs improvement.
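Both measures can be sketched for one query's list of binary relevance judgments. The exact formulas are assumptions, since the abstract does not define them: precision at cut-off k is taken as the fraction of relevant images among the top k, and normalized recall follows the classic rank-based definition R_norm = 1 - (Σ r_i - Σ i) / (n(N - n)) evaluated within the top N = k results, where r_i are the ranks of the n relevant images. AP and ANR would then be means of these values over queries. All function names are hypothetical.

```python
def precision_at(judgments, k):
    """Fraction of relevant items (1s) among the first k binary judgments."""
    top = judgments[:k]
    return sum(top) / len(top)

def normalized_recall(judgments, k):
    """Rank-based normalized recall within the top k results:
    1 - (sum of relevant ranks - ideal sum of ranks) / (n * (N - n)).
    Returns 1.0 when every relevant item is ranked first, 0.0 when all are last.
    The conventions for n == 0 and n == N are assumptions."""
    top = judgments[:k]
    ranks = [r for r, rel in enumerate(top, start=1) if rel]
    n, total = len(ranks), len(top)
    if n == 0:
        return 0.0
    if n == total:
        return 1.0
    ideal = n * (n + 1) // 2  # ranks 1..n
    return 1.0 - (sum(ranks) - ideal) / (n * (total - n))

def mean(values):
    values = list(values)
    return sum(values) / len(values)
```

This split matches the trend reported above: precision falls as the cut-off grows and more irrelevant images enter the pool, while normalized recall can rise because it rewards relevant images that appear early relative to the larger pool.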

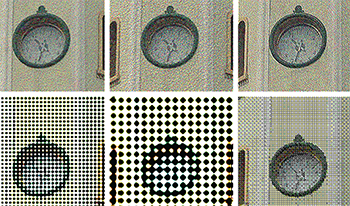

Halftoning is a crucial part of image reproduction in print. For large-format prints, especially at higher resolutions, halftoning methods must be very fast and computationally feasible while still producing good quality. The authors previously introduced an approach for obtaining image-independent threshold matrices that generate both first- and second-order frequency modulated (FM) halftones with different clustered dot sizes. Because the threshold matrices are predetermined and image independent, the proposed halftoning method is a point-by-point process and is therefore very fast. In this article, they report a comprehensive quality evaluation of first- and second-order FM halftones generated by this technique and compare them with each other using several quality metrics. The generated halftones are also compared with error diffusion (ED) halftones using two different error filters. The results indicate that second-order FM halftoning with a small clustered dot size outperforms first- and second-order FM halftoning with larger clustered dot sizes in almost all of the quality aspects studied. It is also shown that first- and second-order FM halftones with small clustered dot sizes are of almost the same quality as ED halftones using the Floyd–Steinberg error filter and of higher quality than ED halftones using the Jarvis, Judice, and Ninke error filter.
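The speed argument rests on thresholding being a point operation: each output pixel depends only on its own input value and one entry of a tiled threshold matrix. Error diffusion, by contrast, must visit pixels in sequence because each pixel's quantization error is pushed onto unprocessed neighbors. A minimal sketch of both, assuming gray levels in [0, 1] with 1 meaning the output pixel is on; the 2×2 matrix in the tests is a toy ordered-dither example, not one of the authors' FM threshold matrices.

```python
def threshold_halftone(img, matrix):
    """Point-by-point halftoning: compare each pixel with the tiled threshold matrix.
    Pixels are independent of one another, so this parallelizes trivially."""
    mh, mw = len(matrix), len(matrix[0])
    return [[1 if v > matrix[i % mh][j % mw] else 0 for j, v in enumerate(row)]
            for i, row in enumerate(img)]

def floyd_steinberg(img):
    """Error diffusion with the Floyd-Steinberg filter in left-to-right raster order:
    7/16 of the error goes right, 3/16 down-left, 5/16 down, 1/16 down-right."""
    h, w = len(img), len(img[0])
    buf = [list(map(float, row)) for row in img]  # working copy that accumulates error
    out = [[0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            out[i][j] = 1 if buf[i][j] >= 0.5 else 0
            err = buf[i][j] - out[i][j]
            for di, dj, wt in ((0, 1, 7), (1, -1, 3), (1, 0, 5), (1, 1, 1)):
                y, x = i + di, j + dj
                if 0 <= y < h and 0 <= x < w:  # error falling off the edge is dropped
                    buf[y][x] += err * wt / 16
    return out
```

Swapping in the Jarvis, Judice, and Ninke filter would only change the weight table (it spreads error over a larger 12-neighbor footprint), while the threshold-matrix path stays a single comparison per pixel.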

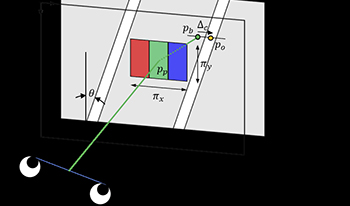

In this paper, we present an efficient 3D rendering method, capable of parallel processing, for a glasses-free 3D display based on eye tracking. The proposed 1-way directional subpixel rendering (1WDSR) method allocates subpixel values according to the average position of the viewer's left and right eyes, achieving high computational efficiency without degrading 3D image quality. Experimental results show that 3D rendering time is reduced to less than 50% of that of our previously proposed directional subpixel rendering (DSR) method, while 3D rendering accuracy is maintained.

This article uses the LabVIEW software platform and machine learning algorithms to develop a technology for identifying and counting whiteflies from their features, for use in whitefly monitoring, identification, and counting applications. A high-magnification CCD camera captures images on demand, and the functions in the VI libraries of LabVIEW NI-DAQ and the LabVIEW NI Vision Development Module are used to develop the image recognition functions. The grayscale-value pyramid-matching algorithm is used for image conversion and recognition in the machine learning mode. The resulting graphical user interface and device hardware provide convenient and effective whitefly feature identification and sample counting. This monitoring technology supports remote monitoring, counting, data storage, and statistical analysis.
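Grayscale pyramid matching is a coarse-to-fine template search: the template is first located in a downsampled copy of the image, and the match is then refined at full resolution near the coarse estimate. The sketch below is a generic two-level version using normalized cross-correlation (NCC). It is not NI Vision's implementation, and the function names, the 2×2 averaging pyramid, and the search radius are assumptions. Counting would repeat the search for every location whose score exceeds a chosen threshold.

```python
import math

def ncc(img, tpl, r, c):
    """Normalized cross-correlation of template `tpl` with `img` at top-left (r, c)."""
    th, tw = len(tpl), len(tpl[0])
    a = [img[r + i][c + j] for i in range(th) for j in range(tw)]
    b = [v for row in tpl for v in row]
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    da = [x - ma for x in a]
    db = [y - mb for y in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return sum(x * y for x, y in zip(da, db)) / denom if denom else 0.0

def downsample(img):
    """One pyramid level: halve each dimension by averaging 2 x 2 blocks."""
    return [[(img[2 * i][2 * j] + img[2 * i][2 * j + 1]
              + img[2 * i + 1][2 * j] + img[2 * i + 1][2 * j + 1]) / 4
             for j in range(len(img[0]) // 2)]
            for i in range(len(img) // 2)]

def best_match(img, tpl, candidates):
    """Candidate top-left corner with the highest NCC score."""
    return max(candidates, key=lambda rc: ncc(img, tpl, rc[0], rc[1]))

def pyramid_match(img, tpl, radius=2):
    """Two-level coarse-to-fine search; returns (row, col) of the best match."""
    small, small_tpl = downsample(img), downsample(tpl)
    # Exhaustive search is cheap at the coarse level (4x fewer pixels).
    coarse = best_match(small, small_tpl, [
        (r, c)
        for r in range(len(small) - len(small_tpl) + 1)
        for c in range(len(small[0]) - len(small_tpl[0]) + 1)])
    # Map back to full resolution and refine in a small window only.
    r0, c0 = 2 * coarse[0], 2 * coarse[1]
    max_r, max_c = len(img) - len(tpl), len(img[0]) - len(tpl[0])
    fine = [(r, c)
            for r in range(max(0, r0 - radius), min(max_r, r0 + radius) + 1)
            for c in range(max(0, c0 - radius), min(max_c, c0 + radius) + 1)]
    return best_match(img, tpl, fine)
```

NCC is used because it is insensitive to uniform brightness and contrast changes, which matters when lighting varies between captures.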

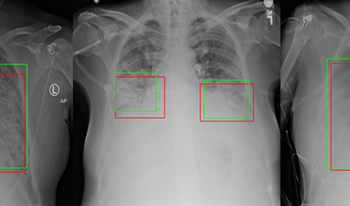

Standard convolutional neural networks cannot automatically adjust their receptive fields when detecting pneumonia lesion regions. This study therefore proposes a pneumonia detection algorithm with automatic receptive field adjustment. The algorithm is a modified RetinaNet in which selective kernel convolution is incorporated into the ResNet feature extraction network. The resulting SK-ResNet automatically adjusts the size of its receptive field, so the network can generate prediction boxes whose sizes correspond to those of the targets. In addition, the authors aggregated the detection results obtained with SK-ResNet50 and SK-ResNet152 as the feature extraction network to further improve average precision (AP). On a data set provided by the Radiological Society of North America, the proposed algorithm with SK-ResNet50 as the feature extraction network achieved an AP50 1.5% higher than that of RetinaNet, while the number of images processed per second differed by only 0.45, indicating that AP increased while detection speed was maintained. When the detection results obtained with SK-ResNet50 and SK-ResNet152 were combined, AP50 increased by 3.3% compared with RetinaNet. The experimental results show that the proposed algorithm effectively adjusts the receptive field size to the size of the target and increases AP with minimal loss of speed.
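Selective kernel convolution lets each channel decide how much to weight branches that have different receptive fields. Below is a minimal sketch of the fuse-and-select step. It assumes the branch outputs (for example, from a 3×3 convolution and a dilated 3×3 convolution) are already computed, uses plain weight matrices as stand-ins for learned fully connected layers, and omits batch normalization; all names are hypothetical.

```python
import math

def sk_fuse_select(branches, w_reduce, w_branch):
    """Fuse-and-select step of a selective kernel unit.

    branches : M feature maps, each C x H x W nested lists, from convolutions
               with different receptive fields.
    w_reduce : d x C weights (stand-in for a learned FC layer) mapping s to z.
    w_branch : M matrices, each C x d, giving per-channel logits for each branch.
    Returns V with V[c] = sum_m a_m[c] * branches[m][c], where a[.][c] is a
    softmax over the M branches.
    """
    m_count, c_count = len(branches), len(branches[0])
    h, w = len(branches[0][0]), len(branches[0][0][0])
    # Fuse: sum branches element-wise, then global-average-pool each channel.
    s = [sum(b[c][i][j] for b in branches for i in range(h) for j in range(w)) / (h * w)
         for c in range(c_count)]
    # Compact descriptor z = ReLU(w_reduce @ s).
    z = [max(0.0, sum(wt * x for wt, x in zip(row, s))) for row in w_reduce]
    # Select: one logit per (branch, channel), softmax across branches.
    logits = [[sum(wt * x for wt, x in zip(wm[c], z)) for c in range(c_count)]
              for wm in w_branch]
    out = []
    for c in range(c_count):
        peak = max(logits[m][c] for m in range(m_count))  # for numerical stability
        e = [math.exp(logits[m][c] - peak) for m in range(m_count)]
        a = [v / sum(e) for v in e]
        out.append([[sum(a[m] * branches[m][c][i][j] for m in range(m_count))
                     for j in range(w)] for i in range(h)])
    return out
```

Because the attention weights depend on globally pooled statistics of the input, the effective receptive field shifts toward whichever branch better matches the lesion's scale, which is the behavior the abstract attributes to SK-ResNet.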